Just noticed that such noisy pixel borders also exist in the released keras examples, e.g.,

https://keras.io/examples/generative/finetune_stable_diffusion/


I have been trying very hard to figure out this bug... it is super tricky. I'll do some work on it now.

Thank you very much. Yes, I also noticed that the same set of models sometimes does generate outputs without noisy borders.

Regarding my question about step #3, I can answer it myself: yes, we need it, but some code in step #2 has to change, because step #2 is not the correct way to get an image's latent embedding.

In short, I misunderstood the VQVAE model's architecture. The VQVAE's output should not be used directly as the input to the VQVAE decoder, because it is actually a noisy version of the embedding. The right way to get the VQVAE embedding is not intuitive for someone with little domain knowledge about VQVAEs, and it only appears in some tutorials' source code (where it is easy to miss).
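To illustrate why a sampled encoder output is "noisy", here is a minimal NumPy sketch of the standard Gaussian-VAE sampling step, z = mean + exp(0.5 · logvar) · ε. It assumes (not confirmed from the keras-cv source) that the encoder's 2nd-to-last layer emits 8 channels, 4 for the mean and 4 for the log-variance, and that the final layer draws a sample from them. Random arrays stand in for real encoder features, and `split_moments` / `sample_latent` are hypothetical helper names.

```python
import numpy as np

def split_moments(encoder_features):
    """Split an (H, W, 2C) channels-last feature map into mean and log-variance halves."""
    mean, logvar = np.split(encoder_features, 2, axis=-1)
    return mean, logvar

def sample_latent(mean, logvar, rng):
    """Reparameterized sample: z = mean + exp(0.5 * logvar) * eps, eps ~ N(0, I)."""
    eps = rng.standard_normal(mean.shape)
    return mean + np.exp(0.5 * logvar) * eps

rng = np.random.default_rng(0)
# Stand-in for the (assumed) 8-channel output of the encoder's 2nd-to-last layer.
features = rng.standard_normal((64, 64, 8))
mean, logvar = split_moments(features)

# Two samples drawn from the same image features differ from each other...
z1 = sample_latent(mean, logvar, rng)
z2 = sample_latent(mean, logvar, rng)
print(np.abs(z1 - z2).max() > 0)  # True
# ...whereas `mean` is deterministic for a given input.
```

Under these assumptions, only `mean` is stable from call to call, which is why it is the natural choice to hand to the decoder.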

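The right way to get an image's latent embedding is:

```python
import tensorflow as tf

# `image_encoder` (the Stable Diffusion image encoder) and `image` (a preprocessed
# batch of images) are assumed to be defined earlier, e.g. in the colab notebook.

# Build a new model that outputs the 2nd-to-last layer of the original VQVAE image encoder
vae = tf.keras.Model(
    image_encoder.input,
    image_encoder.layers[-2].output,
)

# Get the output of that 2nd-to-last layer (note: call `vae`, not `image_encoder`)
outputs = vae(image)

# Split it into two halves along the channel axis
mean, logvar = tf.split(outputs, 2, axis=-1)

# Use `mean` as the latent for the VQVAE decoder and ignore `logvar` (the log-variance of the noise)
```

Hey @rex-yue-wu, I figured it out! I opened a PR with the fix in #1693.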

Hey folks,

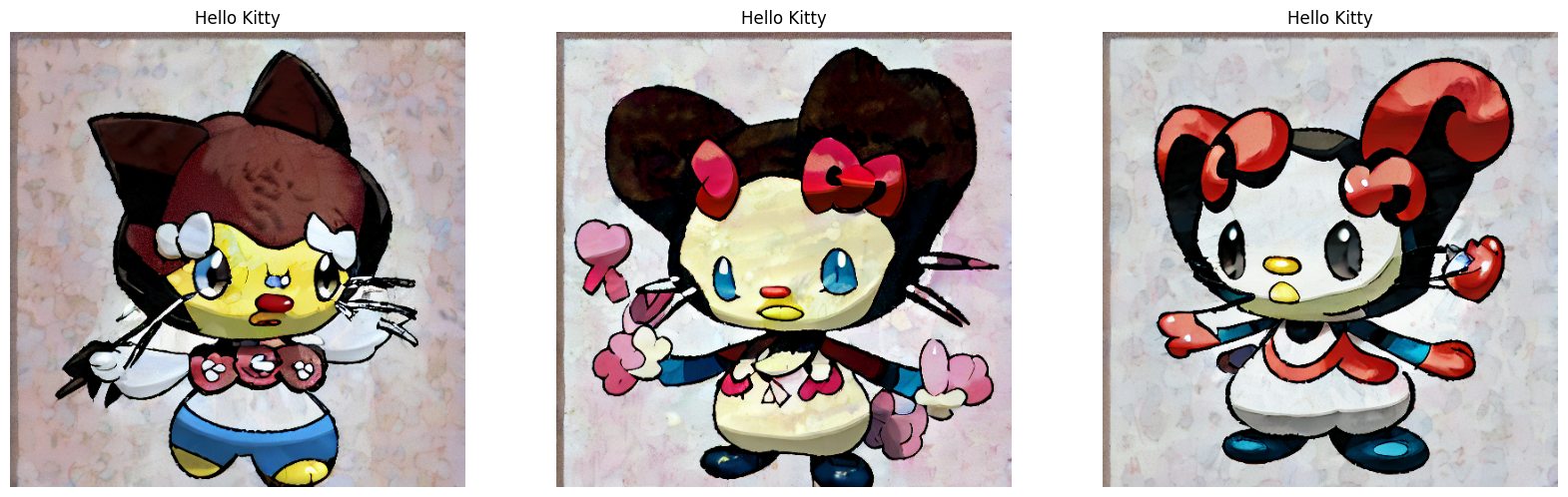

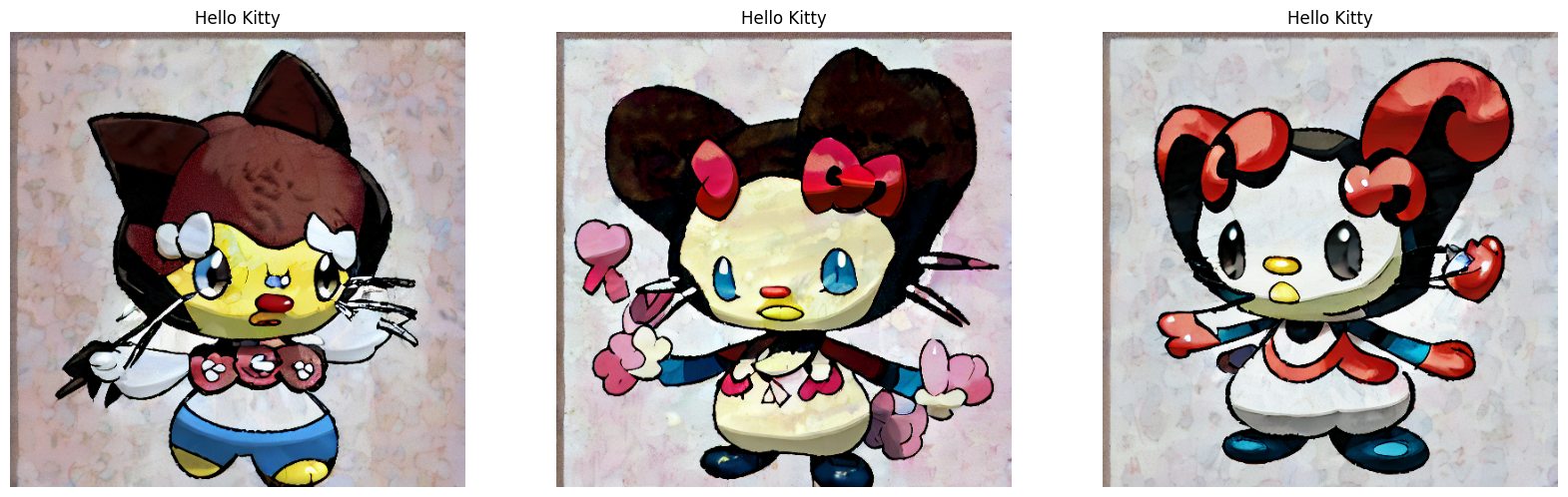

I encountered something strange in keras-cv-0.4.2. Long story short, I believe something is wrong with the provided Stable Diffusion model's VQVAE image encoder/decoder or the related pre-/post-processing: the reconstructed image doesn't match the original (the reconstruction has random noise in the first 8 rows and the first 8 columns). Below is the code block that I used to generate the provided image, and a colab notebook can be found here.
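One way to quantify the symptom described above is to compare the reconstruction error inside the first 8 rows and columns with the error over the rest of the image. The helper `border_vs_interior_error` below is hypothetical (it is not part of keras-cv), and the synthetic image with injected border noise stands in for a real original/reconstruction pair.

```python
import numpy as np

def border_vs_interior_error(original, reconstructed, border=8):
    """Return (border MAE, interior MAE) for two (H, W, C) arrays on the same value scale.

    The "border" region is the first `border` rows plus the first `border` columns.
    """
    err = np.abs(original.astype(np.float64) - reconstructed.astype(np.float64))
    mask = np.zeros(err.shape[:2], dtype=bool)
    mask[:border, :] = True  # first `border` rows
    mask[:, :border] = True  # first `border` columns
    return err[mask].mean(), err[~mask].mean()

# Synthetic example: corrupt only the first 8 rows and 8 columns of a copy.
rng = np.random.default_rng(0)
img = rng.uniform(0, 255, size=(512, 512, 3))
recon = img.copy()
recon[:8, :] += rng.normal(0, 50, size=recon[:8, :].shape)
recon[:, :8] += rng.normal(0, 50, size=recon[:, :8].shape)

border_err, interior_err = border_vs_interior_error(img, recon)
print(border_err, interior_err)
```

On a real reconstruction neither number will be zero, but a border error far above the interior error would point to the same artifact.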

In addition, I would appreciate it if anyone could explain why step #3 is not needed, i.e., why we don't need to multiply the latents by the magic number 0.18215 before feeding them into the decoder, whose input is supposed to be a latent in the range (-1, 1). I manually checked and confirmed that the provided decoder has a rescaling layer with a scale parameter of 5.4899, which is the reciprocal of 0.18215.
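The arithmetic behind the step #3 question can be sketched as follows, assuming (as reported above) that the decoder begins with a rescaling layer whose factor is 1/0.18215 ≈ 5.48998. The two functions are hypothetical stand-ins for the real encoder-side scaling and the decoder's first layer, not keras-cv code.

```python
import numpy as np

SCALE = 0.18215  # latent scaling factor quoted in the post

def to_diffusion_latent(mean):
    """Step #3 (hypothetical stand-in): scale the encoder mean into the diffusion latent range."""
    return mean * SCALE

def decoder_input_rescale(latent):
    """Mimic a leading rescaling layer in the decoder with factor 1 / SCALE."""
    return latent * (1.0 / SCALE)

print(round(1.0 / SCALE, 4))  # 5.49

rng = np.random.default_rng(0)
mean = rng.standard_normal((64, 64, 4))  # stand-in for the encoder mean

# With step #3, the decoder's rescaling undoes the scaling (up to float error).
round_trip = decoder_input_rescale(to_diffusion_latent(mean))

# Without step #3, the decoder's first layer sees values about 5.49x larger than intended.
skipped = decoder_input_rescale(mean)
```

Under this assumption, the multiply-by-0.18215 and the decoder's multiply-by-5.49 form a round trip, so skipping step #3 leaves the decoder working on latents that are too large by the reciprocal factor. This is consistent with the answer in this thread that step #3 is in fact needed.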