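It's not really a cycle, because you aren't running it infinitely: the loop has a fixed depth, so it unrolls into an ordinary acyclic graph. Also, what kind of merge? If you concatenate and carry the concatenated tensor forward, its dimension grows with every iteration. That means you can't reuse the same Dense layer, because it expects a fixed input dimension. Sum or another shape-preserving merge would be fine.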
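Here is a toy NumPy sketch of the shape argument. It assumes the merged tensor itself is what gets carried into the next iteration. It only tracks widths and leaves the Dense layer out. `merged_widths`, `concat` and `add` are hypothetical helpers, not Keras functions.

```python
import numpy as np

def merged_widths(merge, depth, width=4):
    """Width of the merged tensor at each iteration when the merged
    result is carried forward into the next iteration (toy example)."""
    x = np.ones(width)       # stand-in for the input i
    state = np.zeros(width)  # initial state
    widths = []
    for _ in range(depth):
        state = merge(x, state)  # carry the merged tensor forward
        widths.append(state.shape[0])
    return widths

def concat(a, b):
    return np.concatenate([a, b])

def add(a, b):
    return a + b

print(merged_widths(concat, 3))  # [8, 12, 16]: grows every iteration
print(merged_widths(add, 3))     # [4, 4, 4]: stays fixed
```

A layer with a fixed input width could only accept the second sequence, which is why a sum-style merge lets one Dense instance be reused.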

The first time through the loop there is nothing to merge yet, so initialize the state to the identity of your merge: zeros for a sum, ones for a multiply.
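This small NumPy check shows why the initial value depends on the merge type. The identity element makes the first merge pass the input through unchanged. The values in `x` are arbitrary example data.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])  # stand-in for the input on the first iteration

zeros = np.zeros_like(x)  # identity element for a sum merge
ones = np.ones_like(x)    # identity element for a multiply merge

first_sum = x + zeros  # sum merge with zero init: input passes through
first_mul = x * ones   # multiply merge with ones init: input passes through
wrong = x + ones       # ones under a sum shifts every value by 1

print(first_sum, first_mul, wrong)  # [1. 2. 3.] [1. 2. 3.] [2. 3. 4.]
```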

If you want to be fancy, you could use `scan` and related backend functions, but simply defining the model in a Python loop is fine.
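To make the `scan` remark concrete, here is a NumPy sketch of the same recurrence computed two ways. The first is a plain unrolled loop. The second is a scan-style fold that also keeps every intermediate state. This does not use the `theano.scan` or `tf.scan` API. `step`, `scan_states`, `W` and `b` are hypothetical names. `W` and `b` play the role of one shared Dense layer.

```python
import numpy as np

rng = np.random.default_rng(0)
width, depth = 4, 3
W = rng.normal(size=(width, width))  # one shared weight matrix (plays the role of a single Dense instance)
b = rng.normal(size=width)           # shared bias
x = rng.normal(size=width)           # the fixed input i, fed in at every iteration

def step(o, _):
    """One iteration: sum-merge the input with the previous output, then apply the shared layer."""
    return W @ (x + o) + b

def scan_states(fn, init, n):
    """Scan-style fold: apply fn n times, keeping every intermediate state."""
    states, o = [], init
    for t in range(n):
        o = fn(o, t)
        states.append(o)
    return np.stack(states)

# Plain loop: what "defining the model in a loop" unrolls to.
o = np.zeros(width)
for _ in range(depth):
    o = step(o, None)

states = scan_states(step, np.zeros(width), depth)
print(states.shape)                # (3, 4): one state per iteration
print(np.allclose(states[-1], o))  # True: last scan state equals the loop output
```

Both versions compute the same final output. The scan form also returns the intermediate states, which is useful if you need the output of every step.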

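```python
from keras.layers import Input, Dense, Add, Lambda
from keras.models import Model

depth = 3                          # number of unrolled iterations (example value)
i = Input(shape=(512,))            # assumed width: must match Dense(512) output for the sum merge
o = Lambda(lambda t: t * 0.0)(i)   # zeros with the same shape as i (identity for a sum merge)
d = Dense(512)                     # one layer instance, so its weights are shared across iterations
for _ in range(depth):
    m = Add()([i, o])              # Keras 1.x equivalent: merge([i, o], mode='sum')
    o = d(m)
model = Model(i, o)
```

Cheers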

Now if we create a layer called

This should be easy, shouldn't it?

[x] Check that you are up-to-date with the master branch of Keras. You can update with: `pip install git+git://github.com/fchollet/keras.git --upgrade --no-deps`

[x] If running on TensorFlow, check that you are up-to-date with the latest version. The installation instructions can be found here.

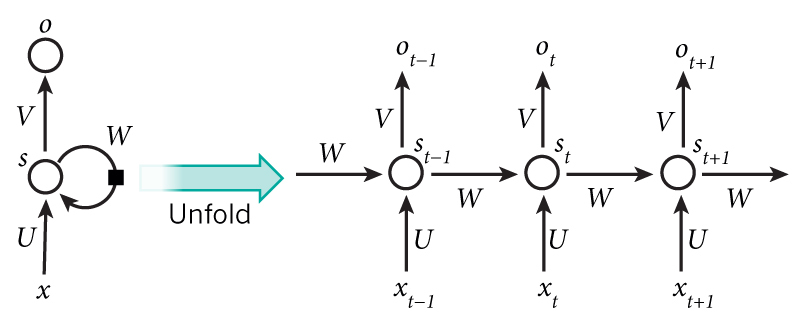

Hi, I was wondering whether a model of the following kind is possible in Keras. I am trying to implement a recurrent version of a conditional variational autoencoder and would like to try several different models, and in one of them this cyclic structure arises. I simplified the whole model, but this is the part I can't solve. In short: can we have a cyclic graph in Keras? It looks like cycles are possible in TensorFlow and Theano, e.g. via `tf.while_loop`. What are your thoughts?