
gawsoftpl closed this issue 2 years ago.

Do you see any errors in the KServe logs? It's hard to tell which layer could have the issue.

The error is resolved. It happened because, under high load, I rolled out a new revision and immediately shifted 100% of the traffic to it. During that switch the server hit a bottleneck. When I changed the deployment to a canary rollout in 10% steps, everything worked fine.
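A gradual rollout like the one described above can be sketched as follows. This is a minimal sketch that assumes the rollout is driven through the `canaryTrafficPercent` field of a KServe v1beta1 `InferenceService` predictor. The helper names `canary_schedule` and `canary_patch` are hypothetical. The code builds the list of traffic percentages for a 10% step rollout and a merge-patch body for each step.

```python
import json


def canary_schedule(step: int = 10) -> list[int]:
    """Traffic percentages for a gradual rollout, e.g. 10, 20, ..., 100."""
    if not 0 < step <= 100:
        raise ValueError("step must be between 1 and 100")
    percents = list(range(step, 100, step))
    percents.append(100)  # always finish with the full cut-over
    return percents


def canary_patch(percent: int) -> dict:
    """Merge-patch body setting the predictor's canaryTrafficPercent (assumed KServe v1beta1 layout)."""
    return {"spec": {"predictor": {"canaryTrafficPercent": percent}}}


if __name__ == "__main__":
    for percent in canary_schedule(10):
        # Each printed body could be applied with:
        #   kubectl patch inferenceservice <name> --type merge -p '<body>'
        print(json.dumps(canary_patch(percent)))
```

In practice, the first patch would normally accompany the model change that creates the new revision. Before raising the percentage, you would wait for the new revision's pods to become ready. KServe's documentation also describes promoting the canary by removing the field instead of setting it to 100.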

Thanks for the update. I'll close the issue for now; please feel free to reopen it if you see the problem again.

/close

@nader-ziada: Closing this issue.

/kind bug

When I create a new revision of a model under high load (200 requests per second), I receive a lot of responses with HTTP status code 0.

After the new revisions are installed, only 50% of the pods receive inference traffic; the remaining 50% receive none.

I don't know whether this is a Knative or an Istio error.

If I delete all models, wait for all pods to be deleted, and then create the models from scratch, everything works fine.

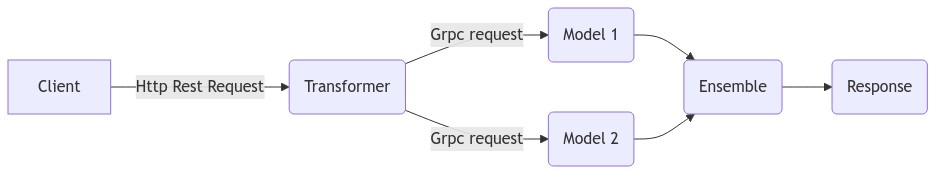

Model architecture

The error occurs when I create a new revision of model 1 or model 2: the transformer sends its gRPC requests to only some of the pods of the new model version.
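The imbalance described above can be quantified with a small check. This is a minimal sketch that assumes you already have per-pod request counts for the new revision, however they were collected (for example, from access logs or a Prometheus query). The helpers `traffic_shares` and `idle_pods` are hypothetical. They compute each pod's share of the traffic and list the pods that received none.

```python
def traffic_shares(requests_per_pod: dict[str, int]) -> dict[str, float]:
    """Fraction of the total request count served by each pod."""
    total = sum(requests_per_pod.values())
    if total == 0:
        # No traffic at all: every pod has a zero share.
        return {pod: 0.0 for pod in requests_per_pod}
    return {pod: count / total for pod, count in requests_per_pod.items()}


def idle_pods(requests_per_pod: dict[str, int]) -> list[str]:
    """Names of pods that served no requests, sorted alphabetically."""
    return sorted(pod for pod, count in requests_per_pod.items() if count == 0)


if __name__ == "__main__":
    # Hypothetical counts illustrating the "50% of pods get no traffic" symptom.
    counts = {"model1-a": 600, "model1-b": 600, "model1-c": 0, "model1-d": 0}
    print(traffic_shares(counts))
    print(idle_pods(counts))
```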

Error in Istio:

Environment:

- Kubernetes version (`kubectl version`): K3S v1.24.2
- OS (`/etc/os-release`): Ubuntu 22