I really love this idea.

So, to put this in my own words: a program asks kitty for the height and width of a character cell, chops the image it wants to display into cell-sized pieces, and sends them to kitty piece by piece. Kitty then draws these "pixel characters" at the current cursor position.

Assuming I got that right, the first issue that comes to mind is the drawing at the cursor position. Some applications, like ranger, show image previews like this, so being able to set the position to render at could be useful.

But I have no clue how this ncurses shell rendering works, so maybe it's not an issue.

Next thought: define an extensible header that follows the escape code, something like this: \<ESC>r x=100,y=100,format=ARGB\n
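To make the header idea a bit more concrete, here is a minimal sketch of how a terminal might parse it. This assumes the header is exactly `<ESC>r`, a space, comma-separated `key=value` pairs, and a terminating newline; the function name `parse_header` is made up for illustration and is not part of any existing protocol.

```python
ESC = "\x1b"
PREFIX = ESC + "r "  # assumed introducer: ESC, 'r', then a space


def parse_header(data: str) -> dict:
    """Parse a hypothetical '<ESC>r key=value,key=value\\n' header."""
    if not data.startswith(PREFIX):
        raise ValueError("not a raster header")
    line, newline, _rest = data.partition("\n")
    if not newline:
        raise ValueError("header is not terminated by a newline")
    fields = {}
    for pair in line[len(PREFIX):].split(","):
        key, eq, value = pair.partition("=")
        if not eq:
            raise ValueError(f"malformed field: {pair!r}")
        # Unknown keys are kept as-is, which is what makes the header extensible.
        fields[key.strip()] = value.strip()
    return fields


header = parse_header("\x1br x=100,y=100,format=ARGB\n")
print(header)
```

Because unknown keys are simply stored, a newer program could send extra fields and an older terminal could ignore the ones it does not understand.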

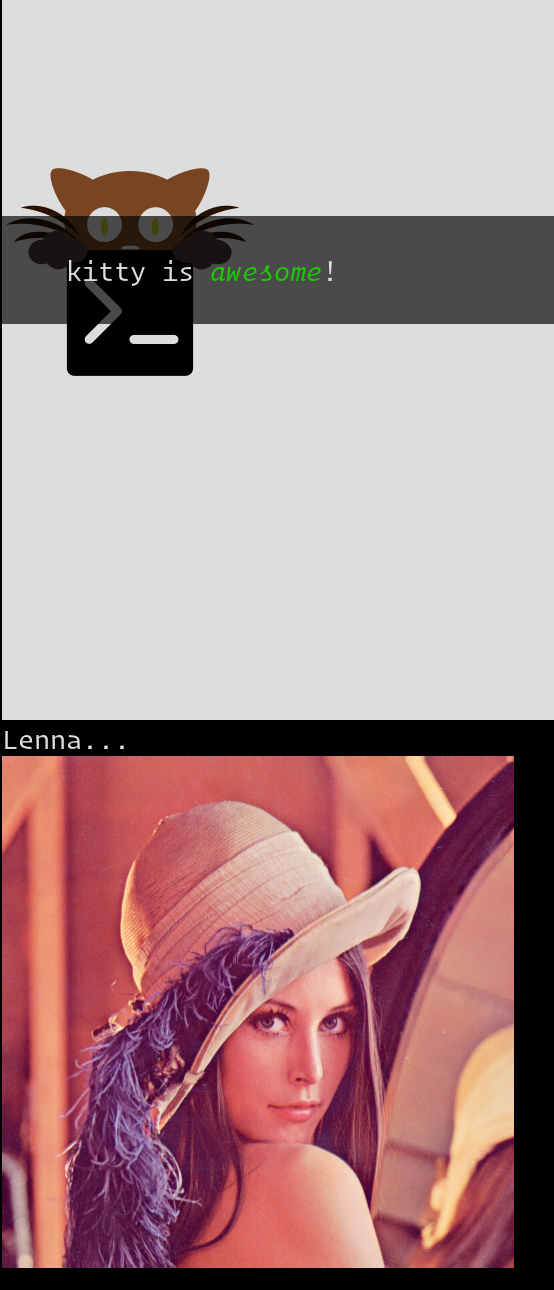

Thoughts on implementing support for raster graphics in kitty (and terminals more generally). Out of curiosity, I spent some time looking into the existing imaging solutions in terminals. I found:

1) https://github.com/saitoha/libsixel (seems the most advanced, but is fundamentally limited by backwards compatibility)

2) https://www.iterm2.com/documentation-images.html (pretty basic, only supports displaying image files)

3) https://git.enlightenment.org/apps/terminology.git/tree/README (seems to be file based so useless over ssh)

4) https://github.com/withoutboats/notty (seems largely similar to (2))

My question is, why are we limiting ourselves to this image file display paradigm? Why not allow programs to render arbitrary pixel data in the terminal? The way I envision this working is:

1) An escape code that allows programs running in the terminal to query the terminal for the current character cell size in pixels (this is similar to how querying for cursor position works)

2) An escape code that allows the program running in the terminal to specify arbitrary pixel data to render at the current cursor position (in a single cell; think of it as sending a "graphical character" instead of a text character). The pixel data can be binary for maximum efficiency (taking care to escape the C0 control codes for maximum robustness).
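As a rough illustration of what "escaping the C0 control codes" could look like, here is a byte-stuffing sketch. The scheme is purely an assumption for the sake of the example: the marker byte `0x7D` and the XOR with `0x40` are arbitrary choices, not something any terminal currently implements.

```python
ESCAPE_BYTE = 0x7D  # hypothetical marker byte ('}'), chosen arbitrarily


def escape_c0(payload: bytes) -> bytes:
    """Replace every C0 byte (0x00-0x1F) and the marker itself with
    marker + (byte XOR 0x40), so no C0 control code appears in the output."""
    out = bytearray()
    for b in payload:
        if b < 0x20 or b == ESCAPE_BYTE:
            out.append(ESCAPE_BYTE)
            out.append(b ^ 0x40)
        else:
            out.append(b)
    return bytes(out)


def unescape_c0(data: bytes) -> bytes:
    """Invert escape_c0."""
    out = bytearray()
    it = iter(data)
    for b in it:
        if b == ESCAPE_BYTE:
            nxt = next(it, None)
            if nxt is None:
                raise ValueError("truncated escape sequence")
            out.append(nxt ^ 0x40)
        else:
            out.append(b)
    return bytes(out)


pixels = bytes([0x00, 0x1B, 0x41, 0x7D, 0xFF])
encoded = escape_c0(pixels)
assert unescape_c0(encoded) == pixels
```

The cost is one extra byte for each escaped value, so the overhead depends on how many low-valued bytes the pixel data contains.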

With these two primitives, programs will be able to draw arbitrary graphics (including image files) in terminals. This, to me, seems like a more general, and powerful, abstraction to build rather than just the ability to send image files in a few formats.
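To show how an image could be built from these two primitives, here is a small sketch of the client side: once the cell size is known, the program chops its pixel buffer into cell-sized tiles, one per character cell. The function name `split_into_cells` and the padding behaviour at the right and bottom edges are my own assumptions.

```python
def split_into_cells(pixels, cell_w, cell_h, pad=0):
    """Split a 2D pixel buffer (a list of rows) into cell-sized tiles.

    Returns a dict mapping (cell_row, cell_col) to a tile of cell_h rows
    by cell_w pixels. Tiles that overhang the image are filled with `pad`.
    """
    height = len(pixels)
    width = len(pixels[0]) if pixels else 0
    rows = -(-height // cell_h)  # ceiling division
    cols = -(-width // cell_w)
    tiles = {}
    for r in range(rows):
        for c in range(cols):
            tile = []
            for y in range(r * cell_h, (r + 1) * cell_h):
                row = []
                for x in range(c * cell_w, (c + 1) * cell_w):
                    inside = y < height and x < width
                    row.append(pixels[y][x] if inside else pad)
                tile.append(row)
            tiles[(r, c)] = tile
    return tiles


# A 3-row by 5-column image, split for a 2x2 pixel cell.
image = [[y * 10 + x for x in range(5)] for y in range(3)]
tiles = split_into_cells(image, cell_w=2, cell_h=2)
print(len(tiles), tiles[(0, 0)])
```

Each tile would then be sent with the second escape code while the program moves the cursor from cell to cell.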

I am considering building this into kitty, so I thought that before I do so, it would be good to get some more opinions on the subject. Maybe get a little consensus going. Note that once this is built, it is easy to support displaying image files on top of it, if needed.

A specification for this protocol is here: https://github.com/kovidgoyal/kitty/blob/gr/graphics-protocol.asciidoc

Progress on implementing the specification: