I'm having the same issue. I also tried removing the Network ARGS as suggested on another issue. It still hangs at waiting for control plane to be ready.


Did you reload the daemon and restart the kubelet service after making the changes? It worked for me after changing the driver and commenting out the network args. The control plane takes 10 to 11 minutes to become ready the first time for me, so I would suggest giving it 15 minutes on the first run.
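While waiting, it can help to watch whether the API server ever starts accepting connections instead of relying on the `kubeadm init` output alone. A minimal sketch, assuming the API server listens on the default port 6443 on the master and that the script runs under bash (it relies on bash's `/dev/tcp` redirection and on coreutils `timeout`). `wait_for_port` is a hypothetical helper, not part of kubeadm:

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT LIMIT_SECONDS
# Returns 0 as soon as a TCP connection to HOST:PORT succeeds,
# 1 if LIMIT_SECONDS elapse first.
wait_for_port() {
  local host=$1 port=$2 limit=$3
  local deadline=$(( SECONDS + limit ))
  while (( SECONDS < deadline )); do
    # bash turns /dev/tcp/HOST/PORT into a TCP connect; `timeout 2` keeps a
    # silently dropped SYN from stalling the loop.
    if timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example, `wait_for_port 127.0.0.1 6443 900` waits up to the suggested 15 minutes. If every attempt is refused, that usually means nothing is listening on the port yet, which matches the kubelet errors quoted later in this thread.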

I did reload the daemon and restart the kubelet service every single time. I have even left the setup undisturbed for an entire night, but it was still waiting for the control plane.

I've reloaded the daemon (`systemctl daemon-reload`) and restarted kubelet as well. I run `kubeadm reset`, edit the service configuration, reload the daemon, then run `kubeadm init`.

The apiserver and etcd Docker containers fail to run after commenting out the networking options. I also tried installing weave-net manually so the CNI config directory would be populated, but that didn't work either. To do this I installed weave and ran `weave setup` and `weave launch`. I don't really know how kubeadm configures Docker to use the CNI settings, but there may be a step I'm missing here.

Looks like kubelet cannot reach kube api server.

I noticed that etcd wasn't able to listen on port 2380. I followed these steps again and my cluster started up:

1. `kubeadm reset` to remove any changes made to the server.

2. kubelet doesn't run.

3. `weave setup` and `weave launch`.

4. `kubeadm init`.

If you want to get rid of managing weave by hand...

`weave reset`

`kubeadm join` should work on other servers.
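The master-side order of operations above, written as one script. A sketch only: it assumes the `weave` script is already on the PATH (installed as shown further down this thread), it leaves out the kubelet step that the list above does not spell out, and it uses a hypothetical `run` helper that only prints each command when `DRY_RUN=1`, so the sequence can be reviewed before anything is reset:

```shell
#!/usr/bin/env bash
# run CMD...: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@" || { echo "failed: $*" >&2; return 1; }
  fi
}

# Master-only sequence from the steps above (hypothetical function name).
bootstrap_master_with_host_weave() {
  run kubeadm reset || return 1  # undo the previous init attempt
  run weave setup   || return 1  # pull the weave images, write the CNI config
  run weave launch  || return 1  # start the weave containers on this host
  run kubeadm init  || return 1
}
```

Run `DRY_RUN=1 bootstrap_master_with_host_weave` first to check the order, then run it again without `DRY_RUN` as root.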

@Yengas Can you please provide more details on the weave steps? Did you run them on all nodes, or just the master?

@jruels just the master node. Weave is just a single binary. The setup command without any args, downloads weave docker images and creates the CNI config. The launch command without any args starts the weave containers on host only.
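Once `weave setup` has run, the kubelet warning `Unable to update cni config: No networks found in /etc/cni/net.d` (quoted later in this thread) should go away. A quick way to check is to see whether that directory now holds a network config. A minimal sketch: `has_cni_config` is a hypothetical helper, and the accepted extensions (`.conf`, `.conflist`, `.json`) are an assumption based on what the CNI library looks for, so adjust them if your kubelet version differs:

```shell
#!/usr/bin/env bash
# has_cni_config [DIR]: succeed if DIR (default /etc/cni/net.d) contains at
# least one CNI network config file.
has_cni_config() {
  local dir=${1:-/etc/cni/net.d} f
  for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
    # Unmatched globs stay literal, so check that the file really exists.
    [[ -f $f ]] && return 0
  done
  return 1
}
```

If `has_cni_config` fails after `weave setup`, the kubelet will keep reporting that no networks were found.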

@Yengas I'm still not sure what you mean by "get and install weave. Don't run it". Obviously I cannot do `kubectl apply -f https://git.io/weave-kube-1.6`, so how do I install weave?

What do the apiserver logs say?

@rushabh268

To install weave, run the following on the master:

sudo curl -L git.io/weave -o /usr/local/bin/weave && sudo chmod a+x /usr/local/bin/weave

Then run

weave setup

When that completes run

weave launch

You don't need to do that: `kubectl apply -f https://git.io/weave-kube-1.6` should be sufficient.

The API server logs say exactly the same as I mentioned in the bug. Also, I cannot use kubectl because Kubernetes is not installed.

@jruels I'll try it out and update this thread!

In the bug description there are kubeadm logs and kubelet logs. There are no apiserver logs.

@mikedanese How do I get the apiserver logs?

@jruels I am able to bring up weave

@Yengas Even after following your steps, I see the following error in the kubelet logs:

Apr 06 12:55:57 hostname kubelet[5174]: E0406 12:55:57.556067 5174 reflector.go:190] k8s.io/kubernetes/pkg/kubelet/kubelet.go:382: Failed to list *v1.Service: Get https://10.X.X.X:6443/api/v1/services?resourceVersion=0: dial tcp 10.X.X.X:6443: getsockopt: connection refused

Apr 06 12:55:57 hostname kubelet[5174]: E0406 12:55:57.557441 5174 reflector.go:190] k8s.io/kubernetes/pkg/kubelet/kubelet.go:390: Failed to list *v1.Node: Get https://10.X.X.X:6443/api/v1/nodes?fieldSelector=metadata.name%3Dhostname&resourceVersion=0: dial tcp 10.X.X.X:6443: getsockopt: connection refused

Apr 06 12:55:57 hostname kubelet[5174]: E0406 12:55:57.558822 5174 reflector.go:190] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to list *v1.Pod: Get https://10.X.X.X:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dhostname&resourceVersion=0: dial tcp 10.X.X.X:6443: getsockopt: connection refused

Apr 06 12:55:58 hostname kubelet[5174]: I0406 12:55:58.347460 5174 kubelet_node_status.go:230] Setting node annotation to enable volume controller attach/detach

Apr 06 12:55:58 hostname kubelet[5174]: I0406 12:55:58.405762 5174 kubelet_node_status.go:77] Attempting to register node hostname1

Also, I have stopped the firewall, so I'm not sure why I am getting connection refused.

Apr 12 02:10:00 localhost audit: SERVICE_START pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=kubelet comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

Apr 12 02:10:00 localhost audit: SERVICE_STOP pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=kubelet comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

Apr 12 02:10:00 localhost audit: SERVICE_START pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=kubelet comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

Apr 12 02:10:00 localhost systemd: kubelet.service: Service hold-off time over, scheduling restart.

Apr 12 02:10:00 localhost systemd: Stopped kubelet: The Kubernetes Node Agent.

Apr 12 02:10:00 localhost systemd: Started kubelet: The Kubernetes Node Agent.

Apr 12 02:10:00 localhost systemd: Starting system activity accounting tool...

Apr 12 02:10:00 localhost audit: SERVICE_START pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=sysstat-collect comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

Apr 12 02:10:00 localhost audit: SERVICE_STOP pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=sysstat-collect comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

Apr 12 02:10:00 localhost systemd: Started system activity accounting tool.

Apr 12 02:10:00 localhost kubelet: I0412 02:10:00.924529 3445 feature_gate.go:144] feature gates: map[]

Apr 12 02:10:00 localhost kubelet: I0412 02:10:00.928973 3445 docker.go:364] Connecting to docker on unix:///var/run/docker.sock

Apr 12 02:10:00 localhost kubelet: I0412 02:10:00.929201 3445 docker.go:384] Start docker client with request timeout=2m0s

Apr 12 02:10:00 localhost kubelet: W0412 02:10:00.941088 3445 cni.go:157] Unable to update cni config: No networks found in /etc/cni/net.d

Apr 12 02:10:00 localhost kubelet: I0412 02:10:00.948892 3445 manager.go:143] cAdvisor running in container: "/system.slice"

Apr 12 02:10:00 localhost kubelet: W0412 02:10:00.974540 3445 manager.go:151] unable to connect to Rkt api service: rkt: cannot tcp Dial rkt api service: dial tcp [::1]:15441: getsockopt: connection refused

Apr 12 02:10:00 localhost kubelet: I0412 02:10:00.997599 3445 fs.go:117] Filesystem partitions: map[/dev/root:{mountpoint:/var/lib/docker/devicemapper major:8 minor:0 fsType:ext4 blockSize:0}]

Apr 12 02:10:01 localhost kubelet: I0412 02:10:01.001662 3445 manager.go:198] Machine: {NumCores:1 CpuFrequency:2799998 MemoryCapacity:1037021184 MachineID:5e9a9a0b58984bfb8766dba9afa8a191 SystemUUID:5e9a9a0b58984bfb8766dba9afa8a191 BootID:7ed1a6ff-9848-437b-9460-981eeefdfe5a Filesystems:[{Device:/dev/root Capacity:15447539712 Type:vfs Inodes:962880 HasInodes:true}] DiskMap:map[43:0:{Name:nbd0 Major:43 Minor:0 Size:0 Scheduler:none} 43:11:{Name:nbd11 Major:43 Minor:11 Size:0 Scheduler:none} 43:12:{Name:nbd12 Major:43 Minor:12 Size:0 Scheduler:none} 43:15:{Name:nbd15 Major:43 Minor:15 Size:0 Scheduler:none} 43:7:{Name:nbd7 Major:43 Minor:7 Size:0 Scheduler:none} 8:0:{Name:sda Major:8 Minor:0 Size:15728640000 Scheduler:cfq} 252:0:{Name:dm-0 Major:252 Minor:0 Size:107374182400 Scheduler:none} 43:1:{Name:nbd1 Major:43 Minor:1 Size:0 Scheduler:none} 43:13:{Name:nbd13 Major:43 Minor:13 Size:0 Scheduler:none} 43:8:{Name:nbd8 Major:43 Minor:8 Size:0 Scheduler:none} 8:16:{Name:sdb Major:8 Minor:16 Size:536870912 Scheduler:cfq} 9:0:{Name:md0 Major:9 Minor:0 Size:0 Scheduler:none} 43:3:{Name:nbd3 Major:43 Minor:3 Size:0 Scheduler:none} 43:9:{Name:nbd9 Major:43 Minor:9 Size:0 Scheduler:none} 43:10:{Name:nbd10 Major:43 Minor:10 Size:0 Scheduler:none} 43:14:{Name:nbd14 Major:43 Minor:14 Size:0 Scheduler:none} 43:2:{Name:nbd2 Major:43 Minor:2 Size:0 Scheduler:none} 43:4:{Name:nbd4 Major:43 Minor:4 Size:0 Scheduler:none} 43:5:{Name:nbd5 Major:43 Minor:5 Size:0 Scheduler:none} 43:6:{Name:nbd6 Major:43 Minor:6 Size:0 Scheduler:none}] NetworkDevices:[{Name:dummy0 MacAddress:5a:34:bf:e4:23:cc Speed:0 Mtu:1500} {Name:eth0 MacAddress:f2:3c:91:1f:cd:c3 Speed:-1 Mtu:1500} {Name:gre0 MacAddress:00:00:00:00 Speed:0 Mtu:1476} {Name:gretap0 MacAddress:00:00:00:00:00:00 Speed:0 Mtu:1462} {Name:ip6_vti0 MacAddress:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00 Speed:0 Mtu:1500} {Name:ip6gre0 MacAddress:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00 Speed:0 Mtu:1448} {Name:ip6tnl0 MacAddress:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00 Speed:0 Mtu:1452} {Name:ip_vti0 MacAddress:00:00:00:00 Speed:0 Mtu:1428} {Name:sit0 MacAddress:00:00:00:00 Speed:0 Mtu:1480} {Name:teql0 MacAddress: Speed:0 Mtu:1500} {Name:tunl0 MacAddress:00:00:00:00 Speed:0 Mtu:1480}] Topology:[{Id:0 Memory:1037021184 Cores:[{Id:0 Threads:[0] Caches:[{Size:32768 Type:Data Level:1} {Size:32768 Type:Instruction Level:1} {Size:4194304 Type:Unified Level:2}]}] Caches:[]}] CloudProvider:Unknown InstanceType:Unknown InstanceID:None}

Apr 12 02:10:01 localhost kubelet: I0412 02:10:01.013353 3445 manager.go:204] Version: {KernelVersion:4.9.15-x86_64-linode81 ContainerOsVersion:Fedora 25 (Server Edition) DockerVersion:1.12.6 CadvisorVersion: CadvisorRevision:}

Apr 12 02:10:01 localhost kubelet: I0412 02:10:01.014086 3445 server.go:509] --cgroups-per-qos enabled, but --cgroup-root was not specified. defaulting to /

Apr 12 02:10:01 localhost kubelet: W0412 02:10:01.016562 3445 container_manager_linux.go:218] Running with swap on is not supported, please disable swap! This will be a fatal error by default starting in K8s v1.6! In the meantime, you can opt-in to making this a fatal error by enabling --experimental-fail-swap-on.

Apr 12 02:10:01 localhost kubelet: I0412 02:10:01.016688 3445 container_manager_linux.go:245] container manager verified user specified cgroup-root exists: /

Apr 12 02:10:01 localhost kubelet: I0412 02:10:01.016717 3445 container_manager_linux.go:250] Creating Container Manager object based on Node Config: {RuntimeCgroupsName: SystemCgroupsName: KubeletCgroupsName: ContainerRuntime:docker CgroupsPerQOS:true CgroupRoot:/ CgroupDriver:cgroupfs ProtectKernelDefaults:false EnableCRI:true NodeAllocatableConfig:{KubeReservedCgroupName: SystemReservedCgroupName: EnforceNodeAllocatable:map[pods:{}] KubeReserved:map[] SystemReserved:map[] HardEvictionThresholds:[{Signal:memory.available Operator:LessThan Value:{Quantity:100Mi Percentage:0} GracePeriod:0s MinReclaim:

@acloudiator I think you need to set the cgroup driver in the kubelet drop-in that kubeadm installs:

vi /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

Environment="KUBELET_EXTRA_ARGS=--cgroup-driver=systemd"

Then run `systemctl daemon-reload` and restart the kubelet service.
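The flag only helps if it matches the driver Docker is really using; the kubelet log above reports `CgroupDriver:cgroupfs`. A sketch for comparing the two before restarting, assuming the drop-in path above and the `Cgroup Driver:` line that plain `docker info` prints. All three function names are hypothetical, and `kubelet_cgroup_driver` falls back to `cgroupfs`, the kubelet's default, when the flag is absent:

```shell
#!/usr/bin/env bash
# kubelet_cgroup_driver FILE: print the --cgroup-driver value set in a kubelet
# drop-in, or "cgroupfs" (the kubelet default) if the flag is not present.
kubelet_cgroup_driver() {
  local value
  value=$(grep -o -- '--cgroup-driver=[a-z]*' "$1" | tail -n 1 | cut -d= -f2)
  printf '%s\n' "${value:-cgroupfs}"
}

# docker_cgroup_driver: read `docker info` output on stdin and print the value
# of its "Cgroup Driver:" line.
docker_cgroup_driver() {
  awk -F': *' '/Cgroup Driver/ {print $2; exit}'
}

# cgroup_drivers_match FILE: compare the kubelet drop-in with the running Docker.
cgroup_drivers_match() {
  local k d
  k=$(kubelet_cgroup_driver "$1")
  d=$(docker info 2>/dev/null | docker_cgroup_driver)
  echo "kubelet: $k, docker: ${d:-unknown}"
  [[ $k == "$d" ]]
}
```

`cgroup_drivers_match /etc/systemd/system/kubelet.service.d/10-kubeadm.conf` prints both values and fails if they differ.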

It would be great if kubeadm could in some way deal with the cgroup configuration issue. Ideas:

Just an update: none of the workarounds I tried worked, so I moved to CentOS 7.3 for the master and it works like a charm! I kept the minions on CentOS 7.2, though.

@rushabh268 Hi, I had the same issue on Red Hat Linux 7.2. Updating systemd solved the problem. You can try updating systemd before installation.

yum update -y systemd

and the error log from kubelet:

kubelet.go:1752] skipping pod synchronization - [Failed to start ContainerManager systemd version does not support ability to start a slice as transient unit]
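To confirm that the update actually changed the installed version (and to compare notes with others in this thread), it helps to record the systemd version before and after. A tiny sketch; `systemd_version` is a hypothetical helper that reads `systemctl --version` output, whose first line looks like `systemd 219`:

```shell
#!/usr/bin/env bash
# systemd_version: read `systemctl --version` output on stdin and print the
# numeric version from its first line, e.g. "systemd 219" -> 219.
systemd_version() {
  awk 'NR == 1 && $1 == "systemd" {print $2}'
}
```

Run `systemctl --version | systemd_version` before and after `yum update -y systemd`; if the number does not change, the update did not pull in a newer package.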

I hit this issue on CentOS 7.3. The problem went away after I uninstalled docker-ce and installed docker-io instead. I am not sure whether that's the root cause. Anyway, you can give it a try if the methods above do not work.

@ZongqiangZhang I have docker 1.12.6 installed on my nodes. @juntaoXie I tried updating systemd as well and it's still stuck.

So I've been running CentOS 7.3 with 1.6.4 without issue on a number of machines.

Did you make certain you disabled selinux?
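`setenforce 0` only switches SELinux to permissive mode until the next reboot; the persistent setting is the `SELINUX=` line in `/etc/selinux/config`, which the recipe below only mentions as a comment. A minimal sketch of that edit, assuming GNU sed (for `-i.bak`). `disable_selinux_config` is a hypothetical helper that takes the file path as an argument so it can be tried on a copy first:

```shell
#!/usr/bin/env bash
# disable_selinux_config [FILE]: rewrite the SELINUX= line (default file
# /etc/selinux/config) to SELINUX=disabled, keeping a FILE.bak copy.
# SELINUXTYPE= is left alone because the pattern requires "=" right after
# "SELINUX". The new value only takes effect after a reboot.
disable_selinux_config() {
  local file=${1:-/etc/selinux/config}
  sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}
```

After rebooting, `getenforce` should print `Disabled`.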

@timothysc I have CentOS 7.2 and not CentOS 7.3 and selinux is disabled

I have CentOS Linux release 7.3.1611 (Core), and kubeadm 1.6.4 does not work.

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://yum.kubernetes.io/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

EOF

setenforce 0

# edit /etc/selinux/config and set SELINUX=disabled

yum install docker kubelet kubeadm kubectl kubernetes-cni

systemctl enable docker

systemctl start docker

systemctl enable kubelet

systemctl start kubelet

reboot

kubeadm init

Output:

kubeadm init

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

[init] Using Kubernetes version: v1.6.4

[init] Using Authorization mode: RBAC

[preflight] Running pre-flight checks

[preflight] WARNING: hostname "kubernet01.localdomain" could not be reached

[preflight] WARNING: hostname "kubernet01.localdomain" lookup kubernet01.localdomain on XXXXXXX:53: read udp XXXXXXX:56624->XXXXXXX:53: i/o timeout

[preflight] Starting the kubelet service

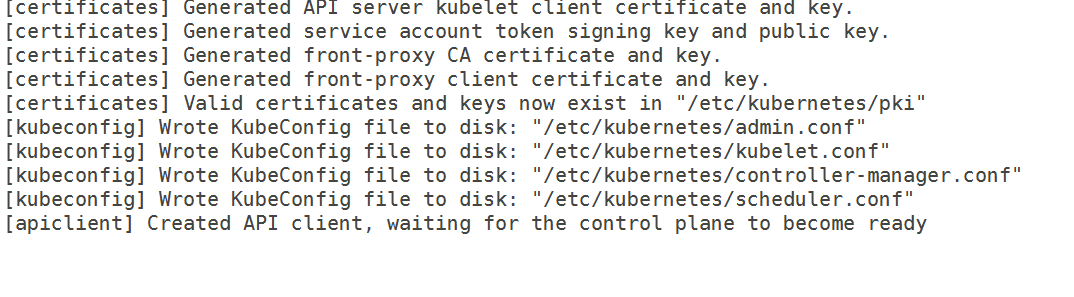

[certificates] Generated CA certificate and key.

[certificates] Generated API server certificate and key.

[certificates] API Server serving cert is signed for DNS names [kubernet01.localdomain kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.11.112.51]

[certificates] Generated API server kubelet client certificate and key.

[certificates] Generated service account token signing key and public key.

[certificates] Generated front-proxy CA certificate and key.

[certificates] Generated front-proxy client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[apiclient] Created API client, waiting for the control plane to become ready

Jun 06 17:13:12 kubernet01.localdomain kubelet[11429]: W0606 17:13:12.881451 11429 cni.go:157] Unable to update cni config: No networks found in /etc/cni/net.d

Jun 06 17:13:12 kubernet01.localdomain kubelet[11429]: E0606 17:13:12.882145 11429 kubelet.go:2067] Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

Jun 06 17:13:13 kubernet01.localdomain kubelet[11429]: E0606 17:13:13.519992 11429 reflector.go:190] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to list *v1.Pod: Get https://10.11.112.51:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dkubernet01.localdomain&resourceVersion=0: dial tcp 10.11.112.51:6443: getsockopt: connection refused

Jun 06 17:13:13 kubernet01.localdomain kubelet[11429]: E0606 17:13:13.520798 11429 reflector.go:190] k8s.io/kubernetes/pkg/kubelet/kubelet.go:382: Failed to list *v1.Service: Get https://10.11.112.51:6443/api/v1/services?resourceVersion=0: dial tcp 10.11.112.51:6443: getsockopt: connection refused

Jun 06 17:13:13 kubernet01.localdomain kubelet[11429]: E0606 17:13:13.521493 11429 reflector.go:190] k8s.io/kubernetes/pkg/kubelet/kubelet.go:390: Failed to list *v1.Node: Get https://10.11.112.51:6443/api/v1/nodes?fieldSelector=metadata.name%3Dkubernet01.localdomain&resourceVersion=0: dial tcp 10.11.112.51:6443: getsockopt: connection refused

Jun 06 17:13:14 kubernet01.localdomain kubelet[11429]: E0606 17:13:14.337588 11429 event.go:208] Unable to write event: 'dial tcp 10.11.112.51:6443: getsockopt: connection refused' (may retry after sleeping)

@paulobezerr, can you share a bit more of the kube-apiserver logs (those at the end of your comment)?

Do the rows above what you've already included mention the same IP address? I recently tried running k8s on two new KVMs, one with Ubuntu 16.04 and one with CentOS 7.3. Both gave this:

[restful] 2017/05/30 19:31:38 log.go:30: [restful/swagger] listing is available at https://x.x.x.x:6443/swaggerapi/

[restful] 2017/05/30 19:31:38 log.go:30: [restful/swagger] https://x.x.x.x:6443/swaggerui/ is mapped to folder /swagger-ui/

E0530 19:31:38.313090 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list *rbac.RoleBinding: Get https://localhost:6443/apis/rbac.authorization.k8s.io/v1beta1/rolebindings?resourceVersion=0: dial tcp y.y.y.y:6443: getsockopt: connection refused

Note that the IP address mentioned first is x.x.x.x, but then localhost is resolved to y.y.y.y (which in my case was the public IP of another KVM sitting on the same physical server). I could start kubeadm on Ubuntu in the end, but only after installing dnsmasq in a similar way to https://github.com/kubernetes/kubeadm/issues/113#issuecomment-273115861. The same workaround on CentOS did not help.

Can this be a bug in kubedns or something? Interestingly, the same steps on AWS VMs did bring kubeadm up. But EC2 instances are too expensive for my personal projects.
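When comparing logs like these, the telling pattern is a `Get https://localhost:6443/...` whose `dial tcp` target is not a loopback address. A rough sketch for pulling such addresses out of a kube-apiserver log (for example the output of `docker logs` for the apiserver container). `misresolved_localhost` is a hypothetical helper, and its patterns assume the `https://localhost:6443/...: dial tcp ADDRESS:PORT:` format quoted in this thread:

```shell
#!/usr/bin/env bash
# misresolved_localhost: read kube-apiserver log text on stdin and print each
# distinct address that a https://localhost:... request was actually dialed
# at, skipping loopback addresses (127.x.x.x and [::1]).
misresolved_localhost() {
  grep -o 'https://localhost:[0-9]*/[^ ]*: dial tcp [^ ]*' |
    sed -e 's/.*: dial tcp //' -e 's/:$//' -e 's/:[0-9]*$//' |
    grep -v -e '^127\.' -e '^\[::1\]$' |
    sort -u
}
```

Empty output means every `localhost` request went to a loopback address; anything printed is the address `localhost` was resolved to instead, like y.y.y.y above. Requests to a real IP such as 10.X.X.X are not matched, so this separates the two kinds of failure discussed in this thread.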

I have the same problem as @paulobezerr.

## Versions:

kubelet-1.6.4-0.x86_64, kubernetes-cni-0.5.1-0.x86_64, kubectl-1.6.4-0.x86_64, kubeadm-1.6.4-0.x86_64, docker-client-1.12.6-28.git1398f24.el7.centos.x86_64, docker-common-1.12.6-28.git1398f24.el7.centos.x86_64, docker-1.12.6-28.git1398f24.el7.centos.x86_64

OS: `uname -r` gives 3.10.0-229.1.2.el7.x86_64 and `cat /etc/redhat-release` gives CentOS Linux release 7.3.1611 (Core).

## Steps followed:

1. sudo yum install -y docker

2. sudo groupadd docker

3. sudo usermod -aG docker $(whoami)

4. curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl

5. chmod +x ./kubectl

6. sudo mv ./kubectl /usr/local/bin/kubectl

7. echo "source <(kubectl completion bash)" >> ~/.bashrc

8. sudo -i

9. cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

10. setenforce 0

11. yum install -y docker kubelet kubeadm kubectl kubernetes-cni

12. systemctl enable docker && systemctl start docker

13. systemctl enable kubelet && systemctl start kubelet

14. echo -e "net.bridge.bridge-nf-call-ip6tables = 1\nnet.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.d/99-sysctl.conf && sudo service network restart

15. firewall-cmd --zone=public --add-port=6443/tcp --permanent && sudo firewall-cmd --zone=public --add-port=10250/tcp --permanent && sudo systemctl restart firewalld

16. firewall-cmd --permanent --zone=trusted --change-interface=docker0

## api-server logs:

(37.247.XX.XXX is the public IP)

[restful] 2017/06/08 10:45:19 log.go:30: [restful/swagger] listing is available at https://37.247.XX.XXX:6443/swaggerapi/

[restful] 2017/06/08 10:45:19 log.go:30: [restful/swagger] https://37.247.XX.XXX:6443/swaggerui/ is mapped to folder /swagger-ui/

E0608 10:45:19.429839 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list api.Secret: Get https://localhost:6443/api/v1/secrets?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.430419 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list api.ResourceQuota: Get https://localhost:6443/api/v1/resourcequotas?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.430743 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list api.ServiceAccount: Get https://localhost:6443/api/v1/serviceaccounts?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.431076 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list storage.StorageClass: Get https://localhost:6443/apis/storage.k8s.io/v1beta1/storageclasses?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.431377 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list api.LimitRange: Get https://localhost:6443/api/v1/limitranges?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.431678 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list rbac.RoleBinding: Get https://localhost:6443/apis/rbac.authorization.k8s.io/v1beta1/rolebindings?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.431967 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list rbac.ClusterRoleBinding: Get https://localhost:6443/apis/rbac.authorization.k8s.io/v1beta1/clusterrolebindings?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.432165 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list api.Namespace: Get https://localhost:6443/api/v1/namespaces?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.432386 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list rbac.ClusterRole: Get https://localhost:6443/apis/rbac.authorization.k8s.io/v1beta1/clusterroles?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.432619 1 reflector.go:201] k8s.io/kubernetes/pkg/client/informers/informers_generated/internalversion/factory.go:70: Failed to list rbac.Role: Get https://localhost:6443/apis/rbac.authorization.k8s.io/v1beta1/roles?resourceVersion=0: dial tcp 108.59.253.109:6443: getsockopt: connection refused

I0608 10:45:19.481612 1 serve.go:79] Serving securely on 0.0.0.0:6443

W0608 10:45:19.596770 1 storage_extensions.go:127] third party resource sync failed: Get https://localhost:6443/apis/extensions/v1beta1/thirdpartyresources: dial tcp 108.59.253.109:6443: getsockopt: connection refused

E0608 10:45:19.596945 1 client_ca_hook.go:58] Post https://localhost:6443/api/v1/namespaces: dial tcp 108.59.253.109:6443: getsockopt: connection refused

F0608 10:45:19.597174 1 controller.go:128] Unable to perform initial IP allocation check: unable to refresh the service IP block: Get https://localhost:6443/api/v1/services: dial tcp 108.59.253.109:6443: getsockopt: connection refused

@albpal I had exactly the same issue a week ago: dial tcp X.X.X.X was showing an odd IP address, and I could not solve this on CentOS even after installing dnsmasq and switching to Google DNS servers instead of my hosting provider's DNS. Out of curiosity: can you check whether the wrong IP address you are seeing is in the same data center as your VM? You can use http://ipinfo.io to estimate that with some degree of certainty, or just traceroute.

In my case the wrong IP address was referring to another KVM on the same physical server. Can be something to do with DNS on a physical machine, which may require a workaround inside kube api or kube dns, otherwise starting a cluster becomes a huge pain for many newcomers! I've wasted a few evenings before noticing dial tcp with a wrong IP in logs, which was a pretty sad first k8s experience. Still don't have a good out-of-box solution for CentOS KVMs on my hosting provider (firstvds.ru).

What can cause this very odd mismatch in IP addresses?

@albpal Please open a new issue, what you described and what this issue is about are separate issues (I think, based on that info)

@kachkaev I have just checked what you suggested.

I found that the wrong IP leads to a cPanel host: vps-1054290-4055.manage.myhosting.com.

On the other hand, my VPS's public IP is in Italy and this wrong IP is in the USA, so although the wrong IP is related to hosting (cPanel), it doesn't seem to refer to another KVM on the same physical server.

Were you able to install k8s?

@luxas I have the same behavior but I copied the docker logs

Both /var/log/messages and the kubeadm init output are the same as in the original issue.

@albpal so your VM and that second machine are both on cPanel? Good sign, because then my case is the same! The fact that it was the same physical machine could just be a coincidence.

I used two KVMs in my experiments, one with Ubuntu 16.04 and another with CentOS 7.3. Both had the same dial tcp IP address issue. I was able to start kubeadm on Ubuntu in the end by getting rid of my provider's DNS servers. The solution was based on crilozs' advice:

apt-get install dnsmasq

rm -rf /etc/resolv.conf

echo "nameserver 127.0.0.1" > /etc/resolv.conf

chmod 444 /etc/resolv.conf

chattr +i /etc/resolv.conf

echo "server=8.8.8.8
server=8.8.4.4" > /etc/dnsmasq.conf

service dnsmasq restart

# reboot just in case

This brought the right IP address after dial tcp in the logs on Ubuntu, and kubeadm initialized after a couple of minutes! I tried setting up dnsmasq on CentOS the same way, but this did not solve the issue. But I'm a total newbie with this OS, so it may be that I just forgot to restart some service or clean some cache. Give this idea a try!
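Before re-running `kubeadm init`, it is worth confirming that the host really resolves through the local dnsmasq now. A small sketch, assuming the single `nameserver 127.0.0.1` layout written above; `nameservers` and `uses_local_dnsmasq` are hypothetical helpers that take an optional resolv.conf path:

```shell
#!/usr/bin/env bash
# nameservers [FILE]: print the nameserver addresses listed in a resolv.conf
# (default /etc/resolv.conf), one per line, in file order.
nameservers() {
  awk '$1 == "nameserver" {print $2}' "${1:-/etc/resolv.conf}"
}

# uses_local_dnsmasq [FILE]: succeed only if 127.0.0.1 is the sole nameserver.
uses_local_dnsmasq() {
  [[ $(nameservers "$1") == "127.0.0.1" ]]
}
```

If `uses_local_dnsmasq` fails after the reboot, something rewrote the file and the provider's DNS servers are back in play.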

In any case, it feels wrong to need an extra step of reconfiguring DNS, because it's very confusing (I'm not a server/devops guy, and the whole investigation I went through almost made me cry :fearful:). I hope kubeadm will one day be able to detect when the provider's DNS servers behave strangely and automatically fix whatever is needed in the cluster.

If anyone from the k8s team is willing to see what's happening, I'll be happy to share root access on a couple of new FirstVDS KVMs. Just email me or DM on twitter!

Thanks @kachkaev ! I will try it tomorrow

cc @kubernetes/sig-network-bugs Do you have an idea of why the DNS resolution fails above?

Thanks @kachkaev we'll try to look into it. I don't think it's really kubeadm's fault per se, but if many users are stuck on the same misconfiguration we might add it to troubleshooting docs or so...

My logs look very similar to @albpal's. But I'll try dnsmasq. Thank you all!

@kachkaev, it does not work. Same problem 😢 The complete log is attached.

I have been able to fix it!! Thanks so much @kachkaev for your hints!

I think the problem was:

### Scenario: A VPS with the following configuration schema:

resolv.conf:

[root@apalau ~]# cat resolv.conf

nameserver 8.8.8.8

nameserver 8.8.4.4

nameserver 2001:4860:4860::8888

nameserver 2001:4860:4860::8844

There is no search domain!

hosts:

[root@apalau ~]# cat /etc/hosts

127.0.0.1 localhost.localdomain localhost

37.XXX.XX.XXX name.vpshosting.com

As per logs, kubernetes container tries to connect to:

Get https://localhost:6443/api/v1/secrets?resourceVersion=0

And when I run `nslookup "localhost.$(hostname -d)"`, the IP I get is the wrong one, i.e. 108.59.253.109.

So I think these containers are trying to resolve localhost (without a domain) and getting the wrong IP, probably because "localhost.$(hostname -d)" resolves to that IP, which I think will happen on almost any VPS service.

## What I did to fix the issue on a CentOS 7.3 VPS (apart from the steps shown at https://kubernetes.io/docs/setup/independent/install-kubeadm/#installing-kubelet-and-kubeadm):

As root:

I added the hostname -i at step 5 because if I don't, docker will add 8.8.8.8 to resolv.conf on the containers.

I hope it helps others as well.

Thanks!!

Glad to hear that @albpal! I went through your steps before kubeadm init and the cluster finally initialised inside my test FirstVDS KVM with CentOS 7.3! The only extra thing I had to do was to stop and disable firewalld as it was blocking external connections to port 6443:

systemctl disable firewalld

systemctl stop firewalld

I do not recommend doing this because I'm not aware of the consequences; it just helped me complete a test on an OS I normally don't use.

Now I'm wondering what could be done to ease the install process for newbies like myself. The path between getting stuck at Created API client, waiting for the control plane to become ready and sorting things out is still huge, especially if we take into account the time needed to dig out this issue and read through all the comments. What can you guys suggest?

@paulobezerr from what I see in your attachment I believe that your problem is slightly different. My apiserver logs contain something like:

reflector.go:190] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to list *v1.Pod:

Get https://localhost:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dhostname&resourceVersion=0:

dial tcp RANDOM_IP:6443: getsockopt: connection refused

while yours say:

reflector.go:190] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to list *v1.Pod:

Get https://10.X.X.X:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dhostname&resourceVersion=0:

dial tcp 10.X.X.X:6443: getsockopt: connection refused

(in the first case it is localhost / RANDOM_IP, while in the second case it is always 10.X.X.X)

Unfortunately, I do not know what to advise except trying various values of `--apiserver-advertise-address` when you run `kubeadm init` (see docs). My hands-on k8s experience has just reached 10 days, most of which were vain attempts to start a single-node cluster on FirstVDS :-)
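If you do try `--apiserver-advertise-address`, one way to pick a candidate is the source address the kernel would use for outbound traffic. A sketch, assuming the interface carrying the default route is the one other nodes should reach (not true on every multi-NIC host). `src_ip_from_route` is a hypothetical helper that parses `ip -4 route get` output; 8.8.8.8 is only used as a routing destination, and `ip route get` sends no packets:

```shell
#!/usr/bin/env bash
# src_ip_from_route: read `ip -4 route get <dest>` output on stdin and print
# the source address the kernel would use (the token after "src").
src_ip_from_route() {
  awk '{for (i = 1; i < NF; i++) if ($i == "src") {print $(i + 1); exit}}'
}
```

For example: `kubeadm init --apiserver-advertise-address="$(ip -4 route get 8.8.8.8 | src_ip_from_route)"`.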

Hope you get this sorted and share the solution with others!

@kachkaev I forgot to mention I've applied the following firewall rule:

$ firewall-cmd --zone=public --add-port=6443/tcp --permanent && sudo firewall-cmd --zone=public --add-port=10250/tcp --permanent && sudo systemctl restart firewalld

It works fine on my environment when applied this rule without deactivating the firewall. I'll add it to my previous comment to collect all the needed steps.

@juntaoXie Thanks. Updating systemd version per your comment worked for me.

I've been stuck on this issue for two days now. I am running all this behind a proxy, and that doesn't seem to be the problem. `kubeadm init` hangs on waiting for the control plane to become ready. When I run `docker ps`, the containers are pulled and running, but no ports are being allocated (I don't know whether they are supposed to be). etcd is also running fine. However, when I look at my kubelet service, it says `Unable to update cni config: No networks found in /etc/cni/net.d`, which https://github.com/kubernetes/kubernetes/issues/43815 says is OK: you need to apply a CNI network. I do that as per https://www.weave.works/docs/net/latest/kubernetes/kube-addon/, but now kubectl says "8080 was refused - did you specify the right host or port?" It seems like a chicken-and-egg problem: how do I apply a CNI network when my `kubeadm init` hangs? This is so confusing.

This is also not a cgroup problem, both docker and my kubelet service use systemd.

FWIW, I had this same problem on GCP. I tried Ubuntu 16.04 and CentOS, using the following commands in a clean project:

$ gcloud compute instances create test-api-01 --zone us-west1-a --image-family ubuntu-1604-lts --image-project ubuntu-os-cloud --machine-type f1-micro --description 'node 1 for api testing'

$ gcloud compute instances create test-api-02 --zone us-west1-b --image-family ubuntu-1604-lts --image-project ubuntu-os-cloud --machine-type f1-micro --description 'node 2 for api testing'

$ gcloud compute instances create test-api-03 --zone us-west1-c --image-family ubuntu-1604-lts --image-project ubuntu-os-cloud --machine-type f1-micro --description 'node 3 for api testing'

$ apt-get update

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

$ apt-get update && apt-get install -qy docker.io && apt-get install -y apt-transport-https

$ echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" > /etc/apt/sources.list.d/kubernetes.list

$ apt-get update && apt-get install -y kubelet kubeadm kubernetes-cni

$ systemctl restart kubelet

$ kubeadm init

So after beating my head against it for several hours I ended up going with:

$ gcloud beta container --project "weather-177507" clusters create "weather-api-cluster-1" --zone "us-west1-a" --username="admin" --cluster-version "1.6.7" --machine-type "f1-micro" --image-type "COS" --disk-size "100" --scopes "https://www.googleapis.com/auth/compute","https://www.googleapis.com/auth/devstorage.read_only","https://www.googleapis.com/auth/logging.write","https://www.googleapis.com/auth/monitoring.write","https://www.googleapis.com/auth/servicecontrol","https://www.googleapis.com/auth/service.management.readonly","https://www.googleapis.com/auth/trace.append" --num-nodes "3" --network "default" --enable-cloud-logging --no-enable-cloud-monitoring --enable-legacy-authorization

I was able to get a cluster up and running where I couldn't from a blank image.

I am also facing the same issue with kubeadm version:

It's getting stuck at:

[apiclient] Created API client, waiting for the control plane to become ready

Same problem as @paulobezerr - my env: CentOS 7.4.1708 kubeadm version: &version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.0", GitCommit:"6e937839ac04a38cac63e6a7a306c5d035fe7b0a", GitTreeState:"clean", BuildDate:"2017-09-28T22:46:41Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

For me, the issue was that SELinux was not disabled. The clue was this comment in his steps:

The install steps here (https://kubernetes.io/docs/setup/independent/install-kubeadm/) for CentOS say: "Disabling SELinux by running setenforce 0 is required to allow containers to access the host filesystem", but they don't mention (at least on the CentOS/RHEL/Fedora tab) that you should also edit /etc/selinux/config and set SELINUX=disabled.

For me, even though I had run setenforce 0, I was still getting the same errors. Editing /etc/selinux/config and setting SELINUX=disabled, then rebooting fixed it for me.
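Since setenforce 0 only changes the running system, the persistent part is the SELINUX= line in /etc/selinux/config. A minimal sketch of that edit follows; persist_selinux_disabled is a hypothetical name, and it deliberately leaves the SELINUXTYPE= line alone.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the SELINUX= line of an SELinux config file
# (default /etc/selinux/config) so SELinux stays disabled after a reboot.
persist_selinux_disabled() {
  local cfg="${1:-/etc/selinux/config}"
  # ^SELINUX= does not match SELINUXTYPE=, so only the mode line changes.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Run it as root together with setenforce 0, then reboot as described above.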

There seem to be a lot of (potentially orthogonal) issues at play here, so I'm keen for us not to let things diverge. So far we seem to have pinpointed 3 issues:

1. DNS fails to resolve localhost correctly on some machines. @kachkaev @paulobezerr Did you manage to fix this? I'm wondering how to make this more explicit in our requirements; any ideas?
2. Mismatched cgroup driver between kubelet and Docker. We should add this to our requirements list.
3. SELinux is not disabled. We should add this to our requirements list.
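For the localhost point, one rough check (a sketch, not something kubeadm does) is to resolve localhost with getent ahosts localhost and confirm every returned address is a loopback one. The helper name all_loopback is hypothetical; it reads one address per line on stdin and succeeds only if at least one address was read and all of them are in 127.0.0.0/8 or are ::1.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if stdin holds at least one address
# (one per line) and every address is loopback (127.0.0.0/8 or ::1).
all_loopback() {
  awk 'NF { n++; if ($1 !~ /^127\./ && $1 != "::1") bad = 1 }
       END { if (n == 0 || bad) exit 1; exit 0 }'
}
```

For example: getent ahosts localhost | awk '{print $1}' | all_loopback || echo "localhost does not resolve to loopback"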

Once all three are addressed with PRs, perhaps we should close this and let folks who run into problems in the future create their own issues. That will allow us to receive more structured information and provide more granular support, as opposed to juggling lots of things in one thread. What do you think @luxas?

For me, I went with Docker 17.06 (17.03 is recommended, but it isn't available from docker.io) and ran into the same problem. Upgrading to 17.09 magically fixed the issue.

As this thread has gotten so long, and there are probably a lot of totally different issues in it, the most productive thing I can add besides @jamiehannaford's excellent comment is a request: please open new, targeted issues with all relevant logs and information if something fails with the newest kubeadm v1.8, which detects a faulty state automatically and much better than earlier versions. We have also improved our documentation around requirements and edge cases, which will hopefully save people time.

Thank you everyone!

I had the same issue with 1.8 on CentOS 7. Has anyone else had the same issue, or does anyone know how to fix it?

@rushins If you want to get help with the possible issue you're seeing, open a new issue here with sufficient details.

After downloading kubeadm 1.6.1 and starting kubeadm init, it gets stuck at [apiclient] Created API client, waiting for the control plane to become ready

I have the following 10-kubeadm.conf

So, it's no longer a cgroup issue. I have also flushed the iptables rules and disabled SELinux. I have also specified the IP address of the interface I want to use for my master, but it still doesn't go through.

From the logs,

Versions

kubeadm version (use kubeadm version):
$ kubeadm version
kubeadm version: version.Info{Major:"1", Minor:"6", GitVersion:"v1.6.1", GitCommit:"b0b7a323cc5a4a2019b2e9520c21c7830b7f708e", GitTreeState:"clean", BuildDate:"2017-04-03T20:33:27Z", GoVersion:"go1.7.5", Compiler:"gc", Platform:"linux/amd64"}

Environment:

Kubernetes version (use kubectl version):

Cloud provider or hardware configuration: Bare metal nodes

OS (e.g. from /etc/os-release):
$ cat /etc/redhat-release
CentOS Linux release 7.2.1511 (Core)

Kernel (e.g. uname -a):
$ uname -a
Linux hostname 3.10.0-327.18.2.el7.x86_64 #1 SMP Thu May 12 11:03:55 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux

Others:
$ docker -v
Docker version 1.12.6, build 96d83a5/1.12.6
$ rpm -qa | grep kube
kubelet-1.6.1-0.x86_64
kubernetes-cni-0.5.1-0.x86_64
kubeadm-1.6.1-0.x86_64
kubectl-1.6.1-0.x86_64

What happened?

kubeadm gets stuck waiting for the control plane to become ready.

What you expected to happen?

It should have gone through and finished the init