Hi @chriswmackey, to quote the glossary from *Rendering with Radiance*:

"diffraction: The deviation from linear propagation that occurs when light passes a small object or opening. This phenomenon is significant only when the object or opening is on the order of the wavelength of light, between 380 and 780 nanometers for human vision. For this reason, diffraction effects are ignored in most rendering algorithms, since most modeled geometry is on a much larger scale."
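To put rough numbers on the glossary's point, here is a minimal Python sketch using the textbook Fraunhofer single-slit relation sin θ = λ/a for the angle of the first dark fringe. The 555 nm wavelength and the aperture widths are assumptions chosen for illustration, not values from this thread, and `first_minimum_angle_deg` is a hypothetical helper.

```python
import math


def first_minimum_angle_deg(wavelength_m: float, aperture_m: float) -> float:
    """Angle (degrees) of the first dark fringe behind a single slit of width
    aperture_m, using the Fraunhofer relation sin(theta) = wavelength / aperture."""
    ratio = wavelength_m / aperture_m
    if ratio >= 1.0:
        # Opening no wider than the wavelength: there is no first minimum and
        # light spreads into the whole half-space behind the slit.
        return 90.0
    return math.degrees(math.asin(ratio))


# Assumed wavelength, near the peak of photopic sensitivity.
WAVELENGTH = 555e-9  # m

# Hypothetical apertures, from a louver gap down to a wavelength-scale slit.
APERTURES = [
    ("50 mm louver gap", 50e-3),
    ("1 mm slot", 1e-3),
    ("10 um slit", 10e-6),
    ("1 um slit", 1e-6),
]

for label, width in APERTURES:
    angle = first_minimum_angle_deg(WAVELENGTH, width)
    print(f"{label:>18}: {angle:.4f} deg")
```

With these assumptions, a 50 mm gap puts the first dark fringe less than a thousandth of a degree from the geometric shadow edge, while a 1 µm slit spreads light by more than 30 degrees. That gap in scale is why treating louvers and window openings purely geometrically is usually safe.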

In addition to the above, there is also the issue of Radiance being a three-channel (RGB) system. The algorithms inside Radiance appear to be focused primarily on photopic calculations. The graininess you see in your simulation looks more like an artifact of the simulation settings.

I have tested such simulations with forward ray tracing (photon mapping) and have gotten better results.

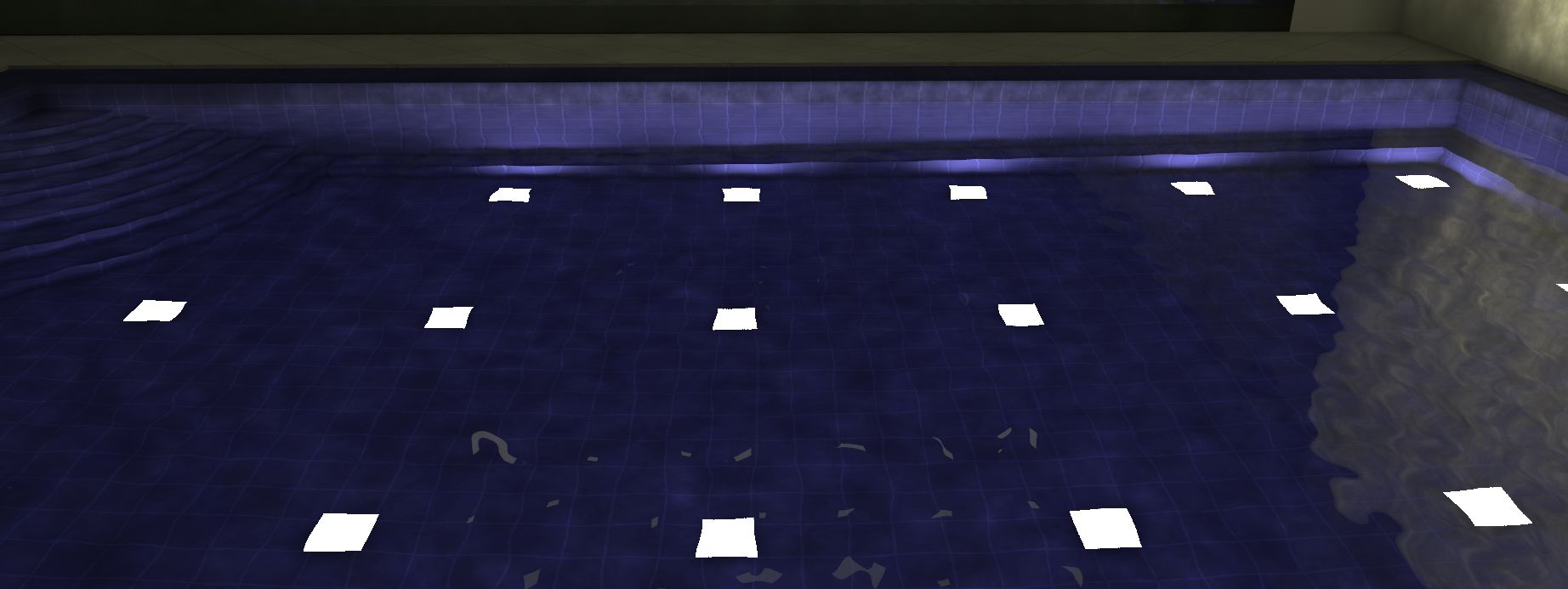

Here is an actual pool with underwater lights.


@sariths and @mostaphaRoudsari ,

I have to admit that I am asking this question with only a tangential practical application in mind. Partly, I want to know whether Radiance is accounting for the "dappling" or diffraction of light around some louvers on a project at my office, which seems to be the case from these high-quality renderings:

The other reason, though, is that this question has been bugging me intellectually over the past few weeks, after I watched several documentaries on quantum physics. Mainly, I want to know: is Radiance capable of simulating the double-slit experiment, which was the original basis for the argument that light behaves like a wave? If so, it would be great to have an explanation of how Radiance models this, because it seems like something that simple ray tracing might not accommodate.
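The core of the double-slit question can be sketched numerically. A ray tracer, as far as I understand Radiance included, carries energy (radiance) along each ray and never tracks phase, so contributions from the two slits simply add as intensities. Wave optics instead adds complex amplitudes and then squares, which produces interference fringes. The minimal Python sketch below compares the two at a few screen positions; the wavelength, slit separation, and screen distance are hypothetical values, each slit is idealized as a unit-amplitude point source, and distance falloff and the single-slit envelope are ignored.

```python
import cmath
import math

# Hypothetical set-up (not from the thread): green light, two slits 50 um apart,
# screen 1 m away. Each slit is idealized as a point source of unit amplitude.
WAVELENGTH = 555e-9       # m
SLIT_SEPARATION = 50e-6   # m
SCREEN_DISTANCE = 1.0     # m
K = 2 * math.pi / WAVELENGTH  # wavenumber (rad/m)


def path_difference(y: float) -> float:
    """Difference in distance from the two slits (at +/- d/2) to screen height y."""
    half = SLIT_SEPARATION / 2
    r_upper = math.hypot(SCREEN_DISTANCE, y - half)
    r_lower = math.hypot(SCREEN_DISTANCE, y + half)
    return r_lower - r_upper


def ray_intensity(y: float) -> float:
    """Ray-tracing view: each slit delivers energy and phase is never tracked,
    so the two contributions simply add (|E1|^2 + |E2|^2)."""
    return 1.0 + 1.0


def wave_intensity(y: float) -> float:
    """Wave view: add the complex amplitudes first, then square (|E1 + E2|^2).
    Only the relative phase matters, so slit 1 is the phase reference."""
    field = 1 + cmath.exp(1j * K * path_difference(y))
    return abs(field) ** 2


# Small-angle estimate of the distance between neighbouring bright fringes.
FRINGE_SPACING = WAVELENGTH * SCREEN_DISTANCE / SLIT_SEPARATION

for step in range(5):
    y = step * FRINGE_SPACING / 2
    print(f"y = {y * 1e3:6.2f} mm   rays: {ray_intensity(y):.2f}   waves: {wave_intensity(y):.2f}")
```

The "rays" column stays flat, while the "waves" column swings between roughly 4 and 0 every half fringe spacing (about 5.55 mm with these numbers). Getting the fringes requires carrying phase along each path, which a purely energy-based ray tracer does not do.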

I remember that you mentioned in our workshop, @sariths , that Radiance can simulate "practically anything with the exception of underwater renderings." Does this "practically anything" include the diffraction of light?

Thank you as always, great gurus of Radiance!

-Chris