Here is the "algorithm": https://github.com/lark-parser/lark/blob/master/lark/load_grammar.py#L260
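The linked code names an anonymous string terminal after the characters it contains, using a lookup table. The snippet below is a hypothetical, simplified sketch of that idea, not Lark's actual code. `PUNCT_NAMES` and `name_anonymous_terminal` are illustrative names. The rule of joining per-character names with `_` for multi-character literals is an assumption, and so is the `__` prefix, which only mirrors the token types quoted in the question.

```python
# Hypothetical, simplified sketch of naming anonymous string terminals.
# NOT Lark's actual implementation; see load_grammar.py for the real table and rules.
PUNCT_NAMES = {
    "=": "EQUAL", "(": "LPAR", ")": "RPAR",
    "+": "PLUS", "-": "MINUS", "*": "STAR", "/": "SLASH",
}


def name_anonymous_terminal(literal: str, anon_counter: int) -> str:
    """Return a readable name for an inline string literal such as "+"."""
    if literal and all(ch in PUNCT_NAMES for ch in literal):
        # Every character has a known name: join them (assumed rule), e.g. "==" -> __EQUAL_EQUAL.
        return "__" + "_".join(PUNCT_NAMES[ch] for ch in literal)
    # No readable name can be built: fall back to a numbered anonymous name.
    return f"__ANON_{anon_counter}"


print(name_anonymous_terminal("+", 0))
```

Because the names come from a fixed table, the same literal always gets the same type name. That is why these names are stable enough to key on.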

grammar1 looks good.
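With explicitly named terminals, highlighting reduces to a lookup from `token.type` to a style number. The sketch below shows that lookup under some assumptions. `Token` is a hypothetical stand-in for a lexer token with a type, its text and a start offset. The terminal names and the style numbers in `STYLE_BY_TYPE` are made up for illustration. The function turns a token stream into contiguous `(start, length, style)` runs and gives gaps such as whitespace the default style. It assumes ASCII text, because Scintilla positions are byte offsets.

```python
from typing import List, NamedTuple, Tuple


class Token(NamedTuple):
    # Hypothetical stand-in for a lexer token: type name, matched text, start offset.
    type: str
    value: str
    start_pos: int


# Hypothetical style numbers for hypothetical terminal names; 0 is the default style.
STYLE_BY_TYPE = {"NUMBER": 1, "PLUS": 2, "MINUS": 2, "STAR": 2, "SLASH": 2, "LPAR": 3, "RPAR": 3}


def styling_runs(text: str, tokens: List[Token], default_style: int = 0) -> List[Tuple[int, int, int]]:
    """Cover `text` with (start, length, style) runs, one per token plus one per gap."""
    runs = []
    pos = 0
    for tok in tokens:
        if tok.start_pos > pos:
            # Text the lexer skipped (e.g. whitespace) gets the default style.
            runs.append((pos, tok.start_pos - pos, default_style))
        runs.append((tok.start_pos, len(tok.value), STYLE_BY_TYPE.get(tok.type, default_style)))
        pos = tok.start_pos + len(tok.value)
    if pos < len(text):
        runs.append((pos, len(text) - pos, default_style))
    return runs


print(styling_runs("1 + 2", [Token("NUMBER", "1", 0), Token("PLUS", "+", 2), Token("NUMBER", "2", 4)]))
```

In a `QsciLexerCustom.styleText` override, each run would map to a `startStyling(start)` / `setStyling(length, style)` pair. Treat that wiring as a suggestion rather than a tested recipe.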

grammar2 won't work. You should "classify" the tokens after lexing is done: either use a lookup table, or name them appropriately, e.g.

`OP_SLASH: "/"`
`OP_STAR: "*"`
`OP_MINUS: "-"`
`OP_PLUS: "+"`

And later: `if token.type.startswith('OP_'): ...`
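Both options collapse many token types into one highlighting category after lexing. The sketch below shows each approach. `Token` is a hypothetical stand-in with only the `type` and `value` attributes the classification needs. The category names and the `NUMBER` type are illustrative assumptions. The `OP_*` names are the ones suggested above.

```python
from typing import NamedTuple


class Token(NamedTuple):
    # Hypothetical stand-in for a lexer token; only .type is used for classification.
    type: str
    value: str


# Option 1: explicit lookup table from token type to highlighting category.
CATEGORY_BY_TYPE = {
    "OP_SLASH": "operator", "OP_STAR": "operator",
    "OP_MINUS": "operator", "OP_PLUS": "operator",
    "NUMBER": "number",  # hypothetical extra terminal
}


def category_from_table(tok: Token) -> str:
    return CATEGORY_BY_TYPE.get(tok.type, "default")


# Option 2: naming convention, so new OP_* terminals need no table update.
def category_from_prefix(tok: Token) -> str:
    if tok.type.startswith("OP_"):
        return "operator"
    return "default"


tok = Token("OP_PLUS", "+")
print(category_from_table(tok), category_from_prefix(tok))
```

The table is explicit and can group terminals with unrelated names. The prefix convention needs no maintenance but depends on naming discipline in the grammar. Either way, each category can then map to a single QScintilla style.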

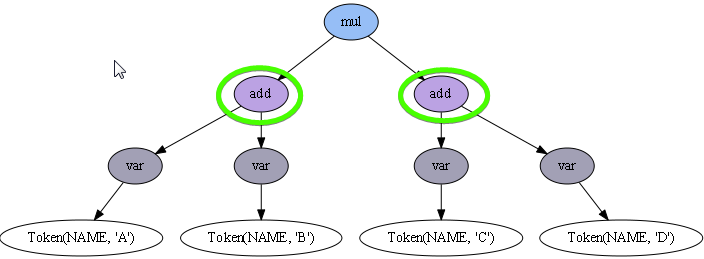

Consider the below snippet:

whose output is:

I've got some questions:

0) About grammar0: this set of token types, `'__EQUAL', '__LPAR', '__MINUS', '__PLUS', '__RPAR', '__SLASH', '__STAR'`, is generated automagically. How does this work internally?

1) About grammar1: following this method, I'll be able to easily identify the token types, so I can use them for syntax highlighting with QScintilla. Is there any problem with this approach?

2) About grammar2: if I want to syntax-highlight a group of similar tokens, how can I do that? In this case the token types are still generated automatically instead of becoming OPERATOR. I'd like to be able to apply one QScintilla style to a bunch of related tokens (i.e. `OPERATORS= " | "(" | ")" | "/" | "*" | "-" | "+"`).