The current approach to generating sentinel images is to overlay 4 days worth of images to "plug" the holes that they have (gaps in the coverage of an area for a given day). This approach creates a full image, but doesn't always leave you the "best" possible image, as the output is order dependent and doesn't account for which images have better land coverage.

I have my own approach at solving this that looks at each image on a per pixel basis and does its best to avoid using pixels from clouds.

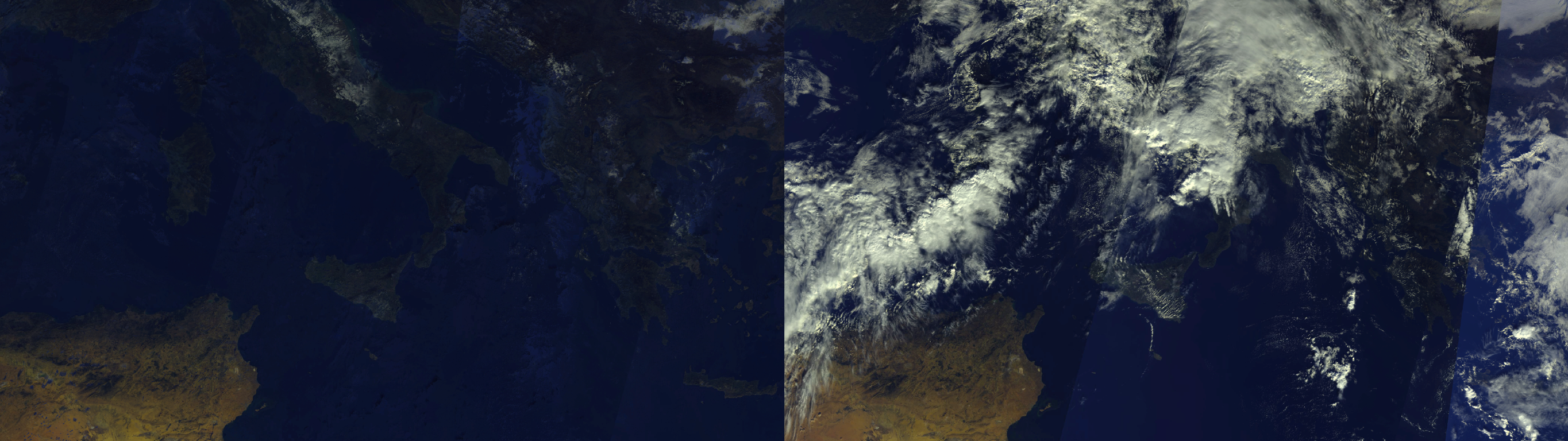

Here is an example over Italy that showcases how much land you can recover from the images that mostly get ignored.

Left: Without clouds

Right: Current image generation

Here is the source for this approach. It is by no means perfect, as you can clearly see artifacts in the water and over darker parts of land, but overall the landmass is much more visible. Also note that this approach is VERY slow atm. It's doing math, list sorting, and object generation on a per pixel basis. This can easily be parallelized though. You could work on parts of the image in parallel, then stitch them together at the end.

def fetchImage(args):

dateWithDelay = datetime.datetime.now(datetime.timezone.utc)

dateWithDelay = dateWithDelay-datetime.timedelta(hours=3)

from multiprocessing.pool import ThreadPool as Pool

def download_func(day):

time = dateWithDelay.strftime("%Y-%m-")+str(dateWithDelay.day-day).zfill(2)+"T00:00:00Z"

url = combineURL(args,"copernicus:daily_sentinel3ab_olci_l1_rgb_fulres",time)

print(f"Downloading Image from {time}...\nwith URl: {url}\n")

img = download(url)

return img

num_days = 7

with Pool(num_days) as pool:

imgs = pool.map(download_func, [i for i in range(1,num_days + 1)])

bg = Image.new('RGB', (args.width, args.height))

def dev(pixel):

cols = pixel[:4]

dev = max(cols) - min(cols)

return dev

def sm(pixel):

cols = pixel[:3]

#dev = max(cols) - min(cols)

sm = sum(cols)

if sm == 0:

return 255*3

return sm

real_images = list(filter(lambda x : x != None, imgs))

print("real: ", len(real_images))

for x in range(args.width):

for y in range(args.height):

pixels = [img.getpixel((x,y)) for img in real_images]

pixels.sort(key=sm)

sum_pixel = pixels[0] # get the lowest sum (least bright)

pixels.sort(key=dev)

dev_pixel = pixels[-1] # get the largest dev (darkest single chanel)

avg_pixel = (

int((sum_pixel[0] + dev_pixel[0])/2),

int((sum_pixel[1] + dev_pixel[1])/2),

int((sum_pixel[2] + dev_pixel[2])/2),

int((sum_pixel[3] + dev_pixel[3])/2),

)

bg.putpixel((x,y), avg_pixel)

colorGraded = white_balance(bg)

return colorGraded

The current approach to generating sentinel images is to overlay 4 days worth of images to "plug" the holes that they have (gaps in the coverage of an area for a given day). This approach creates a full image, but doesn't always leave you the "best" possible image, as the output is order dependent and doesn't account for which images have better land coverage.

I have my own approach at solving this that looks at each image on a per pixel basis and does its best to avoid using pixels from clouds.

Here is an example over Italy that showcases how much land you can recover from the images that mostly get ignored. Left: Without clouds Right: Current image generation

Here is the source for this approach. It is by no means perfect, as you can clearly see artifacts in the water and over darker parts of land, but overall the landmass is much more visible. Also note that this approach is VERY slow atm. It's doing math, list sorting, and object generation on a per pixel basis. This can easily be parallelized though. You could work on parts of the image in parallel, then stitch them together at the end.