The initial pass at implementing SourceRank 2.0 will be focused on realigning how scores are calculated and shown using existing metrics and data that we have, rather than collecting/adding new metrics.

The big pieces are:

- Scale the SourceRank between 0 and 100

- Usage and popularity metrics should only be considered within the package's ecosystem

- The scores of top-level dependencies of the latest version of a project should be taken into account

- The breakdown should be separated into three sections, each with its own score (Usage, Quality, Community)

- Only consider recent releases when looking at historical data (like semver validity)

- A good project with source hosted on GitLab or Bitbucket should have a similar score to one hosted on GitHub
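As a rough illustration of the first and fourth points, here is a minimal sketch of how three section scores could roll up into a single 0 to 100 number. The `Breakdown` class, the equal weighting and the clamping are all assumptions made for the example, not a decided design.

```python
from dataclasses import dataclass


@dataclass
class Breakdown:
    """Hypothetical container; each section is assumed to already be 0-100."""
    usage: float
    quality: float
    community: float

    def overall(self) -> int:
        # Assumption: the three sections are weighted equally.
        sections = [self.usage, self.quality, self.community]
        mean = sum(sections) / len(sections)
        # Clamp so the result always stays within the 0-100 range.
        return round(min(100.0, max(0.0, mean)))


example = Breakdown(usage=80, quality=60, community=70)
print(example.overall())
```

Keeping the three sections separate means the breakdown page can show each score on its own as well as the combined number.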

SourceRank 2.0

Below are my thoughts on the next big set of changes to "SourceRank", the metric that Libraries.io calculates for each project. It produces a number that can be used for sorting in lists and weighting in search results, as well as encouraging good practices in open source projects to improve quality and discoverability.

Goals:

History:

SourceRank was inspired by Google PageRank and was intended as a better alternative score to GitHub Stars.

The main element of the score is the number of open source software projects that depend upon a package.

If a lot of projects depend upon a package, that implies some other things about that package:

Problems with 1.0:

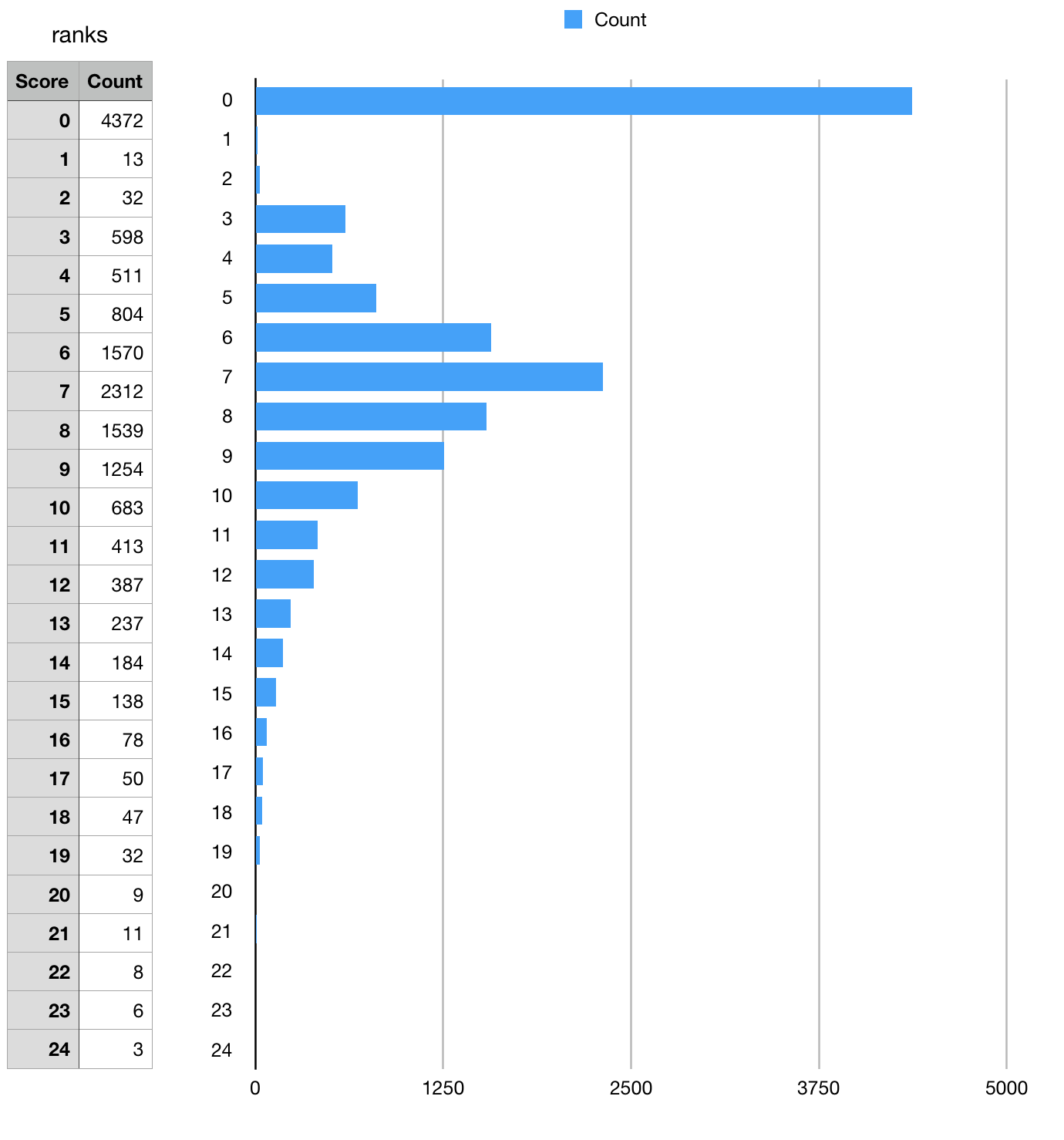

SourceRank 1.0 doesn't have a ceiling to the score; the project with the highest score is mocha, with a SourceRank of 32. When a user is shown an arbitrary number, it's very difficult to know if that number is good or bad. Ideally the number should be out of a total (e.g. 7/10), a percentage (e.g. 82%) or a grade of some kind (e.g. B+).

Some of the elements of SourceRank cannot be fixed by a project, as they judge actions from years ago at the same level as recent actions. For example, "Follows SemVer?" will punish a project for having an invalid semver number from many years ago, even if the project has followed semver perfectly for the past couple of years. Recent behaviour should have more impact than past behaviour.
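A minimal sketch of what "only recent releases count" could look like for the semver check. The one-year window, the `recent_semver_score` name and the simplified version pattern are assumptions for illustration; the pattern is looser than the full SemVer grammar.

```python
import re
from datetime import datetime, timedelta

# Simplified pattern (assumption): MAJOR.MINOR.PATCH with optional
# pre-release and build metadata. Not the complete SemVer 2.0.0 grammar.
SEMVER = re.compile(r"\d+\.\d+\.\d+(?:-[0-9A-Za-z.-]+)?(?:\+[0-9A-Za-z.-]+)?")


def recent_semver_score(releases, now, window_days=365):
    """releases: list of (version_string, published_at) tuples."""
    cutoff = now - timedelta(days=window_days)
    recent = [version for version, published in releases if published >= cutoff]
    if not recent:
        # No recent releases: how to score this case is left open here.
        return None
    valid = sum(1 for version in recent if SEMVER.fullmatch(version))
    return round(100 * valid / len(recent))


releases = [
    ("1.0", datetime(2012, 1, 1)),     # invalid, but too old to count
    ("2.0.0", datetime(2017, 6, 1)),
    ("2.1.0", datetime(2017, 10, 1)),
]
print(recent_semver_score(releases, now=datetime(2018, 1, 1)))
```

With this approach the invalid `1.0` release from years ago no longer drags the score down, because it falls outside the window.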

If a project solves a very specific niche problem, or if a project tends to be used within closed source applications a lot more than open source projects (LDAP connectors, payment gateway clients, etc.), then usage data within open source will be small and SourceRank 1.0 would rank it lower.

Related to small usage numbers, a project within a smaller ecosystem will currently get a low score when compared to projects in a much larger ecosystem, even if it is the most used project within its whole ecosystem (Elm vs JavaScript, for example).

Popularity should be based on the ecosystem which the package exists within rather than within the whole Libraries.io universe.
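One way to make popularity relative to the ecosystem is a percentile rank of dependent counts within each ecosystem. The sketch below assumes that approach; the function name, the input shape and the made-up counts are hypothetical, and a real implementation might combine several usage metrics.

```python
from bisect import bisect_left
from collections import defaultdict


def ecosystem_usage_scores(projects):
    """projects: list of (name, ecosystem, dependents_count) tuples.

    Returns {(ecosystem, name): score}, where the score is the share of
    other packages in the same ecosystem with strictly fewer dependents.
    """
    by_ecosystem = defaultdict(list)
    for _, ecosystem, dependents in projects:
        by_ecosystem[ecosystem].append(dependents)
    for counts in by_ecosystem.values():
        counts.sort()

    scores = {}
    for name, ecosystem, dependents in projects:
        counts = by_ecosystem[ecosystem]
        if len(counts) == 1:
            # Arbitrary choice for the sketch: a lone package scores 100.
            scores[(ecosystem, name)] = 100
            continue
        below = bisect_left(counts, dependents)  # packages strictly below
        scores[(ecosystem, name)] = round(100 * below / (len(counts) - 1))
    return scores


# Made-up counts purely for illustration.
projects = [
    ("a", "elm", 50), ("b", "elm", 5), ("c", "elm", 0),
    ("x", "npm", 50000), ("y", "npm", 50), ("z", "npm", 0), ("w", "npm", 0),
]
scores = ecosystem_usage_scores(projects)
print(scores[("elm", "a")], scores[("npm", "x")])
```

Here the most used Elm package and the most used npm package both land at the top of their own ecosystem, despite the large difference in raw counts.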

Quality or issues with dependencies of a project are not taken into account. If adding a package brings with it some bad quality dependencies then that should affect the score. In a similar way, the total number of direct and transitive dependencies should be taken into account.

Projects that host their development repository on GitHub currently get a much better score than ones hosted on GitLab, Bitbucket or elsewhere. Whilst being able to see and contribute to the project's development is important, where that happens should not influence the score based on the ease of access to that data.

SourceRank is currently only calculated at the project level, but in practice the SourceRank varies by version of a project as well for a number of quality factors.
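A minimal sketch of letting dependency quality feed into a project's score, assuming the top-level dependencies of the latest version already have their own 0 to 100 scores. The averaging, the `weight` value and both function names are assumptions, and the sketch does not cover the total dependency count.

```python
def dependency_score(dependency_scores):
    """Average score of the top-level dependencies (each assumed 0-100)."""
    if not dependency_scores:
        # Assumption: no dependencies means nothing to penalise.
        return 100
    return round(sum(dependency_scores) / len(dependency_scores))


def blend_with_dependencies(own_score, dependency_scores, weight=0.25):
    """Mix a project's own score with its dependency score.

    `weight` is a hypothetical tuning knob: the share of the final score
    that comes from the dependencies.
    """
    deps = dependency_score(dependency_scores)
    return round((1 - weight) * own_score + weight * deps)


# A project scoring 80 on its own, with one good and one poor dependency.
print(blend_with_dependencies(80, [90, 30]))
```

Only top-level dependencies are considered here because their own scores would already reflect their dependencies in turn.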

Things we want to avoid:

Past performance is not indicative of future results. When dealing with volunteers and open source projects where the license says "THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND", we shouldn't reward extra unpaid work

Metrics that can easily be gamed by doing things that are bad for the community, e.g. hammering the download endpoint of a package manager to boost the numbers

Relying too heavily on any metrics that can't be collected for some package managers, download counts and dependent repository counts are

Proposed changes:

Potential 2.0 Factors/Groupings:

Usage/Popularity

Quality

Community/maintenance

Reference Links:

SourceRank 1.0 docs: https://docs.libraries.io/overview#sourcerank

SourceRank 1.0 implementation:

Metrics repo: https://github.com/librariesio/metrics

Npms score breakdown: https://api.npms.io/v2/package/redis