It's unclear what is being asked for here, so closing the issue as invalid. However, feel free to reopen with more details to help us understand the request.

Closed ETERNALBLUEbullrun closed 4 months ago

LLVM has clang --analyze to run source code through the frontend, produce LLVM-IR, plus run the LLVM-IR through static analysis.

https://stackoverflow.com/questions/72496805/lift-x64-windows-executable-to-llvm-bitcode-and-then-compile-back-to-x32-one explains how to produce LLVM-IR from executables (for example, with https://github.com/lifting-bits/mcsema or https://github.com/avast/retdec).

The issue is just: a request to have a frontend=executable, so that clang --analyze can do static analysis of executables.

clang --analyze can catch lots of accidental (or deliberate) security issues for us.

LLVM has clang --analyze to run source code through the frontend, produce LLVM-IR, plus run the LLVM-IR through static analysis.

Correct, Clang has a static analyzer that can be run while producing build output.

The issue is just: a request to have a frontend=executable, so that clang --analyze can do static analysis of executables. clang --analyze can catch lots of accidental (or deliberate) security issues for us.

Clang already has this? https://clang-analyzer.llvm.org/ has more details on the usual way to run the analyzer, but you can also invoke it automatically via clang-tidy, or manually via command line flags: https://godbolt.org/z/6b5KMe15M

Oops, thought this was just with frontend=sources. So cool if can do executable analysis

@llvm/issue-subscribers-clang-static-analyzer

Author: TermuxFan (ETERNALBLUEbullrun)

Analysing already compiled executables is a whole other profession to analysing source code. Even translating machine code back into IR is a significant task.

Analysing already compiled executables is a whole other profession to analysing source code.

Once you produce LLVM-IR from the executable, do you not just use clang --analyze to perform the analysis?

Even translating machine code back into IR is a significant task.

https://github.com/lifting-bits/mcsema or https://github.com/avast/retdec can do this

@ETERNALBLUEbullrun: Clang Static Analyzer and Clang-tidy work on the C/C++ level.

LLVM has clang --analyze to run source code through the frontend, produce LLVM-IR, plus run the LLVM-IR through static analysis.

No, that's incorrect, the clang static analyzer works on clang AST, not LLVM IR. The clang --analyze command neither constructs nor consumes the LLVM IR. The clang static analyzer cannot be easily adapted to work with LLVM IR without clang AST; you'd need to throw away 90% of the tool to get there so you might as well start from scratch.

@ETERNALBLUEbullrun: Clang Static Analyzer and Clang-tidy work on the C/C++ level.

https://groups.google.com/g/llvm-dev/c/gYU2neC0Als has backend=C++ (converts LLVM-IR to C++)

want to convert LLVM bitcode files to cpp. I use these commands:

- clang -c -emit-llvm -fopenmp=libiomp5 oh2.c -o oh2.bc

- llc -march=cpp oh2.bc -o oh2.cxx

After this, does clang --analyze oh2.cxx allow analysis?

llc -march=cpp oh2.bc -o oh2.cxx

This does not look like a proper command to me. What should -march=cpp even mean???

clang -c -emit-llvm -fopenmp=libiomp5 oh2.c -o oh2.bc

If you have oh2.c as an input file already, then you can do the analysis on that file. Compiling it through LLVM and decompiling it with a tool that might just not even work (these commands definitely should not, the tools you linked prior actually might, but with varying levels of success) back to C(++) does not make any sense whatsoever.

Even if we had an oracle that could translate machine code from the compiled form back into IR (this is not that hard, but still unconventional) and from IR back into C++ (this part is hard, because the same optimised IR could correspond to many different source codes!), the static analysis reports from analysing such files would be useless, because the decompiled files are not the ones the project is built from. Normally, static analysis warnings are consumed to fix issues with the original source code.

llc -march=cpp oh2.bc -o oh2.cxx

This does not look like a proper command to me. What should -march=cpp even mean???

This is to output as C++ (sets backend=C++)

clang -c -emit-llvm -fopenmp=libiomp5 oh2.c -o oh2.bc

If you have oh2.c as an input file already,

Don't. That command was quoted just for context. Replace it with the command that converts executables to LLVM-IR, followed by llc -march=cpp oh2.bc -o oh2.cxx && clang --analyze oh2.cxx

After this, does clang --analyze oh2.cxx allow analysis?

Technically, yes, you'll get some results. Practically, no, the results will probably be terrible.

Our entire static analysis engine is built on the assumption that the code "makes sense" to a human. For example, here's one of our most basic warnings (https://godbolt.org/z/YdbqdqKrn):

    int foo(int x) {
      int y = 3;
      if (x == 0) // note: Assuming 'x' is equal to 0
        y = 5;
      return y / x; // warning: Division by zero
    }

    int bar(int x) {
      int y = 3;
      if (x == 7)
        y = 5;
      return y / x; // no warning here
    }

Is it necessarily true that division by zero can occur in foo()? No, the true-branch of the if-statement may be dead code because nobody ever passes 0 into foo().

Is it necessarily true that bar() never divides by zero? No, there's no protection against that anywhere in bar(). If we see it invoked with a 0 we'll emit a warning, but as-is we can't tell.

So either way, we can't tell whether division by zero is actually going to happen.

But from our perspective, functions foo() and bar() are fundamentally different. The difference is: the source code of foo() doesn't make sense, whereas the source code of bar() does make sense. Indeed, if the function foo() has no division-by-zero bug, then the true-branch of the if-statement is dead code, and dead code doesn't make sense. So no matter what, we've found "a" bug. And the note attached to the warning communicates this logic: "If x cannot be zero, why do you perform this check in the first place?". The same cannot be said about bar(); it's perfectly normal code and all code paths can be executed without ever running into division by zero.
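The same reasoning carries over to pointer checks. Below is a minimal sketch, assuming the analyzer's null-dereference checker follows the logic described above. The function names are hypothetical, and the diagnostics mentioned in the comments are expectations rather than captured analyzer output.

```c
#include <stddef.h>

/* The check tells the analyzer that 'p' may be null here, yet the
 * dereference below is reached on that path too. Either the check is
 * dead code or the dereference is a bug, so a warning is expected. */
int read_checked(const int *p) {
    int fallback = 3;
    if (p == NULL)
        fallback = 5;
    return *p + fallback;
}

/* No check, so nothing suggests that 'p' may be null: no warning is
 * expected, although a null argument would still crash at run time. */
int read_unchecked(const int *p) {
    int fallback = 3;
    return *p + fallback;
}
```

Both functions behave identically for valid pointers; only the presence of the check changes what the analyzer is entitled to assume.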

So the idea is, when you decompile low-level IR back to a high-level language, you end up with a lot of code that makes no sense whatsoever. It will be full of dead code and unnecessary checks that account for a bunch of niche corner cases that the original programmer never intended to consider because they were obviously impossible.

So you might be able to find some bugs this way, but I predict terrible results: much higher false positive and false negative rates than a direct analysis of LLVM IR (or of the original source code) would yield.

So the idea is, when you decompile low-level IR back to a high-level language, you end up with a lot of code that makes no sense whatsoever. It will be full of dead code and unnecessary checks that account for a bunch of niche corner cases that the original programmer never intended to consider because they were obviously impossible.

So you might be able to find some bugs this way, but I predict terrible results: much higher false positive and false negative rates than a direct analysis of LLVM IR (or of the original source code) would yield.

Expect the opposite result; most release builds pass -DNDEBUG -O2, which removes most of the provably dead (unreachable) code from the executable (such as if(!NDEBUG) { if(x = 0) { throw runtime_exception("0 should != x"); } }, which should give warning: using the result of an assignment as a condition, but is optimized out), and link time optimization can remove the whole if(x == 0) block if it is not executed, with the result that analysis does not catch this.

How does the decompile/cross-compile process produce extra analysis, unless it is from some debug instrumentation (such as g++ -fsanitize=address)?

The optimizer would only carry the code even further away from the original intention. It's not guaranteed to optimize every extra branch added by codegen, and it can definitely introduce even more problems.

Inlining comes to mind: an inlined null check in the caller function will be treated very differently by the static analyzer, than a null check inside a nested function call. The former indicates that people do pass null to this function, the latter only indicates that people pass null to the callee, from which it doesn't follow that it has to be coming from this caller. So if the optimizer can't decide whether the null check is dead code, that'd be an extra false positive.
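To make the inlining point concrete, here is a minimal sketch with hypothetical function names. The comments describe how the analyzer is expected to treat each form, based on the reasoning above; they are not captured output, and actual diagnostics may vary by version.

```c
#include <stddef.h>
#include <string.h>

/* Defensive check inside the callee: some caller may pass null, but
 * not necessarily the one below. */
static size_t length_or_zero(const char *s) {
    if (s == NULL)
        return 0;
    return strlen(s);
}

/* Source form: the null check is hidden inside a nested call, so it
 * says little about what this particular caller passes. */
size_t score_nested(const char *s) {
    size_t n = length_or_zero(s);
    return n + (size_t)(unsigned char)s[0];
}

/* What the same logic might look like after inlining and decompilation:
 * the check now sits in this function, directly ahead of the
 * dereference, which reads as "callers do pass null here". */
size_t score_inlined(const char *s) {
    size_t n;
    if (s == NULL)
        n = 0;
    else
        n = strlen(s);
    return n + (size_t)(unsigned char)s[0];
}
```

The two functions compute the same value for every valid string, yet the second form invites a null-dereference report that the original author never earned.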

On top of that, the optimizer often simply optimizes the bugs away. It already assumes that undefined behavior - the thing we're looking for - simply doesn't exist. E.g., good luck finding this division by zero in the optimized code: https://godbolt.org/z/1br37EcWT
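A hypothetical illustration of this effect follows. It is not the code behind the link above, just a sketch of the same idea: the division is visible in the source, but an optimizing compiler is allowed to delete it.

```c
/* On the x == 0 path the division is undefined behavior, and its
 * result is never used. An optimizer may therefore drop the whole
 * branch, leaving machine code that simply returns 5. */
int always_five(int x) {
    if (x == 0) {
        int unused = 10 / x; /* division by zero on this path */
        (void)unused;
    }
    return 5;
}
```

In the source, the check followed by the division is exactly the pattern the analyzer looks for. In an optimized binary there may be nothing left to lift back into IR or C++ that hints at it.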

The problem is that compiled code often loses most of the structure and meaning that the developers originally intended with their structuring of the source code. This is the only promise and benefit of high-level languages, that you can write code on a different intelligence level and have tools deal with the rest.

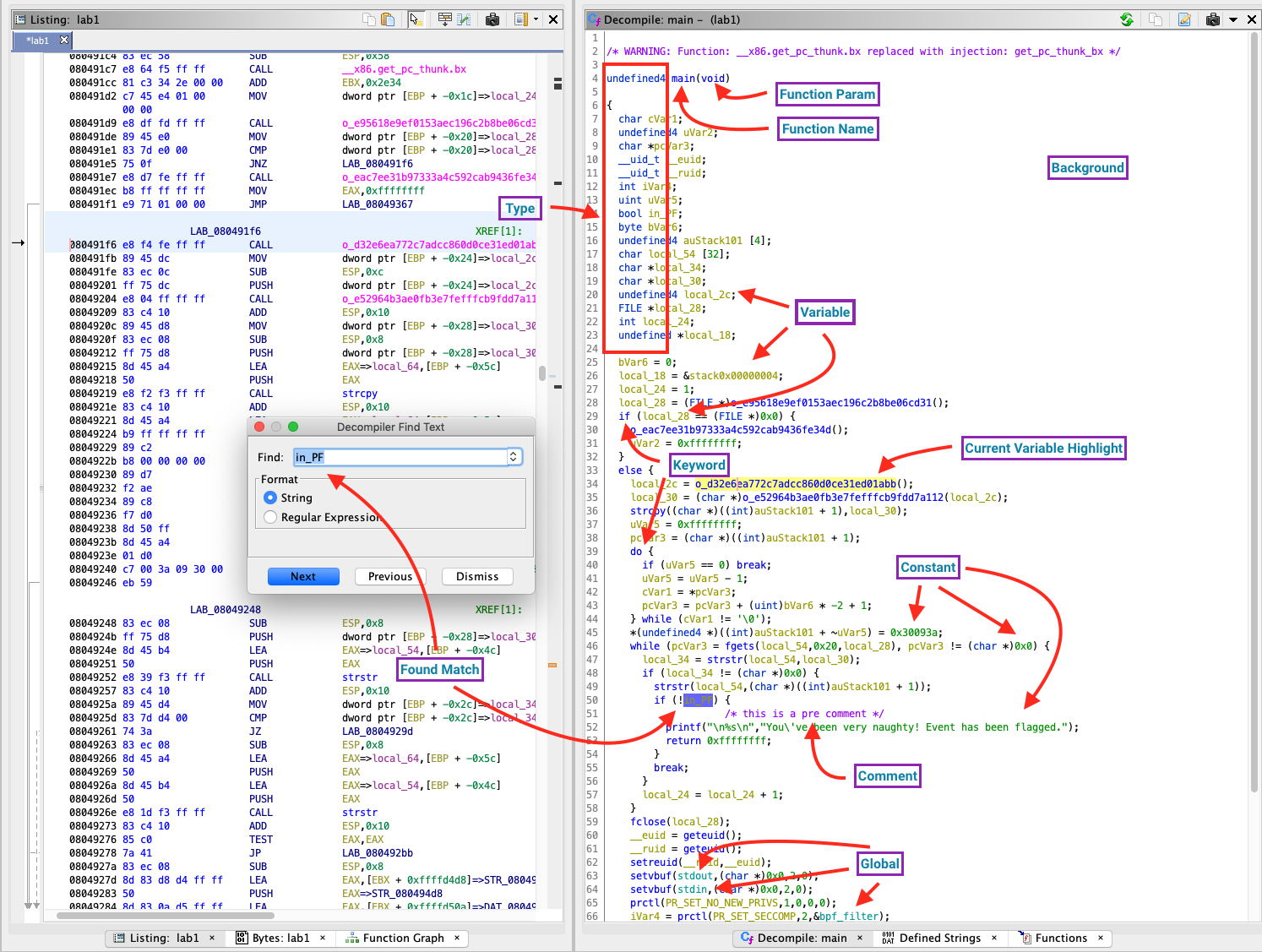

Here is a screenshot from IDA, a for-profit reverse engineering tool:

And here is a similar viewport from NSA's Ghidra, which is open-source:

The interesting bits are on the right. Now let's suppose some static analyser warning tells me that local_54 is indexed by an offset uVar5 which can overflow. What would the user be able to gather from this?

In fact, my problem with the understanding here is that it is unclear exactly what archetype of user we are hypothetically talking about. Are we to target, with this suggestion, global elite reverse engineering pros? Or are we specifically aiming for malware analysis? (I saw some issue related to cxxVirus(?) mentioned here.) The codes in the screenshots are likely dumb examples where the "only" obfuscation that happened was what your normal everyday compiler would do all the time. I would expect malware developers with intent to use even more capable obfuscators, which would just defeat the purpose of using this "syntax reforging for the sake of static analysis" even more...

If someone does want to make tooling specifically targeted for accurate static analysis (of whatever purpose) of compiled binaries, then a whole new pipeline and design model would be needed.

From https://lists.llvm.org/pipermail/llvm-dev/2016-March/096809.html : Must recompile LLVM to have backend=C++

If your g++/clang++ supports this, can do clang -S -emit-llvm foo.gas -o foo.ll && clang++ -Os -march=cpp foo.ll -o foo.cxx && clang++ --analyze foo.cxx, which should not just act as a regex to substitute disassembly (such as x86 GAS) with C++, but, rather, optimizes to produce the smallest C++ (due to -Os) thus inlined code is coalesced (such as above mentioned inline null-checks) into functions (thus space use is less).

The benefit of -Os for virus analysis is that it produces C++ with all of the inlined/unrolled code compressed back to smallest C++, which is what clang++ --analyze expects from you.

If the code was obfuscated this should fail miserably (which will raise tons of warnings from clang++ --analyze, versus if the source was an honest executable.)

link time optimization can remove the whole if(x == 0) block if it is not executed, with the result that analysis does not catch this.

This is only true for a limited set of possibilities. This might be true if you are aggressively inlining and/or optimising statically linked programs. For shared libraries, it is invalid to tear the null-check out, because you can not prove that every call site will pass an appropriate non-null.
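A minimal sketch of that distinction, with hypothetical function names. Whether a given compiler actually removes the internal check depends on its optimization settings; the comments only state what it is permitted to do.

```c
#include <stddef.h>

/* Internal linkage: every call site is visible in this file, so the
 * optimizer may prove the check redundant and delete it. */
static int load_internal(const int *p) {
    if (p == NULL)
        return -1;
    return *p;
}

/* The only caller of load_internal() always passes a valid address. */
int sum_pair(int a, int b) {
    return load_internal(&a) + load_internal(&b);
}

/* External linkage, e.g. exported from a shared library: unknown
 * callers may pass null, so the check has to stay in the binary. */
int load_exported(const int *p) {
    if (p == NULL)
        return -1;
    return *p;
}
```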

which should fold inlined code (such as above mentioned inline null-checks) into functions

The benefit of -Os for virus analysis is that it produces C++ with all of the inlined/unrolled code compressed back to small C++, which is what clang++ --analyze expects from you.

I don't think it is mathematically (or, rather, computationally) possible to figure out that a mov eax, 5; ret (as in @haoNoQ's example) came from a code that contained some sort of a null-check.

Specifying the CPP backend results in a not a core tier target error, from here:

https://github.com/llvm/llvm-project/blob/80e61e38428964c9c9abac5b7a59bb513b5b1c3d/llvm/CMakeLists.txt#L999-L1021

CppBackend was still there in 3.8.1:

https://github.com/llvm/llvm-project/blob/21e22ad709e16a8943268754eccfa6b536b8322b/llvm/CMakeLists.txt#L209

But is not there in 3.9.0: https://github.com/llvm/llvm-project/blob/ce25e1a77e895c7ef32c156f6cff3012f6c5a946/llvm/CMakeLists.txt#L221-L235

It is now easy to find the commit which removed this feature: https://github.com/llvm/llvm-project/commit/0c145c0c3aece99865a759b71fe5fbb913d7ce70

Also, it looks to me like this CppBackend never generated C++ code that would compile back to its input IR (which is the, albeit rather sci-fi, goal you are asking for). Rather, it generated C++ code that calls LLVM's APIs (!!!) in order to make LLVM produce the "same" (-ish, at least this was the goal) IR on the output. So, basically, it was a glorified test case generator, at best.

Just look at the expected behaviour of this CppBackend... The input is the source IR in this file, and the expected output of the program is the C++ code in the ; CHECK: lines. It's all LLVM API calls all the way...

Used wrong word. Folds -> coalesces.

I don't think it is mathematically (or, rather, computationally) possible to figure out that a mov eax, 5; ret (as in @haoNoQ's example) came from a code that contained some sort of a null-check.

Not if that is the whole source without other contexts.

But LLVM has a vast infrastructure which parses various sources, plus backend goals such as -O2 (stable + fastest) or -Os (smallest), with complex formulas (much more complex than the original C++'s code generation which was for the most part just a graph search algorithm (such as Dijkstra's) with no purpose other than to produce the first possible output which does what the input instructs to do.)

in order to make LLVM produce the "same" (-ish, at least this was the goal) IR on the output. So, basically, it was a glorified test case generator, at best.

But the purpose of this is just to produce tests; if you have an unknown executable, disassemble to produce x86 GAS, use LLVM GAS frontend to produce LLVM-IR, with backend=C++ to produce C++ (or other language suitable to LLVM's static analysis) to perform tests. This is not just to detect deliberate vulnerabilities, but also just for random closed source executables which you do not want to execute unless you can test.

It's all LLVM API calls

This can not reproduce the original sources, but is this not enough to do static analysis?

But is not there in 3.9.0:

From the LLVM lists post:

During build, include CPP target in “LLVM_TARGETS_TO_BUILD” cmake variable.

Does this restore the backend=C++ (-march=cpp / -march=cxx) to us?

Should submit a new issue such as: Default should include this?

Lots of search results talk about other uses this backend=C++ has, such as:

https://labs.engineering.asu.edu/mps-lab/resources/llvm-resources/llvm-utilities-and-hacks/

https://groups.google.com/g/llvm-dev/c/gYU2neC0Als

https://stackoverflow.com/questions/64639639/where-llc-march-cpp-option-is-available-or-not

https://stackoverflow.com/questions/5180914/llvm-ir-back-to-human-readable-source-language

https://stackoverflow.com/questions/36322690/how-to-generate-llvm-api-code-via-ir-code-in-current-versions

https://stackoverflow.com/questions/67945430/what-is-the-alternative-to-llc-march-cpp-in-llvm-12

The fact that asu.edu references uses of backend=C++ suggests this has value

This can not reproduce the original sources, but is this not enough to do static analysis?

No. Because it will static-analyse the behaviour of LLVM's API, which we can already do by running CSA on LLVM's source code.

If anything, developing a static analyser that works on the IR level (CSA is not one!) would be the way forward.

The cross compiler has most of the code to allow compiled analysis, as the formulas to transform, for instance, Java Bytecode to C++, include most of the formulas for hex. The static analysis is so extensive and valuable, that this should have uses for virus scanners, as infections have intentional instances of lots of the unintentional vulnerabilities (such as buffer overflows) that your static analysis scans for us. Have suggested to developers of several virus scanners to work to retool your static analysis tools to do this for us, and developers from Cisco-Talos have considered this: https://github.com/Cisco-Talos/clamav/issues/1206