Open bianchi-dy opened 3 years ago

The easiest hacky way would be to use init="random". It won't be great, but it will get you something in the interim. I'll have to think a little harder about what could be going astray and get back to you for a better solution.

Update: I've tried init="random", metric="cosine" (because I'm actually using cosine distances) so far, and I'm still getting the same error.

I'm afraid I can't help too much more without being able to reproduce the problem at this end. One catch might be the disconnection distance. You could explicitly set that to something large when working with cosine distance. disconnection_distance=3.0 ought to do the job if that is what is causing the problem.
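As a rough sanity check on why a value like 3.0 would count as "large" here, the sketch below uses plain NumPy (not UMAP itself) to show that cosine distance, taken as 1 minus cosine similarity, never exceeds 2. The random data and variable names are made up for illustration; how UMAP applies the threshold internally is not shown.

```python
import numpy as np

# Hypothetical data: random vectors plus their negations, so that some
# pairs point in exactly opposite directions (the worst case for cosine).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 16))
X = np.vstack([X, -X])

# Cosine distance = 1 - cosine similarity, computed on unit-length rows.
X_unit = X / np.linalg.norm(X, axis=1, keepdims=True)
cosine_dist = 1.0 - X_unit @ X_unit.T

max_dist = cosine_dist.max()
threshold = 3.0  # the value suggested in the reply above

print(f"largest cosine distance: {max_dist:.4f}")
print(f"below threshold {threshold}: {max_dist < threshold}")
```

Since no pair can be further apart than 2, a threshold of 3.0 should never mark any pair as disconnected on the basis of distance alone.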

I don't have a solution but here is a small example that reproduces the problem for me

    import umap
    import umap.utils as utils
    import umap.aligned_umap
    import sklearn.datasets
    from sklearn.datasets import fetch_20newsgroups
    from sklearn.feature_extraction.text import CountVectorizer

    dataset = fetch_20newsgroups(subset='all',
                                 shuffle=True, random_state=42)
    vectorizer = CountVectorizer(min_df=5, stop_words='english')
    word_doc_matrix = vectorizer.fit_transform(dataset.data)

    constant_dict = {i: i for i in range(word_doc_matrix.shape[0])}
    constant_relations = [constant_dict for i in range(1)]

    neighbors_mapper = umap.AlignedUMAP(
        n_components=2,
        metric='hellinger',
        alignment_window_size=2,
        alignment_regularisation=1e-3,
        random_state=42,
        init='random',
    ).fit(
        [word_doc_matrix for i in range(2)], relations=constant_relations
    )

Thanks for the reproducer. I'll try to look into this when I get a little time.

I've fixed this issue with pull request #875. Basically the problem was in umap_.py line 919:

result[n_samples > 0] = float(n_epochs) / n_samples[n_samples > 0]

where the guard part of the statement didn't match the calculation. The easiest fix was casting n_samples from np.float32 to np.float64 to match the type of result.

result[n_samples > 0] = float(n_epochs) / np.float64(n_samples[n_samples > 0])

This could have alternatively been fixed by refining the guard part of the statement to something like:

result[n_samples/n_epochs > 0] = float(n_epochs) / n_samples[n_samples/n_epochs > 0]

but that solution looks worse.
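To illustrate the kind of guard/calculation mismatch described above, here is a minimal NumPy sketch. It is not the UMAP code: the array values are invented, and it only shows one way a float32 value can pass an `> 0` check and still produce an unusable quotient, and why casting to float64 avoids that.

```python
import numpy as np

n_epochs = 200

# Hypothetical float32 values: the first is tiny but still nonzero,
# so it passes the "> 0" guard.
n_samples = np.array([1e-40, 0.0, 50.0], dtype=np.float32)
mask = n_samples > 0

# A Python float divided by a float32 array stays float32, so the
# quotient for the tiny entry overflows to inf.
with np.errstate(over="ignore"):
    quotient32 = float(n_epochs) / n_samples[mask]

# Casting the divisor to float64 first keeps the quotient finite.
quotient64 = float(n_epochs) / n_samples[mask].astype(np.float64)

print(quotient32.dtype, quotient32)
print(quotient64.dtype, quotient64)
```

The guard selects the same elements in both cases; only the precision of the division changes, which is in the spirit of the one-line cast in the fix.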

Hi! I'm a relatively new UMAP user, using Aligned UMAP to visualize the results of K-means clustering on a corpus of text documents across time.

As each time window has a number of documents that can be found in the succeeding time window, I generate a dictionary of relations and also obtain the distances of the documents from one another using the following process:

My Aligned UMAP settings are as follows:

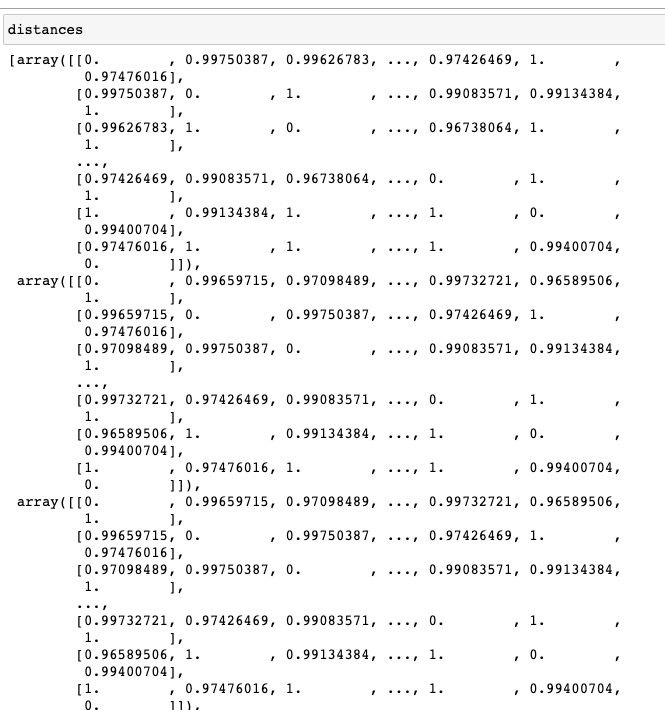

My distances array looks like this:

Previously this approach gave me no issues. However, I've been testing out new results and have been getting the error below over and over.

and the following traceback, which tells me I'm dividing by zero somewhere I'm not supposed to be?

Any ideas as to what might be causing this error or how to fix it? My suspicion is that it's to do with distances, but I'm not sure if I need to perform some sort of normalization or pre-processing aside from turning TFIDF similarity scores into distances. Unfortunately this error came up the night before a deadline I was intending to use Aligned UMAP for, so it'd be great if anyone could point me in the right direction to solving this even in a hacky way.