> I've come up with something incredibly useful that I'd like to share. 🙂
>
> I have to write tons of integration/functional tests for JSON APIs, and I found this to be extremely cumbersome - you get these big, structured JSON responses, and for the most part, you just want to check them for equality with your expected JSON data. But then there are all these little annoying exceptions - for example, some of the APIs I'm testing return date or time values, and in those cases, all I care is that the API returned a date or a number. There is no right or wrong value, just a type-check, sometimes a range-check, etc.
>
> Simply erasing the differences before comparison is one way to go - but it's cumbersome (for one, because I'm using TypeScript, and so it's not easy to get the types to match) and misses the actual type/range-check, unless I hand-code those before erasing them.
>
> It didn't spark joy, and so I came up with this, which works really well:

This is very interesting! It is a neat way to provide an extension point to the deep equal algorithm. It would require us to maintain the deep equal algorithm on our side rather than relying on a battle-tested third-party library, but why not, after all. It can start as a simple fork/copy-paste.

> The major caveat/roadblock for adoption of this feature as something "official" is the reporter - if comparison fails for some other property, the diff unfortunately will highlight any differing actual/expected values as errors.
>
> That's kind of where this idea is stuck at the moment.
>
> I'm definitely going to use it myself, and for now just live with this issue, since this improves the speed of writing and the readability of my tests by leaps and bounds.
>
> But the idea/implementation would need some refinement before this feature could be made generally available.

> The diff output right now is based on JSON, which is problematic in itself - the reporter currently compares JSON serialized object graphs, not the native JS object graphs that `fast-deep-equal` actually uses to decide if they're equal. It's essentially a naive line diff with `JSON.stringify` made canonical.

I assume you are talking about the diff reporter. I don't see that as a problem; on the contrary, it is an easy way to handle 95% of the cases.
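For context, "`JSON.stringify` made canonical" presumably means serializing with a stable key order, so that two objects differing only in key order produce the same lines. A minimal sketch of that idea follows; `canonicalize` and `canonicalStringify` are hypothetical names, and this is not the reporter's actual code:

```javascript
// Hypothetical helper: rebuild plain objects with their keys sorted, recursively.
// Values that define toJSON (e.g. Date) are left alone so JSON.stringify can handle them.
const canonicalize = (value) => {
  if (Array.isArray(value)) {
    return value.map(canonicalize);
  }
  if (value !== null && typeof value === 'object' && typeof value.toJSON !== 'function') {
    return Object.fromEntries(
      Object.keys(value)
        .sort()
        .map((key) => [key, canonicalize(value[key])])
    );
  }
  return value;
};

// Hypothetical helper: one property per line, ready for a line-based string diff.
const canonicalStringify = (value) => JSON.stringify(canonicalize(value), null, 2);

const left = canonicalStringify({ b: 1, a: { d: 2, c: 3 } });
const right = canonicalStringify({ a: { c: 3, d: 2 }, b: 1 });
console.log(left === right);
```

With both sides serialized this way, a plain line diff only reports lines whose values actually differ.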

> Something like node-inspect-extracted would work better here - this formatter can be configured to produce canonical output as well, and since all the JSON diff function in `jsdiff` appears to do is a line-diff on (canonical) JSON output, I expect this would work just as well?
>
> The point here is, the assertion API actually lets you return a different `actual` and `expected` from the ones that were actually compared by the assertion - and so, this would enable me to produce modified object graphs, in which values that were compared by checks could be replaced with e.g. identical Symbols, causing them to show up "anonymized" as something like `[numeric value]` or `[Date instance]` in the object graph.

I am not sure using another library would solve the problem. You would get more options to control the serialization, but either way it comes down to associating a token list with a property (which you can also do with `JSON.stringify`):

```Javascript
const symbol = Symbol('meta');

const check = {
    // the custom check would live here
    [symbol]: function () {
    },
    // JSON.stringify calls toJSON and serializes its return value instead of the object
    toJSON() {
        return '[META]'
    }
};

const obj = {
    key: 'value',
    keyWithCustomCheck: check
}

console.log(JSON.stringify(obj))
// > {"key":"value","keyWithCustomCheck":"[META]"}
```

We could replace `JSON.stringify` with Node's `util.inspect` and then run the string diff algorithm on the resulting serialization, which would probably make things nicer in some ways. But I don't think that would solve the problem.

The difficulty is that the actual object would not carry the Symbols (or metadata), so you would need to use the expected value as a kind of blueprint to visit the nodes of the actual value. That makes things way more complicated: you go from a simple, well-known string diff algorithm to a totally different tree diff algorithm...

I think you would need to use something like deep-object-diff in the first place and then work on its output.

That's always the trade-off: how much effort you want to make compared to the added value. As I said, I believe the simple string diff has a nice output in 95% of the cases. It remains a very interesting area of investigation though.
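To make the "expected value as a blueprint" idea more concrete, here is a minimal sketch of such a walk. It is only an illustration under assumptions: `CHECK`, `check`, `isCheck`, and `anonymize` are hypothetical names (none of this is zora's API), and only plain objects and arrays are traversed. Wherever `expected` holds a check that passes, the same placeholder is written on both sides, so a later string diff would not flag that property.

```javascript
// Hypothetical marker key and helper, not part of any library.
const CHECK = Symbol('check');
const check = (predicate, label = '[checked]') => ({ [CHECK]: predicate, label });
const isCheck = (value) =>
  value !== null && typeof value === 'object' && typeof value[CHECK] === 'function';

// Returns [actualForDiff, expectedForDiff], using `expected` as the blueprint.
function anonymize(actual, expected) {
  if (isCheck(expected)) {
    // Passing check: identical placeholder on both sides. Failing check: keep the actual value.
    return expected[CHECK](actual)
      ? [expected.label, expected.label]
      : [actual, expected.label];
  }
  const traversable = (v) => v !== null && typeof v === 'object' && !(v instanceof Date);
  if (!traversable(actual) || !traversable(expected) ||
      Array.isArray(actual) !== Array.isArray(expected)) {
    return [actual, expected]; // leaves and mismatched shapes are left untouched
  }
  const outActual = Array.isArray(actual) ? [] : {};
  const outExpected = Array.isArray(expected) ? [] : {};
  for (const key of new Set([...Object.keys(actual), ...Object.keys(expected)])) {
    const [a, e] = anonymize(actual[key], expected[key]);
    if (key in actual) outActual[key] = a;
    if (key in expected) outExpected[key] = e;
  }
  return [outActual, outExpected];
}

const [actualForDiff, expectedForDiff] = anonymize(
  { id: 1, created: new Date(), name: 'x' },
  { id: 1, created: check((v) => v instanceof Date, '[Date]'), name: 'y' }
);
console.log(JSON.stringify(actualForDiff));
console.log(JSON.stringify(expectedForDiff));
```

In this example only `name` differs between the two results. Note that string placeholders have the weakness discussed in this thread: an actual value that happens to equal the placeholder string would be indistinguishable from a passed check.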

Going back to your example, I would simply do something like

integration test example

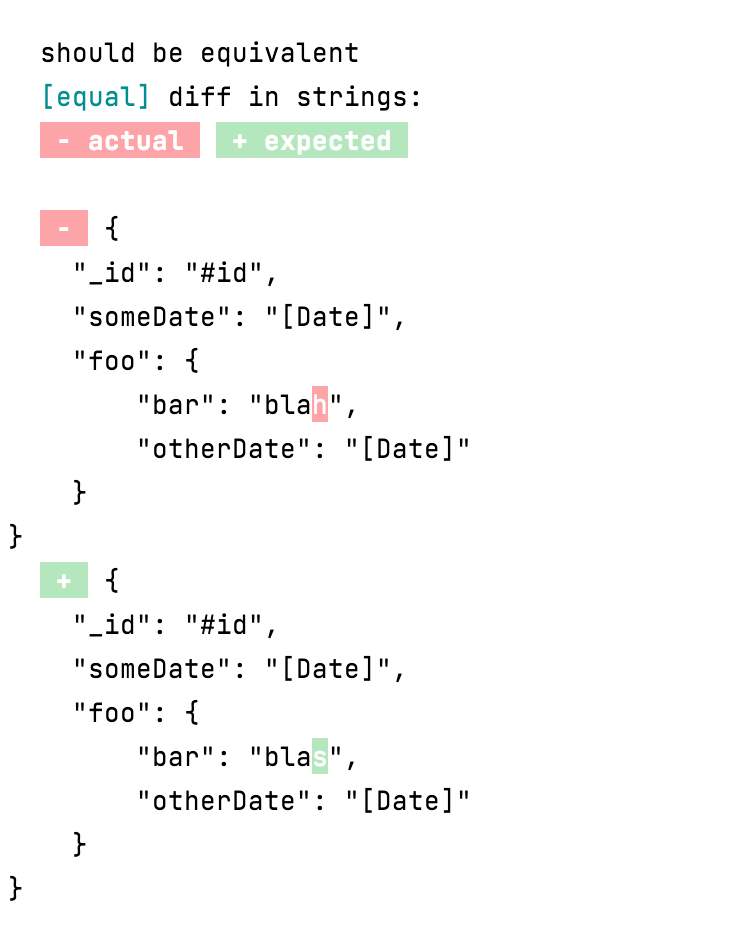

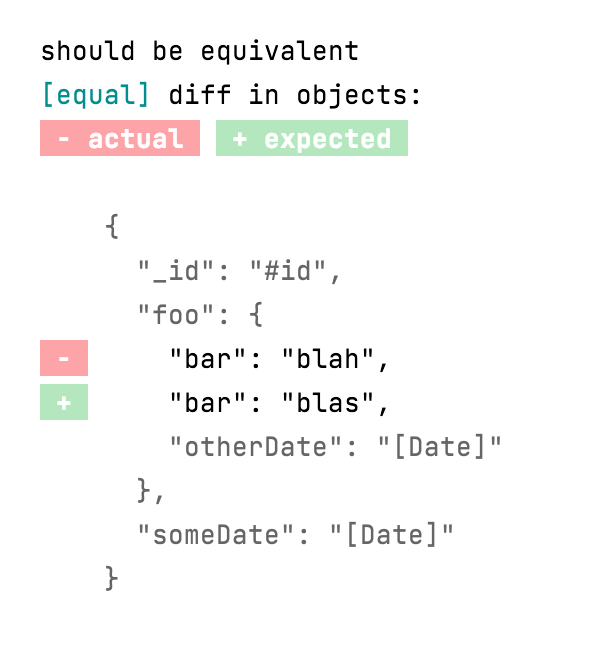

```Javascript import {test} from 'zora'; const isDate = (value) => value?.constructor === Date; const createReplacer = () => { let ctx; return (key, value) => { if (typeof value === 'object') { ctx = value; } // you need to go with ctx[key] rather than "value" because value is already serialized for things like Date if (isDate(ctx?.[key])) { return '[Date]'; } if (key === '_id') { return '#id'; } return value; }; }; const createCompareFn = (t) => (a, b) => { // comparing string t.eq(JSON.stringify(a, createReplacer(), 4), JSON.stringify(b, createReplacer(), 4)); // or comparing objects but removing the moving parts if you prefer t.eq(JSON.parse(JSON.stringify(a, createReplacer(), 4)), JSON.parse(JSON.stringify(b, createReplacer(), 4))); }; test(`some json`, (t) => { const compare = createCompareFn(t); const obj1 = { _id: 'asdfjalkdfj', someDate: new Date(Math.random() * 10e6), foo: { bar: 'blah', otherDate: new Date(Math.random() * 10e6) } }; const obj2 = { _id: 'adfsdfsdf', someDate: new Date(Math.random() * 10e6), foo: { bar: 'blas', otherDate: new Date(Math.random() * 10e6) } }; compare(obj1, obj2) }); ```which gives you in string mode

or in object mode

It does not have the assertive part ("it is a date, and so on"), but that is not something important here, is it?

I've come up with something incredibly useful that I'd like to share. 🙂

I have to write tons of integration/functional tests for JSON APIs, and I found this to be extremely cumbersome - you get these big, structured JSON responses, and for the most part, you just want to check them for equality with your expected JSON data. But then there are all these little annoying exceptions - for example, some of the APIs I'm testing return date or time values, and in those cases, all I care is that the API returned a date or a number. There is no right or wrong value, just a type-check, sometimes a range-check, etc.

Simply erasing the differences before comparison is one way to go - but it's cumbersome (for one, because I'm using TypeScript, and so it's not easy to get the types to match) and misses the actual type/range-check, unless I hand-code those before erasing them.

It didn't spark joy, and so I came up with this, which works really well:

Now I can have a code layout for `expected` that matches the `actual` data structure I'm testing - just sprinkle with `check`, anywhere in the `expected` data structure, and those checks will override the default comparison for values in the `actual` data structure.

It's an extension to `Assert.equal`, along with a helper function `check`, which basically just creates a "marker object", so that the comparison function internally can distinguish checks on the `expected` side from other objects/values.

In the example here, I'm using the `is` NPM package - most of the functions in this library (and similar libraries) work out of the box, although those that require more than one argument need a wrapper closure, e.g. `check(v => is.within(v, 0, 100))`. (This could look nicer with some sort of wrapper library, e.g. `check.within(0, 100)` - but it works just fine as it is.)

I'm posting the code, in case you find this interesting:
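As a rough illustration of the marker-object idea described above (this is a sketch, not the code from this post), a `check` helper and a comparison that honors it could look like the following. `CHECK` and `isCheck` are hypothetical names, and this simplified `compare` handles only primitives, Dates, arrays, and plain objects, far less than `fast-deep-equal` covers.

```javascript
// Hypothetical marker key: lets compare() tell checks apart from ordinary expected values.
const CHECK = Symbol('check');

// Wraps a predicate in a "marker object".
const check = (predicate) => ({ [CHECK]: predicate });

const isCheck = (value) =>
  value !== null && typeof value === 'object' && typeof value[CHECK] === 'function';

// Simplified deep equality in which a check on the expected side overrides the default comparison.
function compare(actual, expected) {
  if (isCheck(expected)) return Boolean(expected[CHECK](actual));
  if (actual === expected) return true;
  if (actual === null || expected === null ||
      typeof actual !== 'object' || typeof expected !== 'object') {
    // Only remaining way for two non-identical primitives to match: both are NaN.
    return Number.isNaN(actual) && Number.isNaN(expected);
  }
  if (actual instanceof Date || expected instanceof Date) {
    return actual instanceof Date && expected instanceof Date &&
      actual.getTime() === expected.getTime();
  }
  if (Array.isArray(actual) !== Array.isArray(expected)) return false;
  const keys = Object.keys(expected);
  if (Object.keys(actual).length !== keys.length) return false;
  return keys.every((key) =>
    Object.prototype.hasOwnProperty.call(actual, key) && compare(actual[key], expected[key]));
}

const response = { id: 7, created: new Date(), score: 42 };
console.log(compare(response, {
  id: 7,
  created: check((v) => v instanceof Date),   // type check only
  score: check((v) => v >= 0 && v <= 100),    // range check
}));
```

Predicates from a library such as `is` could be passed to `check` directly when they take a single argument, as described above.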

As you can see, `compare` is a verbatim copy/paste of `fast-deep-equal`, which is a bit unfortunate - the function is recursive, so there is no way to override its own internal references to itself, hence no way to just expand this function with the 3 lines I added at the top. The feature itself is just a few lines of code and some type-declarations.

Note that, in my example above, I've replaced `Assert.equal` with my own assertions - not that interesting, but just to clarify for any readers:

I would not propose this as a replacement, but probably as a new addition, e.g. `Assert.compare` or something.

The major caveat/roadblock for adoption of this feature as something "official" is the reporter - if comparison fails for some other property, the diff unfortunately will highlight any differing actual/expected values as errors.

That's kind of where this idea is stuck at the moment.

I'm definitely going to use it myself, and for now just live with this issue, since this improves the speed of writing and the readability of my tests by leaps and bounds.

But the idea/implementation would need some refinement before this feature could be made generally available.

The diff output right now is based on JSON, which is problematic in itself - the reporter currently compares JSON serialized object graphs, not the native JS object graphs that `fast-deep-equal` actually uses to decide if they're equal. It's essentially a naive line diff with `JSON.stringify` made canonical.

Something like node-inspect-extracted would work better here - this formatter can be configured to produce canonical output as well, and since all the JSON diff function in `jsdiff` appears to do is a line-diff on (canonical) JSON output, I expect this would work just as well?

The point here is, the assertion API actually lets you return a different `actual` and `expected` from the ones that were actually compared by the assertion - and so, this would enable me to produce modified object graphs, in which values that were compared by checks could be replaced with e.g. identical Symbols, causing them to show up "anonymized" as something like `[numeric value]` or `[Date instance]` in the object graph.

(I suppose I could achieve something similar now by replacing checked values with just strings - but then we're back to the problem of strings being compared as equal to things that serialize to the same string value... e.g. wouldn't work for actually writing a unit-test for the comparison function itself. Might be an opportunity to kill two birds with one stone?)