For plotting, have a look at #634 (the last update).

In my experience, a loss on the order of 1e-2 is generally not low enough. My suggestions:

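- Impose all the BCs as hard constraints (i.e., build them into the network output so they hold exactly instead of being penalized in the loss)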

- After training with Adam, continue with L-BFGS-B. Remember to set the dtype to float64 to avoid early stopping.
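One way to impose BCs the hard way is an output transform: the raw network output is multiplied by a function that vanishes on the boundary and added to a function that already satisfies the BC, so the constraint holds for any weights. Below is a NumPy sketch, assuming a hypothetical straight channel of height `H` with no-slip walls at y = 0 and y = H and a parabolic inlet profile at x = 0; `H`, `U_MAX`, `inlet_profile`, and `output_transform` are illustrative names, not from the original code. In DeepXDE a function of this shape is attached with `net.apply_output_transform(...)`, with the NumPy calls swapped for the backend's tensor ops.

```python
import numpy as np

H = 1.0      # hypothetical channel height: walls at y = 0 and y = H
U_MAX = 1.0  # hypothetical centreline velocity of the inlet profile


def inlet_profile(y):
    """Parabolic inlet profile; it is zero at both walls, so it agrees with no-slip."""
    return 4.0 * U_MAX * y * (H - y) / H**2


def output_transform(xy, raw):
    """Map raw outputs (N_u, N_v, N_p) to (u, v, p) that satisfy the BCs exactly.

    xy:  (N, 2) array of (x, y) coordinates.
    raw: (N, 3) array of unconstrained network outputs.
    """
    x, y = xy[:, 0:1], xy[:, 1:2]
    wall = y * (H - y)  # vanishes on both walls
    u = inlet_profile(y) + x * wall * raw[:, 0:1]  # u = g(y) at x = 0, u = 0 on walls
    v = x * wall * raw[:, 1:2]                      # v = 0 at the inlet and on walls
    p = raw[:, 2:3]                                 # pressure left unconstrained here
    return np.concatenate([u, v, p], axis=1)
```

For a curved, pipe-like geometry, `y * (H - y)` would need to be replaced by some smooth function that is zero on the actual walls (for example, a product of distances to each wall). A fixed outlet pressure can be imposed the same way, e.g. `p = (L - x) * raw[:, 2:3]` gives p = 0 at x = L.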
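Quasi-Newton optimizers such as L-BFGS-B stop once the loss (or gradient) stops changing by more than a small tolerance. The NumPy sketch below is only an illustration of the precision issue, not DeepXDE code: near a loss of 1e-2, a 1e-10 improvement is below float32's resolution and rounds away, so the optimizer sees no progress, while float64 still records it. `survives_update` is a hypothetical helper. In DeepXDE the precision is normally switched with `dde.config.set_default_float("float64")` before the network is built, followed by `model.compile("L-BFGS")` and `model.train()` after the Adam stage; check these calls against your installed version.

```python
import numpy as np


def survives_update(loss, decrease, dtype):
    """Return True if `loss - decrease` is distinguishable from `loss` in `dtype`."""
    loss_t = dtype(loss)
    return bool(loss_t - dtype(decrease) != loss_t)


for dtype in (np.float32, np.float64):
    print(
        dtype.__name__,
        "| machine eps:", np.finfo(dtype).eps,
        "| can a loss of 1e-2 register a 1e-10 decrease?",
        survives_update(1e-2, 1e-10, dtype),
    )
```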

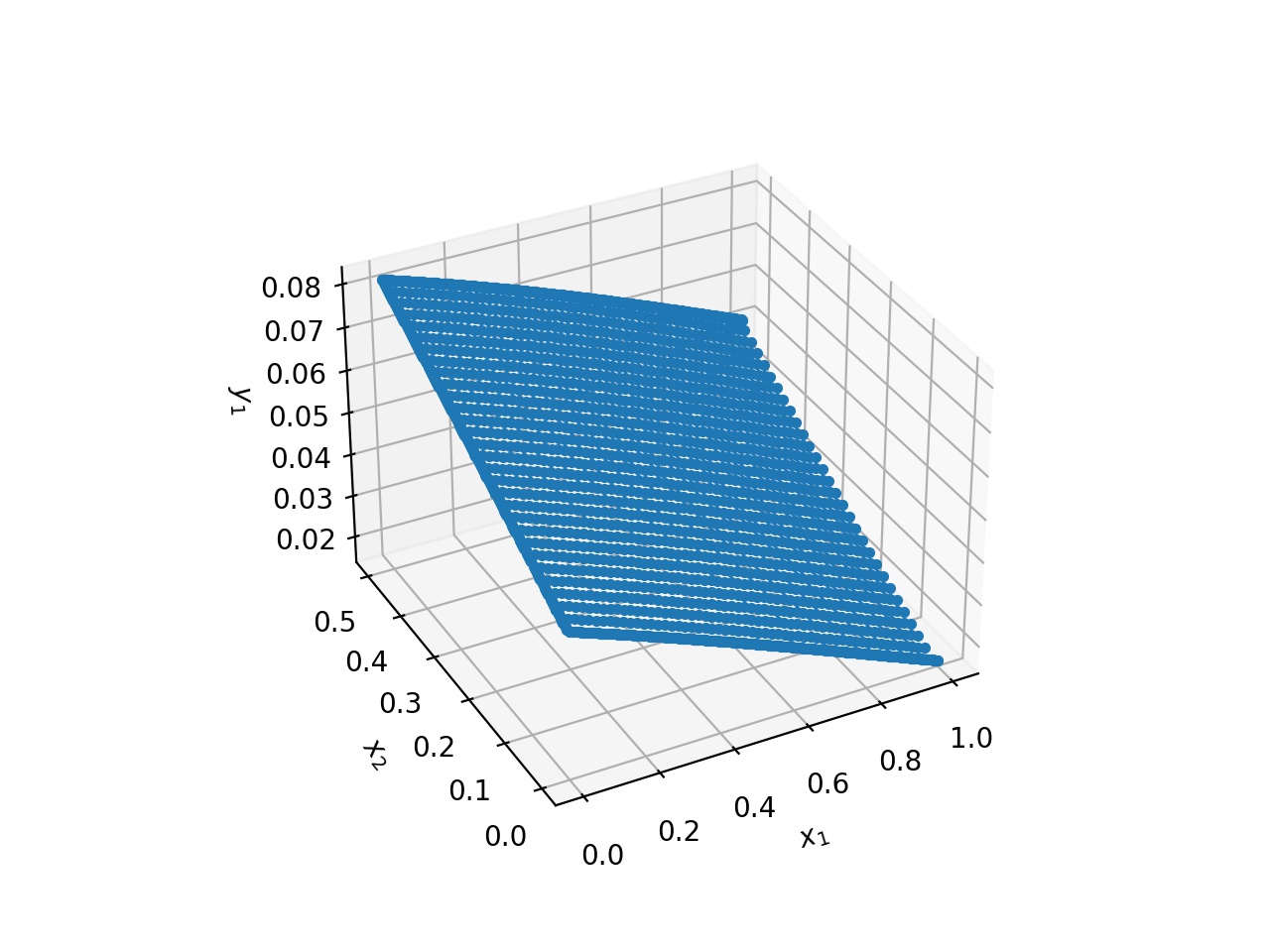

Hi Lu Lu, I am attempting a basic 2D flow problem (steady-state, incompressible) through a pipe-like geometry. My losses are very low (the lowest around 1e-7, the highest around 1e-2), but the plots look wrong. I would appreciate a second pair of eyes. Here is my code. I've attached my plot of the x-direction velocity for reference. I am also wondering how I can plot a colormap instead of a traditional line plot.
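For the colormap question, a minimal sketch assuming NumPy and Matplotlib: color each sample point by its value with `scatter` and add a colorbar. `plot_velocity_colormap` is a hypothetical helper, and the parabolic field in the demo is synthetic, not the result from this thread.

```python
import os

import numpy as np
import matplotlib

matplotlib.use("Agg")  # off-screen rendering; remove this line in a notebook
import matplotlib.pyplot as plt


def plot_velocity_colormap(xy, u, filename="u_colormap.png", cmap="rainbow"):
    """Save a colormap of a scalar field sampled at scattered 2D points.

    xy: (N, 2) array of (x, y) coordinates.
    u:  (N,) or (N, 1) array of values at those points (e.g. x-velocity).
    Returns the absolute path of the saved image.
    """
    xy = np.asarray(xy)
    u = np.asarray(u).ravel()
    fig, ax = plt.subplots(figsize=(8, 3))
    # Each point is colored by its value; scatter works for any domain shape.
    sc = ax.scatter(xy[:, 0], xy[:, 1], c=u, cmap=cmap, s=4)
    fig.colorbar(sc, ax=ax, label="u (x-velocity)")
    ax.set_xlabel("x")
    ax.set_ylabel("y")
    ax.set_aspect("equal")
    fig.savefig(filename, dpi=150, bbox_inches="tight")
    plt.close(fig)
    return os.path.abspath(filename)


if __name__ == "__main__":
    # Synthetic demo data: a parabolic profile in a 4 x 1 channel.
    rng = np.random.default_rng(0)
    pts = rng.uniform([0.0, 0.0], [4.0, 1.0], size=(5000, 2))
    u_demo = 4.0 * pts[:, 1] * (1.0 - pts[:, 1])
    print(plot_velocity_colormap(pts, u_demo))
```

With DeepXDE, the points could come from `geom.random_points(N)` and the values from `model.predict(points)[:, 0]`, assuming u is the first network output. `tricontourf` gives a smoother fill, but it triangulates over the convex hull of the points, so on a non-convex geometry it can paint regions outside the domain; `scatter` avoids that.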