First pass on this here:

We're starting to route the design:

Getting closer. Did some research on the constraints on where the IMU should be... the circled location is what seemed best from everything we've understood, particularly for such a small design.

100% routed, but still need to tune the high speed lines, clean up design layers, and run design rule checks.

Brandon, the idea of leveraging such a low powered device is quite exciting! Are you aware that there's an ESP32 camera driver that allows users to use it as an IP camera? The resolution and frame rate are extremely limited, but it does work: https://github.com/espressif/esp32-camera

I understand that the goal here is primarily to provide low-bandwidth structured data for a Visually-Impaired assistance device design, but there's a lot of potential value to be able to transmit video as well, perhaps with substantial compression done by DepthAI so that the ESP32 would only be passing data along.

> Brandon, the idea of leveraging such a low powered device is quite exciting!

Thanks @ksitko !

> Are you aware that there's an ESP32 camera driver that allows users to use it as an IP camera? The resolution and frame rate are extremely limited, but it does work: https://github.com/espressif/esp32-camera

Thanks for pointing that out. I actually was not aware of that (although I had seen remote wifi camera demos with ESP32, which must have been using that, now that I think of it).

> I understand that the goal here is primarily to provide low-bandwidth structured data for a Visually-Impaired assistance device design, but there's a lot of potential value to be able to transmit video as well, perhaps with substantial compression done by DepthAI so that the ESP32 would only be passing data along.

Totally agree.

I had been daydreaming about this for a while. As a data point, the 3 Mbps 1080p/30FPS H.264 video off DepthAI (the standard compression settings IIRC, although they're programmable, here and here) looks pretty decent.

And 3mbps seems reasonably achievable both for SPI communication (I say that w/out looking at any drivers, so I risk being completely wrong) and also for WiFi on the ESP32.

So yes we're excited to see if this can be done. Would be pretty neat for a variety of use-cases, including among them remote debugging and getting field data back easily for training neural networks/etc.

Thoughts?

@Luxonis-Brandon Hi Brandon,

This is really exciting news! :) However, I just want to clarify a few things, if you don't mind:

WiFi: If the board is already equipped with WiFi, would it be possible for the data it produces to be wirelessly transmitted to a host on the same WiFi network? (i.e. neural network inference results and XYZ depth details)

BT & BLE: Since the board is also equipped with BT & BLE, is it possible to do the same thing (transmit the data straight to a pair of Bluetooth earbuds / speakers)?

If both scenarios are possible, then how can code be added to the board's system to do some post-processing and to transmit this wirelessly through WiFi or BT / BLE? I guess what I'm asking is whether the board has an accessible EPROM or eMMC.

If all 3 cases were true, then what's left to do is to supply the board with power through USB-C?

If number 3 were true, would it be possible to use message-passing libraries (ZMQ and ImageZMQ) with OpenCV, similar to how Adrian of PyImageSearch did WiFi video streaming through OpenCV and an RPi here? https://www.pyimagesearch.com/2019/04/15/live-video-streaming-over-network-with-opencv-and-imagezmq/

Please advise. Thanks! :)

> And 3mbps seems reasonably achievable both for SPI communication (I say that w/out looking at any drivers, so I risk being completely wrong) and also for WiFi on the ESP32.

@Luxonis-Brandon, looking at the SPI documentation I suspect you're correct: the default max SPI clock rate tops out at 40 MHz, though you can get that to 80 MHz so long as you use the proper IO_MUX pins. Note the frequency and input delay figure there, as using non-IO_MUX pins not only limits your maximum clock rate but also introduces input delay.
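A quick back-of-the-envelope check of the SPI budget for the ~3 Mbps stream. This is a sketch that assumes standard single-bit SPI (one bit per clock), and it ignores framing/protocol overhead and idle gaps between transactions, so real utilization will be higher:

```python
# Rough SPI budget for a ~3 Mbps 1080p/30FPS H.264 stream.
# Assumptions: single-bit SPI (1 bit per clock), no protocol overhead,
# no idle gaps between transactions. Real links will do worse.

VIDEO_BITRATE_BPS = 3_000_000
FPS = 30


def bytes_per_frame(bitrate_bps: int, fps: int) -> float:
    """Average encoded frame size in bytes (I-frames will be larger)."""
    return bitrate_bps / fps / 8


def spi_utilization(bitrate_bps: int, spi_clock_hz: int) -> float:
    """Fraction of the raw SPI link consumed by the stream."""
    return bitrate_bps / spi_clock_hz


def frame_transfer_ms(bitrate_bps: int, fps: int, spi_clock_hz: int) -> float:
    """Time to clock one average frame across the bus, in milliseconds."""
    return bytes_per_frame(bitrate_bps, fps) * 8 / spi_clock_hz * 1000


if __name__ == "__main__":
    print(f"avg frame: {bytes_per_frame(VIDEO_BITRATE_BPS, FPS):.0f} bytes")
    for clk in (40_000_000, 80_000_000):
        print(f"{clk // 1_000_000} MHz: "
              f"{spi_utilization(VIDEO_BITRATE_BPS, clk):.1%} of link, "
              f"{frame_transfer_ms(VIDEO_BITRATE_BPS, FPS, clk):.2f} ms/frame")
```

Under these assumptions the stream needs well under a tenth of the raw link even at 40 MHz, which leaves room for protocol overhead and for I-frames that are larger than the average.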

As for WiFi, Espressif's documentation claims 30 Mbps UDP and 20 Mbps TCP upload speeds, but these are probably under ideal conditions. There is an iperf example that could be used to test WiFi throughput once the ESP32 reference design has been assembled.

You'd likely want to give yourself as much breathing room as you can, but all in all, so long as the ESP32 is only receiving bits that it just sends over WiFi, it all appears to be very much achievable!

Great questions @MXGray !

> WiFi: If the board is already equipped with WiFi, would it be possible for the data it produces to be wirelessly transmitted to a host on the same WiFi network? (i.e. neural network inference results and XYZ depth details)

Yes, for sure. That's the idea. And you could have, say, multiple of them send data to a single host (e.g. a Pi Zero) that could then decide which one to listen to in which situations, and which data to report.
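To make the multi-device idea concrete, here is a hypothetical sketch of the host-side selection logic. The message fields (`device`, `label`, `z_m`, `x_m`) and the "report the closest object" policy are illustrative assumptions, not an existing DepthAI or ESP32 message format:

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Detection:
    device: str   # which OAK/ESP32 unit sent it (hypothetical ID)
    label: str    # e.g. "person"
    z_m: float    # distance ahead of the camera, meters
    x_m: float    # lateral offset, meters (positive = right)


def pick_report(detections: Iterable[Detection],
                max_range_m: float = 5.0) -> Optional[Detection]:
    """Return the closest in-range detection across all devices, or None."""
    in_range = [d for d in detections if d.z_m <= max_range_m]
    return min(in_range, key=lambda d: d.z_m, default=None)


def describe(d: Detection) -> str:
    """Turn a detection into a short phrase suitable for audio output."""
    side = "right" if d.x_m >= 0 else "left"
    return f"{d.label} at {d.z_m:.1f} m, {abs(d.x_m):.1f} m to the {side} ({d.device})"


if __name__ == "__main__":
    latest = [
        Detection("front", "person", 3.0, 0.7),
        Detection("left", "car", 8.0, -2.0),
        Detection("rear", "dog", 1.5, -0.3),
    ]
    best = pick_report(latest)
    print(describe(best) if best else "nothing nearby")
```

Keeping the policy in one small function on the host means the devices themselves can stay simple and just stream their latest results.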

> BT & BLE: Since the board is also equipped with BT & BLE, is it possible to do the same thing (transmit the data straight to a pair of Bluetooth earbuds / speakers)?

I'm not 100% sure on this, but I think so. While Googling I was reading this question here, and it seems folks have used it as both a Bluetooth source and a Bluetooth sink. So I think it will work. The code for playing to a Bluetooth speaker or headset seems pretty up-to-date as well... here.

> If both scenarios are possible, then how can code be added to the board's system to do some post-processing and to transmit this wirelessly through WiFi or BT / BLE? I guess what I'm asking is whether the board has an accessible EPROM or eMMC.

Great question. One can program the ESP32 via Arduino (easier, but less powerful) or ESP-IDF (harder, but most powerful). Fortunately there are a bunch of examples for ESP32 programming online.

And the unit we'll have on there by default will have 8MB of flash for code storage, but the footprint Brian used (wisely) allows populating the ESP32 variant that has 16MB flash and 16MB PSRAM, allowing a lot heavier-duty code if needed.

> If all 3 cases were true, then what's left to do is to supply the board with power through USB-C?

That's right! It would be cool to later make a version that has a built-in battery charger and a slot for LiPo batteries.

But for now it's just a matter of providing power!

> If number 3 were true, would it be possible to use message-passing libraries (ZMQ and ImageZMQ) with OpenCV, similar to how Adrian of PyImageSearch did WiFi video streaming through OpenCV and an RPi here? https://www.pyimagesearch.com/2019/04/15/live-video-streaming-over-network-with-opencv-and-imagezmq/

I love Adrian's articles. The ESP32 runs embedded code, so there's no full operating system and no OpenCV. But it supports MQTT, which is great for transmitting messages. And it seems to have video streaming capability too.

Unfortunately I'm not familiar w/ ZMQ... I'd learn up now but I'm way behind so I'm going to add that to my to-do list. Thoughts?

> > And 3mbps seems reasonably achievable both for SPI communication (I say that w/out looking at any drivers, so I risk being completely wrong) and also for WiFi on the ESP32.

> @Luxonis-Brandon, looking at the SPI documentation I suspect you're correct: the default max SPI clock rate tops out at 40 MHz, though you can get that to 80 MHz so long as you use the proper IO_MUX pins. Note the frequency and input delay figure there, as using non-IO_MUX pins not only limits your maximum clock rate but also introduces input delay.

> As for WiFi, Espressif's documentation claims 30 Mbps UDP and 20 Mbps TCP upload speeds, but these are probably under ideal conditions. There is an iperf example that could be used to test WiFi throughput once the ESP32 reference design has been assembled.

> You'd likely want to give yourself as much breathing room as you can, but all in all, so long as the ESP32 is only receiving bits that it just sends over WiFi, it all appears to be very much achievable!

Thanks for the quick investigation and data points! Agreed on all points.

Funnily enough, on a previous project I -almost- used the iperf test you mentioned for some factory-test stuff (for an ESP32 product, see here), but it ended up unnecessary: the ESP32 modules turned out to be extremely consistent, so we didn't need to factory iperf test. Had I done so, I could have shared the actual measured throughput!

Anyway, it seems like streaming 1080p video is very likely doable (as it's only 3 Mbps). And 4K video is actually only 25 Mbps (the videos here and here use those settings; make sure to set playback to 2160p to see full resolution). So using UDP under great WiFi conditions (i.e. when using UniFi!) it may actually be possible to stream 4K video through an ESP32, which would be just plain nuts!
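The same arithmetic for WiFi, as a sketch using the Espressif upload figures quoted earlier in the thread (about 30 Mbps UDP and 20 Mbps TCP, likely best-case) and the two H.264 bitrates mentioned here. The 50% safety margin is an arbitrary assumption standing in for "breathing room", not a measured value:

```python
# Headroom check: does a given video bitrate fit through the ESP32's WiFi?
# Link figures are Espressif's quoted best-case upload rates; real-world
# throughput will usually be lower, so an (assumed) safety margin is applied.

LINK_MBPS = {"UDP": 30.0, "TCP": 20.0}
STREAMS_MBPS = {"1080p30": 3.0, "2160p": 25.0}
SAFETY_MARGIN = 0.5  # assumption: only plan on using half the quoted rate


def fits(stream_mbps: float, link_mbps: float, margin: float = 0.0) -> bool:
    """True if the stream fits in the link after reserving `margin` of it."""
    return stream_mbps <= link_mbps * (1.0 - margin)


def report() -> dict:
    """Map (stream, transport) -> (fits at quoted rate, fits with margin)."""
    return {
        (s, t): (fits(s_rate, l_rate), fits(s_rate, l_rate, SAFETY_MARGIN))
        for s, s_rate in STREAMS_MBPS.items()
        for t, l_rate in LINK_MBPS.items()
    }


if __name__ == "__main__":
    for (stream, transport), (raw, safe) in report().items():
        print(f"{stream:8s} over {transport}: quoted={raw}, with margin={safe}")
```

Under these assumptions 1080p fits comfortably either way, while 4K only fits over UDP at the quoted best-case rate and not once any margin is reserved, which is consistent with the "great WiFi conditions" caveat.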

This design is now going into final review, so the order is imminent:

@Luxonis-Brandon The trend among a lot of home IP cameras right now is that they're going low-power and battery-powered, with a rechargeable LiPo battery that you only need to bother with once a month. Given that you've worked with ESP32s before, I don't think I need to bring up just how low-power those can be, and combined with DepthAI it could be incredibly capable.

Thanks @ksitko and agreed. This is definitely where the Myriad X excels... it is capable of lower power than everything else that's remotely close to its capability. :-)

This design has been ordered. ETA on the boards: late September.

The boards themselves just finished fab. They will go into assembly soon:

Boards just finished assembly and are being shipped to us now!

These are shipping to us now. :-)

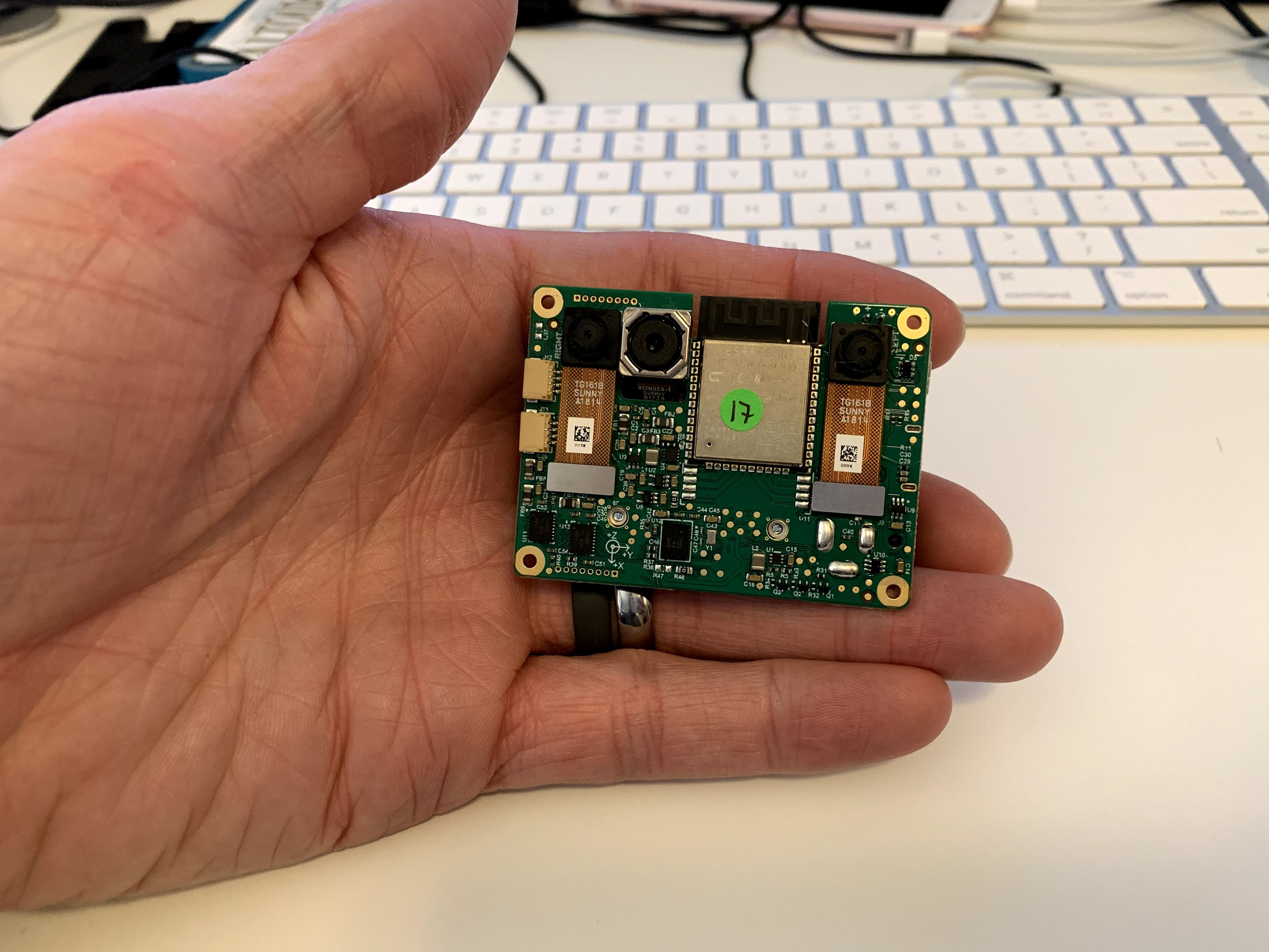

Got the board in on Saturday (2 days ago):

So far SPI works and communication seems good. Something is wrong w/ USB3, so just USB2 is working on DepthAI. But this board isn't really intended for high-speed (it's intended for low-rate communication over the ESP32), so the USB3 probably won't be an issue really for anyone (assuming it is indeed broken on this board).

Looks great! Is there any chance that we'll get a case for the WiFi variant?

We'll do our best to get a case for this as well! We'd like one too! We're making sure to focus on the cases we've already promised as well as the proper design and manufacturing of the units. If we don't have a case by launch day, we will make one available from the store not long after.

Distributing these to the dev team and to alpha customers. :-)

(And if you'd like one, you can buy Alpha samples here: https://shop.luxonis.com/collections/all/products/bw1092-pre-order)

And size reference:

So, it's based on the SoM (BW1099)?

The BW1099EMB, yes. More background on the differences here: https://docs.luxonis.com/faq/#system-on-modules

I have a question: the BW1099 only uses the MA2485's VPU, and the MCU in the MA2485 is not used, right?

Hi @tuhuateng ,

The MCU in the MA2485 is being used. We use it to build all the pipelines, orchestrate everything, etc. In December we will also support customers running microPython on the DepthAI directly. We just got it initially compiling and running. See https://github.com/luxonis/depthai/issues/136 for more details on that.

Thoughts?

Thanks, Brandon

I'm clear! One more question: I see OpenVINO is for the Stick, so can the MA2485 also use it?

Strangely, this comment had disappeared before. Yes, our stack is fully OpenVINO-compatible. Some more information is here: https://docs.luxonis.com/faq/#how-do-i-integrate-depthai-into-our-product

Thanks, Brandon

The design files for this are here: https://github.com/luxonis/depthai-hardware/tree/master/BW1092_ESP32_Embedded_WIFI_BT

And this is now orderable here: https://shop.luxonis.com/collections/all/products/bw1092

I feel like I'm intruding here, but the BackerKit FAQ points here for MQTT info (though I don't see any).

I have the OakD-POE (so cool!), so I've already got standalone connectivity. Could you direct me to instructions on how to load an MQTT client (Mosquitto preferred)?

(I'll take this question elsewhere if someone can recommend another place)

Hi @LaudixGit ,

Not intruding at all. That BackerKit FAQ is indeed referencing this model line: the BW1092 design, which is the basis for the OAK-D-IoT-40 and OAK-D-IoT-75. The OAK-D-IoT-75 was called "OAK-D-WIFI" in the Kickstarter, which we retroactively learned is a terrible name; it should have been called OAK-D-IoT, as that's really what it is for (and so we renamed it).

These OAK-D-IoT models have an ESP32 built in, which is one of the most popular embedded devices for MQTT use. For example, this tutorial here (to pick a random one) could be used with either of those OAK-D-IoT models. We also have an SPI API with effective feature parity with our USB and POE APIs, where the ESP32 runs the other side of the API, so the ESP32 can host the user-side code and connect/send over MQTT/etc.

On the OAK-D-POE (thanks for the kind words on it!) there is no ESP32, as the ESP32 is a bit more intended for IoT-like applications, so although you can do Ethernet with it (one of our friends made this wESP32 with it, here), it is lower-throughput than would be desired for wired-ethernet applications. Even the 1gbps of OAK-D-POE makes things a bit "slow" compared to the 5gbps of USB3 (and 10gbps USB3 is coming soon to existing USB3 DepthAI models).

So that is a long way of saying that we do not currently support MQTT on the POE versions (OAK-D-POE and OAK-1-POE). That said, this may be something we could implement. I'll ask the team about the effort to get Mosquitto running natively on DepthAI (on the Myriad X).

Thanks and sorry for maybe not the news you were hoping for.

Thoughts?

Thanks, Brandon

> On the OAK-D-POE ... there is no ESP32, ... so although you can do Ethernet with it [, it's] a bit "slow" ... [MQTT] may be something we could implement.

@Luxonis-Brandon That'd be great.

My thought (justification) was that if the Oak does all the inference, the only data to transmit are the results, and only when a detection occurs, so the communication limit would not be a constraint. E.g.: {time, object detected (dog), scale (H,W,L), position (x, y, z)}
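A sketch of how small such an event message is, assuming JSON encoding and field names taken from the example above (the exact schema is hypothetical, not a DepthAI format):

```python
import json
import time


def detection_event(label, size_hwl_m, position_xyz_m, timestamp=None):
    """Build a compact JSON event for one detection (hypothetical schema)."""
    event = {
        "time": round(timestamp if timestamp is not None else time.time(), 3),
        "object": label,
        "scale": [round(v, 2) for v in size_hwl_m],        # H, W, L in meters
        "position": [round(v, 2) for v in position_xyz_m],  # x, y, z in meters
    }
    # Compact separators drop the spaces json.dumps inserts by default.
    return json.dumps(event, separators=(",", ":")).encode("utf-8")


if __name__ == "__main__":
    msg = detection_event("dog", (0.6, 0.3, 0.9), (0.4, -0.1, 2.5),
                          timestamp=1_700_000_000.0)
    print(len(msg), msg.decode())
```

An event like this is on the order of 100 bytes, so even several detections per second stays in the low-kbps range, far below what a 1 Gbps POE link (or the ESP32's WiFi) can carry.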

This would be inappropriate for an auto-drive car, but very good for intrusion detection.

Agreed and makes sense. Will circle back with what we find. There are two other things that could be super useful here as well on POE:

As then WebRTC and/or RTSP could be output from the device as desired, and perhaps controllable based on what is seen.

@Luxonis-Brandon BTW I thought the wESP32 seemed familiar. I use one of their other products quite a lot.

Ok, the one-hit-wonder for the Christmas season. 🎄 Person identification, pose detection, and emotion detection, plus send an alert.

Unobtrusively plug in the OakD at the office entrance and... voilà: is the boss coming your way, and is s/he happy?

(Ok, okay. Silly and irreverent. Just a little levity for this thread 😏)

So @LaudixGit, we have HTTP GET/POST now via the Script node. This might be the best way to go to start.

We're also going to see if there's some light MQTT that could be made to work in the Script node.

Thoughts?

Thanks, Brandon

I certainly could create a gateway that accepts HTTP and translates to MQTT. But that requires a 'middle man' to host the gateway. Using the OakD as-is also requires a middle man to host the Python script. Of course, the HTTP gateway could serve many OakDs, but I think the as-is Python gateway can serve many OakDs too (though not as many). In short: a nice step forward, and versatile, but not sufficient to be standalone.

If the OakD had a browser-esque service, I could use html with embedded javascript, but that sounds far more extensive than MQTT.

BTW, if I were the dev team I'd start with the simple HTTP, as it appeals to a broader audience.

Thanks. All makes sense. We'll see if there's a lightweight MQTT that we can get into the scripting node, as that would provide the most flexibility.
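For a sense of how light MQTT can be, here is a minimal sketch that hand-encodes MQTT 3.1.1 CONNECT and QoS 0 PUBLISH packets using only the Python standard library. It assumes a broker that accepts anonymous connections, and it does not check CONNACK, send keep-alive pings, or reconnect. Whether something like this can run inside the Script node is an open assumption, not something confirmed in this thread; the client ID and topic are placeholders:

```python
import socket
import struct


def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 bits per byte, MSB = continuation."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        byte, n = n % 128, n // 128
        out.append((byte | 0x80) if n else byte)
        if not n:
            return bytes(out)


def mqtt_string(s: str) -> bytes:
    """UTF-8 string prefixed with a 2-byte big-endian length."""
    data = s.encode("utf-8")
    return struct.pack("!H", len(data)) + data


def connect_packet(client_id: str, keep_alive_s: int = 60) -> bytes:
    # Variable header: protocol name "MQTT", level 4 (= 3.1.1),
    # connect flags 0x02 (clean session, no auth), keep-alive in seconds.
    body = mqtt_string("MQTT") + bytes([0x04, 0x02]) + struct.pack("!H", keep_alive_s)
    body += mqtt_string(client_id)  # payload: client identifier
    return bytes([0x10]) + encode_remaining_length(len(body)) + body


def publish_packet(topic: str, payload: bytes) -> bytes:
    # QoS 0 carries no packet identifier, so the body is just topic + payload.
    body = mqtt_string(topic) + payload
    return bytes([0x30]) + encode_remaining_length(len(body)) + body


def publish_once(host: str, topic: str, payload: bytes, port: int = 1883) -> None:
    """Connect, publish one QoS 0 message, disconnect (no error handling)."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(connect_packet("oak-sketch"))
        sock.recv(4)  # CONNACK is 4 bytes; its return code is not checked here
        sock.sendall(publish_packet(topic, payload))
        sock.sendall(b"\xe0\x00")  # DISCONNECT
```

For example, `publish_once("192.168.1.10", "oak/front/detections", b'{"object":"dog"}')`, where the broker address is a placeholder. On the OAK-D-IoT models, the ESP-IDF MQTT component would be the more natural choice on the ESP32 side.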

Did anyone ever figure out streaming video via the ESP32?

@Luxonis-Brandon Was just wondering, did you make any progress on that lightweight MQTT client on the OAK-D-IoT?

Guess this is what we could use: https://docs.espressif.com/projects/esp-idf/en/v4.1.2/api-reference/protocols/mqtt.html Will look into that tomorrow.

Thanks sounds good! And sorry I had forgotten to circle back on this one.

Start with the `why`:

One of the core value-adds of DepthAI is that it runs everything on-board. So when used with a host computer over USB, this offloads the host from having to do any of these computer vision or AI workloads.

This can actually be even more valuable in embedded applications, where the `host` is a microcontroller communicated with over SPI, UART, or I2C and is running either no operating system at all or some very lightweight RTOS like FreeRTOS, so running any CV on the host is challenging or outright intractable.

DepthAI allows such use cases, as it converts high-bandwidth video (up to 12MP) into extremely low-bandwidth structured data (in the low-kbps range; e.g. person at 3 meters away, 0.7 meters to the right).

This allows tiny microcontrollers to easily parse this output data and take action on it. For example, an ATtiny8 microcontroller is thousands of times faster than what is required to parse and act on this metadata, and it's a TINY microcontroller.
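To illustrate why even a tiny microcontroller can keep up, here is a hypothetical fixed-size binary record for one detection. The field layout is an assumption for illustration only, not the actual DepthAI SPI/I2C/UART spec. Storing distances as signed millimeters in int16 keeps each record at 8 bytes and trivial to parse in C on the MCU side:

```python
import struct

# Hypothetical record: label id (uint8), confidence 0-255 (uint8),
# x/y/z in millimeters (int16 each), little-endian, no padding -> 8 bytes.
RECORD = struct.Struct("<BBhhh")


def pack_detection(label_id: int, confidence: float, xyz_m) -> bytes:
    """Encode one detection; meters are converted to integer millimeters."""
    x, y, z = (int(round(v * 1000)) for v in xyz_m)
    return RECORD.pack(label_id, int(round(confidence * 255)), x, y, z)


def unpack_detection(buf: bytes):
    """Decode a record back into (label_id, confidence, (x, y, z) meters)."""
    label_id, conf, x, y, z = RECORD.unpack(buf)
    return label_id, conf / 255, (x / 1000, y / 1000, z / 1000)


if __name__ == "__main__":
    # "person at 3 meters away, 0.7 meters to the right"
    # (label id 1 = person is an assumption for this example)
    rec = pack_detection(1, 0.9, (0.7, 0.0, 3.0))
    print(RECORD.size, rec.hex(), unpack_detection(rec))
```

At 30 such records per second this is 240 bytes/s (under 2 kbps), and decoding on the MCU amounts to reading a few little-endian integers from a buffer.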

So for applications which already have microcontrollers, where full operating-system-capable 'edge' systems have disadvantages or are intractable (size, weight, power, boot time, cost, etc.), or where such systems are simply overkill, being able to cleanly and easily use DepthAI with embedded systems is extremely valuable.

So we plan to release an API/spec for embedded communication with DepthAI over SPI, I2C, and UART to support this.

However, a customer having to write against this spec from scratch is a pain, particularly if hardware connections/prototyping need to be done to physically connect DepthAI to the embedded target of interest. And having to do these steps -before- being able to do a quick prototype is annoying and sometimes a show-stopper.

So the goal of this design is to allow an all-in-one reference design for embedded application of DepthAI which someone can purchase, get up and running on, and then leverage for their own designs/etc. after having done a proof-of-concept and shown what they want works with a representative design.

The aim is to have something embedded working right away, which can then serve as a reference in terms of hardware, firmware, and software.

The next decision is what platform to build this reference design around. STM32? MSP430? ESP32? ATMega/Arduino? TI cc31xx/32xx?

We are choosing the ESP32 as it is very popular, provides nice IoT connectivity to Amazon/etc. (e.g. AWS IoT here) and includes Bluetooth, Bluetooth Low Energy (BTLE), and WiFi, and comes in a convenient FCC/CE-certified module.

And it also has a bunch of examples for communicating over WiFi, communicating with smartphone apps over BT and/or WiFi, etc.

And since we want users to be able to take the hardware design and modify it to their needs we plan to:

Often embedded applications are space-constrained, so making this reference design as compact as reasonable (while still using the modular approach above) is important.

Another `why` is that this will serve as the basis for the Visually-Impaired assistance device (https://github.com/luxonis/depthai-hardware/issues/9).

Move to the `how`:

Modify the BW1098OBC to add an ESP32 System on Module, likely the ESP32-WROOM-32.

To keep the design small, it may be necessary to increase the number of layers on the BW1098OBC (from the current 4) to 6 or perhaps 8 and/or use HDI techniques, such that the ESP32 module can be on the opposite side of the board from the BW1099 module (but overall in the same physical location), with the cameras perhaps above or below this ESP32 module.

In this system the DepthAI SoM will be the SPI `peripheral` and the microcontroller (the ESP32 in this case) will be the SPI `controller`.

Run a GPIO line between the DepthAI SoM and the ESP32, so that DepthAI can poke the ESP32 when it has new data.

That way we can support interrupt-driven data transfer on the ESP32.

So the flow would be:

Note that this would also still allow the ESP32 to do polling instead.
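The interrupt-driven flow, with polling as the fallback, can be modeled in a few lines. This is a host-side simulation, not ESP32 firmware: a `threading.Event` stands in for the data-ready GPIO line and a queue stands in for data waiting to be clocked out over SPI, and all names are hypothetical. On the ESP32 itself this would presumably map to a GPIO interrupt handler plus an SPI master transaction, but that mapping is an assumption here:

```python
import queue
import threading


class SimulatedLink:
    """Stand-in for DepthAI (SPI peripheral) plus the data-ready GPIO line."""

    def __init__(self):
        self.data_ready = threading.Event()  # models the GPIO "poke"
        self._outbox = queue.Queue()         # models data pending on DepthAI

    # --- DepthAI side ---
    def publish(self, message: bytes) -> None:
        self._outbox.put(message)
        self.data_ready.set()                # raise the GPIO line

    # --- ESP32 (SPI controller) side ---
    def spi_read_all(self) -> list:
        """Clear the line, then clock out everything pending."""
        self.data_ready.clear()
        out = []
        while True:
            try:
                out.append(self._outbox.get_nowait())
            except queue.Empty:
                return out


def interrupt_driven_read(link: SimulatedLink, timeout_s: float = 1.0) -> list:
    """Sleep until DepthAI signals new data, then read it (no busy polling)."""
    if not link.data_ready.wait(timeout_s):
        return []
    return link.spi_read_all()


def polled_read(link: SimulatedLink) -> list:
    """Polling alternative: check the line on the controller's own schedule."""
    return link.spi_read_all() if link.data_ready.is_set() else []


if __name__ == "__main__":
    link = SimulatedLink()
    # DepthAI "detects" something 50 ms from now and raises the line.
    threading.Timer(0.05, link.publish, args=(b"person 3.0m 0.7m right",)).start()
    print(interrupt_driven_read(link))  # blocks until the simulated GPIO fires
```

Clearing the line before draining means a message that arrives mid-read either gets picked up in the same drain or re-raises the line for the next one, so nothing is lost; the worst case is a harmless empty read.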

Move to the `what`:

Here's a super-initial idea of what this could look like, based on placing cameras and a sheet of paper the size of the ESP32 module above (18mm x 25.5mm) on an old BW1098EMB design, which is 60x40x20.5mm including heatsink and 60x40x7mm without heatsink (which is likely how it would be used in a product... simply heatsink to the product enclosure).