Reposted from: http://www.learnopencv.com/head-pose-estimation-using-opencv-and-dlib/

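Definition

In computer vision, the pose of an object is expressed as its translation and rotation relative to the camera.

Computer vision treats pose estimation as the Perspective-n-Point (PnP) problem: for a calibrated camera, given the coordinates of n points on the object in the 3D world coordinate system and their corresponding projections in the 2D image coordinate system, solve for the transformation (rotation and translation) that relates them.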

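Camera motion

A 3D rigid object has only two kinds of motion relative to the camera: translation and rotation.

Translation

Translation moves a 3D point (X,Y,Z) to another 3D point (X′,Y′,Z′). It has three degrees of freedom, one along each coordinate axis, and can be represented by a vector t.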

旋转

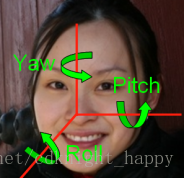

同样可以沿着 X,Y,Z 三个轴进行旋转。可以用一个 3*3 的旋转矩阵或欧拉角 (roll,pitch,yaw) 进行表示。
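As a rough illustration of the two motions, the sketch below rotates a point about the Y axis and then translates it. The helper names `rotation_about_y` and `rigid_transform` are made up for this example, and the choice of axis and values is arbitrary.

```python
import numpy as np

def rotation_about_y(angle_rad):
    """Return the 3x3 matrix for a rotation of angle_rad about the Y axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])

def rigid_transform(points, R, t):
    """Apply X' = R X + t to an (N, 3) array of points."""
    return points @ R.T + t

point = np.array([[1.0, 0.0, 0.0]])
R = rotation_about_y(np.pi / 2)   # quarter turn about Y
t = np.array([0.0, 0.0, 5.0])     # shift 5 units along Z
moved = rigid_transform(point, R, t)
print(np.round(moved, 6))
```

The quarter turn sends (1, 0, 0) to (0, 0, -1), and the translation then moves it to (0, 0, 4).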

Inputs required for pose estimation

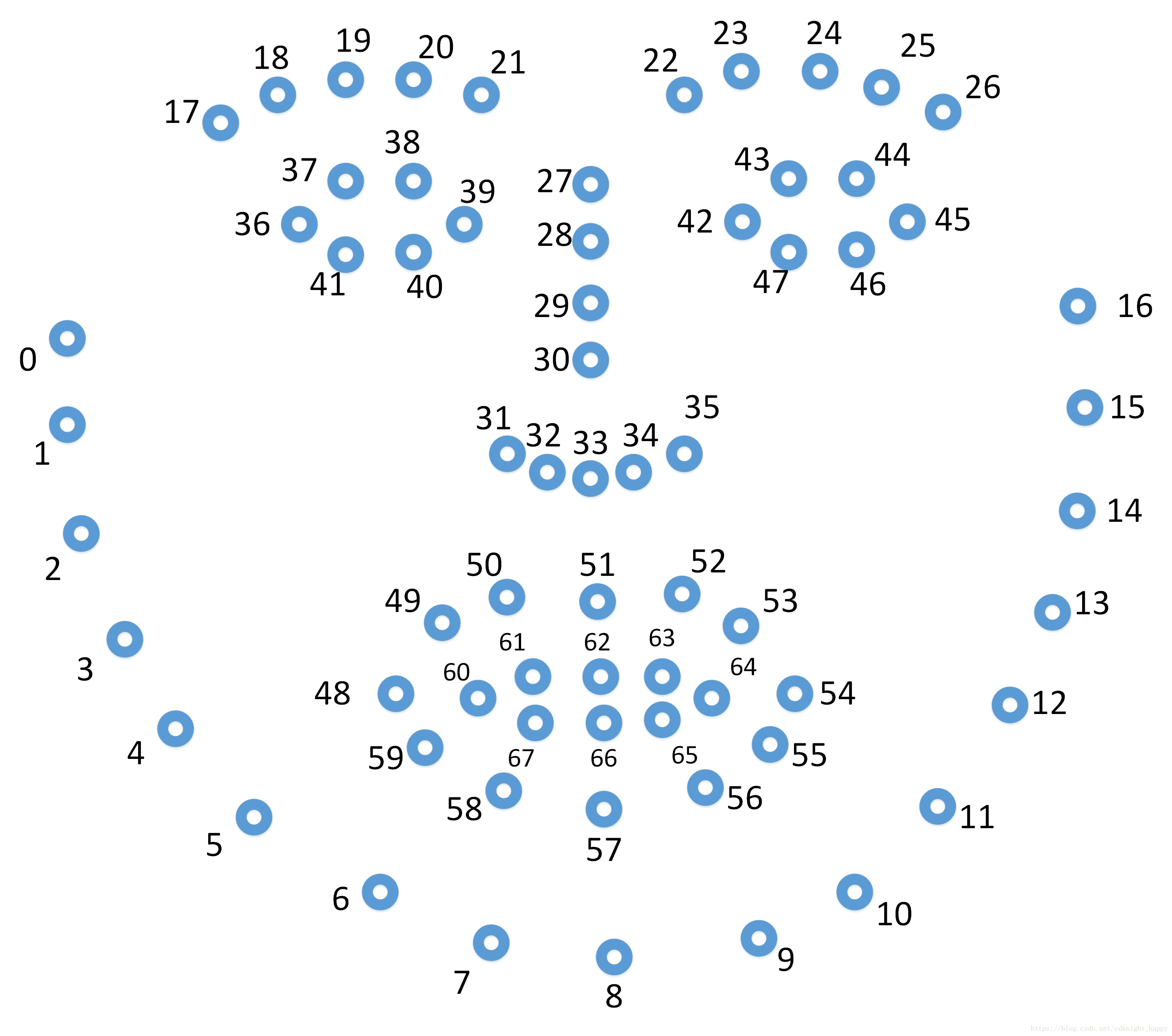

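Distribution of the 68 facial landmarks

A facial landmark detector is run on the 2D face image to locate the landmarks. In this article, the author uses the coordinates of the nose tip, the chin, the left corner of the left eye, the right corner of the right eye, the left corner of the mouth, and the right corner of the mouth.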
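A minimal sketch of picking those six points out of a 68-landmark array follows. The 0-based indices assume the ordering commonly used with dlib's 68-point shape predictor, with "left" meaning left in the image. Both are assumptions, not something the text specifies, so check them against your detector. `select_pose_points` is a hypothetical helper name.

```python
import numpy as np

# 0-based indices into a 68-point landmark array (assumed dlib-style ordering).
POSE_LANDMARK_IDS = {
    "nose_tip": 30,
    "chin": 8,
    "left_eye_left_corner": 36,
    "right_eye_right_corner": 45,
    "left_mouth_corner": 48,
    "right_mouth_corner": 54,
}

def select_pose_points(landmarks):
    """Pick the six 2D points used for pose estimation from a (68, 2) array."""
    landmarks = np.asarray(landmarks, dtype=np.float64)
    if landmarks.shape != (68, 2):
        raise ValueError("expected a (68, 2) array of landmarks")
    # Dict order is insertion order, so rows follow the order listed above.
    return landmarks[list(POSE_LANDMARK_IDS.values())]
```

The returned rows are in the same order as the 3D model points, which matters because the pose solver pairs 2D and 3D points by position.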

In practice, an exact 3D model of the face is neither required nor obtainable, so the author assigns the following 3D coordinates to the six 2D points above:

Tip of the nose : (0.0, 0.0, 0.0)

Chin : (0.0, -330.0, -65.0)

Left corner of the left eye : (-225.0, 170.0, -135.0)

Right corner of the right eye : (225.0, 170.0, -135.0)

Left corner of the mouth : (-150.0, -150.0, -125.0)

Right corner of the mouth : (150.0, -150.0, -125.0)

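Pose estimation needs the camera's intrinsic parameters, so the camera should first be calibrated. Accurate calibration uses Zhang Zhengyou's checkerboard method, but an approximation is used here. The intrinsic matrix requires the focal length and the optical center, and radial distortion is assumed to be absent. Here the focal length is set to the image width in pixels, and the optical center is set to the image center, (image.width/2, image.height/2).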
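The approximation described above can be written down directly. This is only a sketch: `approximate_camera_matrix` is a hypothetical helper, and the 640×480 image size is an example value.

```python
import numpy as np

def approximate_camera_matrix(image_width, image_height):
    """Approximate intrinsics: focal length = image width, center = image center."""
    focal_length = float(image_width)
    cx, cy = image_width / 2.0, image_height / 2.0
    return np.array([[focal_length, 0.0, cx],
                     [0.0, focal_length, cy],
                     [0.0, 0.0, 1.0]])

camera_matrix = approximate_camera_matrix(640, 480)
dist_coeffs = np.zeros((4, 1))  # assume no lens distortion
print(camera_matrix)
```

For a lens with noticeable distortion this shortcut is likely to be too crude, and a proper calibration is the safer route.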

How pose estimation works

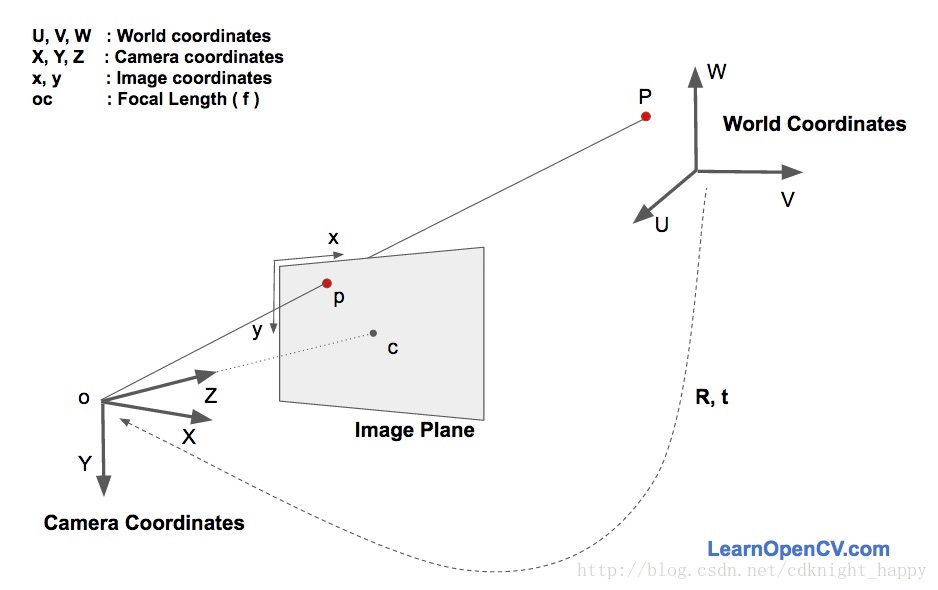

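Pose estimation involves three coordinate systems: the world coordinate system, the camera coordinate system, and the image coordinate system. A 3D point (U,V,W) in world coordinates is mapped to camera coordinates (X,Y,Z) by the rotation matrix R and the translation vector t. A 3D point (X,Y,Z) in camera coordinates is then mapped to image coordinates (x,y) by the camera's intrinsic matrix.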
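Written out, the two mappings take the following form. This is the standard pinhole-camera formulation. The symbols f_x, f_y, c_x, c_y for the intrinsic parameters and s for the scale factor follow the usual OpenCV notation and are assumed here rather than fixed by the paragraph above.

$$
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix}
= R \begin{bmatrix} U \\ V \\ W \end{bmatrix} + t,
\qquad
s \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
= \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix}
$$

With no distortion, s equals Z, the depth of the point in camera coordinates, so it is unknown before the pose is known.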

Direct Linear Transform

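The mapping from world coordinates to image coordinates is linear except for an unknown scale factor s. A problem of this form can be solved with the Direct Linear Transform (DLT) method.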
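One common way to set up DLT for this problem is sketched below: eliminate s from each correspondence to get two linear equations in the 12 entries of a 3×4 projection matrix P, then take the null-space direction from an SVD. This is an illustrative sketch under that assumption, not the code OpenCV uses internally. The function names are hypothetical, and no coordinate normalization is done.

```python
import numpy as np

def dlt_projection_matrix(object_points, image_points):
    """Estimate P (3x4, up to scale) with s*[x, y, 1]^T = P*[U, V, W, 1]^T."""
    object_points = np.asarray(object_points, dtype=np.float64)
    image_points = np.asarray(image_points, dtype=np.float64)
    if len(object_points) < 6:
        raise ValueError("DLT needs at least 6 point correspondences")
    rows = []
    for (U, V, W), (x, y) in zip(object_points, image_points):
        Xh = [U, V, W, 1.0]
        # Eliminating s gives two linear equations in the entries of P.
        rows.append(Xh + [0.0] * 4 + [-x * c for c in Xh])
        rows.append([0.0] * 4 + Xh + [-y * c for c in Xh])
    A = np.array(rows)
    # Solution: right singular vector for the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    return Vt[-1].reshape(3, 4)

def project_with_matrix(P, object_points):
    """Project (N, 3) points with a 3x4 matrix and divide out the scale."""
    object_points = np.asarray(object_points, dtype=np.float64)
    ones = np.ones((len(object_points), 1))
    projected = np.hstack([object_points, ones]) @ P.T
    return projected[:, :2] / projected[:, 2:3]
```

The estimated P is only defined up to scale, and nothing in this procedure forces its left 3×3 block to factor into an intrinsic matrix times a valid rotation.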

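Levenberg-Marquardt optimization

The DLT solution is not very accurate, for two reasons. First, the rotation matrix R has three degrees of freedom, but DLT represents it with 9 numbers, and nothing constrains the estimated 3×3 matrix to be a valid rotation matrix. More importantly, DLT does not minimize the correct objective function.

The remedy is to minimize the reprojection error with Levenberg-Marquardt (LM) optimization. One can start from a randomly chosen R and t, project the 6 points from the 3D world coordinate system into the 2D image, and measure the error between the projected points and the 2D landmarks found by the landmark detector. Iteratively reducing this reprojection error yields a more accurate R and t.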
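The idea can be sketched with a small hand-written Levenberg-Marquardt loop in NumPy. It is an illustration only: the rotation is parameterized as an axis-angle vector, the Jacobian is taken by finite differences, and the "observed" points are synthetic projections of the face model under a made-up pose. All function names, the pose values, and the starting guess are assumptions for the demo.

```python
import numpy as np

def rodrigues(rvec):
    """Convert an axis-angle rotation vector to a 3x3 rotation matrix."""
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    kx, ky, kz = rvec / theta
    K = np.array([[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def project(pose, object_points, camera_matrix):
    """Project (N, 3) world points; pose = [rx, ry, rz, tx, ty, tz]."""
    cam = object_points @ rodrigues(pose[:3]).T + pose[3:]
    uvw = cam @ camera_matrix.T
    return uvw[:, :2] / uvw[:, 2:3]

def residuals(pose, object_points, image_points, camera_matrix):
    return (project(pose, object_points, camera_matrix) - image_points).ravel()

def refine_pose_lm(pose0, object_points, image_points, camera_matrix, iterations=50):
    """Minimize the sum of squared reprojection errors over the 6 pose parameters."""
    pose = np.asarray(pose0, dtype=np.float64).copy()
    r = residuals(pose, object_points, image_points, camera_matrix)
    cost, damping, eps = r @ r, 1e-3, 1e-6
    for _ in range(iterations):
        J = np.empty((r.size, 6))          # finite-difference Jacobian
        for j in range(6):
            step = np.zeros(6)
            step[j] = eps
            J[:, j] = (residuals(pose + step, object_points, image_points,
                                 camera_matrix) - r) / eps
        H = J.T @ J
        delta = np.linalg.solve(H + damping * np.diag(np.diag(H)), -J.T @ r)
        new_r = residuals(pose + delta, object_points, image_points, camera_matrix)
        new_cost = new_r @ new_r
        if new_cost < cost:                # accept the step, trust the model more
            pose, r, cost = pose + delta, new_r, new_cost
            damping *= 0.1
        else:                              # reject the step, damp more heavily
            damping *= 10.0
    return pose, cost

model_points = np.array([[0, 0, 0], [0, -330, -65], [-225, 170, -135],
                         [225, 170, -135], [-150, -150, -125],
                         [150, -150, -125]], dtype=np.float64)
camera_matrix = np.array([[640.0, 0, 320], [0, 640.0, 240], [0, 0, 1]])
true_pose = np.array([0.1, -0.2, 0.05, 10.0, -20.0, 1500.0])   # made up
observed = project(true_pose, model_points, camera_matrix)
initial_guess = np.array([0.2, -0.1, -0.05, 40.0, -50.0, 1700.0])
refined_pose, final_cost = refine_pose_lm(initial_guess, model_points,
                                          observed, camera_matrix)
print(refined_pose, final_cost)
```

Because the pose is parameterized with three rotation and three translation numbers, the result is always a valid rigid transform, which is the constraint DLT lacks.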

OpenCV solvePnP

In OpenCV, pose can be estimated with the solvePnP and solvePnPRansac functions.

C++: bool solvePnP(InputArray objectPoints, InputArray imagePoints, InputArray cameraMatrix, InputArray distCoeffs, OutputArray rvec, OutputArray tvec, bool useExtrinsicGuess=false, int flags=SOLVEPNP_ITERATIVE)

Python: cv2.solvePnP(objectPoints, imagePoints, cameraMatrix, distCoeffs[, rvec[, tvec[, useExtrinsicGuess[, flags]]]]) → retval, rvec, tvec

Parameters:

objectPoints – Array of object points in the world coordinate space. I usually pass vector of N 3D points. You can also pass Mat of size Nx3 (or 3xN) single channel matrix, or Nx1 ( or 1xN ) 3 channel matrix. I would highly recommend using a vector instead.

imagePoints – Array of corresponding image points. You should pass a vector of N 2D points. But you may also pass 2xN (or Nx2) 1-channel or 1xN ( or Nx1 ) 2-channel Mat, where N is the number of points.

cameraMatrix – Input camera matrix $A = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$. Note that f_x, f_y can be approximated by the image width in pixels under certain circumstances, and the c_x and c_y can be the coordinates of the image center.

distCoeffs – Input vector of distortion coefficients (k_1, k_2, p_1, p_2[, k_3[, k_4, k_5, k_6],[s_1, s_2, s_3, s_4]]) of 4, 5, 8 or 12 elements. If the vector is NULL/empty, the zero distortion coefficients are assumed. Unless you are working with a Go-Pro like camera where the distortion is huge, we can simply set this to NULL. If you are working with a lens with high distortion, I recommend doing a full camera calibration.

rvec – Output rotation vector.

tvec – Output translation vector.

useExtrinsicGuess – Parameter used for SOLVEPNP_ITERATIVE. If true (1), the function uses the provided rvec and tvec values as initial approximations of the rotation and translation vectors, respectively, and further optimizes them.

flags –

Method for solving a PnP problem:

SOLVEPNP_ITERATIVE Iterative method is based on Levenberg-Marquardt optimization. In this case, the function finds such a pose that minimizes reprojection error, that is the sum of squared distances between the observed projections imagePoints and the projected (using projectPoints() ) objectPoints .

SOLVEPNP_P3P Method is based on the paper of X.S. Gao, X.-R. Hou, J. Tang, H.-F. Chang “Complete Solution Classification for the Perspective-Three-Point Problem”. In this case, the function requires exactly four object and image points.

SOLVEPNP_EPNP Method has been introduced by F.Moreno-Noguer, V.Lepetit and P.Fua in the paper “EPnP: Efficient Perspective-n-Point Camera Pose Estimation”.

The flags below are only available for OpenCV 3

SOLVEPNP_DLS Method is based on the paper of Joel A. Hesch and Stergios I. Roumeliotis. “A Direct Least-Squares (DLS) Method for PnP”.

SOLVEPNP_UPNP Method is based on the paper of A.Penate-Sanchez, J.Andrade-Cetto, F.Moreno-Noguer. “Exhaustive Linearization for Robust Camera Pose and Focal Length Estimation”. In this case the function also estimates the parameters f_x and f_y assuming that both have the same value. Then the cameraMatrix is updated with the estimated focal length.

OpenCV solvePnPRansac

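Using RANSAC reduces the influence of noisy points (outliers). RANSAC repeatedly estimates the parameters from randomly selected points and keeps the estimate that yields the largest number of inliers.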

C++: void solvePnPRansac(InputArray objectPoints, InputArray imagePoints, InputArray cameraMatrix, InputArray distCoeffs, OutputArray rvec, OutputArray tvec, bool useExtrinsicGuess=false, int iterationsCount=100, float reprojectionError=8.0, int minInliersCount=100, OutputArray inliers=noArray(), int flags=ITERATIVE )

Python: cv2.solvePnPRansac(objectPoints, imagePoints, cameraMatrix, distCoeffs[, rvec[, tvec[, useExtrinsicGuess[, iterationsCount[, reprojectionError[, minInliersCount[, inliers[, flags]]]]]]]]) → rvec, tvec, inliers

iterationsCount – The number of times the minimum number of points are picked and the parameters estimated.

reprojectionError – As mentioned earlier in RANSAC the points for which the predictions are close enough are called “inliers”. This parameter value is the maximum allowed distance between the observed and computed point projections to consider it an inlier.

minInliersCount – Number of inliers. If the algorithm at some stage finds more inliers than minInliersCount , it finishes.

inliers – Output vector that contains indices of inliers in objectPoints and imagePoints .

c++

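Converting a rotation matrix to Euler angles:

Reference: https://blog.csdn.net/zzyy0929/article/details/78323363

Yaw: turning the head left or right (shaking); left is positive, right is negative

Pitch: nodding; up is negative, down is positive

Roll: tilting the head sideways; left is negative, right is positive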
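A minimal sketch of one common decomposition follows. It assumes R = Rz · Ry · Rx and returns the angles about X, Y, and Z in degrees. Reading these as pitch, yaw, and roll, and matching the signs listed above, depends on the axis conventions of the camera and the face model, so treat that mapping as an assumption to verify on your own data. The function name is hypothetical.

```python
import numpy as np

def rotation_matrix_to_euler_angles(R):
    """Decompose R = Rz(z) @ Ry(y) @ Rx(x); return (x, y, z) in degrees."""
    R = np.asarray(R, dtype=np.float64)
    sy = np.hypot(R[0, 0], R[1, 0])      # equals |cos(y)|
    if sy > 1e-6:
        x = np.arctan2(R[2, 1], R[2, 2])
        y = np.arctan2(-R[2, 0], sy)
        z = np.arctan2(R[1, 0], R[0, 0])
    else:
        # Gimbal lock: x and z cannot be separated, so z is fixed to 0.
        x = np.arctan2(-R[1, 2], R[1, 1])
        y = np.arctan2(-R[2, 0], sy)
        z = 0.0
    return np.degrees([x, y, z])
```

With an OpenCV-style camera frame (X right, Y down, Z forward), the three values would typically be read as pitch, yaw, and roll respectively.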

python

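The main idea is the same as in the version above, with the following difference:

It adds an implementation that computes the Euler angles from the rotation matrix R.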

https://blog.csdn.net/cdknight_happy/article/details/79975060