Since the output is repeated 4 times, I'd try checking the function where you generate the UVs to read from the GBuffer (mainly the `inUV * 2.0 - 1.0` part).

For example, try `inUV` or `inUV * 0.5 + 0.5`.
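To see why the output repeats four times, here is a minimal Python sketch of the UV math a ray-generation shader typically uses: the pixel center divided by the launch size gives `inUV` in [0, 1], and `d = inUV * 2.0 - 1.0` maps it to [-1, 1] for the ray direction. The sketch assumes the GBuffer is read through a sampler with REPEAT addressing and nearest filtering; the helper names are hypothetical. Reading at `d` instead of `inUV` wraps the [-1, 0] half back onto [0, 1], so each axis shows the image twice, giving 2 × 2 = 4 copies.

```python
import math


def raygen_coords(x, y, width, height):
    """Hypothetical mirror of typical ray-generation UV math.

    Returns (uv, d): uv in [0, 1] is what a GBuffer lookup needs;
    d = uv * 2 - 1 lies in [-1, 1] and is meant only for the ray direction.
    """
    u = (x + 0.5) / width   # pixel center, normalized to [0, 1]
    v = (y + 0.5) / height
    return (u, v), (u * 2.0 - 1.0, v * 2.0 - 1.0)


def repeat_wrap(c):
    """Emulate a REPEAT sampler: keep only the fractional part."""
    return c - math.floor(c)


def sampled_texel(coord, width, height):
    """Texel a REPEAT sampler with nearest filtering would read at a normalized coord."""
    u, v = (repeat_wrap(c) for c in coord)
    return (min(int(u * width), width - 1), min(int(v * height), height - 1))


if __name__ == "__main__":
    W = H = 4
    for x in range(W):
        uv, d = raygen_coords(x, 0, W, H)
        # Columns 0 and 2 (and 1 and 3) read the same texel when sampling at d.
        print(x, "uv ->", sampled_texel(uv, W, H), "d ->", sampled_texel(d, W, H))
```

Sampling at `uv` gives a one-to-one pixel-to-texel mapping; sampling at `d` makes pixel (0, 0) and pixel (2, 2) read the same texel, which is the tiling seen in the screenshot.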

Using `inUV` fixed the bug! Thanks very much!

I want to use hybrid rendering with a GBuffer plus ray tracing, so there are three passes:

- GBuffer pass shaders: `gbuffer.vert`, `gbuffer.frag`
- Ray tracing pass shader: `ray-generation.rgen`
- Blit pass shaders (the same as in the ray tracing example): `screen.vert`, `screen.frag`
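A blit pass like this often draws a single "fullscreen triangle" whose UVs are generated from the vertex index. Assuming `screen.vert` uses that common trick (this is not confirmed by the issue), the Python sketch below reproduces the math. The `* 2.0 - 1.0` step belongs to `gl_Position` only: across the visible screen, clip space [-1, 1] corresponds to UV [0, 1], so the interpolated UV can be used directly as a texture coordinate. The function names are hypothetical.

```python
def fullscreen_triangle_vertex(vertex_index):
    """Python version of the common fullscreen-triangle vertex trick.

    Assumed GLSL equivalent (not taken from the issue's screen.vert):
        outUV = vec2((gl_VertexIndex << 1) & 2, gl_VertexIndex & 2);
        gl_Position = vec4(outUV * 2.0 - 1.0, 0.0, 1.0);
    """
    uv = (float((vertex_index << 1) & 2), float(vertex_index & 2))
    pos = (uv[0] * 2.0 - 1.0, uv[1] * 2.0 - 1.0)  # clip-space position
    return uv, pos


def interpolate(weights):
    """Barycentric interpolation of uv and pos over the three vertices (w = 1, so linear)."""
    verts = [fullscreen_triangle_vertex(i) for i in range(3)]
    uv = tuple(sum(w * v[0][k] for w, v in zip(weights, verts)) for k in range(2))
    pos = tuple(sum(w * v[1][k] for w, v in zip(weights, verts)) for k in range(2))
    return uv, pos


if __name__ == "__main__":
    for i in range(3):
        print(i, fullscreen_triangle_vertex(i))
    # The screen corner at clip (1, 1) receives uv (1, 1).
    print(interpolate((0.0, 0.5, 0.5)))
```

The triangle overshoots the screen (vertices at clip 3), and the rasterizer clips it, so the fragment shader only ever sees UVs in [0, 1].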

The output should be (the scene has one triangle and one plane):

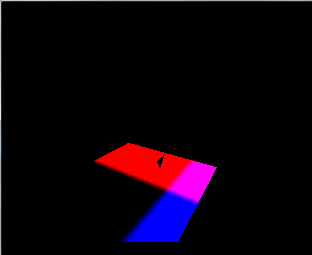

But the actual output is: