I don't know your training settings, such as the number of epochs.

I recommend using fewer epochs, because training that is not quantization-aware can produce extreme weights and activations that push 8-bit quantization outside its effective range. For example, most of the data may lie within ±8 while a few values at the input of some activations reach -1000. Quantization then has to cover a range of 1000, so the Q number is set to -2 and most of the data loses almost all of its resolution.
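To make the resolution loss concrete, here is a minimal sketch in plain Python. It assumes a signed 8-bit power-of-two Q format where the fractional bits are `7 - ceil(log2(max_abs))`; that formula, the helper names, and the sample values are illustrative assumptions, and the exact Q number a given tool picks may differ by a bit from what this sketch computes.

```python
import math

def frac_bits_for_range(max_abs, total_bits=8):
    """Fractional bits for a signed power-of-two Q format covering +-max_abs.

    Assumed convention: integer bits = ceil(log2(max_abs)); one bit is the sign.
    """
    int_bits = math.ceil(math.log2(max_abs))
    return (total_bits - 1) - int_bits

def quantize(x, frac_bits, total_bits=8):
    """Round x onto the Q grid, saturate to the signed range, return the dequantized value."""
    scale = 2.0 ** frac_bits
    q_min, q_max = -(2 ** (total_bits - 1)), 2 ** (total_bits - 1) - 1
    q = max(q_min, min(q_max, round(x * scale)))
    return q / scale

# Made-up activations that sit well inside +-8.
typical = [-7.5, -3.2, -0.4, 0.9, 2.6, 6.1]

fine = frac_bits_for_range(8)       # range set by the typical data
coarse = frac_bits_for_range(1000)  # range set by a single outlier near -1000

for name, bits in (("no outlier", fine), ("with outlier", coarse)):
    step = 2.0 ** -bits
    values = [quantize(v, bits) for v in typical]
    print(f"{name}: frac_bits={bits}, step={step}, quantized={values}")
```

With the outlier setting the range, the step between representable values becomes as large as the typical data itself, so most typical values collapse to 0 or ±8.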

You may try

- Add batchnorm after conv. This constrains the data range.

- Reduce the number of epochs to 10 to see if it improves.

- Enable the "KLD" quantisation method instead of the default "max-min". KLD discards extreme values, but this can sometimes hurt the result.
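The trade-off behind the last suggestion can be sketched as follows. KLD-style calibration typically chooses its clipping threshold by comparing histograms; the sketch below swaps in a much simpler rule (ignore the single largest magnitude) purely to show the effect of discarding extreme values. It is not the KLD algorithm, and the data and helper names are made up for illustration.

```python
import math

def quantize_pow2(x, max_abs, total_bits=8):
    """Quantize x with a signed power-of-two scale chosen to cover +-max_abs."""
    frac_bits = (total_bits - 1) - math.ceil(math.log2(max_abs))
    scale = 2.0 ** frac_bits
    q_min, q_max = -(2 ** (total_bits - 1)), 2 ** (total_bits - 1) - 1
    q = max(q_min, min(q_max, round(x * scale)))  # saturate out-of-range values
    return q / scale

def mean_abs_error(data, max_abs):
    """Average absolute difference between each value and its quantized version."""
    return sum(abs(v - quantize_pow2(v, max_abs)) for v in data) / len(data)

# Made-up activations: 99 values spread over [-8, 8) plus a single outlier.
data = [-8 + 16 * i / 99 for i in range(99)] + [-1000.0]

full_range = max(abs(v) for v in data)            # "max-min" style: cover everything
clipped_range = sorted(abs(v) for v in data)[-2]  # ignore the single largest magnitude

typical = data[:-1]
print("typical-value error, full range:   ", mean_abs_error(typical, full_range))
print("typical-value error, clipped range:", mean_abs_error(typical, clipped_range))
print("outlier after clipping:", quantize_pow2(-1000.0, clipped_range))
```

Clipping gives the typical values a much finer grid, at the cost of saturating the outlier. If that outlier actually matters to the network's output, accuracy can drop, which is the caveat in the bullet above.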

Thank you @majianjia for this library.

I have been running into an issue where the accuracy in the C implementation of my model is far lower than what I am seeing in Python. I have put together a complete example here: Complete NNoM Sample.

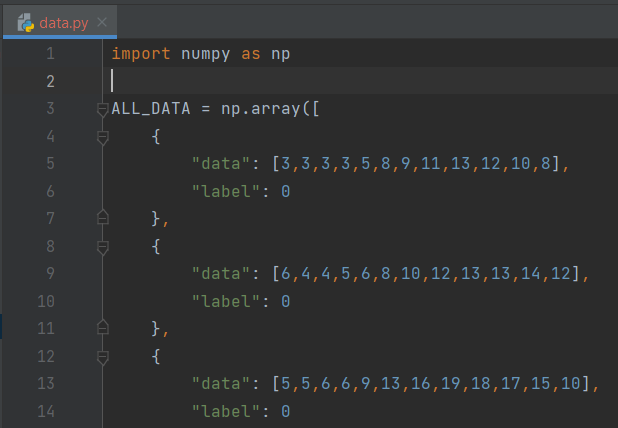

My input data when training is simply 12 values ranging between 0 and 127 inclusive. My output labels are simply 0 and 1. All my data is located in data.py in the linked repo:

My model looks like the following and is located in `model.py` in the linked repo:

My training script is in `main.py` and accepts arguments for the number of epochs to use as well as details about the test/train split. I have been using the following arguments to train for 5 epochs on 80 percent of my data: `python ./python_model/main.py -s .8 -r 42 -e 5`. My `main.py` script looks like the following:

When running this script I am seeing the following loss and accuracy:

However, when I run the model in C with the exported `weights.h`, the accuracy I am seeing is far lower:

My main.c program that runs the model in C looks like the following and is in the linked repo:

The linked repo contains PowerShell scripts for building the model in Python, building the C application, and running the C application. Would you be able to help me resolve the discrepancy between the two implementations? Any help would be appreciated. Thank you again.