http://www.makehumancommunity.org/wiki/Documentation:Basemesh

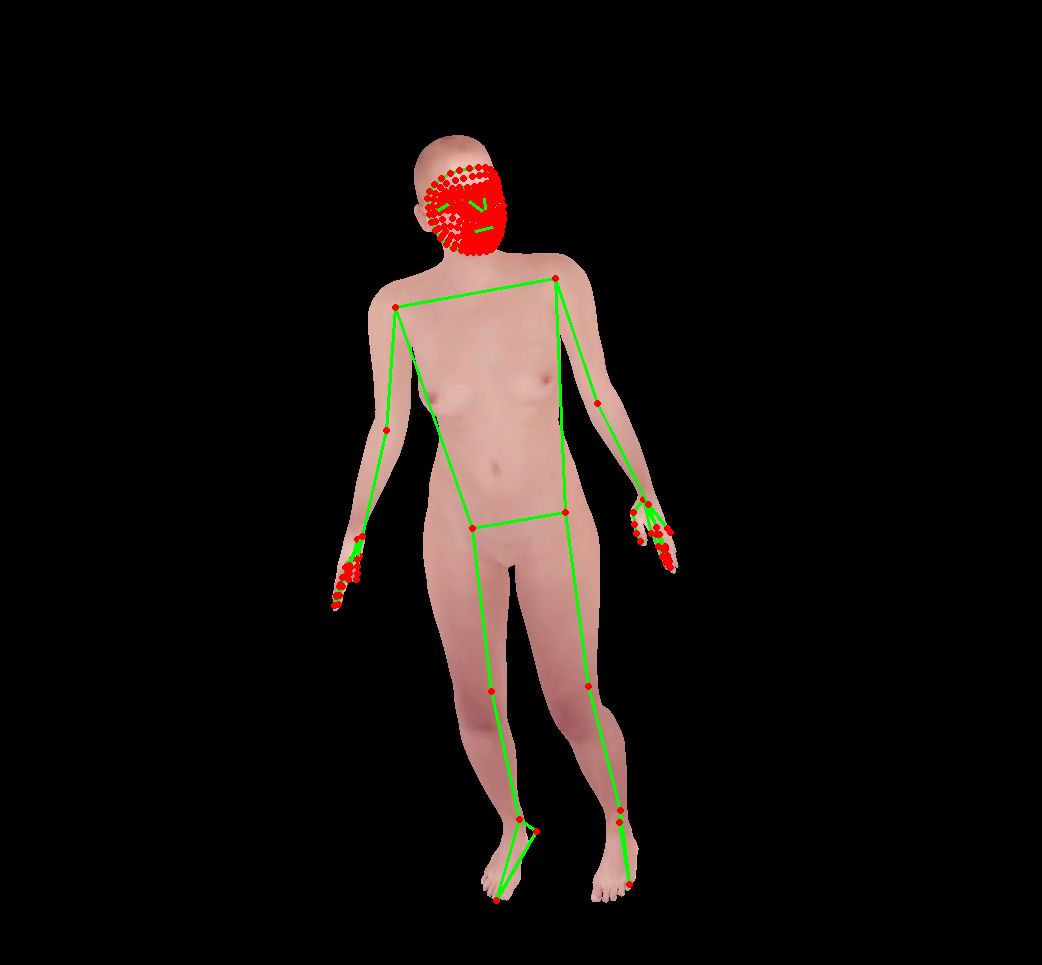

In a nutshell, this issue requests adding Xposition, Yposition and Zposition channels to the exported BVH files for the yellow lines/joints seen in the image above!

Opened by AmmarkoV 3 years ago.

First of all, I would like to begin this long message with a big thank you for your amazing work!

To give some context and to state my issue as precisely as I can, I will first give a short overview of my situation :) I am a PhD student from Greece, and my PhD deals with training neural networks that observe plain RGB images and videos of humans and derive their 3D skeleton in the high-level BVH format.

Ideally, in the future and in the context of MakeHuman, this kind of technology could be used as a tool that lets a user sit in front of their webcam, move, and then automatically get a MakeHuman animation mirroring their actions.

There is still work to be done (especially accuracy-wise) to reach this goal. However, these are videos of what I have managed so far with bodies and hands (not faces, which are my next step and the reason for opening this issue).

For the last part of my PhD, and to complete the puzzle of total monocular capture of the human pose (including the face), working on a BVH skeleton alone is unfortunately not enough. The BVH joints are too sparse to give sufficient information for the required level of detail (this is NN output for a head, and this is for a hand, for comparison).

So, although for bodies and hands I used a raw BVH to derive 2D joint coordinates for training my NN, for regressing faces I am unfortunately forced to also render a skinned model (I intend to use MakeHuman for this task). This model provides full skinned meshes from which I can sample the points I need.
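Deriving 2D joint or landmark coordinates from 3D points amounts to projecting them through a camera model. Below is a minimal sketch that assumes an ideal pinhole camera with no lens distortion and points already expressed in camera coordinates (z pointing away from the camera). The function name `project_points` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative and not taken from any existing codebase.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def project_points(points: List[Point3], fx: float, fy: float,
                   cx: float, cy: float) -> List[Point2]:
    """Project camera-space 3D points to 2D pixel coordinates (ideal pinhole model)."""
    projected: List[Point2] = []
    for x, y, z in points:
        if z <= 0:
            raise ValueError("point is at or behind the camera plane (z must be > 0)")
        # Perspective divide by depth, then scale by focal length and shift to the principal point
        projected.append((fx * x / z + cx, fy * y / z + cy))
    return projected


if __name__ == "__main__":
    # Hypothetical face-vertex positions in camera space (metres) and made-up intrinsics
    vertices = [(0.0, 0.0, 2.0), (0.1, -0.05, 2.0)]
    print(project_points(vertices, fx=500.0, fy=500.0, cx=320.0, cy=240.0))
```

In a real pipeline the mesh vertices would first be moved into camera space by the renderer's view matrix, and the same intrinsics would have to match whatever camera the network is trained to expect.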

In a nutshell, I am trying to animate a MakeHuman 3D model (including a face!) using a BVH file and get back the 2D landmarks of the animated model. I then want to feed these to a neural network as ground truth, so that it learns to go backwards from an observed real person to their correct 3D BVH pose.

Having given this intro, which hopefully sheds some light on what I am attempting to do, the next step is to explain my issue!

I can correctly export a MakeHuman model to a .dae (OpenCOLLADA) file (Bone orientation: Local = Global) using the export functionality and the "CMU plus face" rig that I contributed to MakeHuman, seen below.

I can also animate the exported MakeHuman .dae model using an arbitrary BVH file and my codebase. For example, using 01_02.bvh from the CMU BVH dataset, I can render this animation with my OpenGL 3 renderer (it can be compiled and run using this bash script if you want to try it).
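Driving a skinned mesh from BVH data can be sketched as forward kinematics followed by linear blend skinning. The plain-Python sketch below is generic and is not MakeHuman's or OpenCOLLADA's code. It assumes column vectors, Euler rotations in degrees composed in the order the CHANNELS line lists them, and (as an assumption) that a joint's per-frame position channels, when present, replace its OFFSET. The joint names, offsets and inverse bind matrix in the demo are hypothetical toy values.

```python
import math
from typing import List, Optional, Sequence, Tuple

Mat4 = List[List[float]]
Vec3 = Tuple[float, float, float]


def matmul(a: Mat4, b: Mat4) -> Mat4:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(t: Vec3) -> Mat4:
    return [[1.0, 0.0, 0.0, t[0]], [0.0, 1.0, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]], [0.0, 0.0, 0.0, 1.0]]


def rotation(axis: str, degrees: float) -> Mat4:
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    if axis == "X":
        return [[1.0, 0.0, 0.0, 0.0], [0.0, c, -s, 0.0], [0.0, s, c, 0.0], [0.0, 0.0, 0.0, 1.0]]
    if axis == "Y":
        return [[c, 0.0, s, 0.0], [0.0, 1.0, 0.0, 0.0], [-s, 0.0, c, 0.0], [0.0, 0.0, 0.0, 1.0]]
    if axis == "Z":
        return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
    raise ValueError(f"unknown axis {axis!r}")


def local_transform(offset: Vec3, rotations: Sequence[Tuple[str, float]],
                    position: Optional[Vec3] = None) -> Mat4:
    """T(offset, or the per-frame position if given) * R1 * R2 * ... in CHANNELS order."""
    m = translation(position if position is not None else offset)
    for axis, degrees in rotations:
        m = matmul(m, rotation(axis, degrees))
    return m


def transform_point(m: Mat4, p: Vec3) -> Vec3:
    x, y, z = p
    return (m[0][0] * x + m[0][1] * y + m[0][2] * z + m[0][3],
            m[1][0] * x + m[1][1] * y + m[1][2] * z + m[1][3],
            m[2][0] * x + m[2][1] * y + m[2][2] * z + m[2][3])


def skin_vertex(rest: Vec3, influences: Sequence[Tuple[Mat4, Mat4, float]]) -> Vec3:
    """Linear blend skinning: v' = sum_i w_i * G_i * B_i^-1 * v.

    Each influence is (posed global joint matrix G_i, inverse bind matrix
    B_i^-1, weight w_i); the weights are expected to sum to 1.
    """
    out = [0.0, 0.0, 0.0]
    for pose_global, inverse_bind, weight in influences:
        p = transform_point(matmul(pose_global, inverse_bind), rest)
        for i in range(3):
            out[i] += weight * p[i]
    return (out[0], out[1], out[2])


if __name__ == "__main__":
    root = local_transform((0.0, 0.0, 0.0), [])  # unrotated root at the origin
    # Inverse of the jaw's bind matrix T(0, -1, 0.5) (bind pose is a pure translation here)
    jaw_inverse_bind = translation((0.0, 1.0, -0.5))
    # Posed frame: jaw rotated 90 degrees about X; position channels move it to y = -1.2
    jaw_pose = matmul(root, local_transform((0.0, -1.0, 0.5), [("X", 90.0)],
                                            position=(0.0, -1.2, 0.5)))
    print(skin_vertex((0.0, -1.0, 1.5), [(jaw_pose, jaw_inverse_bind, 1.0)]))
```

With all of the weight on one joint, the vertex rigidly follows that joint. With weights split between joints, the result is the weighted average of each joint's transformed copy of the vertex.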

Using the same "CMU plus face" rig/skeleton and a T-pose body, selecting for example the cry01 facial expression and exporting this setup to a BVH file yields this cry01.bvh file. If, however, I switch to the neutral facial expression and save a BVH file (this time neutral.bvh), notice that the pose change happens in the OFFSET (non-motion) part of the BVH file. This means that a new BVH hierarchy must be produced for every different expression, which doesn't make sense.
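One quick way to confirm that two such exports differ only in their HIERARCHY is to compare the per-joint OFFSET vectors of neutral.bvh and cry01.bvh. The sketch below is a minimal parser written for illustration. `read_offsets` and `changed_offsets` are hypothetical helpers, not MakeHuman functions. The parser assumes the usual BVH layout with one ROOT/JOINT/OFFSET keyword per line and skips End Site offsets. The two-joint skeleton is toy data.

```python
from typing import Dict, Optional, Tuple

Offset = Tuple[float, float, float]


def read_offsets(bvh_text: str) -> Dict[str, Offset]:
    """Map each ROOT/JOINT name in the HIERARCHY section to its OFFSET."""
    offsets: Dict[str, Offset] = {}
    current: Optional[str] = None  # joint whose OFFSET line we are waiting for
    for line in bvh_text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "MOTION":
            break  # OFFSETs only appear in the HIERARCHY section
        if tokens[0] in ("ROOT", "JOINT"):
            current = tokens[1]
        elif tokens[0] == "End":
            current = None  # the next OFFSET belongs to an unnamed End Site
        elif tokens[0] == "OFFSET" and current is not None:
            x, y, z = (float(t) for t in tokens[1:4])
            offsets[current] = (x, y, z)
            current = None
    return offsets


def changed_offsets(bvh_a: str, bvh_b: str,
                    tol: float = 1e-6) -> Dict[str, Tuple[Offset, Offset]]:
    """Return {joint: (offset_in_a, offset_in_b)} for joints whose OFFSET differs."""
    a, b = read_offsets(bvh_a), read_offsets(bvh_b)
    return {
        name: (a[name], b[name])
        for name in a
        if name in b and any(abs(p - q) > tol for p, q in zip(a[name], b[name]))
    }


# Toy stand-ins for neutral.bvh and cry01.bvh (hypothetical joints and values)
NEUTRAL = """HIERARCHY
ROOT head
{
  OFFSET 0 0 0
  CHANNELS 3 Zrotation Xrotation Yrotation
  JOINT jaw
  {
    OFFSET 0 -1 0.5
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0 -0.5 0
    }
  }
}
"""
CRY = NEUTRAL.replace("OFFSET 0 -1 0.5", "OFFSET 0 -1.2 0.5")

if __name__ == "__main__":
    print(changed_offsets(NEUTRAL, CRY))
```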

So, the first issue, which I believe can be easily resolved, is a patch to the MakeHuman BVH exporter so that it also encodes the facial OFFSET information as part of the MOTION section of the BVH file. This can (IMHO) be implemented easily in /makehuman/shared/bvh.py#L415 with an extra check that also outputs "Xposition", "Yposition", "Zposition" channels on the BVH joints of the face!

As a current workaround, I have already made a standalone utility that converts the MakeHuman BVH exports and adds these extra channels myself. This gives me files like "merged_cry01.bvh" from the original cry01.bvh file mentioned earlier. You can try opening them in Blender to see that they are valid BVH.

With this change, as the frames of the BVH file are parsed, the "Xposition", "Yposition", "Zposition" components of the motion vector override the OFFSET coordinates for that particular frame. This is required in order to represent animated facial expressions, for example interpolating from neutral.bvh to cry01.bvh over X MOTION frames in the same file, instead of producing X different .bvh files! The OFFSET would then always contain the neutral.bvh face (in the same manner that the body/hand offsets contain the T-pose), and any facial animation would happen in the MOTION frames (in the same manner that body animation happens there).
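The channel-adding conversion and the neutral-to-expression interpolation described above can be sketched as a single text transformation. This is not the author's actual utility and not a patch to `bvh.py`. It is a hypothetical `add_position_channels` helper that assumes the usual BVH layout (OFFSET before CHANNELS in each joint block, one MOTION row per frame). It prepends the three position channels to the selected joints and inserts the matching values into every frame. Those values are either the unchanged OFFSET or a linear blend from the OFFSET (first frame) to a target offset (last frame). The sample skeleton and values are toy data.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Offset = Tuple[float, float, float]
POSITION_CHANNELS = ["Xposition", "Yposition", "Zposition"]


def add_position_channels(bvh_text: str, joints: Sequence[str],
                          target: Optional[Dict[str, Offset]] = None) -> str:
    """Prepend Xposition/Yposition/Zposition channels to the named joints."""
    wanted = set(joints)
    lines = bvh_text.splitlines()
    out: List[str] = []
    rest: Dict[str, Offset] = {}  # OFFSET of each joint (the neutral rest value)
    # One entry per CHANNELS line, in file order: (original channel count,
    # joint name if we extended it, else None). Frame values follow this order.
    layout: List[Tuple[int, Optional[str]]] = []
    current: Optional[str] = None
    i = 0
    while i < len(lines) and lines[i].strip() != "MOTION":
        line = lines[i]
        tokens = line.split()
        if tokens and tokens[0] in ("ROOT", "JOINT"):
            current = tokens[1]
        elif tokens and tokens[0] == "End":
            current = None  # End Sites have an OFFSET but no channels
        elif tokens and tokens[0] == "OFFSET" and current is not None:
            x, y, z = (float(t) for t in tokens[1:4])
            rest[current] = (x, y, z)
        elif tokens and tokens[0] == "CHANNELS" and current is not None:
            names = tokens[2:]
            extend = current in wanted and "Xposition" not in names
            if extend:
                names = POSITION_CHANNELS + names
                indent = line[: len(line) - len(line.lstrip())]
                line = f"{indent}CHANNELS {len(names)} {' '.join(names)}"
            layout.append((int(tokens[1]), current if extend else None))
        out.append(line)
        i += 1

    motion = lines[i:]
    out.extend(motion[:3])  # "MOTION", "Frames: N", "Frame Time: t" stay unchanged
    frames = [row for row in motion[3:] if row.strip()]
    for k, frame in enumerate(frames):
        values = frame.split()
        t = k / (len(frames) - 1) if len(frames) > 1 else 0.0
        new_values: List[str] = []
        pos = 0
        for count, joint in layout:
            if joint is not None:
                start = rest[joint]
                end = (target or {}).get(joint, start)
                new_values += [f"{s + t * (e - s):.6f}" for s, e in zip(start, end)]
            new_values += values[pos:pos + count]  # the joint's original channel values
            pos += count
        out.append(" ".join(new_values))
    return "\n".join(out) + "\n"


SAMPLE = """HIERARCHY
ROOT head
{
  OFFSET 0 0 0
  CHANNELS 3 Zrotation Xrotation Yrotation
  JOINT jaw
  {
    OFFSET 0 -1 0.5
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0 -0.5 0
    }
  }
}
MOTION
Frames: 3
Frame Time: 0.04
0 0 0 10 0 0
0 0 0 10 0 0
0 0 0 10 0 0
"""

if __name__ == "__main__":
    print(add_position_channels(SAMPLE, ["jaw"], {"jaw": (0.0, -1.2, 0.5)}))
```

The OFFSET lines are left untouched, so the hierarchy still describes the neutral rest pose; only the selected joints' CHANNELS lines and the MOTION rows change. How an importer interprets position channels on non-root joints (replacing versus adding to the OFFSET) can differ between tools, so the output is worth checking in the target application.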

My second, and unfortunately more complex, issue has to do with performing the correct 4x4 bone transformations for facial expressions. When animating a MakeHuman skeleton, for everything except the face, all BVH "changes" are encoded in rotation matrices that can be directly applied to the skinned mesh vertices to perform the required transformations! In my regular transform code I just copy the 4x4 rotation matrix of the specific BVH joint to the corresponding OpenCOLLADA bone. On the face, however, applying the BVH position 4x4 matrices yields erroneous results.

What I have currently (and "erroneously") ended up with, which kind of works, is the following. I use neutral.bvh (the neutral facial expression) as the default OFFSET value for each joint and encode the target expression (e.g. cry01.bvh) in the motion vector. I then try to "nudge" the vertices, translating them by the distance of each target BVH joint from the neutral one that I store as the static offset. This of course does not work properly, but it vaguely moves in accordance with the facial expression and does not produce crazy visual results! Since I am not very familiar with the MakeHuman codebase, I would very much appreciate it if you could point me to how the MakeHuman BVH OFFSET data corresponds to the rendered mesh, since I cannot seem to find it or derive it on my own.

Testing through Blender, I think my transforms are OK, so please disregard the second issue :)

Thank you once again for your work, and thank you for hopefully reading through this huge post describing my problem! Looking forward to your input!

Ammar Qammaz a.k.a. AmmarkoV