FYI, this is also called "beam racing" for others who are familiar with this term.

It's currently used in some applications like VR: https://www.imgtec.com/blog/reducing-latency-in-vr-by-using-single-buffered-strip-rendering/

That article has some neat animated graphics at low granularity (4 frameslices) that will help make it easier to understand the concept of beam-racing techniques. Fine granularity is quite doable (10-scanline frameslices and smaller) with tight racebehind.

The algorithm would be much simpler and lower-bandwidth with front buffer rendering (just add rasters), but the bandwidth of VSYNC OFF frame buffer transmission is now high enough on modern computers to emulate front buffer rendering without access to the front buffer -- even down to single-emulator-scanline granularity (though realistically, with a forgiving jitter margin of a few scanlines).

An algorithm for a tearingless VSYNC OFF that creates a lagless VSYNC ON.

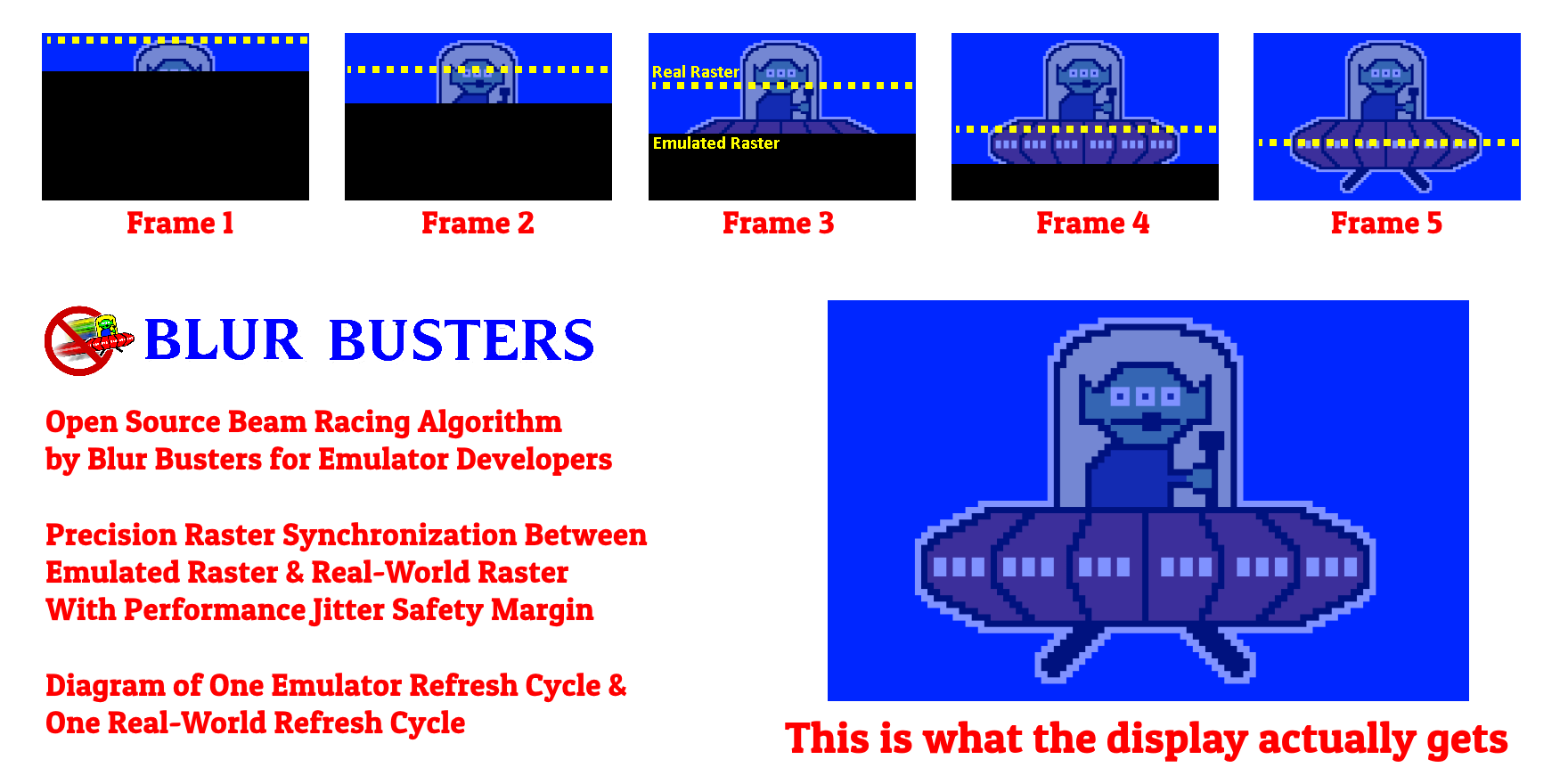

An approximate synchronization of the real-world raster to the emulated raster, with a forgiving jitter margin (<1ms).

Essentially, a de facto rolling-window scanline buffer achieved via high-buffer-swap-rate VSYNC OFF (redundant buffer swaps) -- with the emulator raster scanning ahead of the real-world raster, with enough padding margin for performance jitter that scanline-exact sync is unnecessary -- just approximate raster sync (within ~0.1ms to ~0.2ms is practical with C/C++/asm programming).
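To make the rolling-window idea concrete, here is a minimal Python sketch of one frame of the loop. It is an illustration under assumptions, not the actual implementation: `beam_race_frame`, `read_raster`, `render_slice`, `present` and `make_fake_raster` are hypothetical names, the raster source is a fake counter, and vertical blanking is modelled only as negative scanline numbers.

```python
def beam_race_frame(height, slice_height, margin, read_raster, render_slice, present):
    """Run one emulated frame as a series of frameslices.

    read_raster()         -> current real-world scanline (hypothetical callback)
    render_slice(top, bt) -> emulate scanlines [top, bt) into the frame buffer
    present()             -> VSYNC OFF buffer swap (hypothetical callback)
    Returns a log of (slice_top, raster_at_present) pairs.
    """
    log = []
    for top in range(0, height, slice_height):
        bottom = min(top + slice_height, height)
        # The emulator raster runs ahead: render the slice first.
        render_slice(top, bottom)
        # Busy-wait until the real raster is within `margin` scanlines
        # above this slice, so the swap lands just ahead of the beam.
        raster = read_raster()
        while raster < top - margin:
            raster = read_raster()
        present()
        log.append((top, raster))
    return log


def make_fake_raster(start=-3):
    """Fake raster for demonstration: advances one scanline per poll.

    Negative values stand in for blanking lines before the visible frame
    (an assumption made only for this sketch).
    """
    state = {"line": start - 1}

    def read():
        state["line"] += 1
        return state["line"]

    return read


if __name__ == "__main__":
    log = beam_race_frame(
        height=20, slice_height=5, margin=2,
        read_raster=make_fake_raster(),
        render_slice=lambda top, bottom: None,
        present=lambda: None,
    )
    for top, raster in log:
        print(f"slice at {top:2d} presented with real raster at {raster}")
```

In this toy run every swap happens while the real raster is still above the slice being delivered, which is what keeps the tearline out of sight. A real implementation would also need to handle raster wraparound and a missed deadline.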

There are raster-polling functions on some platforms (e.g. RasterStatus.ScanLine as well as D3DKMTGetScanLine() ...) to let you know of the real-world raster. (Other platforms may need to extrapolate based on time intervals between VSYNC heartbeats). This can be used to synchronize to the emulator's raster within a rolling-window margin.
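For platforms without a raster register, the extrapolation could look roughly like this Python sketch. The function name and parameters are hypothetical, and it assumes the scan rate is constant and that the vertical total (visible plus blanking lines) is known or measured.

```python
def estimate_scanline(now, last_vsync, refresh_period, total_scanlines):
    """Guess which scanline the display is currently scanning out.

    now, last_vsync: timestamps in seconds (e.g. from time.perf_counter()).
    refresh_period:  measured seconds between two VSYNC heartbeats.
    total_scanlines: vertical total INCLUDING blanking lines (assumed known).
    """
    # Fraction of the current refresh cycle that has elapsed, wrapped into [0, 1).
    phase = ((now - last_vsync) % refresh_period) / refresh_period
    # Scale the phase to a scanline index counted from the VSYNC timestamp.
    return int(phase * total_scanlines)


if __name__ == "__main__":
    import time

    last_vsync = time.perf_counter()  # stand-in for a real VSYNC timestamp
    line = estimate_scanline(time.perf_counter(), last_vsync, 1 / 60, 1125)
    print("estimated scanline:", line)
```

Where scanline 0 falls relative to the VSYNC timestamp depends on the platform, so a real implementation would need a calibration offset, and the jitter margin has to absorb the estimation error.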

Simplified diagram:

Long Explanation: https://www.blurbusters.com/blur-busters-lagless-raster-follower-algorithm-for-emulator-developers/

This is a 1/5th frame version, but in reality, frame slices can be as tiny as 1 or 2 scanlines tall - or a few scanlines tall, computer-performance-permitting.

Tests show that I can successfully do roughly 7,000 redundant buffer swaps per second (one swap per ~2 NTSC scanlines) of 2560x1440 framebuffers on a GTX 1080 Ti, in C#, a high-level garbage-collected language.

With proper programming technique, darn near 1:1 sync of real raster to virtual raster is possible now. But exact sync is unnecessary due to the jitter margin, and the raster position can be estimated (to within a ~0.5-1ms margin) by extrapolating between VSYNC timings if the platform has no raster-scanline register available.

Even C# was able to do this within a ~0.2-0.3ms jitter margin, except during garbage-collection events (which cause a brief surge of tearing artifacts).

But C++ or C or assembler would have no problem, and can probably do it within <0.1ms -- permitting sync within +/- 1 scanline of NTSC (15.6 kHz scan rate). Input lag will be roughly 2x the jitter margin you choose -- but if your performance is good enough for 1-emulated-scanline sync, that's literally 2/15625 second of input lag (less than 0.2ms) -- all achieved with standard Direct3D or OpenGL APIs during VSYNC OFF operation, on platforms that give you access to polling the graphics card's current-raster register. The jitter margin can be automatic-adaptive or set in a configuration file.
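The arithmetic behind those figures can be checked with a few lines of Python. This is only a worked calculation using the numbers quoted above (15625 scanlines per second, the 2x-margin rule of thumb, ~7,000 swaps per second); the function names are made up for illustration.

```python
NTSC_LINE_RATE_HZ = 15625  # scanlines per second, the figure used above


def scanline_time_ms(line_rate_hz=NTSC_LINE_RATE_HZ):
    """Duration of one scanline in milliseconds."""
    return 1000.0 / line_rate_hz


def input_lag_ms(margin_scanlines, line_rate_hz=NTSC_LINE_RATE_HZ):
    """Rule of thumb from the text: input lag is roughly 2x the jitter margin."""
    return 2 * margin_scanlines * scanline_time_ms(line_rate_hz)


def scanlines_per_swap(swaps_per_second, line_rate_hz=NTSC_LINE_RATE_HZ):
    """How many emulated scanlines elapse between consecutive buffer swaps."""
    return line_rate_hz / swaps_per_second


if __name__ == "__main__":
    print(f"one scanline:          {scanline_time_ms():.3f} ms")
    print(f"lag at 1-line margin:  {input_lag_ms(1):.3f} ms")
    print(f"lines per swap @7000:  {scanlines_per_swap(7000):.2f}")
```

A one-scanline margin works out to about 0.128ms of lag, and 7,000 swaps per second corresponds to a little over 2 scanlines per swap.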

Can be made compatible with HLSL (but a larger jitter margin will be essential, e.g. 1ms granularity rather than 0.1ms granularity) since you're forcing the GPU to continually reprocess HLSL. One performance optimization would be to modify the HLSL to process only one frameslice's worth at a time, but this would be a difficult rearchitecting, I'd imagine.

Most MAME emulators emulate graphics in a raster-based fashion, and would be compatible with this lagless VSYNC ON workflow, with some minor (eventual, long-term) architectural changes to provide the necessary hooks for mid-frame busywaiting on realworld raster + mid-frame buffer swaps.

To read more about this algorithm and its programming considerations, see https://www.blurbusters.com/blur-busters-lagless-raster-follower-algorithm-for-emulator-developers/