After some trial and error, I found that the problem is probably linked to the `invokeOnCompletion` handler in `bindScopeCancellationToCall` in CallExts.kt, because the memory leak disappears when the handler is commented out:

```kotlin
internal fun CoroutineScope.bindScopeCancellationToCall(call: ClientCall<*, *>) {
    val job = coroutineContext[Job]
        ?: error("Unable to bind cancellation to call because scope does not have a job: $this")

    //job.invokeOnCompletion { error ->
    //    if (job.isCancelled) {
    //        call.cancel(error?.message, error)
    //    }
    //}
}
```
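One possible direction, which I have not verified against kroto+, would be to keep the `DisposableHandle` that `invokeOnCompletion` returns and dispose it once the call has finished, so the handler no longer stays registered on the long-lived job. The sketch below only illustrates the idea: `FakeCall` and `bindCancellation` are hypothetical stand-ins (not kroto+ or gRPC APIs), so that it needs nothing beyond kotlinx.coroutines.

```kotlin
import kotlinx.coroutines.CoroutineScope
import kotlinx.coroutines.DisposableHandle
import kotlinx.coroutines.Job
import kotlinx.coroutines.runBlocking

// Hypothetical stand-in for io.grpc.ClientCall; it only records whether cancel() was called.
class FakeCall {
    var cancelled = false
        private set

    fun cancel(message: String?, cause: Throwable?) {
        cancelled = true
    }
}

// Same binding as in CallExts.kt, except that the handle is returned
// so the caller can unregister the handler when the call is done.
fun CoroutineScope.bindCancellation(call: FakeCall): DisposableHandle {
    val job = coroutineContext[Job]
        ?: error("Unable to bind cancellation to call because scope does not have a job: $this")
    return job.invokeOnCompletion { error ->
        if (job.isCancelled) {
            call.cancel(error?.message, error)
        }
    }
}

fun main() = runBlocking {
    repeat(10_000) {
        val call = FakeCall()
        val handle = bindCancellation(call)
        try {
            // ... the unary call would be performed here ...
        } finally {
            // Assumption: disposing the handle removes the per-call handler from the job.
            handle.dispose()
        }
    }
}
```

Whether this actually removes the retained references would need to be confirmed with a heap dump against the reproduction linked in this report.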

It looks like there is a memory leak in kroto+ when many unary calls are made inside a single runBlocking block, reproduced here: https://github.com/blachris/kroto-plus/commit/223417b1a05294a0387d13f421c0fa8aec4c477d

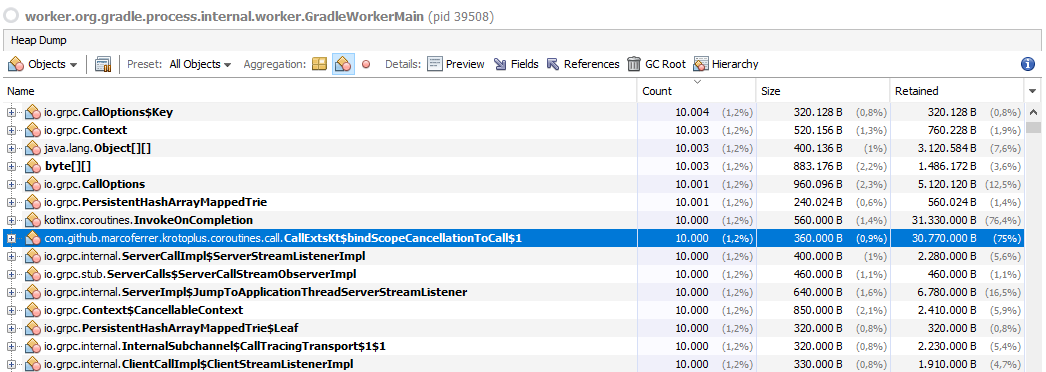

When you take a heap dump before exiting the runBlocking block, you will see a number of instances that scales exactly with the number of calls made, for example here after 10000 completed, sequential calls:

It seems there is an unbroken chain of references with `InvokeOnCompletion` through every single call made. After the runBlocking block, the entire clutter is cleaned up, so as a workaround one can regularly exit and re-enter runBlocking. However, I often see main functions that wrap everything in a single runBlocking block, which will reliably run into this problem over time.
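To make the pattern and the workaround concrete, here is a minimal sketch. It is not the code from the reproduction: `unaryCall` is a hypothetical placeholder for a kroto+ stub call, and the function names and batch size are my own choices. With the placeholder nothing leaks, so the sketch only shows the structure of the two variants.

```kotlin
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.yield

// Hypothetical placeholder for a kroto+ unary stub call.
suspend fun unaryCall(i: Int): Int {
    yield()
    return i
}

// The pattern described in this report: one long-lived runBlocking block around every call.
fun singleBlockMain(total: Int): Int = runBlocking {
    var done = 0
    repeat(total) {
        unaryCall(it)
        done++
    }
    done
}

// The workaround: leave and re-enter runBlocking after every batchSize calls,
// so that whatever accumulated on the block's job can be cleaned up.
fun batchedMain(total: Int, batchSize: Int): Int {
    var done = 0
    while (done < total) {
        val n = minOf(batchSize, total - done)
        val offset = done
        runBlocking {
            repeat(n) { unaryCall(offset + it) }
        }
        done += n
    }
    return done
}

fun main() {
    println(batchedMain(total = 10_000, batchSize = 1_000))
}
```

The batch size trades memory held per block against the overhead of restarting runBlocking; the value above is arbitrary.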

Could you please check whether this issue can be avoided in kroto+, or whether it is caused by Kotlin's runBlocking?