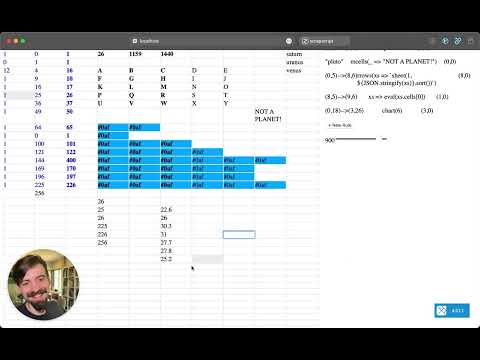

🧮 Scrapsheets demo 🎥 Why Star Trek's Graphic Design Makes Sense 🔌 Visual Neural Networks

Two Minute Week

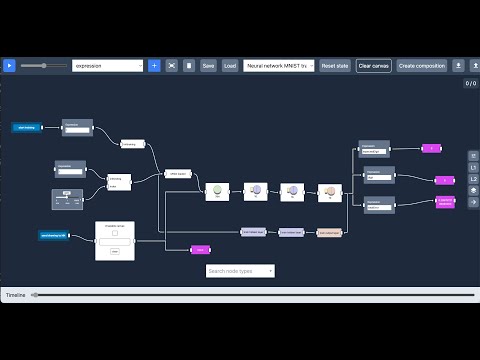

🔌🎥 Neural network training using the MNIST dataset in code-flow-canvas via Maikel van de Lisdonk

After lots of reading (books and other people's code), watching videos, thinking, asking ChatGPT for help (it needed help itself), experimenting and frustration, I finally managed to get a neural network working, including training with backpropagation, in my visual programming system!! You can see a small video where I demo it here

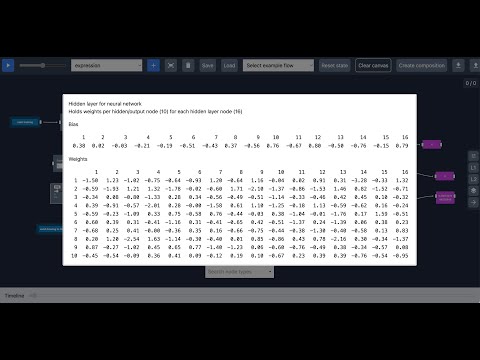

The results are far from perfect, and neither is the visual flow itself. So far I have only used a subset of MNIST to train (9000 images), and on every training iteration the weights are updated per training image. The network can surely be improved by using a different layer setup and configuration parameters (a different learning rate and activation / cost functions). From a visual programming perspective there are also lots of things to improve: currently a lot of the needed functionality is inside the nodes instead of being visible in the flow. So the neural and training nodes are black boxes, as is the node that loads the MNIST dataset and handles some of the logic in the training process. I want to change this in the near future though. You currently can't even see the resulting weights and biases.
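For readers who want to see what "weights are updated per training image" means in code, here is a minimal sketch in plain Python. It is not taken from code-flow-canvas: the function names, the sigmoid activation, the squared error cost and the layer sizes are all assumptions chosen for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def make_layer(n_in, n_out, rng):
    """Return (weights, biases) for one dense layer (hypothetical helper)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(layers, x):
    """Return the activations of every layer, starting with the input itself."""
    activations = [x]
    for weights, biases in layers:
        x = [sigmoid(sum(w * a for w, a in zip(row, x)) + b)
             for row, b in zip(weights, biases)]
        activations.append(x)
    return activations

def train_one(layers, x, target, lr):
    """One backpropagation step for a single sample; updates weights in place.

    Assumes sigmoid activations and a squared error cost.
    Returns the cost measured before the update.
    """
    activations = forward(layers, x)
    out = activations[-1]
    # Output-layer error term: dCost/dOutput times the sigmoid derivative.
    delta = [(o - t) * o * (1.0 - o) for o, t in zip(out, target)]
    for i in reversed(range(len(layers))):
        weights, biases = layers[i]
        prev = activations[i]
        prev_delta = None
        if i > 0:
            # Push the error back one layer before changing these weights.
            prev_delta = [
                sum(weights[j][k] * delta[j] for j in range(len(delta)))
                * prev[k] * (1.0 - prev[k])
                for k in range(len(prev))
            ]
        for j, d in enumerate(delta):
            for k, a in enumerate(prev):
                weights[j][k] -= lr * d * a
            biases[j] -= lr * d
        delta = prev_delta
    return sum((o - t) ** 2 for o, t in zip(out, target)) / 2.0

# Usage: a toy 2-3-2 network; a real MNIST setup would use 784 inputs and 10 outputs.
rng = random.Random(0)
layers = [make_layer(2, 3, rng), make_layer(3, 2, rng)]
data = [([0.0, 1.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]
for epoch in range(500):
    for x, target in data:  # weights change after every single sample
        train_one(layers, x, target, lr=0.5)
```

Updating after every sample is usually called stochastic gradient descent; averaging the gradients over a small batch of images before each update is a common alternative that tends to give smoother training.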

Hopefully it is clear from the neural node types how the neural network is structured: it shows the number of nodes in the layer and an icon illustrating whether a node represents an input, hidden or output layer.

In the video I show a slow and a fast run of the training process: by moving the speed slider to the right you can run the flow without animations; otherwise it takes too long.

There's also a custom node type that can be used to draw a digit manually and provide the digit that the drawing represents, for the purpose of calculating the error cost/loss.
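As a rough illustration of how a provided digit can be turned into an error value, the sketch below one-hot encodes the label and compares it with the network's ten outputs. The mean squared error cost and all function names here are assumptions; the post does not say which cost function the node uses.

```python
def one_hot(digit, classes=10):
    """Encode a digit label as a target vector, e.g. 3 -> 1.0 at index 3."""
    if not 0 <= digit < classes:
        raise ValueError("digit out of range")
    return [1.0 if i == digit else 0.0 for i in range(classes)]

def mean_squared_error(output, target):
    """Average squared difference between network output and target (assumed cost)."""
    return sum((o - t) ** 2 for o, t in zip(output, target)) / len(output)

def predicted_digit(output):
    """Index of the largest output value, i.e. the network's guess."""
    return max(range(len(output)), key=lambda i: output[i])

# Usage with a made-up output vector for a drawing labelled as 3.
output = [0.05, 0.02, 0.10, 0.70, 0.01, 0.03, 0.02, 0.04, 0.02, 0.01]
cost = mean_squared_error(output, one_hot(3))
guess = predicted_digit(output)
```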

Anyway, for now I am happy with the result. More to follow in the future :-)
