The samples you’re showing seem to be from super early in training (3000 iterations). Do you have samples from later on?

On Fri, Sep 21, 2018 at 8:23 AM zyoohv notifications@github.com wrote:

I run your code in cifar10, but the result seems not as good as our expected.

- system information:

system: debian 8 python: python2 pytorch: torch==0.3.1

- run command:

$python main.py --dataset cifar10 --dataroot ~/.torch/datasets --cuda

- output part:

[24/25][735/782][3335] Loss_D: -1.287177 Loss_G: 0.642245 Loss_D_real: -0.651701 Loss_D_fake 0.635477 [24/25][740/782][3336] Loss_D: -1.269792 Loss_G: 0.621307 Loss_D_real: -0.657210 Loss_D_fake 0.612582 [24/25][745/782][3337] Loss_D: -1.250543 Loss_G: 0.636843 Loss_D_real: -0.667046 Loss_D_fake 0.583497 [24/25][750/782][3338] Loss_D: -1.196252 Loss_G: 0.589907 Loss_D_real: -0.606480 Loss_D_fake 0.589772 [24/25][755/782][3339] Loss_D: -1.189609 Loss_G: 0.564263 Loss_D_real: -0.612895 Loss_D_fake 0.576714 [24/25][760/782][3340] Loss_D: -1.178156 Loss_G: 0.586755 Loss_D_real: -0.600268 Loss_D_fake 0.577888 [24/25][765/782][3341] Loss_D: -1.087157 Loss_G: 0.508717 Loss_D_real: -0.522565 Loss_D_fake 0.564592 [24/25][770/782][3342] Loss_D: -1.092081 Loss_G: 0.674212 Loss_D_real: -0.657483 Loss_D_fake 0.434598 [24/25][775/782][3343] Loss_D: -0.937950 Loss_G: 0.209016 Loss_D_real: -0.310877 Loss_D_fake 0.627073 [24/25][780/782][3344] Loss_D: -1.316574 Loss_G: 0.653665 Loss_D_real: -0.693675 Loss_D_fake 0.622899 [24/25][782/782][3345] Loss_D: -1.222763 Loss_G: 0.558372 Loss_D_real: -0.567426 Loss_D_fake 0.655337

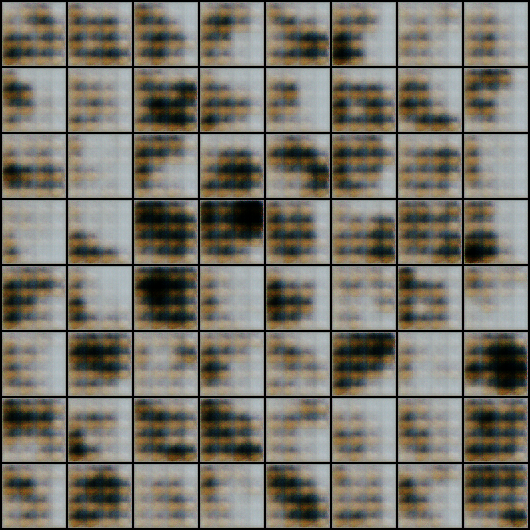

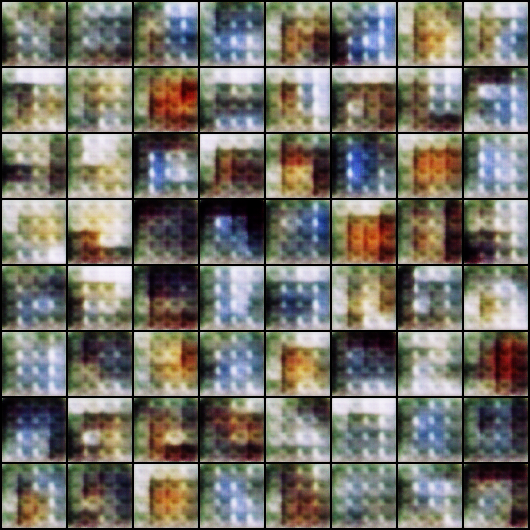

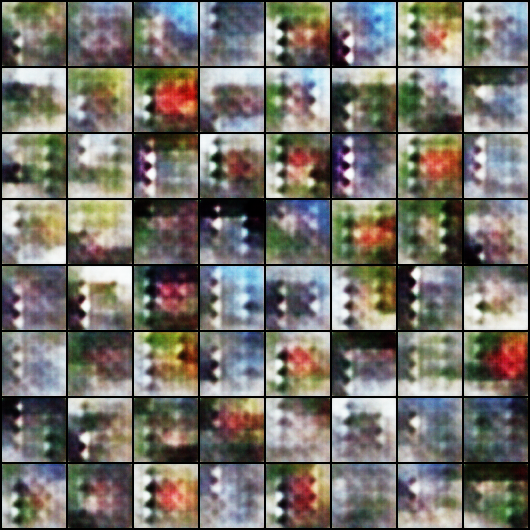

fake_samples_500.png [image: fake_samples_500] https://user-images.githubusercontent.com/16134679/45865905-9a46ea80-bdb1-11e8-99c5-7ee2c8432cf6.png

fake_samples_1000.png [image: fake_samples_1000] https://user-images.githubusercontent.com/16134679/45865910-9c10ae00-bdb1-11e8-8158-acc3f2e42146.png

fake_samples_1500.png [image: fake_samples_1500] https://user-images.githubusercontent.com/16134679/45865913-9dda7180-bdb1-11e8-9a41-0cd490c124f7.png

fake_samples_2000.png [image: fake_samples_2000] https://user-images.githubusercontent.com/16134679/45865917-9f0b9e80-bdb1-11e8-8f02-a4ddb21c2f85.png

fake_samples_2500.png [image: fake_samples_2500] https://user-images.githubusercontent.com/16134679/45865921-a0d56200-bdb1-11e8-9e2c-101644e49cda.png

fake_samples_3000.png [image: fake_samples_3000] https://user-images.githubusercontent.com/16134679/45865924-a29f2580-bdb1-11e8-9a03-f61b328b2d87.png

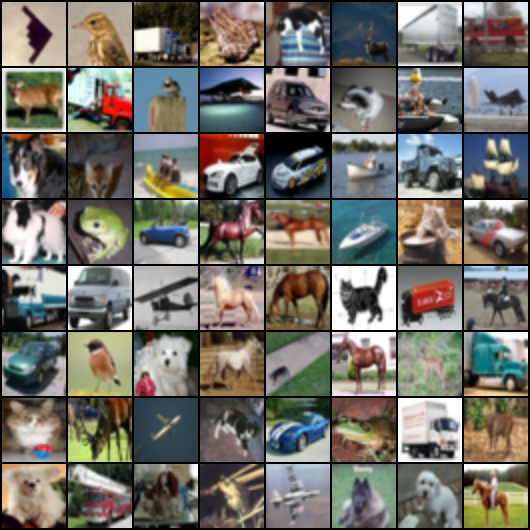

Note that this is real_samples.png!!! [image: real_samples] https://user-images.githubusercontent.com/16134679/45865928-a468e900-bdb1-11e8-8c26-5eca7b41bdfa.png

— You are receiving this because you are subscribed to this thread. Reply to this email directly, view it on GitHub https://github.com/martinarjovsky/WassersteinGAN/issues/62, or mute the thread https://github.com/notifications/unsubscribe-auth/AFB0kjsndk_WrLq8waluc-aO9ktV8o1Rks5udJQEgaJpZM4Wzl0Y .

Random Seed: 6408

G True

G True

DCGAN_G_nobn(

(main): Sequential(

(initial.100-512.convt): ConvTranspose2d(100, 512, kernel_size=(4, 4), stride=(1, 1), bias=False)

(initial.512.relu): ReLU(inplace)

(pyramid.512-256.convt): ConvTranspose2d(512, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.256.relu): ReLU(inplace)

(pyramid.256-128.convt): ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.128.relu): ReLU(inplace)

(pyramid.128-64.convt): ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.64.relu): ReLU(inplace)

(pyramid.64-32.convt): ConvTranspose2d(64, 32, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.32.relu): ReLU(inplace)

(pyramid.32-16.convt): ConvTranspose2d(32, 16, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.16.relu): ReLU(inplace)

(final.16-3.convt): ConvTranspose2d(16, 3, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(final.3.tanh): Tanh()

)

)

D True

('initial WGAN Dis: ndf csize ndf', 16, 128, 16)

('Input Feature', 16, 'output feature', 32)

('WGAN Dis: size csize ndf', 256, 64, 32)

('Input Feature', 32, 'output feature', 64)

('WGAN Dis: size csize ndf', 256, 32, 64)

('Input Feature', 64, 'output feature', 128)

('WGAN Dis: size csize ndf', 256, 16, 128)

('Input Feature', 128, 'output feature', 256)

('WGAN Dis: size csize ndf', 256, 8, 256)

('Input Feature', 256, 'output feature', 512)

('WGAN Dis: size csize ndf', 256, 4, 512)

DCGAN_D(

(main): Sequential(

(initial.conv.3-16): Conv2d(3, 16, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(initial.relu.16): LeakyReLU(0.2, inplace)

(pyramid.16-32.conv): Conv2d(16, 32, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.32.batchnorm): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True)

(pyramid.32.relu): LeakyReLU(0.2, inplace)

(pyramid.32-64.conv): Conv2d(32, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.64.batchnorm): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True)

(pyramid.64.relu): LeakyReLU(0.2, inplace)

(pyramid.64-128.conv): Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.128.batchnorm): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True)

(pyramid.128.relu): LeakyReLU(0.2, inplace)

(pyramid.128-256.conv): Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.256.batchnorm): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True)

(pyramid.256.relu): LeakyReLU(0.2, inplace)

(pyramid.256-512.conv): Conv2d(256, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.512.batchnorm): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True)

(pyramid.512.relu): LeakyReLU(0.2, inplace)

(final.512-1.conv): Conv2d(512, 1, kernel_size=(4, 4), stride=(1, 1), bias=False)

)

)

Random Seed: 6408

G True

G True

DCGAN_G_nobn(

(main): Sequential(

(initial.100-512.convt): ConvTranspose2d(100, 512, kernel_size=(4, 4), stride=(1, 1), bias=False)

(initial.512.relu): ReLU(inplace)

(pyramid.512-256.convt): ConvTranspose2d(512, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.256.relu): ReLU(inplace)

(pyramid.256-128.convt): ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.128.relu): ReLU(inplace)

(pyramid.128-64.convt): ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.64.relu): ReLU(inplace)

(pyramid.64-32.convt): ConvTranspose2d(64, 32, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.32.relu): ReLU(inplace)

(pyramid.32-16.convt): ConvTranspose2d(32, 16, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.16.relu): ReLU(inplace)

(final.16-3.convt): ConvTranspose2d(16, 3, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(final.3.tanh): Tanh()

)

)

D True

('initial WGAN Dis: ndf csize ndf', 16, 128, 16)

('Input Feature', 16, 'output feature', 32)

('WGAN Dis: size csize ndf', 256, 64, 32)

('Input Feature', 32, 'output feature', 64)

('WGAN Dis: size csize ndf', 256, 32, 64)

('Input Feature', 64, 'output feature', 128)

('WGAN Dis: size csize ndf', 256, 16, 128)

('Input Feature', 128, 'output feature', 256)

('WGAN Dis: size csize ndf', 256, 8, 256)

('Input Feature', 256, 'output feature', 512)

('WGAN Dis: size csize ndf', 256, 4, 512)

DCGAN_D(

(main): Sequential(

(initial.conv.3-16): Conv2d(3, 16, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(initial.relu.16): LeakyReLU(0.2, inplace)

(pyramid.16-32.conv): Conv2d(16, 32, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.32.batchnorm): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True)

(pyramid.32.relu): LeakyReLU(0.2, inplace)

(pyramid.32-64.conv): Conv2d(32, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.64.batchnorm): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True)

(pyramid.64.relu): LeakyReLU(0.2, inplace)

(pyramid.64-128.conv): Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.128.batchnorm): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True)

(pyramid.128.relu): LeakyReLU(0.2, inplace)

(pyramid.128-256.conv): Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.256.batchnorm): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True)

(pyramid.256.relu): LeakyReLU(0.2, inplace)

(pyramid.256-512.conv): Conv2d(256, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(pyramid.512.batchnorm): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True)

(pyramid.512.relu): LeakyReLU(0.2, inplace)

(final.512-1.conv): Conv2d(512, 1, kernel_size=(4, 4), stride=(1, 1), bias=False)

)

)

I run your code in cifar10, but the result seems not as good as our expected.

system: debian 8

python: python2 pytorch: torch==0.3.1

fake_samples_500.png

fake_samples_1000.png

fake_samples_1500.png

fake_samples_2000.png

fake_samples_2500.png

fake_samples_3000.png

Note that this is real_samples.png!!!