Hi,

Thanks for the feedback!

> the GPU continues to process the images.

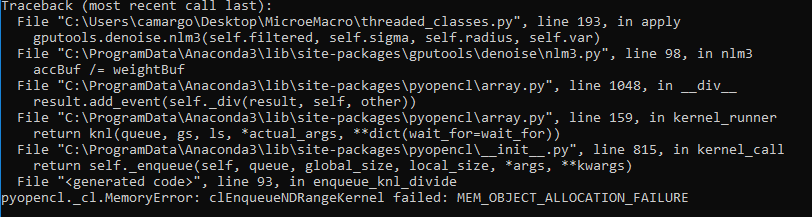

I don't fully understand that. Do you mean the GPU memory is still allocated? A memory allocation error such as this can indeed lead to strange behaviour afterwards. How large was the image, and what does nvidia-smi (assuming you have an NVIDIA card) show before and after the nlm3 call?
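In case it helps with collecting those numbers, here is a minimal sketch for reading the memory usage from within Python. It assumes `nvidia-smi` is on your PATH; the helper names `parse_memory`, `gpu_memory` and `print_gpu_memory` are made up for this example and are not part of gputools.

```python
import subprocess


def parse_memory(csv_text):
    """Parse 'used, total' lines (values in MiB), one line per GPU."""
    result = []
    for line in csv_text.strip().splitlines():
        used, total = (int(value) for value in line.split(","))
        result.append((used, total))
    return result


def gpu_memory():
    """Ask nvidia-smi for used and total memory of every GPU."""
    out = subprocess.check_output(
        [
            "nvidia-smi",
            "--query-gpu=memory.used,memory.total",
            "--format=csv,noheader,nounits",
        ],
        text=True,
    )
    return parse_memory(out)


def print_gpu_memory(label):
    """Print one line per GPU, prefixed with a label such as 'before'."""
    for index, (used, total) in enumerate(gpu_memory()):
        print(f"{label}: GPU {index} uses {used} of {total} MiB")


# Hypothetical usage around the failing call:
# print_gpu_memory("before")
# try:
#     ...  # your nlm3 call goes here
# finally:
#     print_gpu_memory("after")
```

If the "after" value stays high once the error has been raised, that would suggest the memory is indeed still allocated.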

Hi, I am using the gputools library in my project and I have a problem. When I try to render images with the nlm3 filter and the GPU cannot handle the number of images, I get the error shown in the attached image. However, the GPU continues to process the images. Is there any solution to this problem, such as killing the process? Thanks!