Hi, @PanJinquan: Can you show me the complete source code? I'd like to reproduce this problem and fix it.

Open PanJinquan opened 2 years ago

Hello, I have updated the issue with the complete code. @zheng-ningxin

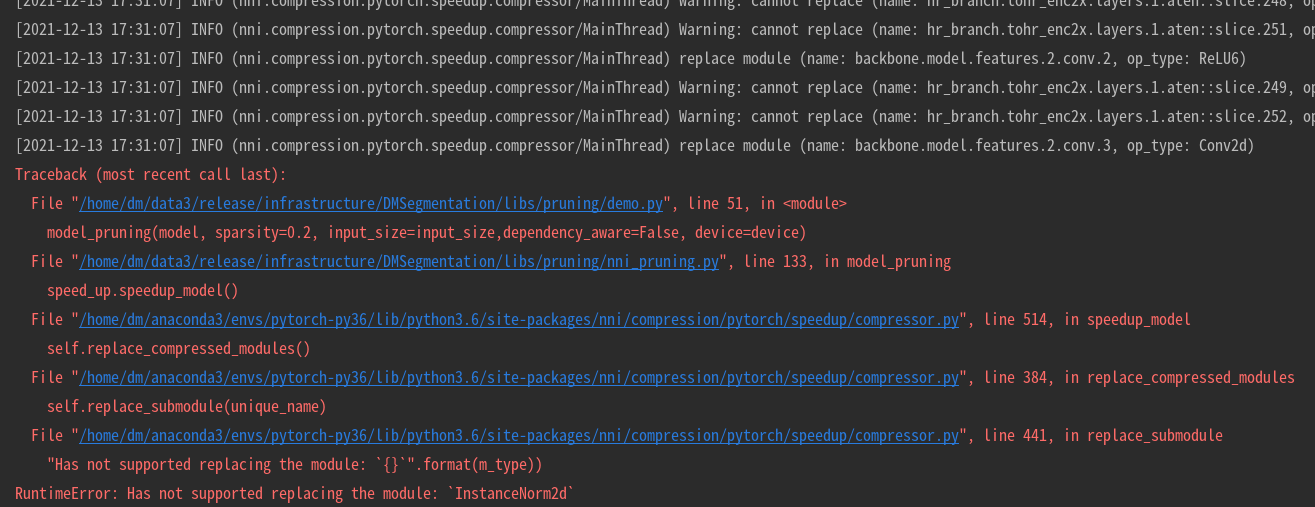

Describe the issue:

PyTorch's nn.InstanceNorm2d is not supported.

Below is a simple SimpleModel that uses nn.InstanceNorm2d. With use_inorm=False, pruning works normally, but with use_inorm=True it fails with the error: Has not supported replacing the module:InstanceNorm2d. Example:
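
A minimal sketch of such a model, for anyone who wants to reproduce this. The layer sizes, the `num_classes` parameter, and the use of `nn.BatchNorm2d` as the alternative when `use_inorm=False` are all assumptions for illustration, not the original code:

```python
import torch
import torch.nn as nn


class SimpleModel(nn.Module):
    """Hypothetical stand-in for the SimpleModel described in this issue."""

    def __init__(self, use_inorm: bool = False, num_classes: int = 10):
        super().__init__()
        # Assumption: use_inorm switches the norm layer; BatchNorm2d is a guess
        # for the non-InstanceNorm case.
        norm = nn.InstanceNorm2d if use_inorm else nn.BatchNorm2d
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1),
            norm(16),
            nn.ReLU(inplace=True),
            nn.Conv2d(16, 32, kernel_size=3, padding=1),
            norm(32),
            nn.ReLU(inplace=True),
            nn.AdaptiveAvgPool2d(1),
        )
        self.classifier = nn.Linear(32, num_classes)

    def forward(self, x):
        x = self.features(x)
        # Flatten (N, 32, 1, 1) to (N, 32) before the linear layer.
        return self.classifier(torch.flatten(x, 1))


if __name__ == "__main__":
    for use_inorm in (False, True):
        model = SimpleModel(use_inorm=use_inorm).eval()
        out = model(torch.randn(2, 3, 32, 32))
        print(use_inorm, tuple(out.shape))
```

Both variants run a plain forward pass; per the report above, the failure only shows up in the pruning step when the InstanceNorm2d module has to be replaced.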

@zheng-ningxin