As far as I can see from the ONNX model, there are only two inputs: [input/lwir_input_data:0, input/input_data:0], and both are float32[1,416,416,3]. So:

- Do not pass "image_shape": image_size when calling session.run(); the model has no such input.

- Reshape the images to the correct shape during preprocessing:

  - Your images seem to be grayscale, so are they of shape [416, 416]? Try RGB images to get [416, 416, 3].

  - Expand the dimensions to [1, 416, 416, 3].

Thanks, Lei
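The steps above can be sketched roughly as follows. This is only an illustration, not the poster's actual script: the helper names `describe_inputs`, `to_model_input`, and `run_two_inputs` are hypothetical, `session` is assumed to be an `onnxruntime.InferenceSession` loaded from the converted model, and the images are assumed to be already resized to 416x416. Replicating a grayscale channel three times is one possible way to reach [416, 416, 3]; whether it suits this model is an assumption. No pixel scaling is applied because the thread does not say what the model expects.

```python
import numpy as np

# Input names as reported for the converted model in this thread.
VISIBLE_NAME = "input/input_data:0"
LWIR_NAME = "input/lwir_input_data:0"


def describe_inputs(session):
    """List (name, shape, type) for every input the model declares."""
    return [(i.name, i.shape, i.type) for i in session.get_inputs()]


def to_model_input(image, size=416):
    """Convert an HxW or HxWx3 array to float32 with shape [1, size, size, 3]."""
    arr = np.asarray(image, dtype=np.float32)
    if arr.ndim == 2:
        # Assumption: a grayscale image is expanded by repeating its channel.
        arr = np.stack([arr, arr, arr], axis=-1)
    if arr.shape != (size, size, 3):
        raise ValueError(f"expected ({size}, {size}, 3), got {arr.shape}")
    # Add the batch dimension: [size, size, 3] -> [1, size, size, 3].
    return arr[np.newaxis, ...]


def run_two_inputs(session, visible, lwir):
    """Feed both images by input name; note there is no 'image_shape' entry."""
    feeds = {
        VISIBLE_NAME: to_model_input(visible),
        LWIR_NAME: to_model_input(lwir),
    }
    # Passing None as the output list asks the session for all model outputs.
    return session.run(None, feeds)
```

Calling `describe_inputs(session)` first is a quick way to confirm that only the two image inputs exist before building the feed dictionary.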

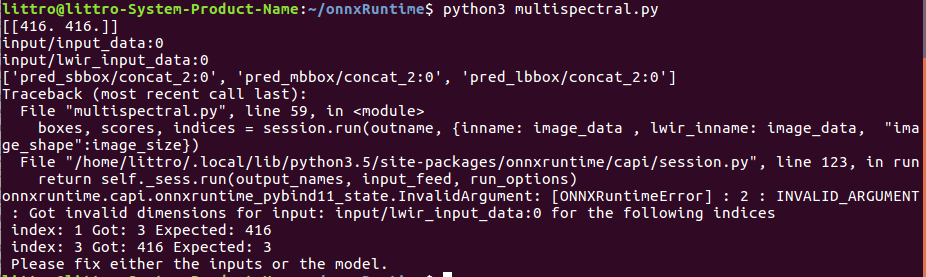

Describe the bug

I ran the original YOLOv3 example successfully. Then I switched to my own YOLOv3 model, which takes two inputs (a visible image and an infrared image), and I got this error.

Urgency

As early as possible

System information

To Reproduce

Steps and code: I converted the .pb weights to .onnx using https://github.com/onnx/tensorflow-onnx with the command

python -m tf2onnx.convert --input modelInPb/Pedestrian_yolov3_520.pb --inputs input/input_data:0[1,416,416,3],input/lwir_input_data:0[1,416,416,3] --outputs pred_sbbox/concat_2:0,pred_mbbox/concat_2:0,pred_lbbox/concat_2:0 --output modelOut/Pedestrian_yolov3_520.onnx --opset 11

Then I use this .onnx model with the following code:

https://drive.google.com/file/d/1vT5ZPH-LuW5cGrdENjWb2uOhvJBygSSk/view?usp=sharing

Expected behavior

The .onnx model should load and produce output in the same way as the official YOLOv3 example.

Screenshots

Screenshots of the error are attached.

Additional context

I am also not sure whether I am calling the model the right way:

boxes, scores, indices = session.run(outname, {inname: image_data, lwir_inname: image_data, "image_shape": image_size})

This model takes two inputs (a visible image and an infrared image), so how can I pass both? Can I use one image_size for both, or do I need to pass it separately for each? The original YOLOv3 example is

boxes, scores, indices = session.run(outname, {inname: image_data, "image_shape": image_size})

How can I change this example for two inputs?