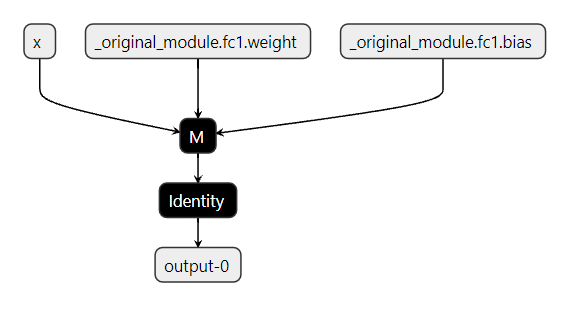

The exported model seems to be correct. The user's model is wrapped in _FlattenedModule so that the model's inputs and outputs are flattened before exporting. Inside _FlattenedModule, the user's model is correctly represented, as shown in the bottom-right image.
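To make the flattening idea concrete, here is a minimal pure-Python sketch of a wrapper that presents a flat interface around a model. It is only an illustration under assumptions: `flatten` and `FlattenedWrapper` are hypothetical names, and this is not ORTModule's actual _FlattenedModule implementation, which works on torch modules and tensors.

```python
from typing import Any, Callable, List


def flatten(obj: Any) -> List[Any]:
    """Recursively flatten nested lists, tuples, and dicts into a flat list of leaves."""
    if isinstance(obj, (list, tuple)):
        return [leaf for item in obj for leaf in flatten(item)]
    if isinstance(obj, dict):
        # Dict values are visited in insertion order.
        return [leaf for key in obj for leaf in flatten(obj[key])]
    return [obj]


class FlattenedWrapper:
    """Hypothetical stand-in for a flattening wrapper; not ORTModule's real code."""

    def __init__(self, model: Callable[..., Any]) -> None:
        self.model = model

    def __call__(self, *args: Any) -> tuple:
        # Flatten the positional inputs, call the wrapped model on the flat
        # arguments, then flatten whatever structure the model returns.
        flat_inputs = flatten(args)
        return tuple(flatten(self.model(*flat_inputs)))


# The wrapped callable returns a nested structure; the wrapper exposes flat outputs.
wrapped = FlattenedWrapper(lambda a, b, c: {"sum": a + b + c, "pair": (a, b)})
print(wrapped(1, (2, 3)))
```

The point of such a wrapper is that an exporter only ever sees a flat list of inputs and a flat tuple of outputs, regardless of how the user's model structures them.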

The error seems to come from the ORT core, during the gradient build.

I'm trying to run a simple test case with ORTModule that has one linear layer inside a module as shown below:

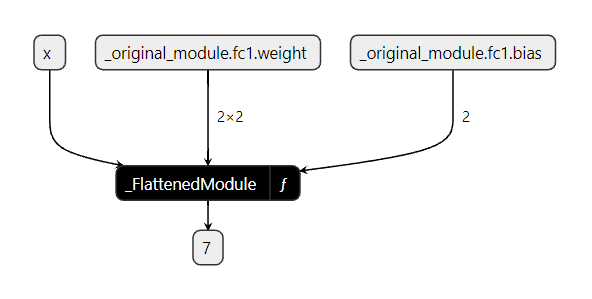

In case 1 above, the ONNX graph exported by PyTorch has the module completely inlined:

Whereas in case 2, it is wrapped inside a "flattened module" as shown below (the right image below is the graph corresponding to the function body of _FlattenedModule):

The latter case should ideally work, since modules/functions are supposed to be inlined during partitioning. But I see the error below in case 2:

System information

- ORT version: master
- Torch version: Branch onnx_ms_1