Right now, this can be done by carefully setting up a GLayerMixed. Something like:

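```cpp
// Assumes the Waffles headers declaring GNeuralNet, GLayerMixed,
// GLayerClassic and GActivationIdentity have been included.
using namespace GClasses;

// Wraps each layer in [fromLayer, toLayer] in a GLayerMixed alongside an
// identity layer, so the layer's input is carried forward next to its output.
void addResidualConnection(GNeuralNet &nn, size_t fromLayer, size_t toLayer)
{
	for(size_t i = fromLayer; i <= toLayer; ++i)
	{
		GLayerMixed *mixed = new GLayerMixed();

		// Take the existing layer out of the net and make it the first component.
		GNeuralNetLayer *existing = nn.releaseLayer(i);
		mixed->addComponent(existing);

		// Second component: a linear layer initialized to the identity matrix
		// with zero bias, so it starts out copying its input unchanged.
		GLayerClassic *identity = new GLayerClassic(existing->inputs(), existing->inputs(), new GActivationIdentity());
		identity->bias().fill(0.0);
		identity->weights().setAll(0.0);
		for(size_t j = 0; j < identity->weights().rows(); ++j) // j, not i: don't shadow the outer index
			identity->weights()[j][j] = 1.0;
		mixed->addComponent(identity);

		// GLayerMixed places its components side by side, so the next layer
		// must accept existing->outputs() + existing->inputs() values.
		nn.addLayer(mixed, i);
	}
}
```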
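One thing to keep in mind with the GLayerMixed approach: as I understand it, GLayerMixed runs every component on the same input and concatenates their outputs, so the wrapped layer emits [F(x), x] rather than the ResNet sum F(x) + x. The standalone sketch below contrasts the two shortcut styles in plain C++; it is not Waffles code, and `Vec`, `concatShortcut` and `residualShortcut` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Concatenated shortcut (what an {existing, identity} GLayerMixed produces,
// assuming side-by-side components): the output is [F(x), x].
Vec concatShortcut(const Vec& fx, const Vec& x) {
    Vec out(fx);
    out.insert(out.end(), x.begin(), x.end());
    return out;
}

// Residual shortcut as in ResNets: the element-wise sum F(x) + x.
// This requires F(x) and x to have the same length.
Vec residualShortcut(const Vec& fx, const Vec& x) {
    assert(fx.size() == x.size());
    Vec out(x.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        out[i] = fx[i] + x[i];
    return out;
}
```

With x = {1, 2} and F(x) = {0.5, -1}, the concatenated form gives {0.5, -1, 1, 2} while the residual form gives {1.5, 1}. The two are not as far apart as they look: a linear layer after the concatenation computes W1 F(x) + W2 x, which equals W(F(x) + x) when W1 = W2 = W, so the wider form can represent the sum at the cost of extra weights.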

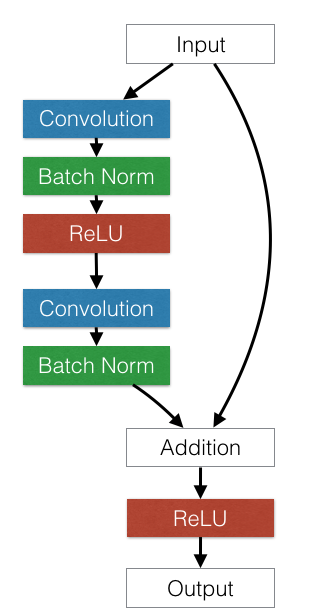

Deep Residual Networks [1] use shortcut connections between blocks of layers, which let the input of a block bypass its layers and be added to the block's output. The image above shows such a block; the skip connection is on the right.
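The basic two-layer block from [1] can be written as y = σ(F(x) + x) with F(x) = W2 σ(W1 x), where σ is ReLU and biases are omitted. Here is a minimal forward-pass sketch in plain C++, independent of Waffles; `Vec`, `Mat`, `matVec` and `residualBlock` are hypothetical names chosen for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: m[row][col]

// Returns m * x.
Vec matVec(const Mat& m, const Vec& x) {
    Vec y(m.size(), 0.0);
    for (std::size_t r = 0; r < m.size(); ++r) {
        assert(m[r].size() == x.size());
        for (std::size_t c = 0; c < x.size(); ++c)
            y[r] += m[r][c] * x[c];
    }
    return y;
}

// Element-wise ReLU.
Vec relu(Vec v) {
    for (double& e : v) e = std::max(0.0, e);
    return v;
}

// Two-layer residual block (biases omitted):
//   F(x) = W2 * relu(W1 * x),  y = relu(F(x) + x).
// W2 must map back to x's dimension so the shortcut can be a plain identity.
Vec residualBlock(const Mat& w1, const Mat& w2, const Vec& x) {
    Vec f = matVec(w2, relu(matVec(w1, x)));
    assert(f.size() == x.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        f[i] += x[i];  // identity shortcut: add the block's input
    return relu(f);    // second nonlinearity applied after the addition
}
```

With all weights set to zero the block reduces to relu(x), which illustrates the motivation given in [1]: a block can approximate an identity mapping simply by driving its weights toward zero.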

These residual networks are relatively popular for image problems, and they make it possible to train extremely deep networks. There is a caveat, though: the shortcut connections make the network behave like an ensemble of relatively shallow networks [2].
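The ensemble view in [2] comes from unrolling the shortcuts: since each block outputs x + F(x), a path through the network either passes through or skips each residual branch. With n blocks there are 2^n paths, and C(n, k) of them go through exactly k residual branches, so path lengths follow a binomial distribution centered on n/2. A small sketch in plain C++ that tabulates these counts; `pathLengthCounts` is a hypothetical helper, not part of any library.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// counts[k] = number of paths through n residual blocks that pass through
// exactly k residual branches, i.e. the binomial coefficient C(n, k).
std::vector<std::uint64_t> pathLengthCounts(unsigned n) {
    assert(n <= 62);  // keep every C(n, k) comfortably within 64 bits
    std::vector<std::uint64_t> counts(n + 1, 0);
    counts[0] = 1;  // zero blocks: one path of length 0
    // Each added block doubles the paths: skip it (length unchanged)
    // or go through it (length + 1). This builds Pascal's triangle in place.
    for (unsigned block = 1; block <= n; ++block)
        for (unsigned k = block; k > 0; --k)
            counts[k] += counts[k - 1];
    return counts;
}
```

For example, 3 blocks give counts 1, 3, 3, 1: eight paths, most of which use only some of the blocks.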

How would we implement something equivalent to this with just a plain neural network in Waffles? This is probably the simplest kind of shortcut connection, but such connections are appearing in several state-of-the-art architectures.
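If a layer type that sums rather than concatenates were available, its core logic would be small. The sketch below uses a hypothetical minimal layer interface (`Layer`, `Linear` and `Residual` are illustrative names, not Waffles classes, and parameter gradients are omitted). The residual wrapper adds its input to the inner layer's output on the forward pass; on the backward pass it adds the incoming gradient to whatever the inner layer propagates, since d(F(x) + x)/dx = dF/dx + I.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical minimal layer interface (NOT the Waffles API).
struct Layer {
    virtual ~Layer() = default;
    virtual Vec forward(const Vec& x) = 0;
    // Given dL/dy, return dL/dx (parameter gradients omitted for brevity).
    virtual Vec backward(const Vec& dy) = 0;
};

// A linear layer y = W x, used here as the residual branch F.
struct Linear : Layer {
    std::vector<Vec> w;  // w[row][col]
    explicit Linear(std::vector<Vec> weights) : w(std::move(weights)) {}
    Vec forward(const Vec& x) override {
        Vec y(w.size(), 0.0);
        for (std::size_t r = 0; r < w.size(); ++r)
            for (std::size_t c = 0; c < x.size(); ++c)
                y[r] += w[r][c] * x[c];
        return y;
    }
    Vec backward(const Vec& dy) override {
        // dL/dx = W^T dL/dy
        Vec dx(w.empty() ? 0 : w[0].size(), 0.0);
        for (std::size_t r = 0; r < w.size(); ++r)
            for (std::size_t c = 0; c < dx.size(); ++c)
                dx[c] += w[r][c] * dy[r];
        return dx;
    }
};

// Wraps a layer F so that y = F(x) + x.
struct Residual : Layer {
    std::unique_ptr<Layer> inner;
    explicit Residual(std::unique_ptr<Layer> f) : inner(std::move(f)) {}
    Vec forward(const Vec& x) override {
        Vec y = inner->forward(x);
        assert(y.size() == x.size());  // identity shortcut needs matching sizes
        for (std::size_t i = 0; i < x.size(); ++i) y[i] += x[i];
        return y;
    }
    Vec backward(const Vec& dy) override {
        // dL/dx = (dF/dx)^T dL/dy + dL/dy: the shortcut passes dy through unchanged.
        Vec dx = inner->backward(dy);
        for (std::size_t i = 0; i < dy.size(); ++i) dx[i] += dy[i];
        return dx;
    }
};
```

The extra dy term in `Residual::backward` is the usual explanation for why very deep residual stacks stay trainable: the gradient has a direct route back to earlier blocks even when the residual branches contribute little.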

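[1] K. He, X. Zhang, S. Ren, J. Sun, "Deep Residual Learning for Image Recognition", https://arxiv.org/abs/1512.03385

[2] A. Veit, M. Wilber, S. Belongie, "Residual Networks Behave Like Ensembles of Relatively Shallow Networks", https://arxiv.org/abs/1605.06431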