Have you tried changing the tracking method?

`python track.py --tracking-method ocsort --source 'myURL' --save-vid`

OCSORT is a pure-CPU tracking method. It is not as good as StrongSORT, but probably good enough for your needs. Could you report back with the generated video?

Question

Hello, I am having issues tracking a live stream from a YouTube URL. The video is 1920x1080 at 30 fps. I ran

`python track.py --source 'myURL' --save-vid`

using the `yolov5x.pt` weights plus the `osnet_x0_25_msmt17.pt` StrongSORT weights. During inference, YOLO takes between 0.022 s and 0.023 s per frame, and StrongSORT takes between 0.030 s and 0.066 s, depending (I guess) on the density of objects in the frame. The total processing time per frame is therefore about 0.05 s to 0.09 s, so tracking runs at roughly 10 to 20 fps. The saved video is extremely laggy: frames freeze and then jump forward instead of playing continuously frame by frame like the original stream. The saved video's frame rate is correct (30 fps), but the frames freeze for seconds and update late.
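A quick back-of-the-envelope check of these numbers: at 30 fps each frame has a budget of about 33 ms, while detection plus tracking takes 52 ms to 89 ms. The sketch below only redoes that arithmetic with the timings quoted in this issue, and it assumes the two stages run one after the other on every frame (no overlap between them).

```python
STREAM_FPS = 30.0  # frame rate of the source stream

# Per-frame times reported in this issue: (fastest, slowest), in seconds.
YOLO_S = (0.022, 0.023)
STRONGSORT_S = (0.030, 0.066)


def pipeline_stats(detect_s, track_s, stream_fps=STREAM_FPS):
    """Return (seconds per frame, achievable fps, fraction of stream frames processed).

    Assumes detection and tracking run sequentially for each frame.
    """
    per_frame = detect_s + track_s
    fps = 1.0 / per_frame
    kept = min(1.0, fps / stream_fps)
    return per_frame, fps, kept


if __name__ == "__main__":
    budget_ms = 1000.0 / STREAM_FPS
    print(f"budget per frame at {STREAM_FPS:.0f} fps: {budget_ms:.1f} ms")
    for d, t in zip(YOLO_S, STRONGSORT_S):
        per_frame, fps, kept = pipeline_stats(d, t)
        print(f"{per_frame * 1000:.0f} ms/frame -> {fps:.1f} fps, "
              f"{kept:.0%} of stream frames processed")
```

With these timings the pipeline reaches about 19 fps in the best case and about 11 fps in the worst case, i.e. it can handle only roughly 37% to 64% of the incoming frames. The rest have to be dropped or buffered somewhere; which of the two happens depends on how the stream loader reads frames, so this arithmetic alone does not pin down the exact freezing pattern in the saved video.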

https://user-images.githubusercontent.com/94005149/198721083-4bae20a2-8518-4767-8f11-8d27eb345d0b.mp4

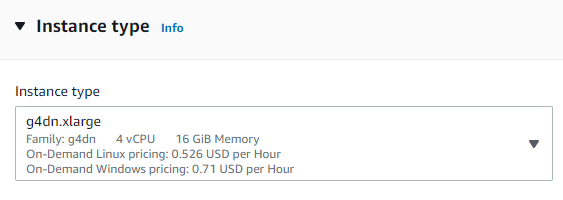

I am running the program on an AWS EC2 server. Here are my settings:

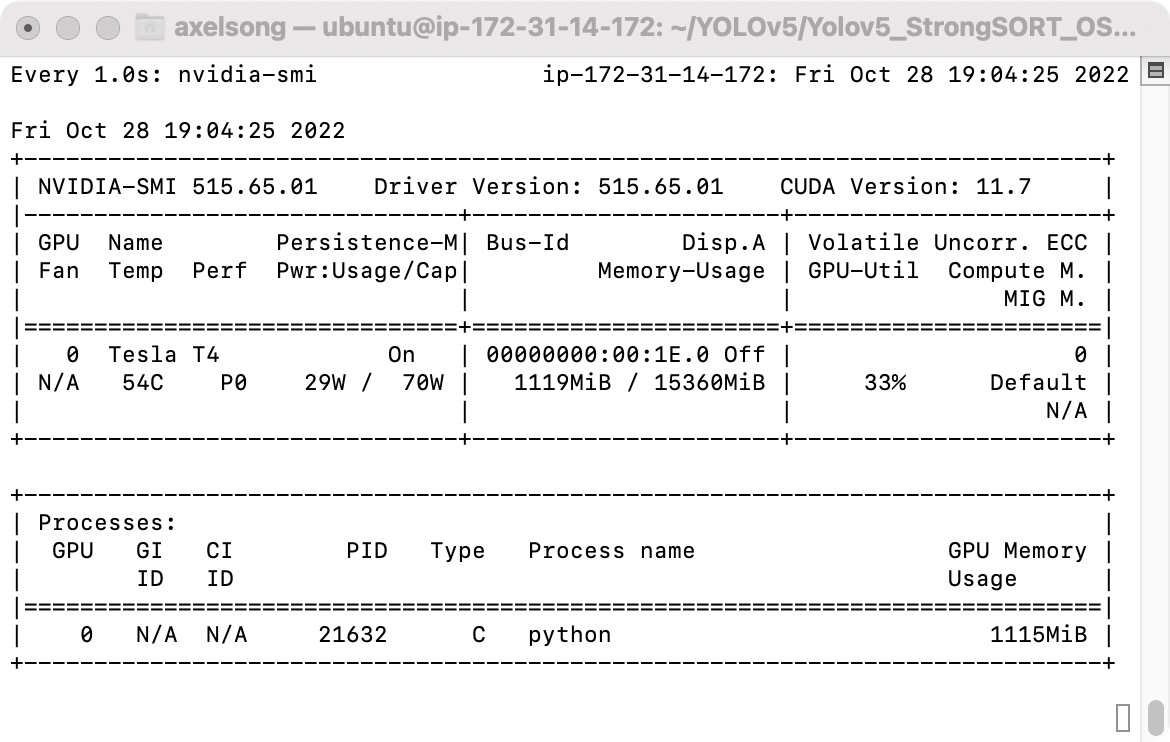

Here is my GPU usage while running the program:

And my CPU usage:

My question: what is the bottleneck when tracking this stream? My guess is that StrongSORT is overwhelming the CPU. How do I fix this?
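One way to locate the bottleneck is to time each stage of the per-frame loop separately (for example detection, tracking, and drawing/writing). The following is a minimal, generic sketch using only the standard library; `StageTimer` and the stage names are hypothetical, not part of `track.py`. One caveat: CUDA kernels run asynchronously, so for GPU stages the wall-clock time is only meaningful if `torch.cuda.synchronize()` is called before the timed block ends.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulate wall-clock time per named pipeline stage (hypothetical helper)."""

    def __init__(self, clock=time.perf_counter):
        self.clock = clock  # injectable so the timer can be tested deterministically
        self.totals = defaultdict(float)
        self.counts = defaultdict(int)

    @contextmanager
    def stage(self, name):
        start = self.clock()
        try:
            yield
        finally:
            # Record elapsed time even if the stage raises.
            self.totals[name] += self.clock() - start
            self.counts[name] += 1

    def report(self):
        """Map stage name -> (mean seconds per call, share of total measured time)."""
        grand = sum(self.totals.values()) or 1.0
        return {
            name: (self.totals[name] / self.counts[name], self.totals[name] / grand)
            for name in self.totals
        }
```

For example, inside the frame loop you could wrap the detector call in `with timer.stage("detect"):` and the tracker update in `with timer.stage("track"):`, then print `timer.report()` every few hundred frames. If "track" dominates while GPU utilization stays low, that would support the CPU-bound StrongSORT hypothesis.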

An additional question: is there a way to serve the tracking output at a URL instead of showing it in a local window? That way I could view the tracking stream on a different machine.
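One lightweight option for this is to serve the annotated frames over HTTP as an MJPEG stream (`multipart/x-mixed-replace`), which most browsers display directly. Nothing in this thread indicates that `track.py` does this itself; the following is a minimal standard-library sketch under these assumptions: the tracking loop JPEG-encodes each annotated frame (for instance with OpenCV's `cv2.imencode('.jpg', frame)[1].tobytes()`) and passes the bytes to `LatestFrame.update`, and the chosen port is reachable from the viewing machine (on EC2 that means allowing it in the instance's security group). `LatestFrame`, `make_handler`, and `start_mjpeg_server` are hypothetical names.

```python
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

BOUNDARY = "frame"


class LatestFrame:
    """Thread-safe holder for the most recent JPEG-encoded frame."""

    def __init__(self):
        self._lock = threading.Lock()
        self._jpeg = None

    def update(self, jpeg_bytes):
        with self._lock:
            self._jpeg = jpeg_bytes

    def get(self):
        with self._lock:
            return self._jpeg


def make_handler(frames, fps=30.0):
    """Build a request handler that streams the frames in `frames` as MJPEG."""

    class MJPEGHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            self.send_response(200)
            self.send_header("Content-Type", f"multipart/x-mixed-replace; boundary={BOUNDARY}")
            self.send_header("Cache-Control", "no-cache")
            self.end_headers()
            try:
                while True:
                    jpeg = frames.get()
                    if jpeg is not None:
                        # Each part: boundary line, part headers, blank line, JPEG bytes.
                        self.wfile.write(f"--{BOUNDARY}\r\n".encode())
                        self.wfile.write(b"Content-Type: image/jpeg\r\n")
                        self.wfile.write(f"Content-Length: {len(jpeg)}\r\n\r\n".encode())
                        self.wfile.write(jpeg + b"\r\n")
                        self.wfile.flush()
                    time.sleep(1.0 / fps)  # cap the send rate per client
            except ConnectionError:
                pass  # client disconnected (broken pipe, reset, or aborted)

        def log_message(self, format, *args):
            pass  # keep the tracker's console output clean

    return MJPEGHandler


def start_mjpeg_server(frames, host="0.0.0.0", port=8080):
    """Serve `frames` in a background thread and return the server object."""
    server = ThreadingHTTPServer((host, port), make_handler(frames))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

You would then open `http://<server-ip>:8080/` in a browser on the other machine. Only the most recent frame is kept, so a slow viewer never backs up the tracker; the trade-off is that MJPEG uses considerably more bandwidth than an H.264 stream.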

Thanks, Mikel, for your wonderful work. Any help would be appreciated!