Closed digantamisra98 closed 3 years ago

Mish is a new novel activation function proposed in this paper. It has shown promising results so far and has been adopted in several packages including:

All benchmarks, analysis and links to official package implementations can be found in this repository

It would be nice to have Mish as an option within the activation function group.

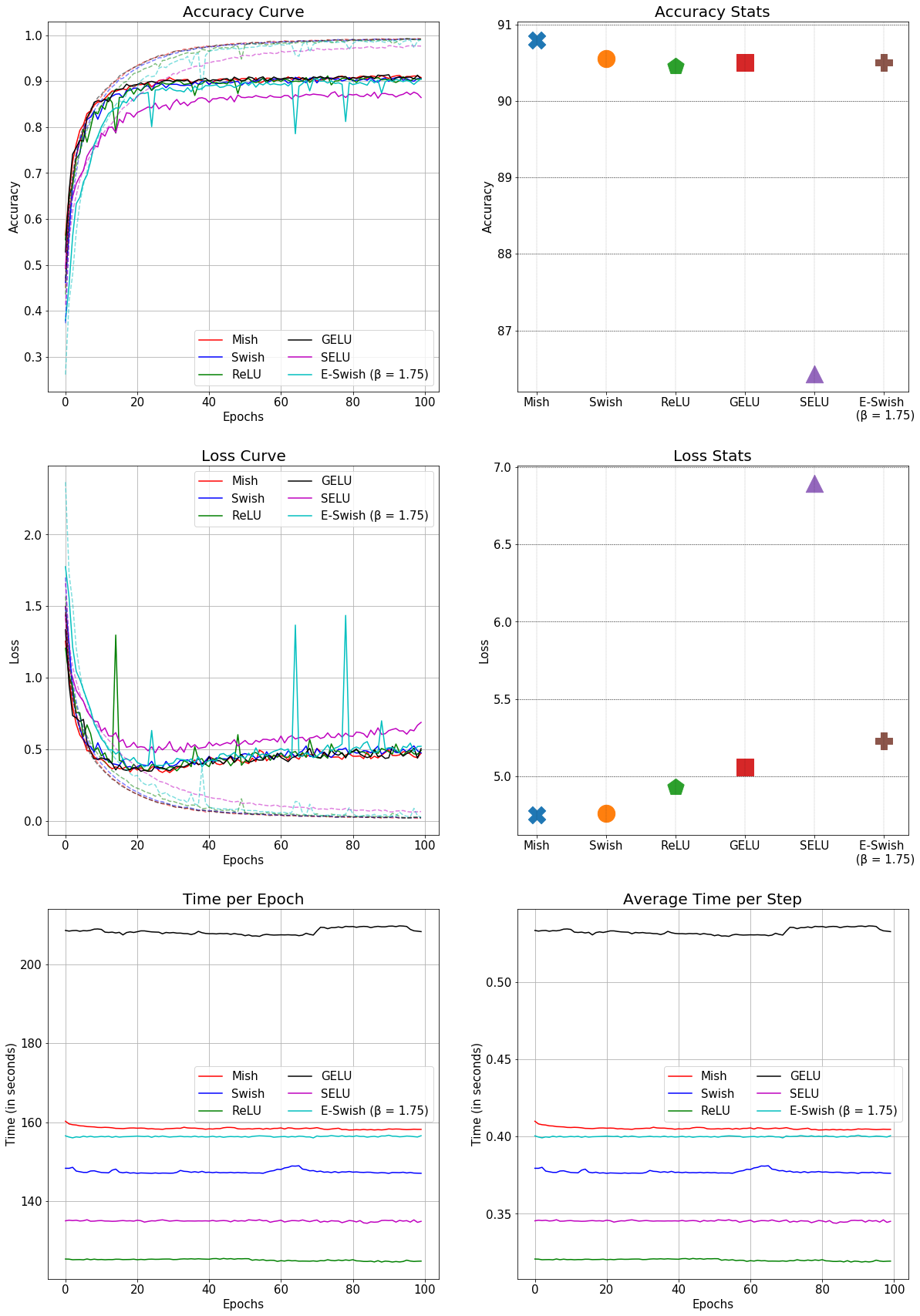

This is the comparison of Mish with other conventional activation functions in a SEResNet-50 for CIFAR-10: (Better accuracy and faster than GELU)

Mish is a new novel activation function proposed in this paper. It has shown promising results so far and has been adopted in several packages including:

All benchmarks, analysis and links to official package implementations can be found in this repository

It would be nice to have Mish as an option within the activation function group.

This is the comparison of Mish with other conventional activation functions in a SEResNet-50 for CIFAR-10: (Better accuracy and faster than GELU)