a couple thoughts:

how often are the agent actions being updated? the training occurs at dt=0.2sec but in our experiments we use dt=0.1 for execution, which leads to much better performance.

what is the model for robot dynamics? in training, our agents set their heading angle and velocity directly, so any extra acceleration-type constraints would cause the policy to be less useful.
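for reference, a minimal sketch of the kind of update this implies: the commanded speed and heading take effect instantly, and the action is refreshed once per `dt`. the names `step`, `rollout`, and `policy` are made up for illustration and are not functions from this repo.

```python
import math

def step(x, y, speed, heading, dt):
    """Advance one agent by dt seconds. The commanded speed and heading are
    applied instantly: no acceleration or turn-rate limits (an assumption that
    mirrors the training setup described above)."""
    return (x + speed * math.cos(heading) * dt,
            y + speed * math.sin(heading) * dt)

def rollout(policy, x, y, dt, duration):
    """Query a hypothetical policy(x, y) -> (speed, heading) once per dt."""
    for _ in range(round(duration / dt)):
        speed, heading = policy(x, y)  # action refreshed every dt seconds
        x, y = step(x, y, speed, heading, dt)
    return x, y

# Example: constant policy, 1 m/s along +x for 1 s at the execution timestep.
x, y = rollout(lambda px, py: (1.0, 0.0), 0.0, 0.0, dt=0.1, duration=1.0)
```

if the simulated robot cannot follow commands this directly (for example because the simulator limits acceleration), its motion will differ from what the policy saw in training.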

the agents were trained in crowds of up to 10 agents, but we saw good results in a few 20-agent setups. i wouldn't expect it to be super reliable in generic 20-agent cases, especially if the simulator isn't quite like the one from training.

the lack of symmetry is puzzling, since all agents should be moving identically and receiving identical observations (assuming they started in the same states). any idea if there is something in your simulation that would lead to asymmetric network inputs?
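one way to test this is to express every agent's neighbors in that agent's own goal-aligned frame and compare the results. this is only a sketch under assumptions: the state layout `(px, py, gx, gy)`, the antipodal goals, and the helper names are all hypothetical, and the real network input contains more than relative positions.

```python
import math

def circle_states(n, radius):
    """Hypothetical start states: n agents evenly spaced on a circle,
    each with its goal at the antipodal point. State = (px, py, gx, gy)."""
    states = []
    for i in range(n):
        a = 2 * math.pi * i / n
        px, py = radius * math.cos(a), radius * math.sin(a)
        states.append((px, py, -px, -py))
    return states

def ego_view(states, i, ndigits=6):
    """Positions of the other agents in agent i's frame (origin at agent i,
    x-axis pointing at its goal), rounded and sorted for comparison."""
    px, py, gx, gy = states[i]
    theta = math.atan2(gy - py, gx - px)
    c, s = math.cos(theta), math.sin(theta)
    view = []
    for j, (ox, oy, _, _) in enumerate(states):
        if j == i:
            continue
        dx, dy = ox - px, oy - py
        # Rotate the offset by -theta into the ego frame.
        view.append((round(c * dx + s * dy, ndigits),
                     round(-s * dx + c * dy, ndigits)))
    return sorted(view)

def is_symmetric(states):
    """True if every agent sees the same set of relative positions."""
    ref = ego_view(states, 0)
    return all(ego_view(states, i) == ref for i in range(1, len(states)))
```

if `is_symmetric` holds for the start states but the trajectories still diverge, the asymmetry is more likely coming from somewhere later in the loop, such as the order in which agents are updated or noise in the simulator.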

Hi Michael,

Thanks for your great work.

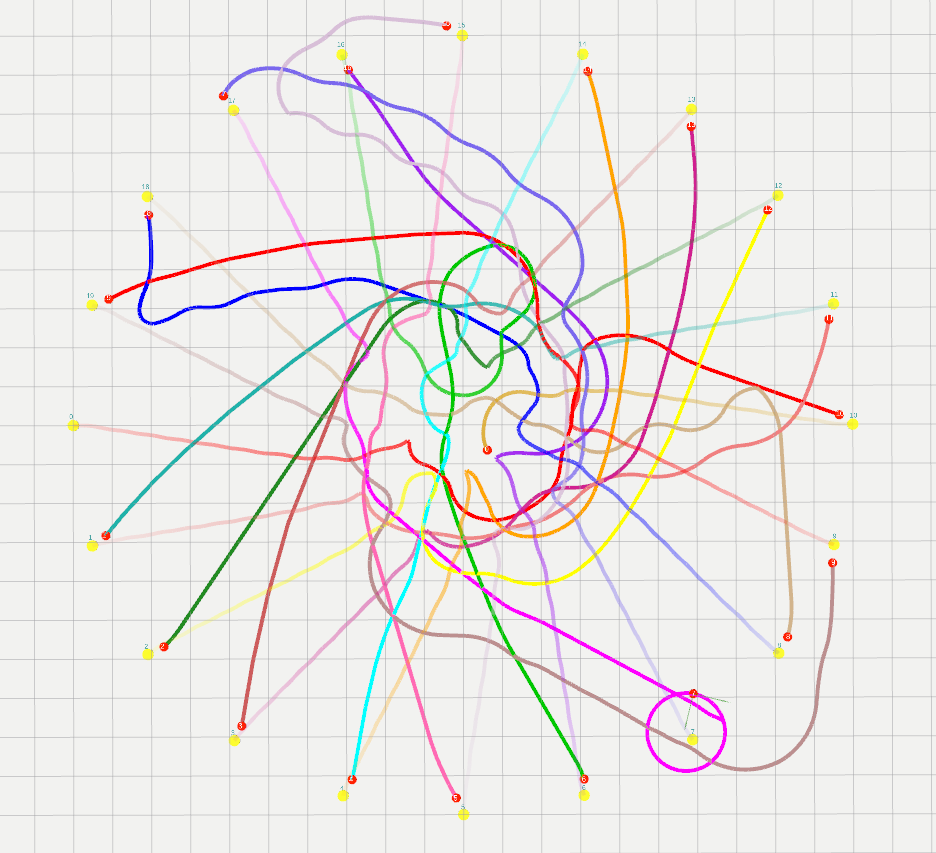

I am running this repo on the Stage simulator, but the circle trajectories do not look like the figure in README.md.

Here is the trajectory I got in Stage.

Some parameters of my experiments are as follows:

The code details:

Did I misunderstand the code, or did I set a parameter incorrectly?

Looking forward to your reply : )