Ah, I actually managed to fix Crash 2. I guess the problem is with the `active` argument of the function `draw_pixel`. This is the original part of the code that crashes:

```python
def draw_pixel(uv: mitsuba.core.Point2f, wavelengths: Spectrum, weights: Spectrum, block: ImageBlock, active=True):
    xyz = mitsuba.core.spectrum_to_xyz(weights, wavelengths, active)
    aovs = list(xyz) + [0.0, 1.0]
    block.put(uv, aovs, active)

def render_pixels(sampler, distribution, block: ImageBlock):
    active = Mask(True)
    remaining_bounces = UInt32(1000)

    loop = get_module(Float).Loop("TestLoop12345")
    loop.put(active, remaining_bounces)
    sampler.loop_register(loop)
    loop.init()

    while loop(active):
        wavelengths, weights = distribution.sample_spectrum(mitsuba.render.SurfaceInteraction3f(), sampler.next_1d())
        # `active` is not passed here, so draw_pixel falls back to its default active=True
        draw_pixel(32 * sampler.next_2d(), wavelengths, weights, block)
        remaining_bounces -= 1
        active &= remaining_bounces > 0
```

Here is a version that does not crash, which passes the `active` variable:

```python
def draw_pixel(uv: mitsuba.core.Point2f, wavelengths: Spectrum, weights: Spectrum, block: ImageBlock, active):
    xyz = mitsuba.core.spectrum_to_xyz(weights, wavelengths, active)
    aovs = list(xyz) + [0.0, 1.0]
    block.put(uv, aovs, active)

def render_pixels(sampler, distribution, block: ImageBlock):
    active = Mask(True)
    remaining_bounces = UInt32(1000)

    loop = get_module(Float).Loop("TestLoop12345")
    loop.put(active, remaining_bounces)
    sampler.loop_register(loop)
    loop.init()

    while loop(active):
        wavelengths, weights = distribution.sample_spectrum(mitsuba.render.SurfaceInteraction3f(), sampler.next_1d())
        # The loop's mask is now forwarded explicitly to draw_pixel
        draw_pixel(32 * sampler.next_2d(), wavelengths, weights, block, active)
        remaining_bounces -= 1
        active &= remaining_bounces > 0
```
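As background on the masking convention behind this fix, here is a minimal plain-Python analogy of a vectorized loop in which every lane carries an `active` flag. It is not Enoki or Mitsuba code: the lane-as-list-entry model and the functions `splat` and `masked_loop` are hypothetical. When the loop's mask is forwarded to the function in the loop body, lanes that have already finished stop writing; when the function falls back to "all lanes active", finished lanes keep writing. In the code above `remaining_bounces` starts at the same value for every lane, so all lanes terminate together there; this sketch only illustrates the convention and is not a diagnosis of the crash.

```python
# Plain-Python analogy of a vectorized loop with a per-lane mask.
# Each "lane" is one list entry; this is NOT how Enoki implements Loop.

def splat(counts, lanes, active=None):
    """Add 1 to counts[i] for every active lane i.

    active=None means "every lane writes", mimicking a default of True."""
    for i in range(lanes):
        if active is None or active[i]:
            counts[i] += 1

def masked_loop(remaining, pass_mask):
    """Run lanes with different iteration counts; optionally forward the mask."""
    lanes = len(remaining)
    remaining = list(remaining)
    active = [r > 0 for r in remaining]
    counts = [0] * lanes
    # Keep iterating while any lane is still active (like `while loop(active)`).
    while any(active):
        splat(counts, lanes, active if pass_mask else None)
        # Only active lanes decrement their counter.
        remaining = [r - 1 if a else r for r, a in zip(remaining, active)]
        active = [a and r > 0 for a, r in zip(active, remaining)]
    return counts

print(masked_loop([3, 1, 2], pass_mask=True))   # [3, 1, 2]
print(masked_loop([3, 1, 2], pass_mask=False))  # [3, 3, 3]
```

With the mask forwarded, each lane writes exactly as many times as it iterates; without it, lanes that finished early keep receiving writes until the last lane is done.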

System configuration

Same as #3

Description

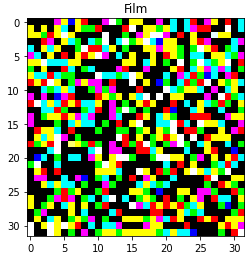

Here is a simple code that uses Enoki and Mitsuba 2 to draw 1000 random pixels into a 32x32 film using

Loop.The pixel positions are random, pixel colors are based on a spectrum

distribution.I am interested in knowing the derivative of the total film color w.r.t. the spectrum distribution.

Here is an example of how the output film looks like in RGB:
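A toy, dependency-free sketch of the quantity described above may help frame the question. It assumes a hypothetical simplification: the spectrum is reduced to a single scalar `weight` per sample, and splatting uses the nearest pixel instead of Mitsuba's `ImageBlock` reconstruction filter. Under those assumptions the total film value is linear in `weight`, so its derivative equals the number of samples, which a central finite difference confirms. The names `render_film`, `total` and `d_total_d_weight` are illustrative only.

```python
import random

def render_film(weight, n_samples=1000, size=32, seed=0):
    """Splat n_samples random points with a scalar weight into a size x size film.

    Hypothetical stand-in: nearest-pixel (box) splatting and one scalar
    instead of XYZ values."""
    rng = random.Random(seed)  # fixed seed so every call draws the same positions
    film = [[0.0] * size for _ in range(size)]
    for _ in range(n_samples):
        # Same scaling as `32 * sampler.next_2d()` in the code above
        x, y = size * rng.random(), size * rng.random()
        film[int(y)][int(x)] += weight
    return film

def total(film):
    """Sum of all pixel values in the film."""
    return sum(sum(row) for row in film)

def d_total_d_weight(weight, eps=1e-3):
    # Central finite difference; matches the analytic value (n_samples)
    # up to rounding, because total() is linear in weight.
    return (total(render_film(weight + eps)) - total(render_film(weight - eps))) / (2 * eps)

print(total(render_film(1.0)))  # 1000.0
print(d_total_d_weight(0.5))    # approximately 1000
```

In the real setup the derivative would instead be taken through `spectrum_to_xyz` and the reconstruction filter with Enoki's autodiff, which is what `ENABLE_GRADIENTS` enables.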

Crash 1

Sample code for a Jupyter notebook:

With `ENABLE_GRADIENTS = False`, it works fine. Once you set `ENABLE_GRADIENTS = True`, it crashes:

Crash 2

Exactly the same code (without derivatives for simplicity), but now I put the rendering loop into a separate Python function:

Crashes: