Hi! You had closed the issue. Did you get your code working?

IkhlasAhmad1998 closed this issue 3 years ago.


Yup, thanks! One question not related to this library, but I hope you can help. I have individual binary masks for every class (and the masks overlap), so how should I preprocess them? Should I just stack them on top of each other to create a mask of shape (None, None, 5) for 5 classes? Does adding a new mask for the background make sense in this case? Secondly, I am using sigmoid with total_loss and IOU. Should I change that? I also have a small number of images (471); which split ratio should I adopt for train/val/test? Lastly, does increasing the number of images with augmentation help? Thanks

Some of the answers depend on what you want to achieve, but I can try.

(H, W, 5) is fine if each pixel has been assigned a non-zero value in at least one of the 5 channels. If not, you should also add the background class. Something like this would do the trick:

import numpy as np

targets = ... # array with shape (H, W, CH)

_bg = (targets.sum(-1) == 0)[...,None] # background pixels = pixels that are not assigned to any class

targets = np.concatenate([targets, _bg], -1) # array with shape (H, W, CH+1)

… replace categorical_focal_dice_loss with your IOU-loss implementation (or use the dice_loss https://github.com/qubvel/segmentation_models/blob/master/segmentation_models/losses.py#L243) in the cell [11]:

optimizer = tf.keras.optimizers.Adam(LR)

loss = iou_loss # <- your IOU-loss here, used to be sm.losses.categorical_focal_dice_loss

metrics = [sm.metrics.IOUScore(threshold=0.5), sm.metrics.FScore(threshold=0.5)]

model.compile(optimizer=optimizer, loss=loss, metrics=metrics)

… model.fit, i.e. assigning steps_per_epoch larger than len(training_generator) would throw an error with ImageDataAugmentor. However, you can simply run more epochs: the augmentations ensure that the model keeps seeing new data. Your dataset is fairly small, so you can experiment manually to find when your model starts overfitting, or you can automate the process using callbacks.

Thank you so much @mjkvaak for such a great explanation. I am a bit confused about whether adding the background as a separate class would help or not. My classes are already in (0, 1) format, where 1 is the ROI and 0 is the background, but there is no separate mask for the background. So should I add the background, or does sigmoid take care of it? Thanks

One more thing: you say KFold is a better choice for small datasets, but I think KFold is just used to check which model performs better on different variants of the dataset. Am I right? As far as I know we can't use it to build the model, or can we?

If yes, then how can we combine the 3 models from KFold into a single one to evaluate on the test data and to get predictions? Thanks

You are correct, when using sigmoid you don't need the background class in your channels: then each pixel in each channel is essentially a binary classification task. Sorry for the confusion.
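To make the two target layouts concrete, here is a minimal NumPy sketch, assuming each class mask is a separate (H, W) binary array. The helper name stack_masks and the toy masks are hypothetical. With sigmoid outputs the stacked (H, W, C) array can be used as-is, even where masks overlap; the optional background channel only matters for a softmax setup, which additionally assumes mutually exclusive classes.

```python
import numpy as np

def stack_masks(masks, add_background=False):
    """Stack per-class binary masks of shape (H, W) into an (H, W, C) target.

    With sigmoid outputs, overlapping classes are fine: each channel is an
    independent binary task. A softmax setup assumes mutually exclusive
    classes and needs a background channel for pixels that belong to no class.
    """
    target = np.stack(masks, axis=-1).astype(np.float32)      # (H, W, C)
    if add_background:
        bg = (target.sum(axis=-1, keepdims=True) == 0).astype(np.float32)
        target = np.concatenate([target, bg], axis=-1)         # (H, W, C+1)
    return target

# Toy example: 5 hypothetical 4x4 masks, two of which overlap at pixel (0, 0)
masks = [np.zeros((4, 4), dtype=np.uint8) for _ in range(5)]
masks[0][0, 0] = 1
masks[1][0, 0] = 1   # overlaps with class 0
masks[2][1, 1] = 1

multi_label = stack_masks(masks)                   # for sigmoid: (4, 4, 5)
with_bg = stack_masks(masks, add_background=True)  # background appended: (4, 4, 6)
print(multi_label.shape, with_bg.shape)
```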

You can train K models with KFold cross-validation by iteratively looping over the different training and validation sets. Try something like this:

import numpy as np

from sklearn.model_selection import KFold

...

SEED = 1

X = np.random.randint(0, 255, (300,224,224,3)) # <- replace this with your images

Y = np.random.randint(0, 2, (300,224,224,5)) # <- replace this with your masks (the upper bound is exclusive, so 2 gives 0/1 values)

kf = KFold(n_splits=3, shuffle=True, random_state=SEED)

folds = {i:[train_indices, val_indices] for i, (train_indices, val_indices) in enumerate(kf.split(X))}

# 3-fold CV training

models = []

for i in folds.keys():
    print(f"Fold {i+1} / {len(folds)}")

    train_images = X[folds[i][0]]
    train_masks = Y[folds[i][0]]
    val_images = X[folds[i][1]]
    val_masks = Y[folds[i][1]]  # validation masks must use the validation indices

    image_datagen = ImageDataAugmentor(augment=AUGMENTATIONS, input_augment_mode='image', seed=SEED)
    mask_datagen = ImageDataAugmentor(augment=AUGMENTATIONS, input_augment_mode='mask', seed=SEED)

    train_image_generator = image_datagen.flow(train_images, batch_size=1)
    train_masks_generator = mask_datagen.flow(train_masks, batch_size=1)  # masks go through the mask generator
    train_generator = zip(train_image_generator, train_masks_generator)

    val_image_generator = (ImageDataAugmentor(input_augment_mode='image', seed=SEED)
                           .flow(val_images, batch_size=1))
    val_masks_generator = (ImageDataAugmentor(input_augment_mode='mask', seed=SEED)
                           .flow(val_masks, batch_size=1))
    val_generator = zip(val_image_generator, val_masks_generator)

    model = None
    epochs = ...
    callbacks = ...
    model = ...  # build a fresh model for every fold

    _ = model.fit(
        train_generator,
        steps_per_epoch=len(train_image_generator),  # a zip object has no len()
        epochs=epochs,
        callbacks=callbacks,
        validation_data=val_generator,
        validation_steps=len(val_image_generator),
    )

    model.save(f"model-fold-{i}.h5")
    models.append(model)

The loop will train 3 models on 3 different splits of the data. The 3 models can later be "ensembled as one", for example by taking the average of their predictions like this:

x_tensor = ... # <- your item(s) here

y_pred = np.average([model.predict(x_tensor) for model in models], 0)

Again, thanks @mjkvaak for being such a nice person. I will try it and let you know if I get stuck. One thing I noticed is that you used two generators, for images and masks separately, and then zipped them together, but I have done it like this:

train_datagen = ImageDataAugmentor(augment=AUGMENTATIONS, input_augment_mode='image', label_augment_mode="mask", preprocess_input=preprocess_input)

train_generator = train_datagen.flow(train_images_s, train_masks_s, batch_size=b)

using the keyword argument label_augment_mode="mask", and it works fine for me. Is it a valid approach, or do I have to use separate generators for images and masks and zip them into a single generator? Which one should I use?

If using KFold, instead of creating a new model on every fold, why wouldn't we train the same model again and again with different train and val datasets?

And can we change the hyperparameters, like the learning rate and the number of epochs, in every fold? While creating a new model every time, can we change the backbone in each fold, or do we have to stick with the same configuration?

Thanks

No probs, you may consider giving the repo a star in return ;). Your way of stacking the inputs and masks into a single .flow call looks legit and more elegant: there are multiple ways of achieving the same result. Good find!

Regarding your question about the KFold approach: with the code I posted above, you would essentially be training the same model architecture (not the same model) "again and again with different train and val dataset's", as you said. You don't want to train the same single model on the different folds, since then the train and val datasets would leak: the validation samples of a later fold were already used for training in an earlier fold (which is obviously bad).
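The leakage argument can be checked directly on the fold indices. This is a NumPy-only sketch; kfold_indices is a hypothetical stand-in for sklearn's KFold(shuffle=True) and will not reproduce its exact splits. It shows that within a fold train and val are disjoint, but a model carried over from fold 0 would already have trained on every sample in fold 1's validation set.

```python
import numpy as np

def kfold_indices(n_samples, n_splits, seed=1):
    """Yield (train_idx, val_idx) pairs; a NumPy-only stand-in for a shuffled K-fold split."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_samples)
    for val_idx in np.array_split(perm, n_splits):
        train_idx = np.setdiff1d(perm, val_idx)
        yield train_idx, val_idx

n = 300
folds = list(kfold_indices(n, n_splits=3))
for i, (tr, va) in enumerate(folds):
    # Within a single fold, no sample is in both the training and validation set
    assert np.intersect1d(tr, va).size == 0
    print(f"fold {i}: {tr.size} train / {va.size} val")

# Every sample is validated exactly once across the folds
all_val = np.concatenate([va for _, va in folds])
assert np.array_equal(np.sort(all_val), np.arange(n))

# But fold 1's validation samples are all part of fold 0's training set,
# so reusing one model across folds would "validate" on data it has seen
leaked = np.intersect1d(folds[0][0], folds[1][1])
print(f"{leaked.size} of fold 1's {folds[1][1].size} val samples were in fold 0's train set")
```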

I have starred the repo, is there anything more I can do? Thanks for clarifying the previous comment, but I have updated it: https://github.com/mjkvaak/ImageDataAugmentor/issues/17#issuecomment-914966669 Waiting for your kind response, and thanks for your precious time.

About changing the hyperparameters and model architecture while iterating the folds, in my opinion you should not do that. CV-training is a technique to check that the training setup you have selected generalizes well (provides good models) and that good performance of the training setup is not just an artefact of manual overfitting.

Of course, you can always train several different CV-ensembles with different parameters, see what works well and ensemble the well-performing models, e.g. in the way that I showed above.
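Averaging is the simplest way to combine such an ensemble. Below is a minimal NumPy sketch, assuming every model outputs sigmoid probabilities of shape (N, H, W, C) for the same inputs. The helper ensemble_predict and the toy probability maps are hypothetical, and 0.5 is just a default threshold. np.average with its weights argument is one option if some models should count more.

```python
import numpy as np

def ensemble_predict(prob_maps, threshold=0.5):
    """Average sigmoid probability maps from several models, then binarize.

    prob_maps: list of arrays, each (N, H, W, C), one per model (e.g. per CV fold).
    """
    mean_prob = np.mean(np.stack(prob_maps, axis=0), axis=0)  # (N, H, W, C)
    return mean_prob, (mean_prob > threshold).astype(np.float32)

# Hypothetical outputs of 3 fold models for one 2x2 image with 1 class
p1 = np.array([[[[0.9], [0.2]], [[0.6], [0.1]]]])
p2 = np.array([[[[0.8], [0.4]], [[0.7], [0.1]]]])
p3 = np.array([[[[0.7], [0.3]], [[0.5], [0.4]]]])

mean_prob, mask = ensemble_predict([p1, p2, p3])
print(mean_prob[0, :, :, 0])
print(mask[0, :, :, 0])
```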

Good luck with the project!

@mjkvaak you just made my day 👍 its so nice of you thanks :)

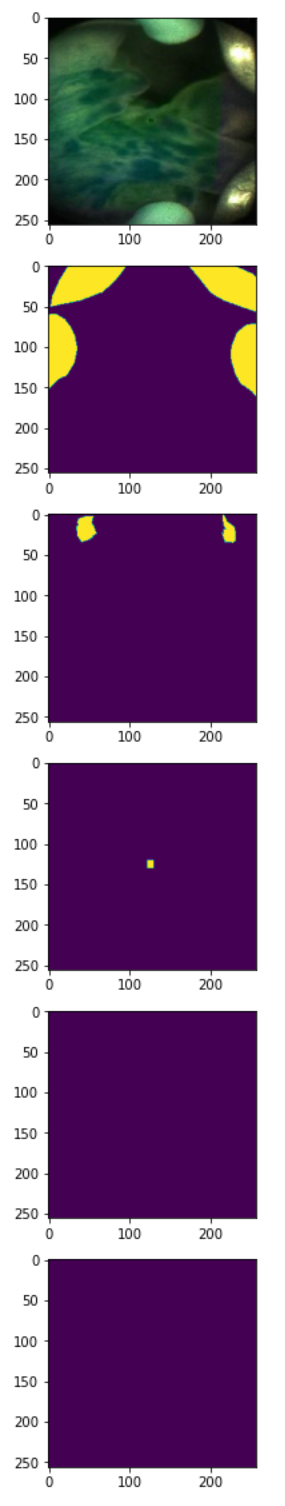

Last question: as in the image above, there are predictions on all masks. What's the solution to get the best predicted result? I mean, how do I remove those watermarks from the prediction?

If the classes are mutually exclusive, you could try something like this:

y_pred = np.random.uniform(0,1, (256,256,5)) # <- your prediction here, array with shape (H, W, CH)

y_pred = [(y_pred.argmax(-1) == v) for v in range(y_pred.shape[-1])]

y_pred = np.stack(y_pred, axis=-1).astype('float')

If the classes are not mutually exclusive (which I suspect they are not, because of the sigmoid activation), then you should do thresholding, e.g.

y_pred = np.random.uniform(0,1, (256,256,5)) # <- your prediction here, array with shape (H, W, CH)

y_pred = (y_pred > 0.5).astype('float') # <- all predicted values above (below) 0.5 mapped to float 1. (float 0., resp.)

Thresholding will likely work well, since I believe the "watermarks" in reality have very low confidence but are overly visible in the plots because of matplotlib's rescaling.
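The rescaling effect is easy to reproduce numerically. In this sketch the channel values are hypothetical, and the min-max formula mimics matplotlib's default linear normalization when imshow is called without vmin/vmax. A faint map still looks fully "bright" on screen, yet nothing survives thresholding.

```python
import numpy as np

# Hypothetical sigmoid output for one channel: only faint "watermark" activations
channel = np.array([[0.01, 0.02],
                    [0.05, 0.10]])

# What an autoscaled plot shows: values min-max rescaled to [0, 1]
# (mimics the default linear normalization of imshow without vmin/vmax)
displayed = (channel - channel.min()) / (channel.max() - channel.min())

# What the model actually predicts after thresholding at 0.5
binary = (channel > 0.5).astype(float)

print(displayed.max())  # the brightest displayed pixel despite a max probability of 0.10
print(binary.sum())     # number of pixels that survive thresholding
```

Passing vmin=0, vmax=1 to plt.imshow fixes the color scale to the probability range, so low-confidence predictions are plotted as faint instead of being stretched.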

You asked above if there is anything else you could do. If this is academic work, please consider citing the repo also: instructions are at the bottom of README.md.

Okay, sure, I will cite it. Thanks 👍, I will contact you for any further guidance.

https://github.com/mjkvaak/ImageDataAugmentor/issues/17#issuecomment-914956399

In this code, why are you using input_augment_mode for both the images and the masks while creating the generators for the validation dataset?

Can I do something like:

In simple words, can I take the val split not from the original but from the augmented dataset? Since I am generating new samples, is it good to go?

I'm using input_augment_mode for both generators, since the idea is to feed both images and masks as inputs to their own generator instances. Notice that there were no targets specified in .flow (the default is None). Later, the zip function ties these generators together into a new iterator, which correctly yields the inputs and masks as the desired tuples. Your approach is different, since you feed both images and masks into the same generator and just specify the corresponding augmentation modes with input_augment_mode and label_augment_mode, respectively.
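The two-generator approach relies on both generators making the same random choices in the same order, which is what a shared seed is meant to provide. This sketch illustrates that principle with a hypothetical augmenter flip_stream; it is not ImageDataAugmentor's internal implementation, and it assumes each stream draws exactly one random number per sample.

```python
import numpy as np

def flip_stream(arrays, seed):
    """Hypothetical augmenter: yields each array, randomly flipped left-right.

    The flip decision comes from an RNG seeded with `seed`, so two streams
    created with the same seed make the same decisions in the same order.
    """
    rng = np.random.default_rng(seed)
    for a in arrays:
        yield np.fliplr(a) if rng.random() < 0.5 else a

rng = np.random.default_rng(0)
images = [rng.random((4, 4, 3)) for _ in range(6)]
# Masks derived from the images, so alignment can be verified after augmentation
masks = [(img[..., 0] > 0.5).astype(np.uint8)[..., None] for img in images]

SEED = 1
pairs = list(zip(flip_stream(images, SEED), flip_stream(masks, SEED)))

# Because both streams share a seed, every (image, mask) pair stays aligned
for img, msk in pairs:
    assert np.array_equal((img[..., 0] > 0.5).astype(np.uint8)[..., None], msk)
print(f"{len(pairs)} aligned (image, mask) pairs")
```

If the two streams consumed their random numbers differently (for example, one transform drawing extra parameters only for images), the pairs would drift apart. Feeding images and masks through a single .flow call avoids that dependency.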

I would not split the data as you have planned, since there is a danger that the same original (but augmented) images leak from new_dataset into both the train and val sets. Furthermore, it is best practice not to augment your validation set. This would be a better approach, IMO:

Got it, but I actually have a very small number of images (471). After the train-test split (376 in train and 95 in test), I am generating new images using augmentation (1504 images in train now). Can I split this new dataset into train and val with KFold, or can't we use both techniques in a single pipeline?

At least I would not do that in the order you have suggested to prevent the data leakage.
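The leak-free order is: split the original images first, then augment only the training part. This is a minimal NumPy sketch, assuming a hypothetical helper split_then_augment and random 90-degree rotations as a stand-in for a real augmentation pipeline. It records which original image every sample came from, so overlap between train and val can be checked. With n_aug=3, 376 training originals would become 376 × 4 = 1504 samples, matching the numbers above. For KFold, the same idea applies inside each fold: split the original indices, then augment only that fold's training part.

```python
import numpy as np

def split_then_augment(images, val_fraction=0.2, n_aug=3, seed=1):
    """Split original images first, then augment only the training part.

    Returns (train_images, train_source_ids, val_images, val_source_ids);
    the source ids record which original image each sample came from.
    """
    rng = np.random.default_rng(seed)
    n = len(images)
    perm = rng.permutation(n)
    n_val = int(round(n * val_fraction))
    val_ids, train_ids = perm[:n_val], perm[n_val:]

    train_images, train_src = [], []
    for i in train_ids:
        train_images.append(images[i])   # keep the original
        train_src.append(i)
        for _ in range(n_aug):
            # Toy augmentation: random 90-degree rotation (stand-in for a real pipeline)
            train_images.append(np.rot90(images[i], k=int(rng.integers(1, 4))))
            train_src.append(i)
    val_images = [images[i] for i in val_ids]  # the validation set is NOT augmented
    return np.stack(train_images), np.array(train_src), np.stack(val_images), val_ids

rng = np.random.default_rng(0)
originals = rng.random((10, 8, 8, 3))  # 10 hypothetical square RGB images
tr_x, tr_src, va_x, va_src = split_then_augment(originals)
print(tr_x.shape, va_x.shape)
```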

Oh okay. In my case, I tried k-fold and increasing the dataset separately, but neither of them improves my results much. I am getting an IOU score of 0.40 on the test set, while training goes to 0.60.

I am using the U-Net architecture with a resnet101 backbone and encoder_freeze set to True. What could be the possible reasons?

I have stored my masks as a multi-page TIFF file and read it using the tifffile module like:

mask = tifffile.imread('mask.tif', 0)

Is there something wrong?

Based on the images you sent earlier, it seems that your model is learning: it just may not be learning enough. First of all, I would test a considerably smaller backbone, like resnet18, since your task seems easy. With a smaller backbone, you can also afford a larger batch size. Large models overfit more easily, and there is no need to kill a fly with a cannon: you are already seeing the effects of overfitting in the discrepancy between the train and test IOU scores. Then I would experiment with different losses, learning rates and augmentations.

I encourage you to seek an answer from somewhere else, e.g. stackexchange - the previous comments are not related to IDA anymore.

I will try your suggestions. Thanks for your precious time and continuous support.

@mjkvaak @BardiaKh I need urgent support. I have multiple binary masks for a single image. I am using augmentation like this:

but it applies different augmentations to the images and masks, as shown by the following code:

It displays:

Kindly tell me how I can augment an image with multiple targets in the same way using your library. Thanks