Thanks for your interest in our paper!

- Yes

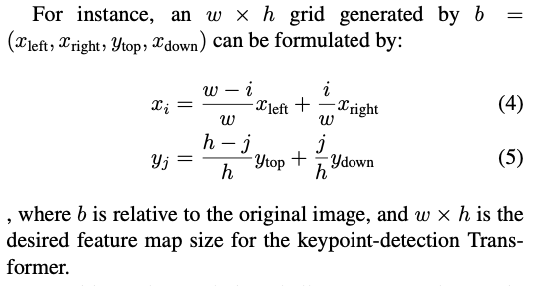

- `person_per_image` is constant across images; you can think of it as the maximum number of persons PRTR takes in for each image. Your understanding is correct: it is just like the `hs` in DETR, but it considers only a single object class, person.
- It depends. If you want features of the same spatial size, you need to apply the STN; if you are going to feed the features into a space/order-insensitive architecture such as a Transformer, you want to add the positional embeddings (PE).
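As a rough illustration of the STN point, here is a minimal sketch of cropping one fixed-size feature patch per person slot with PyTorch's `affine_grid` and `grid_sample`. It is not the code from the notebook: the function name `crop_person_features`, the normalized `(cx, cy, w, h)` box format, and the 8×8 output size are all assumptions made for the example. The number of slots `P` stands in for `person_per_image`, so the output shape is the same however many people are actually in each image.

```python
import torch
import torch.nn.functional as F


def crop_person_features(feature_map, boxes, out_size=(8, 8)):
    """Crop one fixed-size patch per (image, person slot). Hypothetical helper.

    feature_map: [B, C, H, W]
    boxes:       [B, P, 4] as (cx, cy, w, h), normalized to [0, 1] (assumed format)
    returns:     [B, P, C, out_h, out_w]
    """
    B, C, H, W = feature_map.shape
    P = boxes.shape[1]
    cx, cy, w, h = boxes.reshape(B * P, 4).unbind(-1)

    # Affine map from the output grid (in [-1, 1]) to input coordinates (in [-1, 1]).
    theta = torch.zeros(B * P, 2, 3, dtype=feature_map.dtype, device=feature_map.device)
    theta[:, 0, 0] = w           # x scale: box width as a fraction of the image width
    theta[:, 0, 2] = 2 * cx - 1  # x shift: box centre mapped to [-1, 1]
    theta[:, 1, 1] = h           # y scale
    theta[:, 1, 2] = 2 * cy - 1  # y shift

    # Repeat each image's feature map once per person slot.
    feats = feature_map.unsqueeze(1).expand(B, P, C, H, W).reshape(B * P, C, H, W)

    grid = F.affine_grid(theta, [B * P, C, out_size[0], out_size[1]], align_corners=False)
    crops = F.grid_sample(feats, grid, align_corners=False)
    return crops.reshape(B, P, C, out_size[0], out_size[1])


B, P, C = 2, 5, 16  # P plays the role of person_per_image
feature_map = torch.randn(B, C, 32, 24)
boxes = torch.rand(B, P, 4) * 0.5 + 0.25  # random boxes, for illustration only
crops = crop_person_features(feature_map, boxes)
print(crops.shape)  # torch.Size([2, 5, 16, 8, 8])
```

Every crop has the same spatial size regardless of the box size, which is what makes the patches batchable. If the patches were then flattened and fed to a Transformer, positional embeddings would need to be sampled or added separately, since the cropping itself carries no position information.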

Hello, I have some questions about the sequential variant (annotated_prtr.ipynb):

- In the `forward()` function in cell 4, the dimension of `hs` is `[B, person_per_image, f]`. Is `f` here the transformer's dimension (similar to `d_model` in DETR's transformer)?
- For these two lines of code in preparation for STN feature cropping in cell 4:

  I am a little bit confused by `person_per_image`, since the number of persons is likely different in each image. Is `hs` here similar to the `hs` in DETR, whose dimension is `[batch_size, num_queries, d_model]`?
- If I only need the cropped features and don't use the Transformer (`transformer_kpt`) for further keypoint detection, do I have to build positional embeddings (as in cell 3) and apply them to STN feature cropping?

Looking forward to your answers. Thanks in advance!