Does it still work if you load the callback like crazy? Try putting a `Sleep` or something similar in it.
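One way to do that in a test harness is to wrap the real callback so that every call sleeps first. The sketch below is in Python. The callback shape `callback(output, nframes) -> frames_written` and the helper names are hypothetical and do not belong to any particular library. In a real Windows backend, the equivalent would be a `Sleep()` call inside the audio callback. Sleeping for most of a device period (here 8 ms of a 10 ms period) is a reasonable way to probe code that assumes the callback returns quickly.

```python
import time

def make_loaded_callback(inner, load_seconds):
    """Wrap a data callback so each call first sleeps for `load_seconds`.

    `inner(output, nframes) -> frames_written` is a hypothetical callback
    shape, not a specific library's API.
    """
    def loaded(output, nframes):
        time.sleep(load_seconds)  # stand-in for heavy DSP work, like Sleep() on Windows
        return inner(output, nframes)
    return loaded

def silence(output, nframes):
    """Hypothetical inner callback: write nframes of mono silence."""
    output[:nframes] = [0.0] * nframes
    return nframes

# Simulate 8 ms of work per 480-frame (10 ms at 48 kHz) callback.
callback = make_loaded_callback(silence, 0.008)
buffer = [1.0] * 480
start = time.perf_counter()
written = callback(buffer, 480)
elapsed = time.perf_counter() - start
print(written, f"{elapsed * 1000:.1f} ms")
```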

For now, we mostly use the lowest latency all the time (because we use this for WebRTC and other real-time work). However, we will need to implement higher-latency channels in the future to reduce battery consumption and CPU usage, so it would be great if this were solved and solid.

Let me know if I can help, e.g. by running some tests on a sound card/OS combination that you don't have.

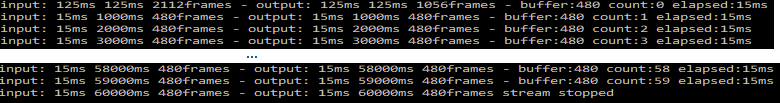

4 times it has looked like this:


I believe this is a limitation of WASAPI shared mode: regardless of the requested buffer size, callback events still arrive at the default device period.
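The arithmetic behind this is easy to see in a toy model. The following Python sketch is a simulation, not WASAPI code. It assumes that the engine drains exactly one device period between events and that the client always fills all free space. In WASAPI shared-mode rendering, free space is typically computed as the buffer size from `IAudioClient::GetBufferSize` minus `IAudioClient::GetCurrentPadding`. The function name is hypothetical. A 10 ms period at 48000 Hz is 48000 × 0.010 = 480 frames.

```python
def simulate_shared_mode(buffer_frames, period_frames, events):
    """Toy model: frames the client is asked to write on each refill event."""
    padding = 0  # frames queued in the buffer but not yet played
    requests = []
    for i in range(events):
        if i > 0:
            # Between events the engine plays exactly one device period.
            padding = max(padding - period_frames, 0)
        free = buffer_frames - padding  # space available to write
        requests.append(free)
        padding += free  # client fills all free space
    return requests

sample_rate = 48_000
period_frames = sample_rate * 10 // 1000  # 10 ms period -> 480 frames
print(simulate_shared_mode(4096, period_frames, 4))  # [4096, 480, 480, 480]
```

In this model only the first request covers the whole buffer, and every later request is one device period, however large the buffer is.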

For example, on my computer (Windows 10, Cirrus Logic CS4208 speakers set to 24-bit 48000Hz), the default device period is 10 milliseconds / 480 frames. In

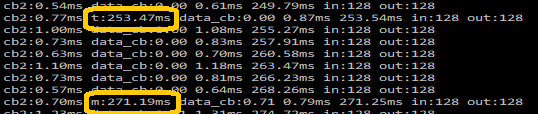

test_audio, which requests a latency of 4096 frames, the first callback gets to fill the entire buffer (4096 frames), but each additional callback gets a number of frames equivalent to the rate-adjusted device period.One strategy that seems to work is to ignore WASAPI refill events (e.g. don't call

IAudioRenderClient::GetBuffer) until the desired number of frames is available to be written to. Of course, since the buffer size is equal to the desired number of frames, this causes audio glitches since the audio engine runs out of data. The default buffer size seems to be twice the period plus some margin, so I tried a buffer that's a little more than twice the requested latency, and that seemed to fix the glitches.
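The workaround can be checked with a toy simulation. This Python sketch is not WASAPI code. It assumes that the engine drains one device period per event. It skips an event whenever fewer than `latency_frames` are free, and otherwise writes exactly `latency_frames`. That write is the point where a real backend would call `IAudioRenderClient::GetBuffer` and `ReleaseBuffer`. The function name and the one-period margin are hypothetical choices for illustration.

```python
def simulate_gated(buffer_frames, latency_frames, period_frames, events):
    """Toy model of skipping refill events until `latency_frames` are free.

    Returns (writes, underruns): the size of each write, and how many times
    the engine found less than one period queued (an audible glitch).
    """
    padding = 0  # frames queued but not yet played
    writes, underruns = [], 0
    for i in range(events):
        if i > 0:
            if padding < period_frames:
                underruns += 1  # engine ran out of data
            padding = max(padding - period_frames, 0)
        if buffer_frames - padding >= latency_frames:
            # A real backend would call GetBuffer/ReleaseBuffer here.
            writes.append(latency_frames)
            padding += latency_frames
        # Otherwise ignore this event and wait for more free space.
    return writes, underruns

margin = 480  # hypothetical safety margin: one 10 ms period at 48 kHz
small = simulate_gated(4096, 4096, 480, 40)
large = simulate_gated(2 * 4096 + margin, 4096, 480, 200)
print(small[1], large[1])  # 4 0
```

When the buffer equals the latency, the model underruns once per refill cycle. With twice the latency plus one period, it never underruns, at the cost of keeping between roughly one and two times the requested latency queued.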