I only looked at your message and comments for now, but again I'm very impressed. Will take a look at the rest soon, for now I haven't seen anything which would make me hesitant to merge this.

Closed gfoidl closed 2 years ago

I only looked at your message and comments for now, but again I'm very impressed. Will take a look at the rest soon, for now I haven't seen anything which would make me hesitant to merge this.

Is there a significant advantage of not just using the shared MemoryPool

The default MemoryPool (aka Shared instance) allocates a ArrayMemoryPoolBuffer (implementation detail, but can be seen with a profiler) for each rent. These allocations are eliminated by using a special memory pool.

That's the main point for me, as I need to run the monitoring as a service / daemon potentially on a device with as little memory footprint / usage as possible.

That pinning the memories (on .NET 5 onwards) becomes effectively free is a nice spin-off of the custom pool.

ad var:

I like to see which type it is (without the help from VS), so it's easier for my brain to follow along the code. Also for me it's additional compiler-safety to get an error if the return type (from your example) doesn't match.

Anyway, if you did mention that before, then of course I'd follow the code-style in this repo.

ad POH: Conceptionally the pinned object heap is part of GC's gen 2, same as normal gen 2 segments, and the LOH. So no other count or size limits apply, than the ones do for normal gen 2 segments. For each read from the PLC there are three rents from the pool, where two are at the same time => 10k 960 bytes 2, which is roughly 20 MB, and that isn't a problem for the GC / POH. For the "specific" use case with 1000s of concurrent reads, the pool could be split into several pool like one per core. Similar to what ArrayPool.Shared does. But this adds quite a lot of complexity, and it's hard to get this right, so that it's beneficial for perf. I doubt this will have a net-positive effect here though -- one would need to measure it. Anyway for this library something more sophisticated shouldn't be implemented, as any user can inject their special / custom pool.

ad

var: I like to see which type it is (without the help from VS), so it's easier for my brain to follow along the code. Also for me it's additional compiler-safety to get an error if the return type (from your example) doesn't match. Anyway, if you did mention that before, then of course I'd follow the code-style in this repo.

No worries, it's actually funny how the compiler-safety vs inference is a pro for one and a con for the other. I'm also unaware of what other (larger) projects are using, because for the sake of compliance I would consider different code styles in open source projects. Actually professionally I'm not using underscore prefixes for fields. Personally I'd like to change that, but the team prefers to stick to camelCase (because that's what they're used to).

Anyway, it doesn't bother me, otherwise I would've requested changes. Maybe my wording (not fond of) wasn't the best either, I guess having that as one of the two remarks should count as a compliment 😉

ad POH: Conceptionally the pinned object heap is part of GC's gen 2, same as normal gen 2 segments, and the LOH. So no other count or size limits apply, than the ones do for normal gen 2 segments. For each read from the PLC there are three rents from the pool, where two are at the same time => 10k 960 bytes 2, which is roughly 20 MB, and that isn't a problem for the GC / POH.

I the end I was thinking the same shouldn't be an issue.

For the "specific" use case with 1000s of concurrent reads, the pool could be split into several pool like one per core. Similar to what ArrayPool.Shared does. But this adds quite a lot of complexity, and it's hard to get this right, so that it's beneficial for perf. I doubt this will have a net-positive effect here though -- one would need to measure it. Anyway for this library something more sophisticated shouldn't be implemented, as any user can inject their special / custom pool.

I agree, this is out of the scope of Sally7. Actually for our case with 1000s of concurrent reads Sally7 in no way will be the bottleneck at this moment. I also don't have any performance figures for how fast we're actually performing the reads (and writes, but reads are far more important in our case), it has always been fast enough so priority has always been in other areas.

Anyway, thanks again for your contribution!

Description

Reading values from a PLC was run with a profiler, there some allocations appeared that could be avoided. This PR tries to reduce some of these allocations.

Some APIs needed for this are only available beginning with .NET 5, hence a new build-target was added.

Benchmarks

Benchmarks for reading from a SoftPLC

The PLC is run in virtual machine (https://github.com/fbarresi/SoftPlc docker), and simple short-values are read. Measured time isn't that accurate here (network latencies, etc.), and they are quite flaky, but my main point is reduction of allocations.

Baseline

Custom memory pool

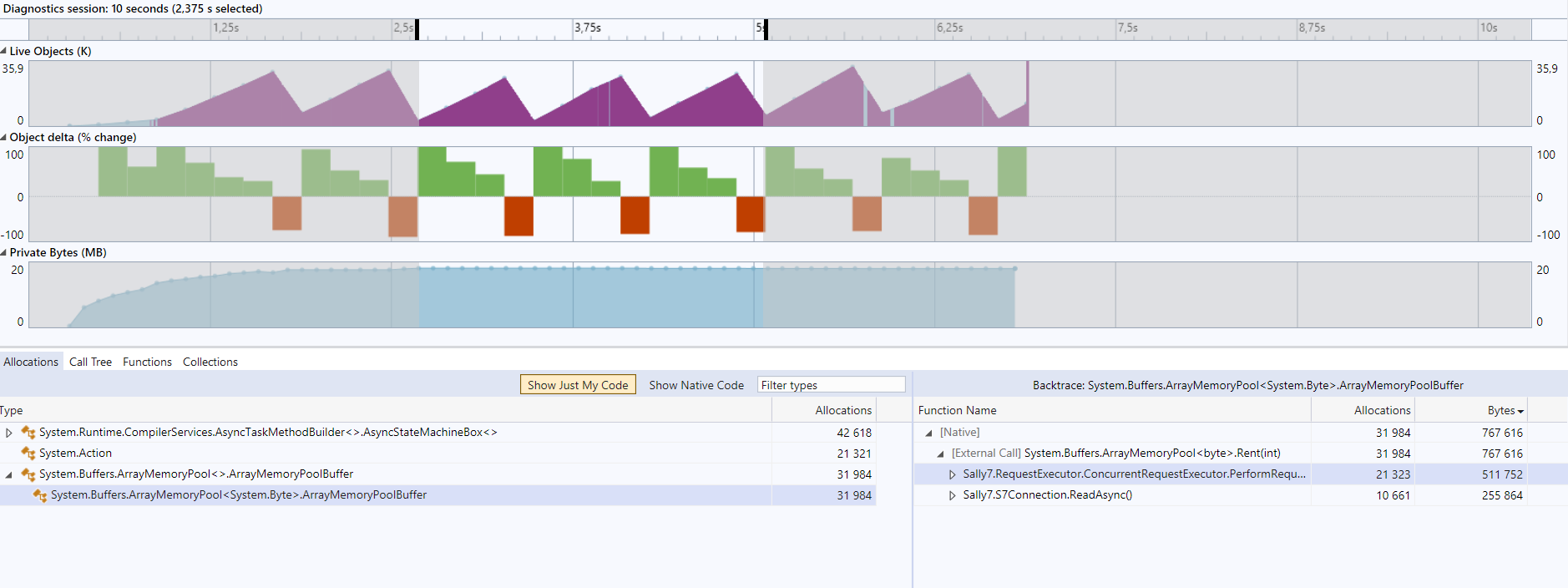

As mentioned above a profiler showed allocations for the default (= shared) memory pool.

Before:

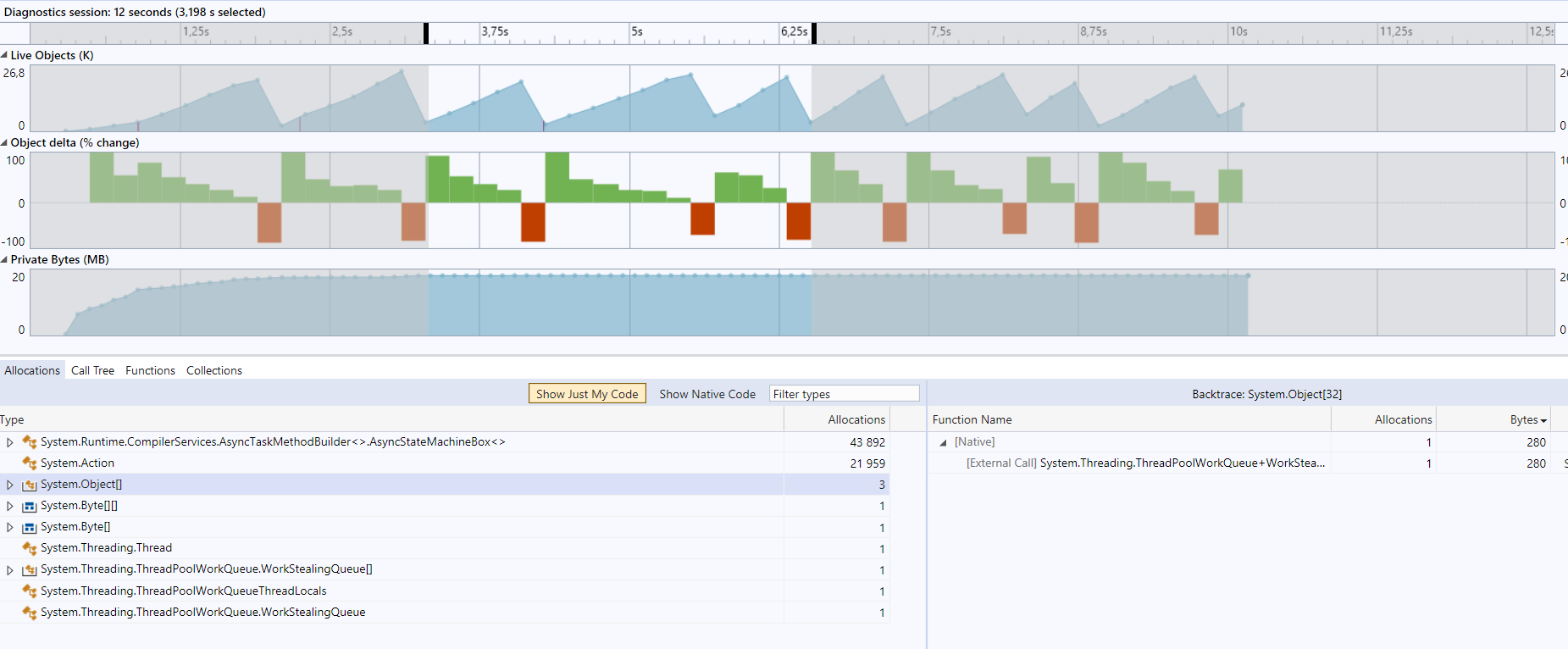

After:

The custom memory pool is free, and pinning the memory needs to be done in the socket.

Sally7MemoryPoolhas the advantage over theArrayPool<byte>.Sharedthat the memories are created from already pinned arrays (on .NET 5 onwards only), so that pinning the MemoryFinal

Custom memory pool + ValueTask (VT) based socket operations (for > .NET Standard 2.1)

ActiontoAsyncStateMachineBox<>got better from 21.959 / 43.892 to 13.461 / 53.836 -- so less allocations for action-delegates are needed.As the code for .NET 5 and .NS 2.1 is quite different from the code for older runtimes (sometimes referred as "desktop"), two separate cs-files are created, and included via settings in the csproj-file, instead of using conditional compilation.

Note: the difference of 72 bytes to the previous step (custom memory pool) is exactly the size of a task (that is saved by the value task).

The VT based version tries to read as much from the socket, namely the

SocketTpktReader, so ideally can avoid some further reads, as all data is already there.Benchmarks for the custom memory pool

To demonstrate the benefit of the custom memory pool a bit further, a benchmark that "simulates" a socket operation. I.e. rent a memory from the pool, pin the memory (for the IO-operation), then unpin and return the memory to the pool.

For .NET 5.0, and newer, the arrays are allocated on the pinned object heap (POH). Hence pinning the memory is free (or at very little cost), whilst pinning a memory, where the backing array isn't pre-allocated, has quite a huge cost. That's the main reason for the 3x speedup.

Benchmark code

```c# using System; using System.Buffers; using BenchmarkDotNet.Attributes; using Sally7.Infrastructure; [MemoryDiagnoser] public class BenchMemoryPool { private const int Size = 967; private static readonly Sally7MemoryPool s_sally7MemoryPool = new(Size); [Benchmark(Baseline = true)] public void Default() { using IMemoryOwner