import codecs
import glob
import queue
import threading

import lxml
from bs4 import BeautifulSoup
from CxExtractor import CxExtractor

# threshold tunes how long a block of text lines must be to count as body text
cx = CxExtractor(threshold=133)
uqueue = queue.Queue()

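def parserfile(f):
    """Manual extraction with bs4: collect link text and table cells from one report."""
    # f = open(fpath).read()
    with open(f, encoding='utf-16le') as c:
        content = c.read()
    soup = BeautifulSoup(content, 'lxml')
    texts = []
    # First link inside each .selfTable element
    for item in soup.select('.selfTable'):
        try:
            text = item.find_all('a')[0].text
            texts.append(text)
        except Exception as e:
            # e.g. IndexError when the element contains no <a>
            print(e)
    # Every cell inside each .OuterTable element
    for item in soup.select('.OuterTable'):
        if item:
            for sub in item.find_all('td'):
                texts.append(sub.text)
    return texts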

# parserfile(html)

# with codecs.open(html,encoding="utf-16") as f:

# parserfile(f.read())

# parserfile('../html/Contents0.html')

# get('.OuterTable')

htmls = glob.glob("../html/*.html")

def parserfile_auto(htmlpath):
    """Automatic extraction with CxExtractor: read the file, filter tags, extract text."""
    html = cx.readHtml(htmlpath, coding='utf-16le')
    content = cx.filter_tags(html)
    s = cx.getText(content)
    return s

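import os

# Make sure the output directory exists before writing into it
os.makedirs('./phone-text-auto', exist_ok=True)

for html in htmls:
    text = parserfile_auto(html)
    # text = parserfile(html)
    with open('./phone-text-auto/' + os.path.basename(html) + '.txt', 'w', encoding='utf-8') as textfile:
        # t = "\n".join(text)
        textfile.write(text)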

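I recently received a batch of data to analyze. The usual approach would be to parse it with bs4 and pull out the content. However, I had heard about automatic web content extraction a long time ago, so I decided to give it a try.

CxExtractor comes from cx-extractor-python. I know of several approaches to automatic web content extraction; the one tried in this post is based on line-block distribution (CxExtractor).

Usage is simply read -> filter -> extract.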

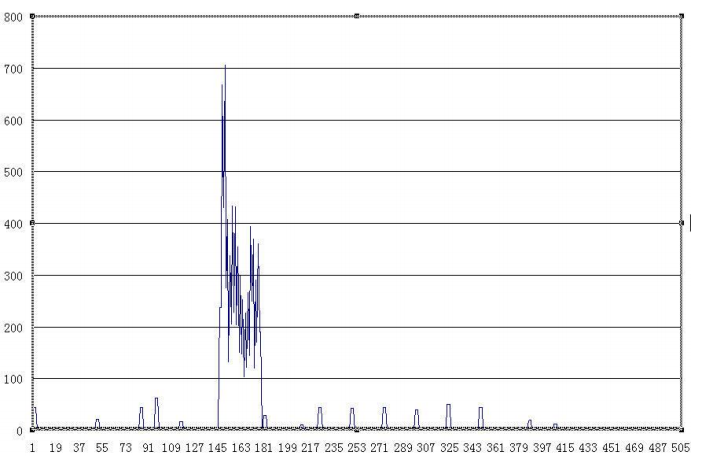
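To make the line-block idea concrete, here is a minimal sketch of how I understand it: strip the tags, measure how much text each run of consecutive lines holds, and keep the densest region. This is not the code of cx-extractor-python; the names `strip_tags` and `extract_main_text`, the regex-based tag stripping, and the default `block_width` and `threshold` values are all my own assumptions for illustration.

```python
import re


def strip_tags(markup):
    """Crudely drop scripts, styles, comments and tags while keeping line breaks."""
    markup = re.sub(r"(?is)<(script|style)\b.*?</\1\s*>", "", markup)
    markup = re.sub(r"(?s)<!--.*?-->", "", markup)
    return re.sub(r"<[^>]*>", "", markup)


def extract_main_text(markup, block_width=3, threshold=20):
    """Return the longest region whose line blocks are dense with text."""
    lines = [line.strip() for line in strip_tags(markup).splitlines()]
    # Size of a line = number of non-whitespace characters
    sizes = [len(re.sub(r"\s+", "", line)) for line in lines]
    n_blocks = max(len(lines) - block_width + 1, 0)
    # Block i covers lines i .. i + block_width - 1
    block_len = [sum(sizes[i:i + block_width]) for i in range(n_blocks)]

    best = ""
    i = 0
    while i < n_blocks:
        if block_len[i] <= threshold:
            i += 1
            continue
        # A region starts at a dense block and runs until a fully empty block
        j = i
        while j < n_blocks and block_len[j] > 0:
            j += 1
        end = j if j < n_blocks else len(lines)
        candidate = "\n".join(line for line in lines[i:end] if line)
        if len(candidate) > len(best):
            best = candidate
        i = j
    return best


SAMPLE = "\n".join([
    "<html><head><title>t</title><script>var x = 1;</script></head>",
    "<body>",
    "<div><a href='#'>Home</a></div>",
    "",
    "",
    "",
    "<p>This is the first long paragraph of the main article body text.</p>",
    "<p>This is the second long paragraph, also part of the main body.</p>",
    "",
    "",
    "",
    "<div>Footer</div>",
    "</body></html>",
])

print(extract_main_text(SAMPLE))
```

Keeping only the longest region is a simplification on my part. It also hints at why a page made of many small, evenly sized table rows is a hard case: no single region stands out as the body.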

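See the project page for details; it is not hard to understand, and it was easy to apply in my case. That said, the results in my scenario were not great. The HTML files here are reports of data extracted from phones, covering essentially everything: WeChat chat logs, deleted data, and so on. The reports are laid out very regularly, with no large main body of text, and most of the data is highly uniform. So the result is not 99% good, but it is already quite decent. A bit of extra processing is enough, which saved me a lot of time.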
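The extra processing is not spelled out above, so the following is only a guess at what a light cleanup pass could look like: trim each line, drop very short lines, and drop exact duplicates such as repeated report headers. The function name `clean_lines` and the `min_chars` cutoff are assumptions, and dropping duplicates may be wrong for chat logs where identical messages matter.

```python
def clean_lines(text, min_chars=2):
    """Trim lines, drop very short ones and exact repeats, keep original order."""
    seen = set()
    kept = []
    for line in text.splitlines():
        line = line.strip()
        # Skip near-empty lines and lines already emitted once
        if len(line) < min_chars or line in seen:
            continue
        seen.add(line)
        kept.append(line)
    return "\n".join(kept)


print(clean_lines("  Report header \n\nReport header\nx\nmessage one\n"))
```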

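The extracted text can then be segmented into words, run through keyword extraction, and drawn as a word cloud. One catch: wordcloud cannot render Chinese out of the box; you have to give it the path to a font that supports Chinese. I will not include the word cloud image here. As for association analysis, I think the promising directions are anomaly detection, transfer record analysis, chat log analysis, and topic modeling of the text. Analyzing this kind of data immediately reminded me of the Trump Twitter analysis in the neo4j sandbox, which is very similar.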
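For the segmentation and word cloud step, a minimal sketch could look like the one below. It assumes the third-party packages jieba and wordcloud are installed (they are imported lazily so the counting helper works without them). The helper names, the stopword handling, and the font path in the usage comment are placeholders of mine, not something from my actual script.

```python
from collections import Counter


def tokenize(text):
    """Segment Chinese text with jieba (third-party, imported on first use)."""
    import jieba
    return [tok.strip() for tok in jieba.lcut(text) if tok.strip()]


def keyword_frequencies(tokens, stopwords=(), min_len=2, top_k=100):
    """Count tokens, ignoring stopwords and tokens shorter than min_len."""
    stop = set(stopwords)
    counts = Counter(t for t in tokens if len(t) >= min_len and t not in stop)
    return dict(counts.most_common(top_k))


def render_wordcloud(frequencies, font_path, out_path):
    """Draw a word cloud; font_path must point to a font with Chinese glyphs."""
    from wordcloud import WordCloud
    cloud = WordCloud(font_path=font_path, width=800, height=600,
                      background_color="white")
    cloud.generate_from_frequencies(frequencies)
    cloud.to_file(out_path)


# Usage sketch (paths are placeholders, adjust to your own files):
# text = open("./phone-text-auto/some-report.html.txt", encoding="utf-8").read()
# freqs = keyword_frequencies(tokenize(text))
# render_wordcloud(freqs, "/path/to/a/chinese-font.ttf", "cloud.png")
```

Plain frequency counting is the simplest choice here; jieba also ships a TF-IDF based `jieba.analyse.extract_tags` that could be swapped in for the counting step.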