Heh, I was wondering about this recently myself. Nice investigative work with the `Affine` class. I think you are probably right that the correct interpretation is to compose the transformations, but the question is: in what order? And by the way, in general I don't think we should override the scale (with `nap_aff.scale = ...`). Instead, we should compose the affine with a new transform containing the scale.

Based on your suggestion (that elastix takes the scale as an input and then does the registration), it sounds to me like we should apply the scale/translate first and then the given affine.
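To make the ordering question concrete, here is a minimal NumPy sketch using 3x3 homogeneous matrices. The rotation, translation and scale values are made up for illustration, and `scale_matrix`, `rotation_2d` and `apply` are hypothetical helpers, not napari API. The point is that the two orders differ in whether the affine's translation gets scaled.

```python
import numpy as np


def scale_matrix(scale):
    """Homogeneous (N+1)x(N+1) matrix for an axis-aligned scale."""
    n = len(scale)
    m = np.eye(n + 1)
    m[:n, :n] = np.diag(scale)
    return m


def rotation_2d(theta, translate=(0.0, 0.0)):
    """Homogeneous 3x3 rotation + translation (a stand-in for an elastix result)."""
    c, s = np.cos(theta), np.sin(theta)
    m = np.eye(3)
    m[:2, :2] = [[c, -s], [s, c]]
    m[:2, 2] = translate
    return m


def apply(matrix, point):
    """Map a single 2D point through a homogeneous matrix."""
    p = np.append(point, 1.0)
    return (matrix @ p)[:-1]


S = scale_matrix([0.5, 0.5])
A = rotation_2d(np.pi / 2, translate=(10.0, 0.0))

scale_first = A @ S   # the scale acts on the point first, then the affine
affine_first = S @ A  # the affine acts first, then the scale (translation is halved too)

pt = np.array([2.0, 0.0])
print(apply(scale_first, pt))   # approximately [10. 1.]
print(apply(affine_first, pt))  # approximately [5. 1.]
```

With scale first, the translation of 10 survives untouched. With the affine first, the same translation is halved to 5, so the two orders place the image differently.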

@sofroniewn, what do you think?

🚀 Feature / Motivation

I am working on using transformations derived from elastix with napari's 2D linear transformation rendering. The good news is that it mostly works, but I have a question regarding physical scales. elastix takes these into account during registration and returns both a transformation and the "spacing" (the distance between pixels). When I use this transformation matrix in napari, the affine transform and the scale to physical dimensions aren't composed together. See the example below:

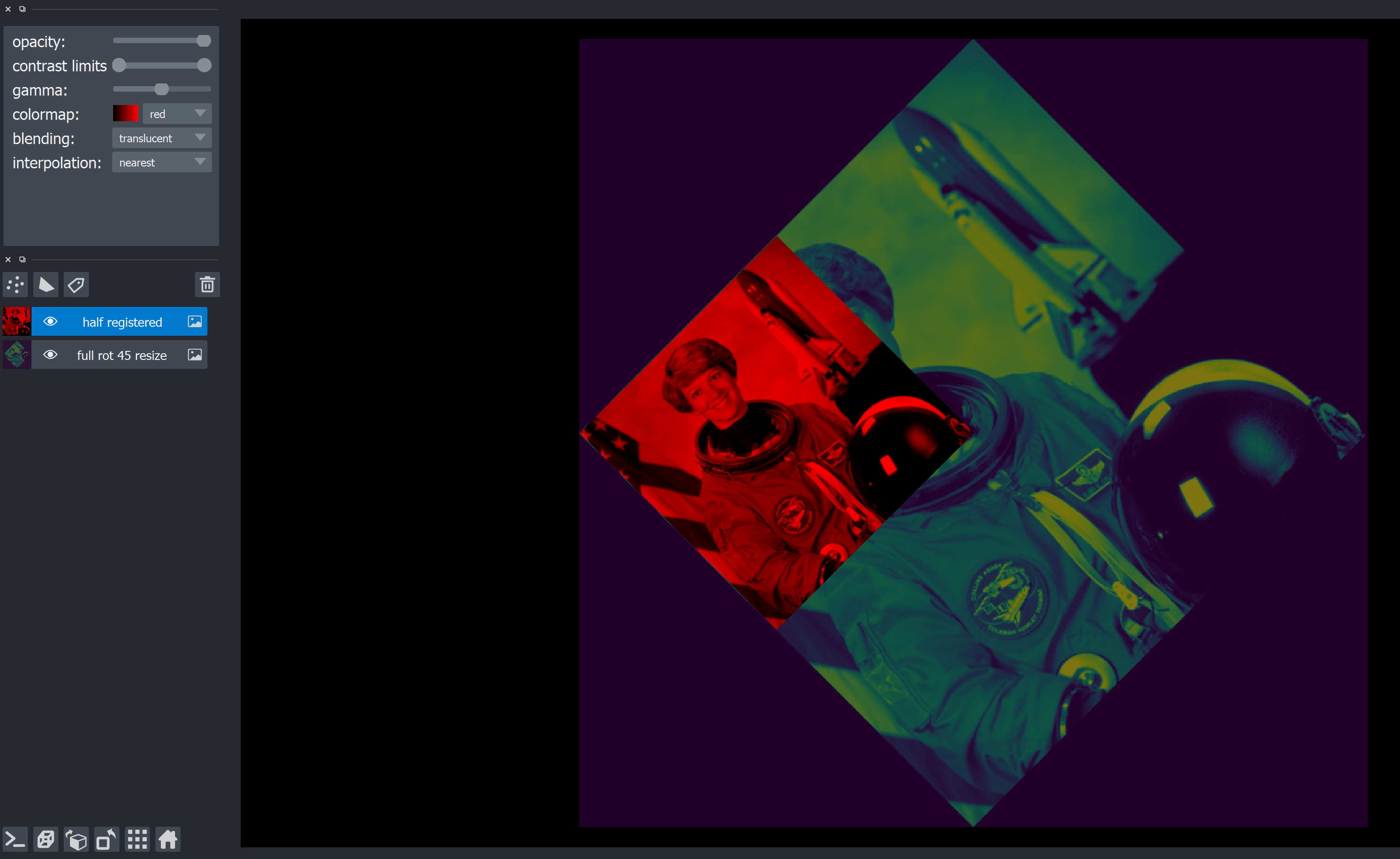

I get this:

The rotation and translation from elastix are applied correctly, but the fact that the image should be half the size is ignored. The matrix printed after the layer is initialized is identical to the original, which indicates that the `scale` argument is overridden or ignored when `affine != None`.

Pitch

Adding scaling to the affine matrix fixes this issue:
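A minimal sketch of what folding the scale into the affine might look like, assuming the scale-first order discussed above. `fold_scale_into_affine` is a hypothetical helper, and the elastix matrix and spacing below are illustrative values, not the ones from this issue.

```python
import numpy as np


def fold_scale_into_affine(affine, scale):
    """Return affine @ diag(scale, 1): pixel coordinates are scaled first,
    then the registration affine is applied (assumed scale-first order)."""
    affine = np.asarray(affine, dtype=float)
    scale = np.asarray(scale, dtype=float)
    n = scale.size
    if affine.shape != (n + 1, n + 1):
        raise ValueError(f"expected a {(n + 1, n + 1)} homogeneous matrix, got {affine.shape}")
    s = np.eye(n + 1)
    s[:n, :n] = np.diag(scale)
    return affine @ s


# Hypothetical elastix result: a pure translation, with 0.5 spacing on both axes.
elastix_affine = np.array([[1.0, 0.0, 3.0],
                           [0.0, 1.0, 4.0],
                           [0.0, 0.0, 1.0]])
spacing = (0.5, 0.5)
combined = fold_scale_into_affine(elastix_affine, spacing)

# The far corner of a 100 x 100 image lands at 50 + translation, i.e. half the size.
corner = combined @ np.array([100.0, 100.0, 1.0])
print(corner[:2])  # [53. 54.]
```

A matrix like `combined` could then be passed as the `affine=` argument when adding the image layer, in place of passing `scale=` separately. Whether scale-first is the order napari should use internally is still the open question above.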

I'm curious whether this is intentional. I'm happy to take a stab at a fix if someone can point me to where things need to change.