(rebased to pick up #30)

Closed ctk21 closed 3 years ago

(rebased to pick up #30)

I had a think about formalizing how multi_channel is turning a non blocking structure into a blocking one. If Thread A is enqueuing data and Thread B is dequeuing then

Thread A: Thread B:

A1 - enqueue data (non-blocking) B1 - poll for data (non-blocking)

A2 - check waiter queue and signal B2 - enqueue ourselves on the waiter queue

any blocking waiter B3 - poll for data again (non-blocking)

and block with waiter structureEach of A1, A2, B1, B2, B3 are ordered in the sense that completion is either Ax < By or Bx < Ay. I think we can then construct the following argument to show that Thread B will always pick up Thread A's enqueue:

if A1 < B1 then B will pick up the data in B1

else B1 < A1 then

if B2 < A1 then A will signal B in A2 and the data will be collected by B

else A1 < B2 then B3 is will execute after A1 and so B will pick up the dataI've implemented two improvements:

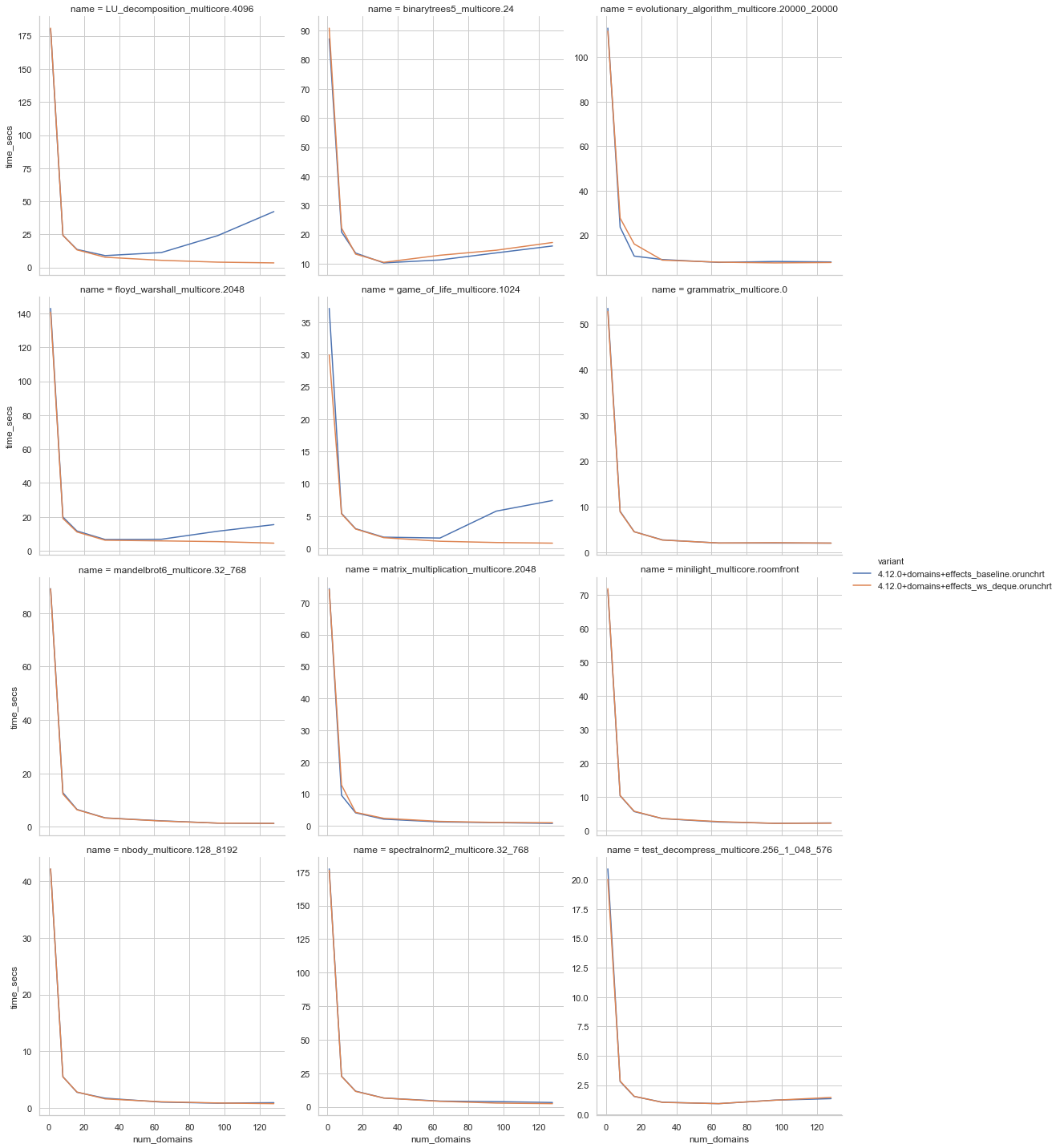

Atomic.set on the elements of the backing array in the dequeThe big benchmarks now look like this; Zen2 (not isolated) sandmark ed00c5, baseline is bc7c44, this pr is e69db8:

Broad brush, if you want to scale the task stealing is the way to go.

The benchmarks for the standard sizes show:

There is a single regression for our evolutionary_algorithm case. I spent some time with this one, there are two things to note:

I think we could do things about the first: we could throttle how we wake up blocked waiters and also limit the number of stealing domains. I'm in two minds about if we should pursue that in this PR or as follow ups.

So I did a bit more investigation on the evolutionary_algorithm results and found a memory bug. The tasks in the deque arrays will be overwritten, however all the memory held in the task closures are kept there until the memory in the deque array is overwritten.

I have implemented a fix where the deque array elements hold a reference to the item; this reference is clobbered when taking tasks. You can't touch the array itself in steal because the producer may reuse that slot.

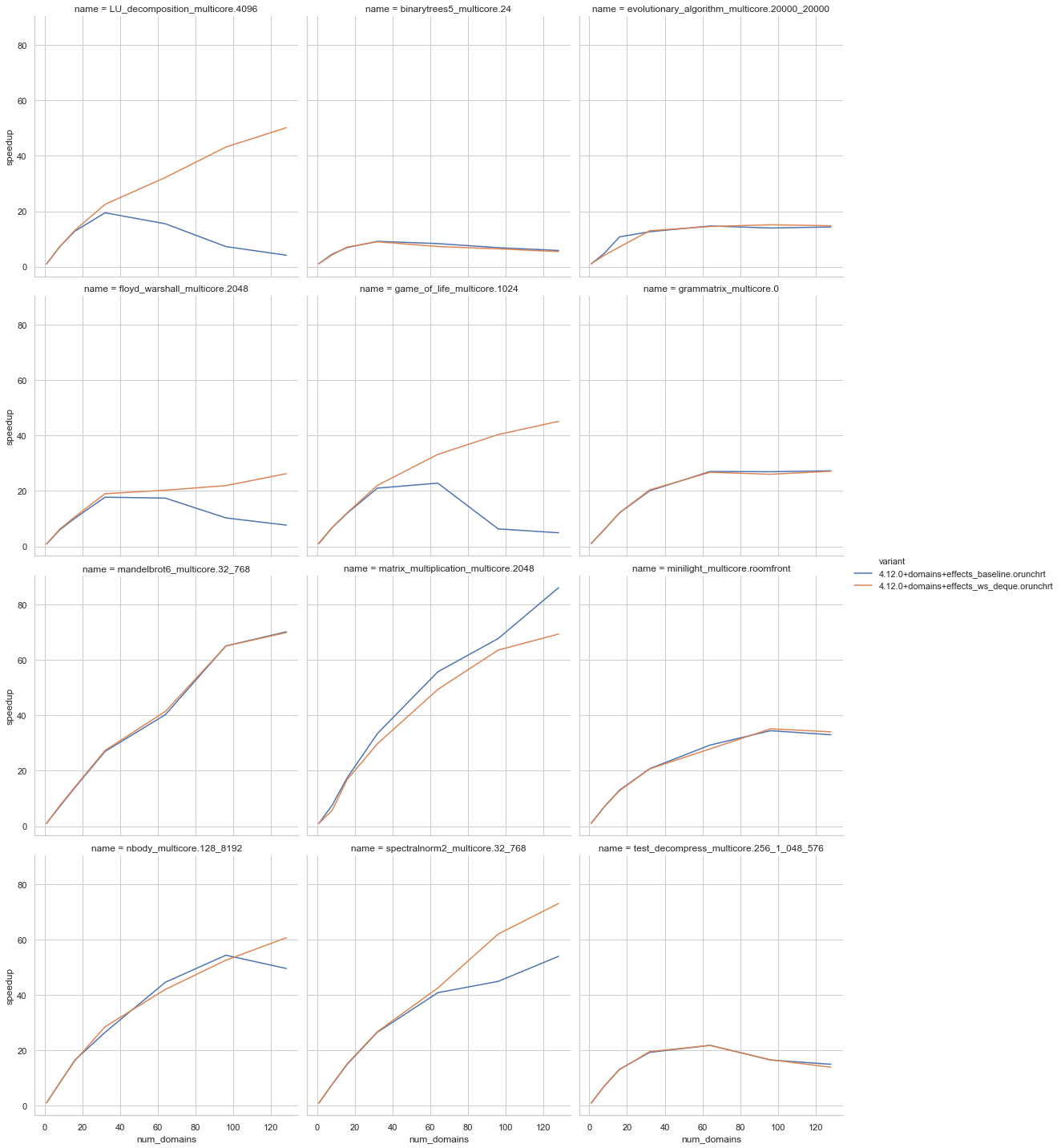

A rerun of the big benchmarks got me:

A rerun of the standard size benchmarks got me:

There remains something a bit odd about evolutionary algorithm that I don't fully understand - it looks like we do differing amounts of GC work with the old vs new code. The deques are causing about 20% more minor GCs, which is odd as I can't see significant extra allocations in the domainslib structures themselves:

I feel we can lean on the OCaml multicore memory model more in the deque rather than wrapping every operation in an Atomic indirection.

Just to connect various folks who might be interested in this, @fpottier and his students were looking at instances where Multicore OCaml explicitly takes advantage of data racy behaviours for improving performance. This looks like one. But @ctk21 mentioned that we need acquire release atomics which the OCaml memory model does not support (yet). @stedolan mentioned that while acquire load fits nicely into the model, store-release does not.

Can you merge with the master again? The diff includes changes already included in master, and it makes it hard to read. See https://github.com/ocaml-multicore/domainslib/pull/29/files#diff-1a51aea47d0ef65c5073e293d19298451614febc83360af128ded914dbb6599bL39

Can you merge with the master again? The diff includes changes already included in master, and it makes it hard to read. See https://github.com/ocaml-multicore/domainslib/pull/29/files#diff-1a51aea47d0ef65c5073e293d19298451614febc83360af128ded914dbb6599bL39

Rebased to master and pushed!

I feel we can lean on the OCaml multicore memory model more in the deque rather than wrapping every operation in an Atomic indirection.

Just to connect various folks who might be interested in this, @fpottier and his students were looking at instances where Multicore OCaml explicitly takes advantage of data racy behaviours for improving performance. This looks like one. But @ctk21 mentioned that we need acquire release atomics which the OCaml memory model does not support (yet). @stedolan mentioned that while acquire load fits nicely into the model, store-release does not.

To expand a bit. We can implement the CL deque using the Atomic module and the existing OCaml multicore memory-model. However if you look at Figure 1 in Correct and efficient work-stealing for weak memory models, then we aren't able to express the acquire-release components of that implementation in OCaml multicore today.

In other parallel data structures, for example a SPSC ring buffer, using acquire-release can be important to get a really low overhead implementation. However there is a problem to solve which is if the OCaml multicore memory-model can be extended to accommodate acquire-release atomics.

This PR is an implementation of Chase Lev deques organised for task stealing; as is traditional in Cilk, Concurrent bags, etc.

The basic idea is:

pushwithout synchronous operations andpopwill not need to synchronize with other domains unless there is only 1 element in the deque.stealtasks from randomly chosen deques belonging to other domains.recv_block_spins.Early benchmarking results (detuned Zen2 with sandmark

fb2a38) are encouraging:I wouldn't say the implementation is fully finished:

Atomicindirection.Some/Nonereturn from the deque to use exceptions.LU_decompositionandgame_of_lifestand out), it would be good to understand thematrix_multiplicationregression and take a look at thebinarytrees5where the deques might be a bit slower._The CL deque implementation was built on the queue in lockfree but I would recommend looking at the C code in Correct and efficient work-stealing for weak memory models_