We have the same issue with grouped Conv2D. Any news?

RicoOscar opened this issue 1 year ago.

Hey guys, any update on this?

The problem is that grouped convolutions are not fully supported on the CPU, so Keras wraps them in `@tf.function(jit_compile=True)` (see `base_conv.py#L272-L290`). I use the following workaround for SegFormer:

```python
# `segformer` is assumed to be an already-built TF SegFormer model
# (e.g. a Hugging Face transformers TF SegFormer model).
for block in segformer.segformer.encoder.block:
    for layer in block:
        # Monkey-patch the depthwise convolution's `_jit_compiled_convolution_op`
        # so it calls the plain `convolution_op`, which lets tf2onnx convert it.
        conv = layer.mlp.depthwise_convolution.depthwise_convolution
        conv._jit_compiled_convolution_op = conv.convolution_op
```

I think this can be generalized in tf2onnx to support any model, because we just need to skip the `_jit_compiled_convolution_op` block.
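Until something like that lands in tf2onnx, the same idea can be applied on the user side to every grouped convolution in a model. The following is a minimal sketch, not a tf2onnx or Keras API: `patch_grouped_convs` is a hypothetical helper, and it assumes Keras 2.10/2.11-style Conv layers that expose `groups`, `convolution_op` and `_jit_compiled_convolution_op` (as in the `base_conv.py` lines referenced earlier).

```python
def patch_grouped_convs(layers) -> int:
    """Hypothetical helper: make grouped convolutions skip the XLA-compiled wrapper.

    Assumes Keras 2.10/2.11-style Conv layers exposing `groups`,
    `convolution_op` and `_jit_compiled_convolution_op`.
    Returns the number of layers that were patched.
    """
    patched = 0
    for layer in layers:
        is_grouped = getattr(layer, "groups", 1) > 1
        has_ops = hasattr(layer, "convolution_op") and hasattr(
            layer, "_jit_compiled_convolution_op"
        )
        if is_grouped and has_ops:
            # The instance attribute shadows the class-level
            # tf.function(jit_compile=True) method, so call() ends up
            # running the plain convolution op instead.
            layer._jit_compiled_convolution_op = layer.convolution_op
            patched += 1
    return patched
```

Usage would be along the lines of `patch_grouped_convs(model.submodules)` before calling `tf2onnx.convert.from_keras(model)`. `submodules` is the `tf.Module` property that walks nested modules, so it should also reach layers buried inside custom blocks such as SegFormer's. Duck typing keeps the helper free of a TensorFlow import, at the cost of patching any object that happens to expose these attributes.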

@edumotya

Thanks for the help. The workaround worked for me.

Describe the bug
An error occurs when a model containing a grouped TF Conv2D, saved as a SavedModel with TF 2.10.0/2.11.0, is converted to ONNX with the API `tf2onnx.convert.from_keras()`.

System information

To Reproduce
Dummy model to reproduce the error; the error appears when `groups != 1` in "conv1":

The conversion script:

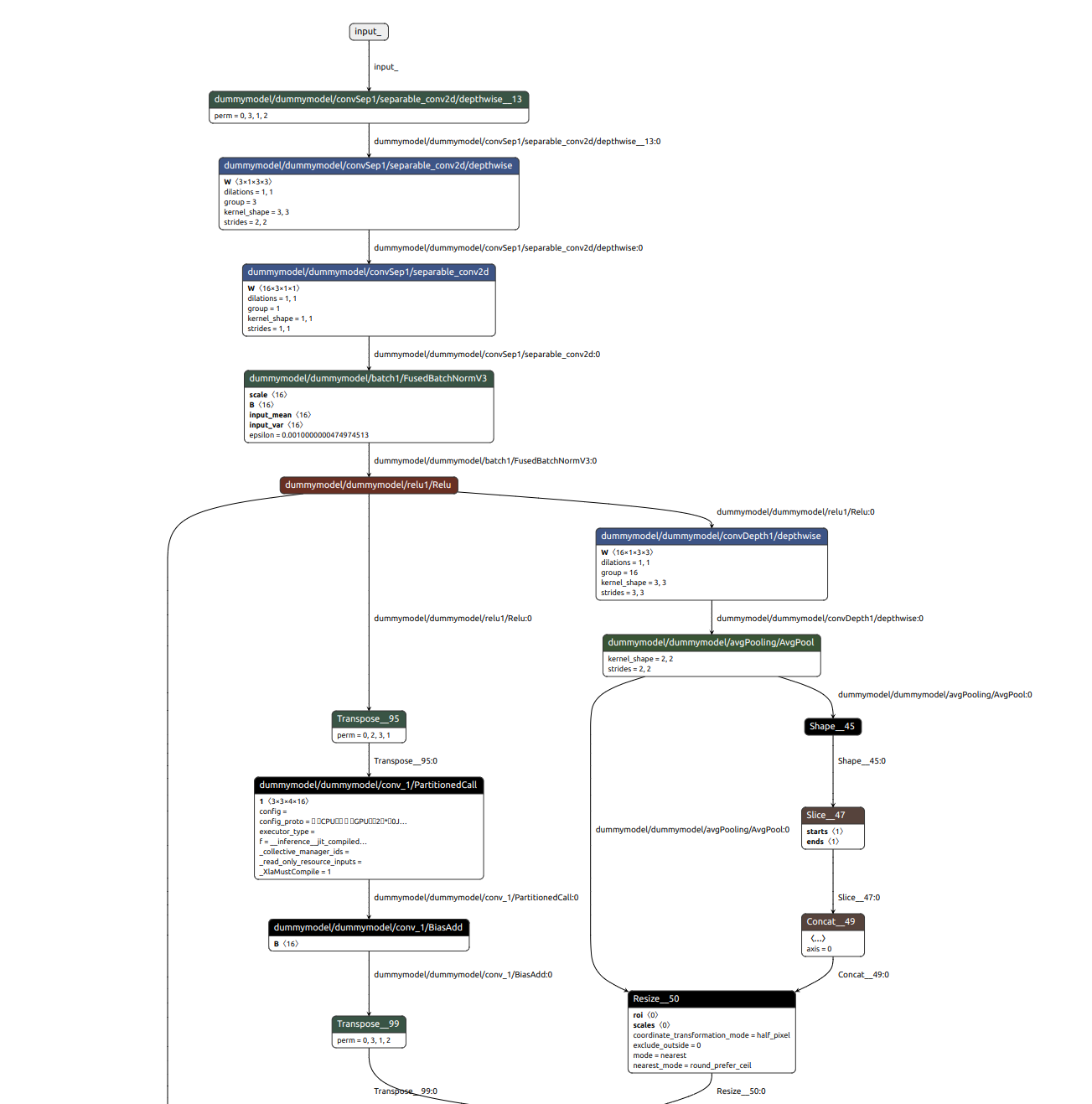
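The dummy model itself is not included in the report. As a hypothetical stand-in, a minimal Keras model with one grouped Conv2D could be built as follows; the input shape, filter count, activation and pooling are assumptions, and the names `dummymodel` and `conv_1` only mirror the layer path in the error message.

```python
import tensorflow as tf


def build_dummy_model(groups: int = 2) -> tf.keras.Model:
    """Hypothetical stand-in for the reporter's dummy model.

    Input channels (8) and filters (16) must both be divisible by `groups`.
    """
    inputs = tf.keras.Input(shape=(32, 32, 8), name="input")
    # In Keras 2.10/2.11, groups > 1 routes Conv2D.call through
    # `_jit_compiled_convolution_op`; groups == 1 uses `convolution_op`.
    x = tf.keras.layers.Conv2D(
        16, 3, padding="same", groups=groups, name="conv_1"
    )(inputs)
    x = tf.keras.layers.ReLU()(x)
    outputs = tf.keras.layers.GlobalAveragePooling2D()(x)
    return tf.keras.Model(inputs, outputs, name="dummymodel")


model = build_dummy_model(groups=2)
model.summary()
```

Converting this model with `tf2onnx.convert.from_keras(model, opset=13)` on the affected versions should exercise the same grouped-convolution path as `conv_1` in the report, while `groups=1` keeps the layer on the ordinary convolution path.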

Screenshots
The issue occurs when converting the layer `dummymodel/dummymodel/conv_1/PartitionedCall`, which is the grouped Conv2D.