Sorry, it was because the init_cfg and the label assignment weren't right. The first 50 iters seem okay so far.

iumyx2612 closed this issue 2 years ago

Please feel free to create a new issue if you meet any problems.

Describe the issue

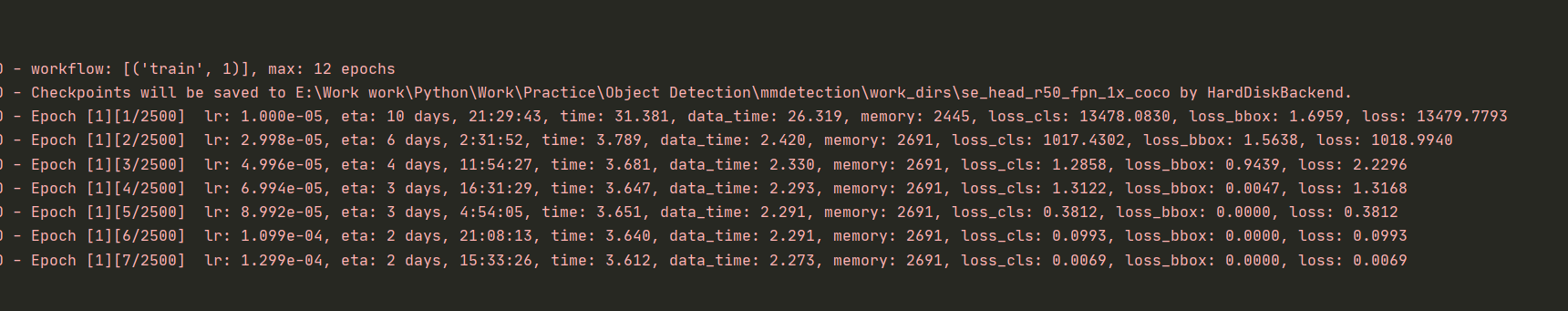

I applied QFL (Quality Focal Loss) to ATSSHead, but the loss values always go to 0.
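For context, here is a minimal pure-Python sketch of QFL for a single prediction, assuming the usual form |y - σ|^β · BCE(σ, y). It is not mmdet's implementation; `quality_focal_loss` and the sample values are hypothetical and only illustrative. It shows one way the loss can collapse toward 0: if label assignment produces no positive targets (every y = 0) and the logits are confidently negative, each term is scaled down by σ^β.

```python
import math


def quality_focal_loss(logit, target, beta=2.0):
    """Scalar QFL sketch: |target - sigma|**beta * BCE(sigma, target).

    `target` is the soft quality label in [0, 1] (0 for a negative sample).
    This is an illustrative helper, not part of mmdet.
    """
    sigma = 1.0 / (1.0 + math.exp(-logit))  # predicted score
    eps = 1e-12  # guards against log(0)
    bce = -(target * math.log(sigma + eps)
            + (1.0 - target) * math.log(1.0 - sigma + eps))
    return abs(target - sigma) ** beta * bce


# Assumed example logit: about -4.6 gives a score near 0.01.
logit = -4.6

# Negative sample (target 0): the loss is tiny.
loss_negative = quality_focal_loss(logit, target=0.0)

# Positive sample with IoU-like target 0.8: the loss is clearly non-zero.
loss_positive = quality_focal_loss(logit, target=0.8)

print(loss_negative, loss_positive)
```

If every sample in a batch behaves like the negative case, the summed loss stays close to 0, so it may be worth checking how many positives the assigner actually returns.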

Reproduction

from mmdet.core import (anchor_inside_flags, bbox_overlaps, build_assigner,
                        build_sampler, images_to_levels, multi_apply,
                        reduce_mean, unmap)
from mmdet.core.utils import filter_scores_and_topk

from ..builder import HEADS, build_loss
from .anchor_head import AnchorHead


@HEADS.register_module()
class SEHead(AnchorHead):

    def __init__(self,
                 num_classes,
                 in_channels,
                 stacked_convs=4,
                 num_dcn=1,
                 with_attn=False,
                 conv_cfg=None,
                 norm_cfg=dict(type='GN', num_groups=32, requires_grad=True),
                 **kwargs):
        self.stacked_convs = stacked_convs
        self.conv_cfg = conv_cfg
        self.norm_cfg = norm_cfg
        super(SEHead, self).__init__(num_classes, in_channels, **kwargs)

sys.platform: win32
Python: 3.8.12 (default, Oct 12 2021, 03:01:40) [MSC v.1916 64 bit (AMD64)]
CUDA available: True
GPU 0: NVIDIA GeForce GTX 1050
CUDA_HOME: C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0
NVCC: Not Available
GCC: n/a
PyTorch: 1.11.0
PyTorch compiling details: PyTorch built with:

TorchVision: 0.12.0
OpenCV: 4.5.5
MMCV: 1.4.7
MMCV Compiler: MSVC 192930140
MMCV CUDA Compiler: 11.3
MMDetection: 2.24.1+157623a

Process finished with exit code 0