So far everything you've described seems to be working as expected. What exactly is the issue you're seeing?

Closed airtonix closed 2 years ago

So far everything you've described seems to be working as expected. What exactly is the issue you're seeing?

let me start again:

fails to apply, because:

if i manually make sure the docker image for k8s_gateway is on the node before applying the helm chart, then things work. but then i have other problems.

After applying the above:

I want my cluster to not require a dns server outside my lan to resolve entries in the cluster.

I have:

/kube.lan/192.168.8.222/

What I want:

- i only want dns queries for *.kube.lan to be handled by 192.168.8.222

- i do not want any non *.kube.lan dns queries from the rest of my lan to be sent to 192.168.8.222

- i want queries for *.kube.lan that originate within the cluster to instead resolve to internal cluster ip addresses, and not 192.168.8.222

What I'm doing:

The order of fluxcd kustomizations:

- clusters/default/flux-system

- clusters/default/config

- clusters/default/sources, which points to manifests/sources

- clusters/default/infrastructure, which points to manifests/infrastructure/default

- clusters/default/apps, which points to manifests/apps/default

I set up the single node cluster like so:

if it's not clean, ssh in and run sudo k3s-uninstall.sh, because i want all this to work without any hand holding.

install k3s on the node: with clusterfiles master <hostname> <sshuser> <sshkey>

$ clusterfiles master kube zenobius ~/.ssh/id_ed25519

# ... lots of successful log output spam here... much positive, very expected. everyone happy.

bootstrap fluxcd on the master node (which triggers it to apply ☝🏻 the above sequence of kustomizations) with GITHUB_TOKEN=supersecret clusterfiles bootstrap <clustername> <githubowner> <githubrepo>

$ GITHUB_TOKEN=ghp_trolololololol clusterfiles bootstrap default airtonix clusterfiles

# ... lots of successful log output spam here... much positive, very expected. everyone happy.

Now fire up a status report watching the deployment:

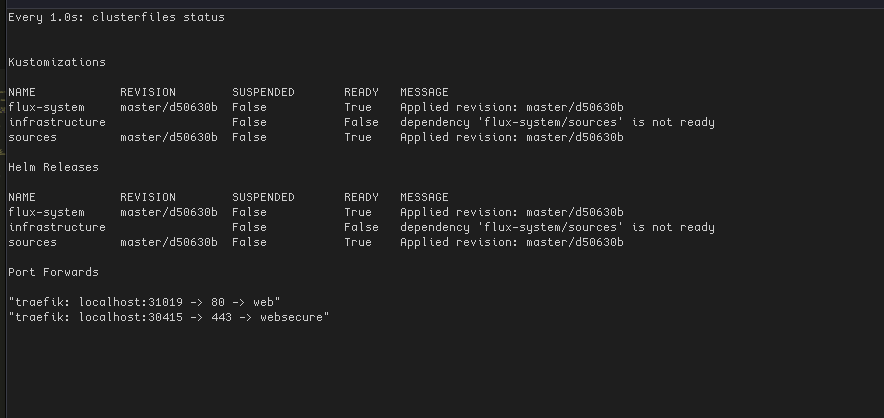

$ watch -n 1 "clusterfiles status"

I can also fire up Lens, and watch the events of the "cluster", and this is where I usually watch the following occur:

- new commit is seen on the git repo

- it applies the sources, registers all the helm repos etc

- tries to start applying the infrastructure group, but something fails around the k8s-gateway:

- either something fails with applying the k8s-gateway helm chart

- or it succeeds and further image pulls fail.

as it applies, something interferes with the ability of the cluster to pull docker images. the error looks like it can't resolve dns queries any more.

This means your k8s/k3s node DNS resolver is misconfigured.

dns queries to the outside world fail to resolve for anything. so now the rest of the fluxcd process throws errors everywhere because it can't pull other docker images.

Seems like a symptom of the same problem.

Just so that we're clear, our plugin, k8s_gateway, is designed to resolve external k8s resources (ingress, services etc.). You can combine it with other plugins, like forward if you also want it to act as a DNS forwarder, but this is entirely optional. For all intra-cluster DNS needs, you have to use standard kubernetes DNS add-on (coredns with kubernetes plugin).

Here are some of my thoughts based on what I understood from your description:

i only want dns queries for *.kube.lan to be handled by 192.168.8.222

i do not want any non *.kube.lan dns queries from the rest of my lan to be sent to 192.168.8.222

This should be the responsibility of dnsmasq/openwrt resolver.

i do not want dns queries for any internal cluster domain names to be leaving the cluster.

i want queries for *.kube.lan that originate within the cluster to instead resolve to internal cluster ip addresses, and not 192.168.8.222

Both are the responsibility of standard kubernetes DNS add-on.

i want queries for the rest of the universe to continue to leave the cluster

You need to use the forward plugin for that.

yep ok, i get what you say k8s-gateway is doing and it makes sense that all k8s-gateway should be doing is fielding requests from outside the cluster.

sidenote: I'm currently running another host on my network that uses just docker-compose and a single traefik instance. Queries are handled by an adguard instance that rewrites requests for *.home.lan to the ip address of that docker-compose/traefik host. I want to decommission this host for a fluxcd setup so volumes are backed up offsite and the host itself can be treated like cattle.

So here's me re-provisioning the host again:

clusterfiles on git master on aws (ap-southeast-2) took 10s

x clusterfiles master jadakren zenobius ~/.ssh/id_ed25519

Running: k3sup install

2022/09/22 18:58:47 jadakren

Public IP: jadakren

[INFO] Finding release for channel stable

[INFO] Using v1.24.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.24.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.24.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

Rancher K3s Common (stable) 5.3 kB/s | 2.9 kB 00:00

Dependencies resolved.

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

k3s-selinux noarch 1.2-2.el8 rancher-k3s-common-stable 20 k

Transaction Summary

================================================================================

Install 1 Package

Total download size: 20 k

Installed size: 94 k

Downloading Packages:

k3s-selinux-1.2-2.el8.noarch.rpm 28 kB/s | 20 kB 00:00

--------------------------------------------------------------------------------

Total 27 kB/s | 20 kB 00:00

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Running scriptlet: k3s-selinux-1.2-2.el8.noarch 1/1

Installing : k3s-selinux-1.2-2.el8.noarch 1/1

Running scriptlet: k3s-selinux-1.2-2.el8.noarch 1/1

Verifying : k3s-selinux-1.2-2.el8.noarch 1/1

Installed:

k3s-selinux-1.2-2.el8.noarch

Complete!

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Skipping /usr/local/bin/ctr symlink to k3s, command exists in PATH at /usr/bin/ctr

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] systemd: Starting k3s

Result: [INFO] Finding release for channel stable

[INFO] Using v1.24.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.24.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.24.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

Rancher K3s Common (stable) 5.3 kB/s | 2.9 kB 00:00

Dependencies resolved.

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

k3s-selinux noarch 1.2-2.el8 rancher-k3s-common-stable 20 k

Transaction Summary

================================================================================

Install 1 Package

Total download size: 20 k

Installed size: 94 k

Downloading Packages:

k3s-selinux-1.2-2.el8.noarch.rpm 28 kB/s | 20 kB 00:00

--------------------------------------------------------------------------------

Total 27 kB/s | 20 kB 00:00

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Running scriptlet: k3s-selinux-1.2-2.el8.noarch 1/1

Installing : k3s-selinux-1.2-2.el8.noarch 1/1

Running scriptlet: k3s-selinux-1.2-2.el8.noarch 1/1

Verifying : k3s-selinux-1.2-2.el8.noarch 1/1

Installed:

k3s-selinux-1.2-2.el8.noarch

Complete!

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Skipping /usr/local/bin/ctr symlink to k3s, command exists in PATH at /usr/bin/ctr

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

[INFO] systemd: Starting k3s

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

Saving file to: /home/zenobius/Projects/Mine/Github/clusterfiles/kubeconfig

# Test your cluster with:

export KUBECONFIG=/home/zenobius/Projects/Mine/Github/clusterfiles/kubeconfig

kubectl config set-context default

kubectl get node -o wide

🐳 k3sup needs your support: https://github.com/sponsors/alexellis

✚ generating manifests

✔ manifests build completed

► installing components in flux-system namespace

CustomResourceDefinition/buckets.source.toolkit.fluxcd.io created

CustomResourceDefinition/gitrepositories.source.toolkit.fluxcd.io created

CustomResourceDefinition/helmcharts.source.toolkit.fluxcd.io created

CustomResourceDefinition/helmreleases.helm.toolkit.fluxcd.io created

CustomResourceDefinition/helmrepositories.source.toolkit.fluxcd.io created

CustomResourceDefinition/ocirepositories.source.toolkit.fluxcd.io created

Namespace/flux-system created

ServiceAccount/flux-system/helm-controller created

ServiceAccount/flux-system/source-controller created

ClusterRole/crd-controller-flux-system created

ClusterRoleBinding/cluster-reconciler-flux-system created

ClusterRoleBinding/crd-controller-flux-system created

Service/flux-system/source-controller created

Deployment/flux-system/helm-controller created

Deployment/flux-system/source-controller created

◎ verifying installation

✔ helm-controller: deployment ready

✔ source-controller: deployment ready

✔ install finished

Everything here is standard k3s, I haven't modified anything.

If I run a oneshot docker container that does a google.com ping:

$ kubectl run dnsutils-ping-google --restart=Never --rm -i --tty --image registry.k8s.io/e2e-test-images/jessie-dnsutils:1.3 -- ping google.com

If you don't see a command prompt, try pressing enter.

64 bytes from mel05s01-in-f14.1e100.net (142.250.70.206): icmp_seq=2 ttl=54 time=28.0 ms

64 bytes from mel05s01-in-f14.1e100.net (142.250.70.206): icmp_seq=3 ttl=54 time=28.6 ms

64 bytes from mel05s01-in-f14.1e100.net (142.250.70.206): icmp_seq=4 ttl=54 time=28.2 ms

64 bytes from mel05s01-in-f14.1e100.net (142.250.70.206): icmp_seq=5 ttl=54 time=36.6 ms

64 bytes from mel05s01-in-f14.1e100.net (142.250.70.206): icmp_seq=6 ttl=54 time=28.8 ms

^C

--- google.com ping statistics ---

6 packets transmitted, 6 received, 0% packet loss, time 5006ms

rtt min/avg/max/mdev = 28.013/30.528/36.619/3.156 ms

pod "dnsutils-ping-google" deleted

Not sure if this matters, but also try to ping internal address:

$ kubectl run dnsutils-ping-google --restart=Never --rm -i --tty --image registry.k8s.io/e2e-test-images/jessie-dnsutils:1.3 -- ping kubernetes.default

If you don't see a command prompt, try pressing enter.

From 100.80.0.1 icmp_seq=2 Packet filtered

From 100.80.0.1 icmp_seq=3 Packet filtered

From 100.80.0.1 icmp_seq=4 Packet filtered

From 100.80.0.1 icmp_seq=5 Packet filtered

From 100.80.0.1 icmp_seq=6 Packet filtered

^C

--- kubernetes.default.svc.cluster.local ping statistics ---

6 packets transmitted, 0 received, +6 errors, 100% packet loss, time 5006ms

pod "dnsutils-ping-google" deleted

pod default/dnsutils-ping-google terminated (Error)

The dns is up?

x kubectl get pods --namespace=kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

coredns-b96499967-8mfkb 1/1 Running 0 6m29s

But looks like the logfile is spammed with warnings... not sure how to get the start of that log file:

kubectl logs --namespace=kube-system -l k8s-app=kube-dns

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

rest of the kube-system

> kubectl get svc --namespace=kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 7m3s

metrics-server ClusterIP 10.43.242.36 <none> 443/TCP 7m2s

traefik LoadBalancer 10.43.160.77 192.168.8.222 80:31456/TCP,443:30222/TCP 6m5s

The dns is running on these interfaces and ports

$ kubectl get endpoints kube-dns --namespace=kube-system

NAME ENDPOINTS AGE

kube-dns 10.42.0.6:53,10.42.0.6:53,10.42.0.6:9153 6m58s

This is the core-dns configmap:

GNU nano 6.0 /tmp/kubectl-edit-2969051488.yaml

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          fallthrough in-addr.arpa ip6.arpa
        }
        hosts /etc/coredns/NodeHosts {
          ttl 60
          reload 15s
          fallthrough
        }
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
    import /etc/coredns/custom/*.server
  NodeHosts: |
    192.168.8.222 jadakren
kind: ConfigMap
metadata:
  annotations:
    objectset.rio.cattle.io/applied: H4sIAAAAAAAA/4yQwWrzMBCEX0Xs2fEf20nsX9BDybH02lMva2kdq1Z2g6SkBJN3L8IUCiVtbyNGOzvfzoAn90IhOmHQcKmgAIsJQc+wl0CD8wQaSr1t1PzKSil>
    objectset.rio.cattle.io/id: ""
    objectset.rio.cattle.io/owner-gvk: k3s.cattle.io/v1, Kind=Addon
    objectset.rio.cattle.io/owner-name: coredns
    objectset.rio.cattle.io/owner-namespace: kube-system
  creationTimestamp: "2022-09-22T09:29:17Z"
  labels:
    objectset.rio.cattle.io/hash: bce283298811743a0386ab510f2f67ef74240c57
  name: coredns
  namespace: kube-system
  resourceVersion: "370"
  uid: bab78907-7b4f-4a19-9f48-ffafd0efb1f0

But now when i apply the k8s-gateway:

clusterfiles on git master [!] on aws (ap-southeast-2)

> helm repo add k8s_gateway https://ori-edge.github.io/k8s_gateway/

"k8s_gateway" has been added to your repositories

clusterfiles on git master [!] on aws (ap-southeast-2)

> helm install exdns --set domain=kube.lan k8s_gateway/k8s-gateway

NAME: exdns

LAST DEPLOYED: Thu Sep 22 19:15:21 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

clusterfiles on git master [!] on aws (ap-southeast-2)

> kubectl get events --sort-by='.lastTimestamp'

LAST SEEN TYPE REASON OBJECT MESSAGE

15m Normal Scheduled pod/dnsutils-ping-google Successfully assigned default/dnsutils-ping-google to jadakren

21m Normal Starting node/jadakren

14m Normal Scheduled pod/dnsutils-ping-google Successfully assigned default/dnsutils-ping-google to jadakren

16m Normal Scheduled pod/dnsutils-ping-google Successfully assigned default/dnsutils-ping-google to jadakren

5m10s Normal Scheduled pod/exdns-k8s-gateway-68f497c4d8-tgm45 Successfully assigned default/exdns-k8s-gateway-68f497c4d8-tgm45 to jadakren

21m Warning InvalidDiskCapacity node/jadakren invalid capacity 0 on image filesystem

21m Normal NodeHasSufficientMemory node/jadakren Node jadakren status is now: NodeHasSufficientMemory

21m Normal NodeHasNoDiskPressure node/jadakren Node jadakren status is now: NodeHasNoDiskPressure

21m Normal NodeHasSufficientPID node/jadakren Node jadakren status is now: NodeHasSufficientPID

21m Normal NodeAllocatableEnforced node/jadakren Updated Node Allocatable limit across pods

21m Normal Starting node/jadakren Starting kubelet.

21m Normal Synced node/jadakren Node synced successfully

21m Normal NodeReady node/jadakren Node jadakren status is now: NodeReady

21m Normal RegisteredNode node/jadakren Node jadakren event: Registered Node jadakren in Controller

16m Normal Pulling pod/dnsutils-ping-google Pulling image "registry.k8s.io/e2e-test-images/jessie-dnsutils:1.3"

15m Normal Created pod/dnsutils-ping-google Created container dnsutils-ping-google

15m Normal Started pod/dnsutils-ping-google Started container dnsutils-ping-google

15m Normal Pulled pod/dnsutils-ping-google Successfully pulled image "registry.k8s.io/e2e-test-images/jessie-dnsutils:1.3" in 24.546335601s

15m Normal Started pod/dnsutils-ping-google Started container dnsutils-ping-google

15m Normal Created pod/dnsutils-ping-google Created container dnsutils-ping-google

15m Normal Pulled pod/dnsutils-ping-google Container image "registry.k8s.io/e2e-test-images/jessie-dnsutils:1.3" already present on machine

14m Normal Pulled pod/dnsutils-ping-google Container image "registry.k8s.io/e2e-test-images/jessie-dnsutils:1.3" already present on machine

14m Normal Created pod/dnsutils-ping-google Created container dnsutils-ping-google

14m Normal Started pod/dnsutils-ping-google Started container dnsutils-ping-google

5m11s Normal ScalingReplicaSet deployment/exdns-k8s-gateway Scaled up replica set exdns-k8s-gateway-68f497c4d8 to 1

5m11s Normal SuccessfulCreate replicaset/exdns-k8s-gateway-68f497c4d8 Created pod: exdns-k8s-gateway-68f497c4d8-tgm45

5m10s Normal UpdatedIngressIP service/exdns-k8s-gateway LoadBalancer Ingress IP addresses updated: 192.168.8.222

5m5s Warning Failed pod/exdns-k8s-gateway-68f497c4d8-tgm45 Failed to pull image "quay.io/oriedge/k8s_gateway:v0.3.2": rpc error: code = Unknown desc = failed to pull and unpack image "quay.io/oriedge/k8s_gateway:v0.3.2": failed to resolve reference "quay.io/oriedge/k8s_gateway:v0.3.2": failed to do request: Head "https://quay.io/v2/oriedge/k8s_gateway/manifests/v0.3.2": dial tcp: lookup quay.io on 127.0.0.53:53: read udp 127.0.0.1:46480->127.0.0.53:53: read: connection refused

5m5s Normal AppliedDaemonSet service/exdns-k8s-gateway Applied LoadBalancer DaemonSet kube-system/svclb-exdns-k8s-gateway-08adea51

4m35s Warning Failed pod/exdns-k8s-gateway-68f497c4d8-tgm45 Failed to pull image "quay.io/oriedge/k8s_gateway:v0.3.2": rpc error: code = Unknown desc = failed to pull and unpack image "quay.io/oriedge/k8s_gateway:v0.3.2": failed to resolve reference "quay.io/oriedge/k8s_gateway:v0.3.2": failed to do request: Head "https://quay.io/v2/oriedge/k8s_gateway/manifests/v0.3.2": dial tcp: lookup quay.io on 127.0.0.53:53: read udp 127.0.0.1:54085->127.0.0.53:53: i/o timeout

3m51s Warning Failed pod/exdns-k8s-gateway-68f497c4d8-tgm45 Failed to pull image "quay.io/oriedge/k8s_gateway:v0.3.2": rpc error: code = Unknown desc = failed to pull and unpack image "quay.io/oriedge/k8s_gateway:v0.3.2": failed to resolve reference "quay.io/oriedge/k8s_gateway:v0.3.2": failed to do request: Head "https://quay.io/v2/oriedge/k8s_gateway/manifests/v0.3.2": dial tcp: lookup quay.io on 127.0.0.53:53: read udp 127.0.0.1:36634->127.0.0.53:53: read: no route to host

3m4s Normal Pulling pod/exdns-k8s-gateway-68f497c4d8-tgm45 Pulling image "quay.io/oriedge/k8s_gateway:v0.3.2"

2m43s Warning Failed pod/exdns-k8s-gateway-68f497c4d8-tgm45 Failed to pull image "quay.io/oriedge/k8s_gateway:v0.3.2": rpc error: code = Unknown desc = failed to pull and unpack image "quay.io/oriedge/k8s_gateway:v0.3.2": failed to resolve reference "quay.io/oriedge/k8s_gateway:v0.3.2": failed to do request: Head "https://quay.io/v2/oriedge/k8s_gateway/manifests/v0.3.2": dial tcp: lookup quay.io on 127.0.0.53:53: read udp 127.0.0.1:58308->127.0.0.53:53: i/o timeout

2m43s Warning Failed pod/exdns-k8s-gateway-68f497c4d8-tgm45 Error: ErrImagePull

2m29s Warning Failed pod/exdns-k8s-gateway-68f497c4d8-tgm45 Error: ImagePullBackOff

2m15s Normal BackOff pod/exdns-k8s-gateway-68f497c4d8-tgm45 Back-off pulling image "quay.io/oriedge/k8s_gateway:v0.3.2"
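Worth noting from the events above: every failed pull reports the lookup going to 127.0.0.53:53, which is the node's systemd-resolved stub, not the cluster's kube-dns service at 10.43.0.10. So the image pulls depend on the node's own resolver. A small filter makes that tally explicit. This is only a sketch: `failing_resolvers` is a hypothetical helper name, and it assumes the error text keeps the `lookup <name> on <addr>:<port>` shape seen here.

```shell
#!/bin/sh
# failing_resolvers: reads event text on stdin and prints
# "<resolver address> <count>" for each "lookup <name> on <addr>:<port>" found.
failing_resolvers() {
  grep -o 'lookup [^ ]* on [0-9.]*:[0-9]*' \
    | awk '{ print $4 }' \
    | sort \
    | uniq -c \
    | awk '{ print $2, $1 }'
}
```

Piping the event listing through it, e.g. `kubectl get events --sort-by='.lastTimestamp' | failing_resolvers`, should print one line per resolver address with the number of failed lookups against it.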

so yeah in summary:

i also just tried manually pulling and running the image (no dramas):

clusterfiles on git master [!] on aws (ap-southeast-2) took 5s

> kubectl run k8s-gateway-pull-test --restart=Never --rm -i --tty --image quay.io/oriedge/k8s_gateway:v0.3.2

If you don't see a command prompt, try pressing enter.

^C[INFO] SIGINT: Shutting down

pod "k8s-gateway-pull-test" deleted

😅 i might have been running an old version of your helm chart this whole time...

just updated to 1.1.15 and also removed the createNamespace part...

but it still throws an error about failing to pull the image.

if however i prewarm the image cache before trying to apply the helm chart it works.

clusterfiles on git master [!] on aws (ap-southeast-2)

x kubectl run k8s-gateway-pull-test --restart=Never --rm -i --tty --image quay.io/oriedge/k8s_gateway:v0.3.2

pod "k8s-gateway-pull-test" deleted

error: timed out waiting for the condition

once the helm chart is in deployment, nothing i do will allow me to resolve external dns queries

hm.. this is really strange. I can't imagine how k8s-gateway might interfere with node's ability to pull images. and that's even before it pulls its own image! interesting. Let's start with some obvious things:

helm template, split the result into multiple files and just kubectl apply -f them one by one. I wonder if any of them overwrite something that doesn't belong to us?

- What happens if you uninstall the helm chart? Do you have DNS connectivity after that?

clusterfiles on git master [!] on aws (ap-southeast-2)

❯ ssh jadakren -C "nslookup google.com"

;; connection timed out; no servers could be reached

clusterfiles on git master [+] on aws (ap-southeast-2)

❯ flux delete kustomization infrastructure

# time passes

clusterfiles on git master [+] on aws (ap-southeast-2)

❯ ssh jadakren -C "nslookup google.com"

Server: 127.0.0.53

Address: 127.0.0.53#53

Non-authoritative answer:

Name: google.com

Address: 142.250.71.78

Name: google.com

Address: 2404:6800:4015:802::200e

- Can you show the contents of the /etc/resolv.conf from the k3s node? does it point at the openwrt router?

Since the node is at this point just a Fedora Linux 35 (Workstation Edition):

❯ cat /etc/resolv.conf

... snip lots of commented blah blah

nameserver 127.0.0.53

options edns0 trust-ad

search lan

also since :

systemd-resolved.service, and

6,192.168.8.10 which forces all my lan machines to use my adguard-home dns server:

❯ cat /run/systemd/resolve/resolv.conf

# This is /run/systemd/resolve/resolv.conf managed by man:systemd-resolved(8).

# Do not edit.

#

# This file might be symlinked as /etc/resolv.conf. If you're looking at

# /etc/resolv.conf and seeing this text, you have followed the symlink.

#

# This is a dynamic resolv.conf file for connecting local clients directly to

# all known uplink DNS servers. This file lists all configured search domains.

#

# Third party programs should typically not access this file directly, but only

# through the symlink at /etc/resolv.conf. To manage man:resolv.conf(5) in a

# different way, replace this symlink by a static file or a different symlink.

#

# See man:systemd-resolved.service(8) for details about the supported modes of

# operation for /etc/resolv.conf.

nameserver 192.168.8.10

search lan

192.168.8.10 is the ip address of a third machine in my lan that holds my current docker-compose-ansible-thing. An adguard-home instance is exposed there on port :53

So something i read about k8s and core-dns etc, is that on linux it will use the nodes /etc/resolv.conf ?

Does it literally symlink mount it writable into the cluster?
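On the resolv.conf question: as far as I understand it, the kubelet does not mount the node's file into pods. It reads a resolv.conf path (k3s exposes this as `--resolv-conf`) and each pod gets its own generated copy, so pods with `dnsPolicy: Default` inherit the nameservers found in that file. A minimal sketch for checking which of the two files shown above holds a real upstream. `list_nameservers` is a hypothetical helper name, not part of any tool.

```shell
#!/bin/sh
# list_nameservers FILE
# Prints "<address> <kind>" for every nameserver line in a resolv.conf-style
# file. Loopback addresses are labelled "loopback-stub" because they usually
# point at a local stub resolver such as systemd-resolved.
list_nameservers() {
  awk '$1 == "nameserver" {
         kind = ($2 ~ /^127\./ || $2 == "::1") ? "loopback-stub" : "upstream"
         print $2, kind
       }' "$1"
}
```

Run on the node as `list_nameservers /etc/resolv.conf` and `list_nameservers /run/systemd/resolve/resolv.conf`. Going by the file contents pasted here, the first would label 127.0.0.53 as a loopback stub and the second would list 192.168.8.10 as an upstream.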

- What happens if you remove the domain delegation from openwrt? remove any references to the IP of the k3s node and see if the queries are resolved again.

- Can you manually apply the helm-generated manifests one by one and see at which point it breaks? i.e. do helm template, split the result into multiple files and just kubectl apply -f them one by one. I wonder if any of them overwrite something that doesn't belong to us?

completely emptied the "infrastructure" kustomization:

---

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

resources:

[]

# - ../base/cert-manager

# - ../base/dns

# - ../base/cache

# - ../base/storages

waited for it to sync and remove.

/etc/resolv.conf? 🤷🏻

clusterfiles on git master [>] on aws (ap-southeast-2)

> flux resume kustomization infrastructure

► resuming kustomization infrastructure in flux-system namespace

✔ kustomization resumed

◎ waiting for Kustomization reconciliation

✔ Kustomization reconciliation completed

✔ applied revision master/344484cd226a77fe632b1d8ed731e8178fb48bbe

clusterfiles on git master on aws (ap-southeast-2) took 3m

> ssh jadakren -C "nslookup google.com"

Server: 127.0.0.53

Address: 127.0.0.53#53

Non-authoritative answer:

Name: google.com

Address: 142.250.71.78

Name: google.com

Address: 2404:6800:4015:802::200e

prepare the individual bits:

clusterfiles/charts on git master on aws (ap-southeast-2)

x helm template k8s-gateway https://ori-edge.github.io/k8s_gateway/charts/k8s-gateway-1.1.15.tgz --set domain=kube.lan --output-dir .

wrote ./k8s-gateway/templates/serviceaccount.yaml

wrote ./k8s-gateway/templates/configmap.yaml

wrote ./k8s-gateway/templates/rbac.yaml

wrote ./k8s-gateway/templates/rbac.yaml

wrote ./k8s-gateway/templates/service.yaml

wrote ./k8s-gateway/templates/deployment.yaml

suspend flux

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> flux suspend kustomization infrastructure --all

► suspending kustomization infrastructure in flux-system namespace

✔ kustomization suspended

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> flux suspend kustomization sources --all

► suspending kustomization sources in flux-system namespace

✔ kustomization suspended

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> flux suspend kustomization apps --all

✗ no Kustomization objects found in flux-system namespace

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> flux suspend kustomization flux-system --all

► suspending kustomization flux-system in flux-system namespace

✔ kustomization suspended

now let's apply the bits individually:

serviceaccount

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> kubectl apply -f ./k8s-gateway/templates/serviceaccount.yaml

serviceaccount/k8s-gateway created

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> ssh jadakren -C "nslookup google.com"

Server: 127.0.0.53

Address: 127.0.0.53#53

Non-authoritative answer:

Name: google.com

Address: 142.250.70.206

Name: google.com

Address: 2404:6800:4006:812::200e

configmap

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

x kubectl apply -f ./k8s-gateway/templates/configmap.yaml

configmap/k8s-gateway created

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> ssh jadakren -C "nslookup google.com"

Server: 127.0.0.53

Address: 127.0.0.53#53

Non-authoritative answer:

Name: google.com

Address: 142.250.67.14

Name: google.com

Address: 2404:6800:4006:811::200e

rbac

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> kubectl apply -f ./k8s-gateway/templates/rbac.yaml

clusterrole.rbac.authorization.k8s.io/k8s-gateway created

clusterrolebinding.rbac.authorization.k8s.io/k8s-gateway created

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> ssh jadakren -C "nslookup google.com"

Server: 127.0.0.53

Address: 127.0.0.53#53

Non-authoritative answer:

Name: google.com

Address: 142.250.67.14

Name: google.com

Address: 2404:6800:4015:802::200e

service

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> kubectl apply -f ./k8s-gateway/templates/service.yaml

service/k8s-gateway created

clusterfiles/charts on git master [?] on aws (ap-southeast-2)

> ssh jadakren -C "nslookup google.com"

;; connection timed out; no servers could be reached

deployment

clusterfiles/charts on git master [?] on aws (ap-southeast-2) took 15s

x kubectl apply -f ./k8s-gateway/templates/deployment.yaml

deployment.apps/k8s-gateway created

clusterfiles/charts on git master on aws (ap-southeast-2) took 4s

x ssh jadakren -C "nslookup google.com"

;; connection timed out; no servers could be reached

And sure enough, back in my event log:

Here's the generated templates I used above: https://github.com/airtonix/clusterfiles/commit/128126c70bd0011746ac0aaef9ad23f7a3c453ae
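The apply-then-probe routine above can be scripted so the first breaking manifest is reported automatically. This is a rough sketch under assumptions: `bisect_manifests` is a hypothetical helper name, and it relies on the probe command exiting non-zero when resolution fails (which should be checked for `nslookup` on the node in question).

```shell
#!/bin/sh
# bisect_manifests APPLY_CMD PROBE_CMD FILE...
# Applies each manifest in order and runs the probe after every step.
# Stops at the first manifest after which the probe fails.
# APPLY_CMD and PROBE_CMD are split on whitespace, so they may carry arguments.
bisect_manifests() {
  apply_cmd=$1
  probe_cmd=$2
  shift 2
  for manifest in "$@"; do
    if ! $apply_cmd "$manifest" >/dev/null; then
      echo "apply failed: $manifest"
      return 2
    fi
    if ! $probe_cmd >/dev/null 2>&1; then
      echo "probe failed after: $manifest"
      return 1
    fi
  done
  echo "probe still passing after all manifests"
}
```

With the files generated above it could be called as `bisect_manifests "kubectl apply -f" "ssh jadakren nslookup google.com" ./k8s-gateway/templates/serviceaccount.yaml ./k8s-gateway/templates/configmap.yaml ./k8s-gateway/templates/rbac.yaml ./k8s-gateway/templates/service.yaml ./k8s-gateway/templates/deployment.yaml`.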

additionally, the situation for systemd-resolved on the node:

clusterfiles/charts on git master on aws (ap-southeast-2)

x ssh jadakren -C "cat /etc/resolv.conf"

# This is /run/systemd/resolve/stub-resolv.conf managed by man:systemd-resolved(8).

# Do not edit.

#

# This file might be symlinked as /etc/resolv.conf. If you're looking at

# /etc/resolv.conf and seeing this text, you have followed the symlink.

#

# This is a dynamic resolv.conf file for connecting local clients to the

# internal DNS stub resolver of systemd-resolved. This file lists all

# configured search domains.

#

# Run "resolvectl status" to see details about the uplink DNS servers

# currently in use.

#

# Third party programs should typically not access this file directly, but only

# through the symlink at /etc/resolv.conf. To manage man:resolv.conf(5) in a

# different way, replace this symlink by a static file or a different symlink.

#

# See man:systemd-resolved.service(8) for details about the supported modes of

# operation for /etc/resolv.conf.

nameserver 127.0.0.53

options edns0 trust-ad

search lan

clusterfiles/charts on git master on aws (ap-southeast-2)

> ssh jadakren -C "cat /run/systemd/resolve/resolv.conf"

# This is /run/systemd/resolve/resolv.conf managed by man:systemd-resolved(8).

# Do not edit.

#

# This file might be symlinked as /etc/resolv.conf. If you're looking at

# /etc/resolv.conf and seeing this text, you have followed the symlink.

#

# This is a dynamic resolv.conf file for connecting local clients directly to

# all known uplink DNS servers. This file lists all configured search domains.

#

# Third party programs should typically not access this file directly, but only

# through the symlink at /etc/resolv.conf. To manage man:resolv.conf(5) in a

# different way, replace this symlink by a static file or a different symlink.

#

# See man:systemd-resolved.service(8) for details about the supported modes of

# operation for /etc/resolv.conf.

nameserver 192.168.8.10

search lan

clusterfiles/charts on git master on aws (ap-southeast-2)

> ssh jadakren -C "resolvctl status"

zsh:1: command not found: resolvctl

clusterfiles/charts on git master on aws (ap-southeast-2)

x ssh jadakren -C "resolvectl status"

Global

Protocols: LLMNR=resolve -mDNS -DNSOverTLS DNSSEC=no/unsupported

resolv.conf mode: stub

Link 2 (enp0s31f6)

Current Scopes: DNS LLMNR/IPv4 LLMNR/IPv6

Protocols: +DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Current DNS Server: 192.168.8.10

DNS Servers: 192.168.8.10

DNS Domain: lan

Link 3 (virbr0)

Current Scopes: none

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 4 (docker0)

Current Scopes: none

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 541 (flannel.1)

Current Scopes: LLMNR/IPv4 LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 542 (cni0)

Current Scopes: LLMNR/IPv4 LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 544 (veth80e4867d)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 546 (vethd93c4ed4)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 549 (vethbdf5a636)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 550 (vethff150521)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 551 (veth27a38309)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 552 (veth6bc3aab6)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 553 (veth873354ce)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 554 (vethe4bd35d6)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 555 (veth17d83c8b)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 560 (veth51585a2b)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 561 (veth22d6d541)

Current Scopes: LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

if i explicitly tell it to use my adguard-home dns server:

clusterfiles/charts on git master on aws (ap-southeast-2)

x ssh jadakren -C "host google.com 192.168.8.10"

Using domain server:

Name: 192.168.8.10

Address: 192.168.8.10#53

Aliases:

google.com has address 142.250.70.206

google.com has IPv6 address 2404:6800:4006:812::200e

google.com mail is handled by 10 smtp.google.com.

you can probs replicate this by doing the following:

curl -L https://raw.github.com/airtonix/clusterfiles/master/pull.sh | sh on your workstation

clusterfiles master fedora-34-hostname-or-ip yourusername yoursshprivatekey

GITHUB_TOKEN=THE_PAT_TOKEN clusterfiles bootstrap default airtonix clusterfiles

I suspect a hacky way is to have some kind of ordering in the helm file that runs the deployment before the service.

A probably better long-term fix is understanding why the node's ability to resolve dns queries is affected by the service? (turn off systemd-resolved?)

oh ok, now I think I know what's happening. So your node is running systemd-resolved which is listening on 127.0.0.53:53.

When you install the k8s-gateway helm chart, it creates a LoadBalancer-type service that's listening on port 53. This, in turn, creates iptables rules to redirect all traffic for port 53 to that service's backends (which don't exist yet).

This also explains why external DNS resolution doesn't work until you add the forward plugin to the k8s_gateway, since all external DNS lookups go to 127.0.0.53 and end up redirected to the k8s_gateway pod.
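One way to check this theory on the node is to look for NAT rules that match destination port 53. The sketch below is only an illustration: `port53_rules` is a hypothetical helper name, and it assumes the rules are dumped in the usual `iptables-save` text format.

```shell
# Hypothetical helper (not part of k3s or k8s_gateway): reads rules in
# iptables-save format on stdin and keeps only the ones whose destination
# port is exactly 53, so 5353 or 530 are not matched.
port53_rules() {
    # "--" stops option parsing, because the pattern itself starts with "--".
    # "|| true" keeps the exit status at 0 when nothing matches.
    grep -E -- '--dport 53( |$)' || true
}

# Example usage on the node (needs root, so it is left commented out):
#   sudo iptables-save -t nat | port53_rules
```

If rules show up here only after the helm chart is applied, that would be consistent with the redirect described above.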

I think the simplest workaround would be to change your /etc/resolv.conf to point to 192.168.8.10 directly, instead of going via systemd-resolved. Do you wanna try that?
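Before changing anything, it may help to confirm that the node really resolves through the stub listener. This is a minimal sketch; `uses_stub_resolver` is a made-up function name, and it only inspects the file it is given (defaulting to /etc/resolv.conf).

```shell
# Hypothetical check: succeeds (exit 0) if the given resolv.conf lists the
# systemd-resolved stub address 127.0.0.53 as a nameserver.
uses_stub_resolver() {
    grep -Eq '^[[:space:]]*nameserver[[:space:]]+127\.0\.0\.53([[:space:]]|$)' \
        "${1:-/etc/resolv.conf}"
}

# Example usage (left commented out):
#   if uses_stub_resolver; then
#       echo "node resolves via the systemd-resolved stub"
#   fi
```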

i'll give it a go tomorrow morning. 👍🏻

Thanks heaps for your patience and experience here ❤️

looks like this guy experienced exactly my problem: http://asynchrono.us/disabling-systemd-resolved-on-fedora-coreos-33.html

👍🏻 If i disable systemd-resolved and wipe k3s then restart, it all works.

I imagine this isn't usually a problem for people running docker via DockerForWin, or DockerForMac, or some cloud-init based linux os that doesn't set up systemd-resolved.

#mysterysolved

Thanks for this thread. I also ran into this issue on Ubuntu with k3s. To summarize, on each node in my cluster I did the following:

Changed the /etc/resolv.conf nameserver from 127.0.0.53 to the local name server

sudo systemctl stop systemd-resolved

sudo systemctl disable systemd-resolved

sudo service k3s restart

Then deployed the helm chart (which enables the forward plugin by default).
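The steps above could be scripted roughly as follows. This is a sketch under assumptions, not the commenter's actual script: `render_resolv_conf` is a hypothetical helper, the address 192.168.8.10 and search domain `lan` are taken from the earlier output in this thread and will differ on other networks, and removing the /etc/resolv.conf symlink first is an assumption based on the comment text in that file.

```shell
# Hypothetical helper: prints a minimal static resolv.conf.
#   $1 = upstream nameserver address
#   $2 = optional search domain
render_resolv_conf() {
    printf 'nameserver %s\n' "$1"
    if [ -n "${2:-}" ]; then
        printf 'search %s\n' "$2"
    fi
}

# Steps on each node (commented out; run by hand, needs root):
#   sudo systemctl stop systemd-resolved
#   sudo systemctl disable systemd-resolved
#   sudo rm /etc/resolv.conf    # assumption: it is a symlink to the stub file
#   render_resolv_conf 192.168.8.10 lan | sudo tee /etc/resolv.conf
#   sudo service k3s restart
```

Keeping the file generation in a function makes it easy to preview the result before overwriting anything on the node.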

when deploying the following, all future attempts to resolve any other dns queries fail.

192.168.8.222/kube.lan/192.168.8.222/

if i add the following:

Then dns queries for external hostnames are able to leave my local network and get resolved, but then so does every other query for the cluster.