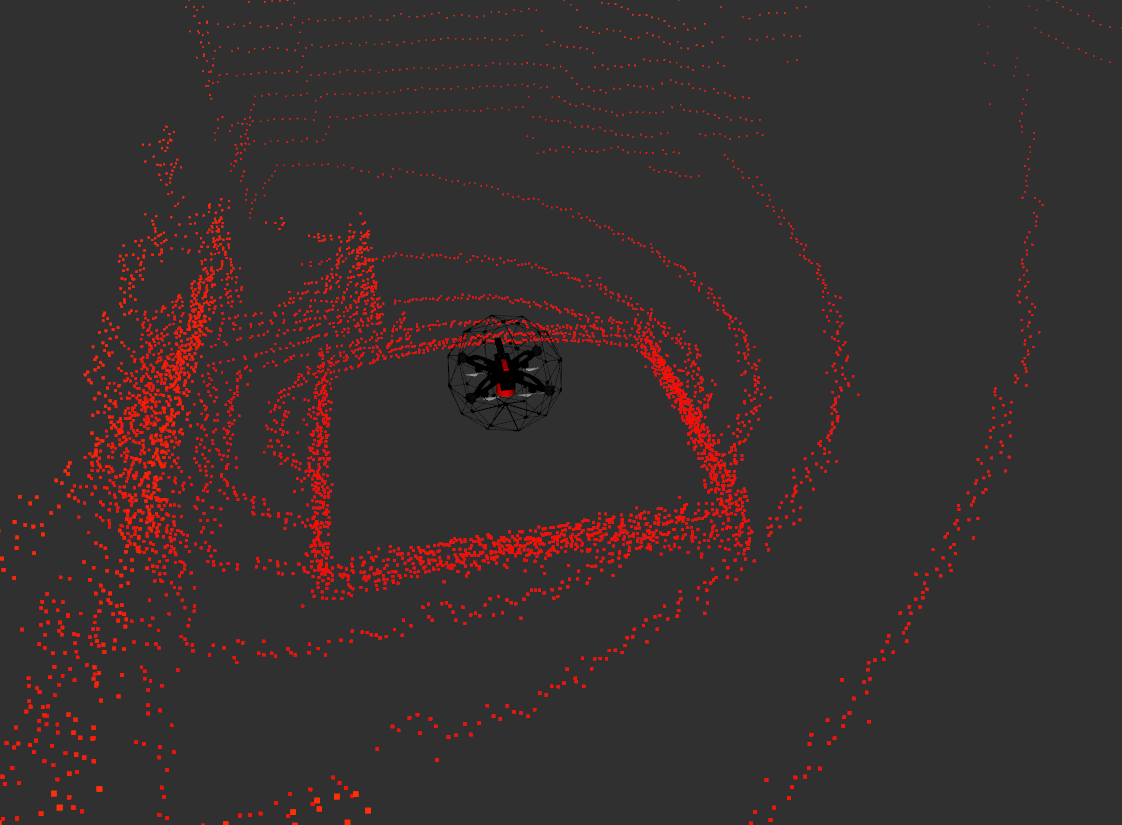

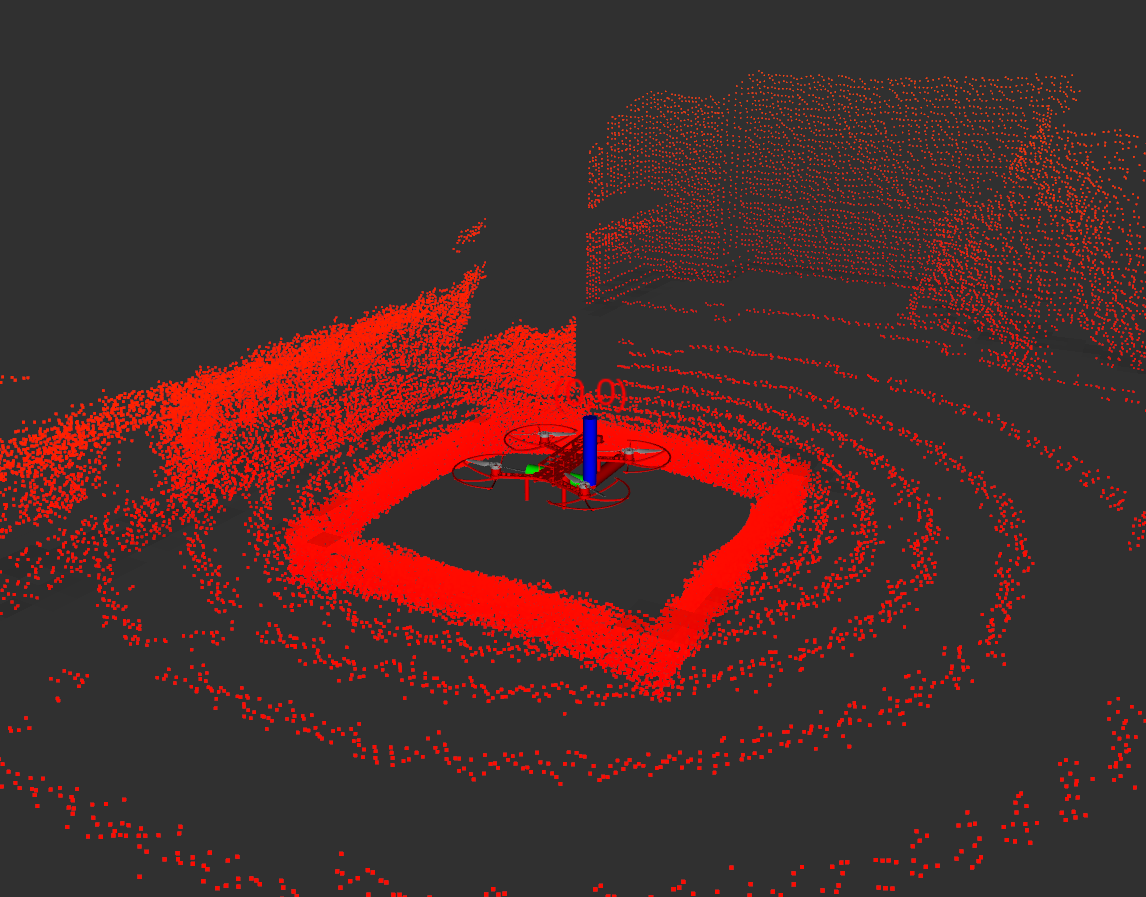

The boxes seen in the CERBERUS_Gagarin and MARBLE_QAV500 scans are an effect of the minimum range of their laser sensors, which is currently set to 0.8 meters.
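A box rather than a sphere would be expected if the min range acts as a flat clip plane on each rendering face instead of as a true radial distance. That mechanism is an assumption, not something confirmed in this thread. The minimal sketch below compares the two tests for the 0.8 m value. `inside_sphere` and `inside_cube` are hypothetical helpers written for illustration and are not part of any simulator API.

```python
import math

# 0.8 m is the min range quoted above for CERBERUS_Gagarin and MARBLE_QAV500.
MIN_RANGE_M = 0.8


def inside_sphere(point, min_range=MIN_RANGE_M):
    """Hypothetical radial test: True if the Euclidean distance from the
    sensor origin is below min_range (returns would be dropped in a sphere)."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z) < min_range


def inside_cube(point, min_range=MIN_RANGE_M):
    """Hypothetical planar test (assumption): True if the point is closer than
    min_range along every axis, i.e. inside an axis-aligned cube of half-width
    min_range, which is what per-face near clip planes would cut out."""
    return max(abs(c) for c in point) < min_range


if __name__ == "__main__":
    # A diagonal point about 0.99 m away: outside the sphere but inside the cube,
    # so a planar clip would remove it while a radial clip would keep it.
    p = (0.7, 0.7, 0.0)
    print(inside_sphere(p), inside_cube(p))
```

Points near the diagonals are where the two tests disagree, which is why a planar clip leaves square corners in the scan instead of a round hole.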

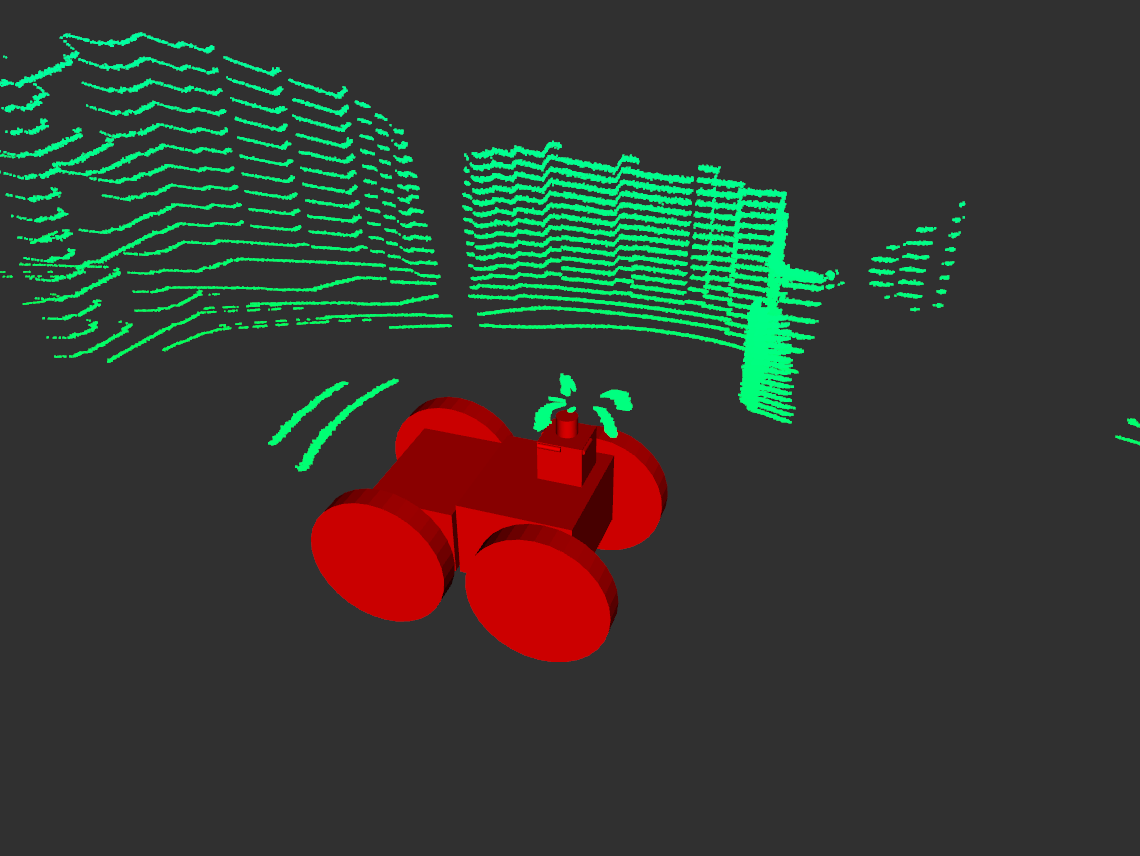

The returns for EXPLORER_R2 match the robot model used in simulation. The problem is the robot description shown in RViz, which does not accurately reflect the physical robot.

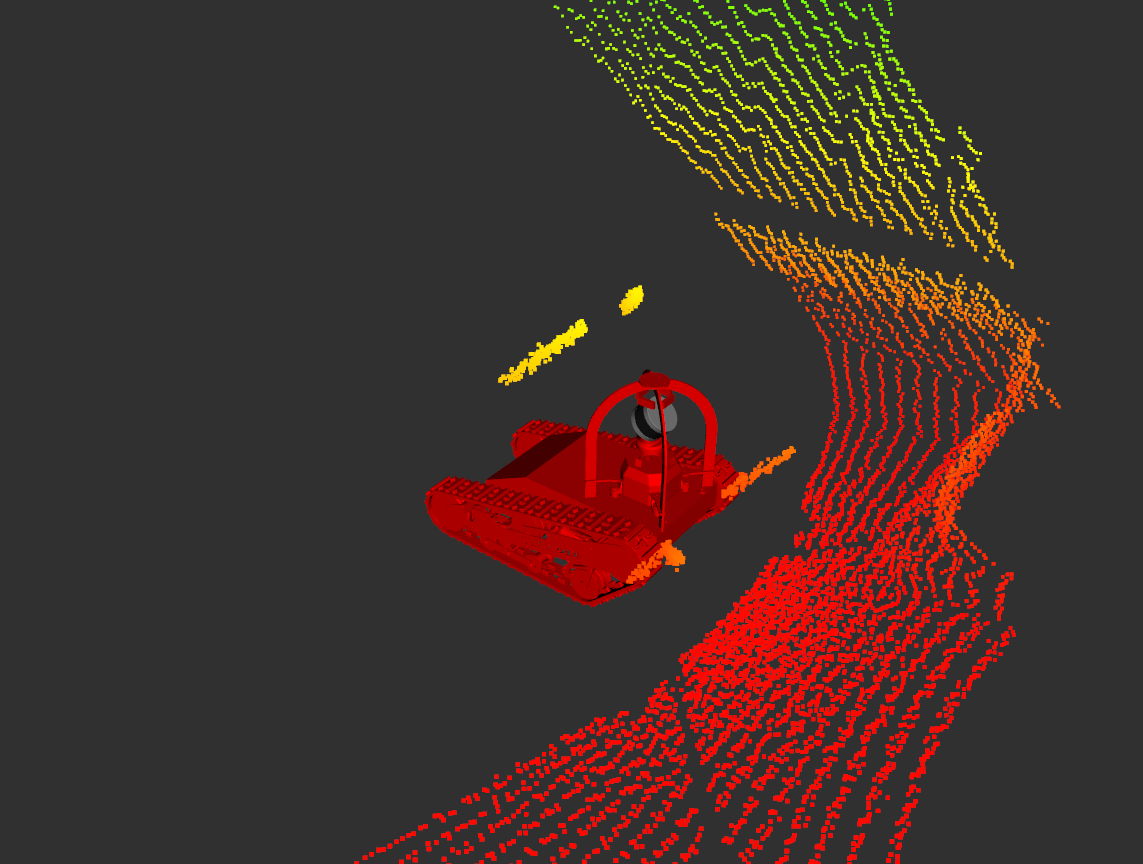

The strange returns for CSIRO_DATA61_DTR are also caused by a minimum range, in this case 0.3 meters.

Looking through some of the robots, I've noticed that the LIDAR returns are sometimes wonky, typically (but not always) when part of the scan intersects the robot geometry. I haven't checked them all, but below are a few where it is particularly noticeable. For the UGVs, the self-intersecting part of the scan is displaced from the actual robot geometry, and for the UAVs there is a strange box-shaped artifact.

CSIRO_DATA61_DTR:

EXPLORER_R2:

MARBLE_QAV500:

CERBERUS_Gagarin: