Hello,

without looking further into this, my suspicion is that the Python version runs on the CPU, whereas the TFJS model uses the GPU.

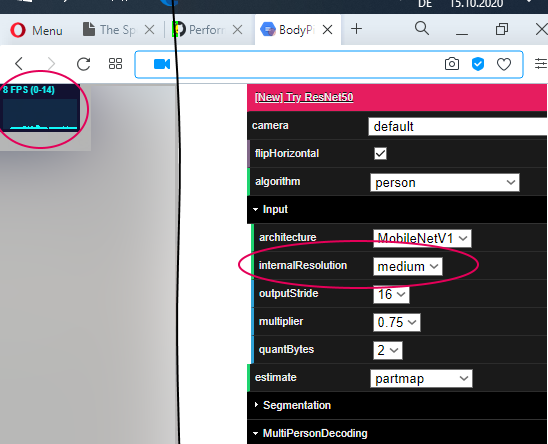

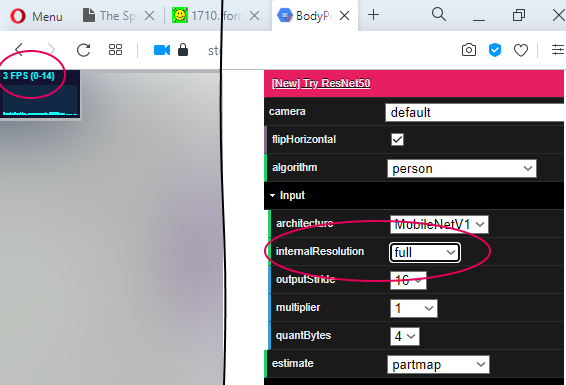

Running the demo reveals that the TFJS version hardly uses any CPU and hits the GPU instead.

I'll do some more testing, but I think I might be able to add a flag to select which target hardware to run the model on.
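A rough sketch of what such a flag could look like, assuming the Python side runs on TensorFlow. The `--device` flag name and the helpers `device_string`, `parse_args` and `run_on` are hypothetical and not part of any existing tool; `tf.device` is the standard TensorFlow context manager for requesting op placement.

```python
import argparse

# Hypothetical mapping from a user-facing flag value to a TensorFlow device string.
_DEVICES = {"cpu": "/CPU:0", "gpu": "/GPU:0"}


def device_string(target: str) -> str:
    """Return the TensorFlow device string for 'cpu' or 'gpu'."""
    try:
        return _DEVICES[target.lower()]
    except KeyError:
        raise ValueError(f"unknown target {target!r}, expected one of {sorted(_DEVICES)}")


def parse_args(argv=None):
    """Parse the (hypothetical) --device flag; defaults to the CPU."""
    parser = argparse.ArgumentParser(description="Select the hardware to run the model on.")
    parser.add_argument("--device", choices=sorted(_DEVICES), default="cpu")
    return parser.parse_args(argv)


def run_on(target: str, fn, *args):
    """Call fn(*args) inside a tf.device scope for the requested target."""
    import tensorflow as tf  # imported lazily so the flag parsing works without TensorFlow

    with tf.device(device_string(target)):
        return fn(*args)
```

Usage would be along the lines of `run_on(parse_args().device, model_fn, image)`, where `model_fn` and `image` stand in for the loaded model call and its input. Running the same input once with `cpu` and once with `gpu` should help confirm whether the difference really comes from the target hardware.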

Hi there

I managed to convert the BodyPix model from a TFJS model to a SavedModel, but I observed an obvious performance drop (an AP drop on segmentation) with the Python model. I tried several BodyPix models with different scaling, and all of them performed worse than the TFJS model. Any ideas? Many thanks.