This article is an introduction to MetalLB, a solution to expose services if the Kubernetes cluster is not deployed on a Cloud platform.

Intended audience: Kubernetes administrators.

By Abdellah Seddik TAHARDJEBBAR, Cloud Consultant @ Objectif Libre

Introduction

Kubernetes is the new hot topic in the IT world today. While widely adopted, it still leaves some problems hard to solve, including how to expose services outside the cluster. If your Kubernetes cluster is deployed on a Cloud platform such as OpenStack, AWS or GCP, it can provision load balancers provided by that platform. But not all Kubernetes clusters are deployed on Cloud platforms: Kubernetes can also run on bare metal servers. In this case, services of type LoadBalancer remain in the “pending” state indefinitely after they are created. Bare metal cluster operators are left with two tools to bring user traffic into their clusters, “NodePort” and “externalIPs” services, and both options have significant downsides for production use. MetalLB was introduced to fill this gap for bare metal clusters.

MetalLB

MetalLB is a load balancer implementation for bare metal Kubernetes clusters, based on standard routing protocols.

Concepts

In a Cloud environment, the Cloud platform creates the load balancer and allocates its external IP address. In a bare metal cluster, MetalLB is responsible for that allocation, so a pool of IP addresses must be reserved for it. Once MetalLB has assigned an external IP address to a service, it must make the network deliver traffic for that IP to the cluster nodes. To do so, it uses standard protocols: ARP (IPv4) or NDP (IPv6) in layer 2 mode, and BGP in BGP mode.
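To make this allocation step concrete, here is a minimal Python sketch of an address pool. It assumes a single `first-last` range such as the one used later in the demo configuration. The `AddressPool` class and its methods are hypothetical. MetalLB's controller is written in Go and handles more cases, for example CIDR notation and several pools.

```python
import ipaddress


class AddressPool:
    """Hypothetical model of an IP pool; not MetalLB's actual controller code."""

    def __init__(self, address_range: str) -> None:
        first_str, last_str = address_range.split("-")
        self.first = ipaddress.ip_address(first_str.strip())
        self.last = ipaddress.ip_address(last_str.strip())
        if self.first.version != self.last.version or self.first > self.last:
            raise ValueError(f"invalid range: {address_range}")
        self.assigned = {}  # service name -> assigned IP address

    def size(self) -> int:
        return int(self.last) - int(self.first) + 1

    def allocate(self, service: str):
        """Return the service's IP, assigning the lowest free address if needed."""
        if service in self.assigned:  # idempotent: keep an existing assignment
            return self.assigned[service]
        in_use = set(self.assigned.values())
        for offset in range(self.size()):
            candidate = self.first + offset
            if candidate not in in_use:
                self.assigned[service] = candidate
                return candidate
        # No free address: in Kubernetes the service would stay "pending".
        raise RuntimeError("address pool exhausted")

    def release(self, service: str) -> None:
        """Give the service's address back to the pool (e.g. service deleted)."""
        self.assigned.pop(service, None)


pool = AddressPool("192.168.143.230-192.168.143.250")
print(pool.size())                     # 21
print(pool.allocate("default/nginx"))  # 192.168.143.230
```

Handing out the lowest free address keeps the sketch easy to reason about. The important property is that a service keeps the same IP for as long as it exists.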

Layer 2 mode (ARP/NDP)

In this mode, each service IP is owned by a single node of the cluster. MetalLB implements this by answering ARP (IPv4) or NDP (IPv6) requests for the external IP with the MAC address of that node. To external devices, the node simply appears to have multiple IP addresses.
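Exactly one node must answer for a given service IP, so the speakers have to agree on an owner. The Python sketch below shows one coordination-free way to do this. It assumes every speaker sees the same list of eligible nodes: each (node, service) pair is hashed and the lowest hash wins. The `owner_node` function and this hashing scheme are illustrative assumptions, not MetalLB's actual election mechanism.

```python
import hashlib


def owner_node(service: str, nodes: list[str]) -> str:
    """Pick the single node that answers ARP/NDP for a service IP.

    Hypothetical scheme: hash each (node, service) pair and take the lowest
    digest. Every speaker can compute this on its own and, given the same
    node list, all of them reach the same answer.
    """
    if not nodes:
        raise ValueError("no eligible node for this service")
    return min(nodes, key=lambda node: hashlib.sha256(f"{node}#{service}".encode()).digest())


nodes = ["node3", "node4", "node5"]
owner = owner_node("default/nginx", nodes)
# If the owner leaves, one of the remaining nodes takes over.
backup = owner_node("default/nginx", [n for n in nodes if n != owner])
print(owner, backup)
```

The winner is the minimum over the node list. Removing a node that does not own the service therefore leaves the owner unchanged. Only the loss of the owner moves the IP to another node.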

Architecture

In layer 2 mode, MetalLB runs two components:

- Cluster-wide controller: this component handles IP address allocation, assigning external IPs from the configured pools to LoadBalancer services.

- Speaker: runs on every node of the cluster (it is deployed as a DaemonSet) and advertises the service IPs. In layer 2 mode, it does this by answering ARP/NDP requests with its node's MAC address.

Demo

First, install MetalLB using the provided manifest:

$ kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.7.3/manifests/metallb.yaml

New pods are created in the metallb-system namespace: one controller and three speakers, one per node:

$ kubectl get pod -n metallb-system -o wide
NAME                          READY   STATUS    RESTARTS   AGE    IP                NODE    NOMINATED NODE   READINESS GATES
controller-7cc9c87cfb-dqz6z   1/1     Running   0          145m   10.233.70.3       node5   <none>           <none>
speaker-2pl5m                 1/1     Running   0          145m   192.168.121.170   node3   <none>           <none>
speaker-5ndrq                 1/1     Running   0          145m   192.168.121.224   node4   <none>           <none>
speaker-rln5v                 1/1     Running   0          145m   192.168.121.72    node5   <none>           <none>

The next step is to configure MetalLB using a ConfigMap, in which we set the operating mode (layer 2 or BGP) and the external IP address range:

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: my-ip-space
      protocol: layer2
      addresses:
      - 192.168.143.230-192.168.143.250

In this configuration we tell MetalLB to hand out addresses from the 192.168.143.230-192.168.143.250 range, using layer 2 mode (protocol: layer2).

To test our load balancer, we create a service of type LoadBalancer using the tutorial manifest from the MetalLB repository:

$ kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.7.3/manifests/tutorial-2.yaml

A new LoadBalancer service named nginx is created, and MetalLB assigns it an external IP address from the pool we specified in the configuration:

$ kubectl get svc nginx
NAME    TYPE           CLUSTER-IP     EXTERNAL-IP       PORT(S)        AGE
nginx   LoadBalancer   10.233.30.62   192.168.143.230   80:31937/TCP   6h26m
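For scripted checks, reading the service as JSON (`kubectl get svc nginx -o json`) can be easier than parsing the table. Kubernetes publishes the assigned external IP in the service's `status.loadBalancer.ingress` field, which stays empty while the service is pending. The helper below is a minimal sketch. `external_ip` is a hypothetical name, and the sample JSON is trimmed to the relevant fields.

```python
import json
from typing import Optional


def external_ip(service_json: str) -> Optional[str]:
    """Return the first ingress IP of a LoadBalancer service, or None if pending."""
    service = json.loads(service_json)
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in ingress:
        if "ip" in entry:
            return entry["ip"]
    return None


# Trimmed example of `kubectl get svc nginx -o json` output (only relevant fields).
sample = '{"metadata": {"name": "nginx"}, "status": {"loadBalancer": {"ingress": [{"ip": "192.168.143.230"}]}}}'
print(external_ip(sample))  # 192.168.143.230
```

The same field can also be read directly with `kubectl get svc nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}'`.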

When a client tries to reach the service, it first sends an ARP request to find the MAC address associated with the external IP address. The speaker on the node that owns the service replies with the MAC address of its node:

$ kubectl logs -l component=speaker -n metallb-system --since=1m

{"caller":"arp.go:102","interface":"eth2","ip":"192.168.143.230","msg":"got ARP request for service IP, sending response","responseMAC":"52:54:00:a8:63:c5","senderIP":"192.168.143.1","senderMAC":"52:54:00:bd:4a:3e","ts":"2019-04-25T14:21:58.369396026Z"}

{"caller":"arp.go:102","interface":"eth2","ip":"192.168.143.230","msg":"got ARP request for service IP, sending response","responseMAC":"52:54:00:a8:63:c5","senderIP":"192.168.143.1","senderMAC":"52:54:00:bd:4a:3e","ts":"2019-04-25T14:22:29.145677Z"}
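These log lines describe the content of each reply. As an illustration, the Python sketch below builds the 28-byte ARP reply payload (RFC 826 layout, Ethernet header omitted) corresponding to such a response, using the addresses from the logs above. `build_arp_reply` is a hypothetical helper. It only builds bytes and sends nothing, since sending would require a raw socket and elevated privileges.

```python
import ipaddress
import struct


def mac_bytes(mac: str) -> bytes:
    """Convert "aa:bb:cc:dd:ee:ff" to 6 raw bytes."""
    return bytes.fromhex(mac.replace(":", ""))


def build_arp_reply(service_ip: str, node_mac: str,
                    requester_ip: str, requester_mac: str) -> bytes:
    """Build the 28-byte ARP reply payload (RFC 826 layout, no Ethernet header).

    The sender fields state "service_ip is at node_mac", which is what the
    speaker of the owning node tells the requester.
    """
    return struct.pack(
        "!HHBBH6s4s6s4s",
        1,                                           # hardware type: Ethernet
        0x0800,                                      # protocol type: IPv4
        6,                                           # hardware address length
        4,                                           # protocol address length
        2,                                           # opcode 2 = reply
        mac_bytes(node_mac),                         # sender MAC (node)
        ipaddress.IPv4Address(service_ip).packed,    # sender IP (service IP)
        mac_bytes(requester_mac),                    # target MAC (requester)
        ipaddress.IPv4Address(requester_ip).packed,  # target IP (requester)
    )


reply = build_arp_reply("192.168.143.230", "52:54:00:a8:63:c5",
                        "192.168.143.1", "52:54:00:bd:4a:3e")
print(reply.hex())
# 0001080006040002525400a863c5c0a88fe6525400bd4a3ec0a88f01
```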

Layer 2 mode makes it very simple to provide LoadBalancer services, but it is also limited. All traffic for a given service IP enters the cluster through a single node, which can become a bottleneck. In a production environment, BGP mode is usually a better fit.

BGP

In BGP mode, the speakers establish BGP peering sessions with routers outside the cluster and tell those routers how to forward traffic to the service IPs. Using BGP allows true load balancing across multiple nodes, and fine-grained traffic control thanks to BGP’s policy mechanisms.

Note:

In this demo we will not describe the router configuration. We assume that the router accepts all BGP connections coming from the speakers.

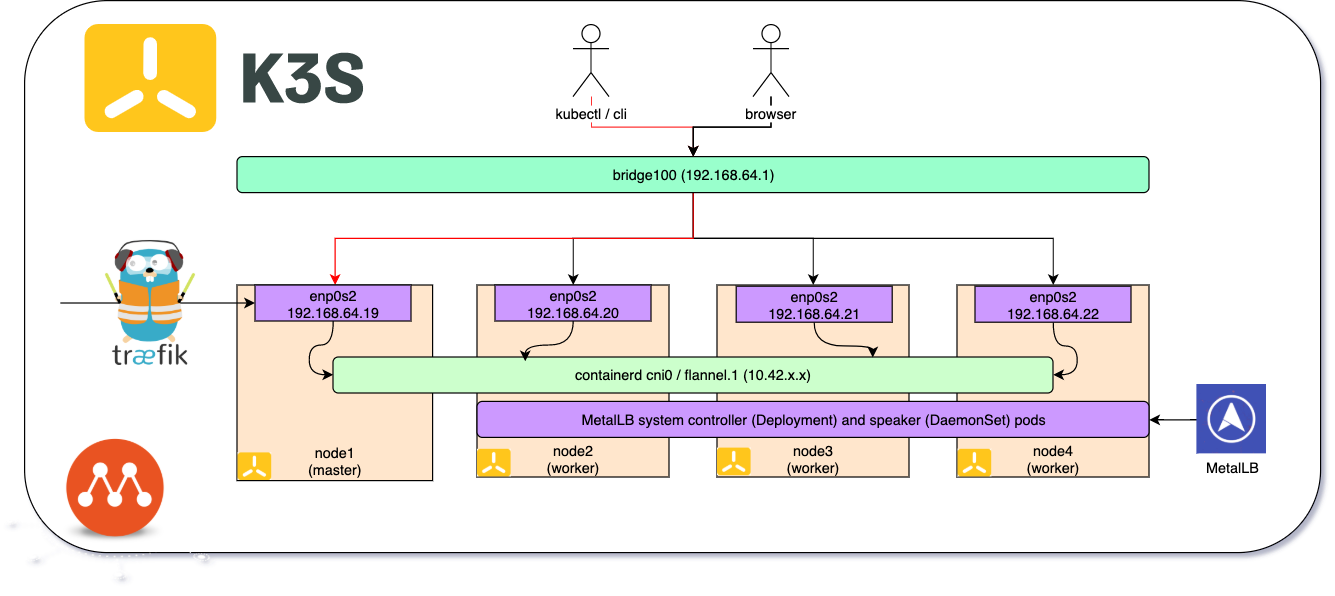

The following architecture is used in this demo:

Just like with the first mode, install MetalLB using the provided manifest:

$ kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.7.3/manifests/metallb.yaml

Then configure MetalLB with a ConfigMap:

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    peers:
    - my-asn: 64500
      peer-asn: 64500
      peer-address: 192.168.121.10
    address-pools:
    - name: my-ip-space
      protocol: bgp
      addresses:
      - 192.168.143.230-192.168.143.250

In addition to the external IP pool, we define the AS number used by the speakers (my-asn). For each remote peer, we also give its IP address (peer-address) and AS number (peer-asn). Both sides use AS 64500 here, so these are internal BGP (iBGP) sessions.

On the router, the three speakers (one per node) now appear in the BGP neighbor table:

R1#show ip bgp summary
BGP router identifier 192.168.143.2, local AS number 64500
BGP table version is 23, main routing table version 23
1 network entries using 144 bytes of memory
1 path entries using 80 bytes of memory
1/1 BGP path/bestpath attribute entries using 136 bytes of memory
0 BGP route-map cache entries using 0 bytes of memory
0 BGP filter-list cache entries using 0 bytes of memory
BGP using 360 total bytes of memory
BGP activity 5/4 prefixes, 13/12 paths, scan interval 60 secs

Neighbor        V           AS MsgRcvd MsgSent   TblVer  InQ OutQ Up/Down  State/PfxRcd
192.168.121.72  4        64500       2       4       23    0    0 00:00:24        0
192.168.121.170 4        64500       2       5       23    0    0 00:00:24        0
192.168.121.224 4        64500       2       4       23    0    0 00:00:24        0
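To check the peering from a script rather than by eye, one could parse this output. The Python sketch below assumes the Cisco IOS text format shown above. `bgp_neighbor_status` is a hypothetical helper. Where the router offers a structured interface, that would be more robust than text parsing.

```python
import ipaddress


def bgp_neighbor_status(summary: str) -> dict[str, str]:
    """Map neighbor IP -> session status from Cisco IOS `show ip bgp summary` text.

    In the last column, IOS prints the number of prefixes received when a
    session is Established, and the session state (Idle, Active, ...) otherwise.
    Multi-word states such as "Idle (Admin)" are not handled by this sketch.
    """
    status = {}
    in_table = False
    for line in summary.splitlines():
        fields = line.split()
        if fields[:1] == ["Neighbor"]:  # header row of the neighbor table
            in_table = True
            continue
        if not in_table or len(fields) < 10:
            continue
        try:
            ipaddress.ip_address(fields[0])
        except ValueError:
            continue  # not a neighbor row
        last = fields[-1]
        status[fields[0]] = "Established" if last.isdigit() else last
    return status


summary = """Neighbor        V           AS MsgRcvd MsgSent   TblVer  InQ OutQ Up/Down  State/PfxRcd
192.168.121.72  4        64500       2       4       23    0    0 00:00:24        0
192.168.121.170 4        64500       2       5       23    0    0 00:00:24        0
192.168.121.224 4        64500       2       4       23    0    0 00:00:24        0
"""
print(bgp_neighbor_status(summary))
```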

The next step is to create a service and let MetalLB do its job:

$ kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.7.3/manifests/tutorial-2.yaml

Just after the creation of the service we can see in the speaker logs that it announces the new external IP address to the router:

{"caller":"main.go:229","event":"serviceAnnounced","ip":"192.168.143.230","msg":"service has IP, announcing","pool":"my-ip-space","protocol":"bgp","service":"default/nginx","ts":"2019-04-25T22:14:52.082805682Z"}

{"caller":"main.go:231","event":"endUpdate","msg":"end of service update","service":"default/nginx","ts":"2019-04-25T22:14:52.082823764Z"}

{"caller":"main.go:159","event":"startUpdate","msg":"start of service update","service":"default/nginx","ts":"2019-04-25T22:14:49.878731257Z"}

{"caller":"main.go:172","event":"endUpdate","msg":"end of service update","service":"default/nginx","ts":"2019-04-25T22:14:49.878992728Z"}

{"caller":"main.go:159","event":"startUpdate","msg":"start of service update","service":"default/nginx","ts":"2019-04-25T22:14:49.885773857Z"}

{"caller":"bgp_controller.go:201","event":"updatedAdvertisements","ip":"192.168.143.230","msg":"making advertisements using BGP","numAds":1,"pool":"my-ip-space","protocol":"bgp","service":"default/nginx","ts":"2019-04-25T22:14:49.886003805Z"}
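The speaker logs are JSON objects, one per line, so they are easy to filter. The Python sketch below collects the `serviceAnnounced` events, for example from the output of `kubectl logs -l component=speaker -n metallb-system`. `announced_services` is a hypothetical helper, and its field names are taken from the log lines above.

```python
import json


def announced_services(log_lines):
    """Return {service: ip} for every "serviceAnnounced" event in speaker logs.

    Assumes one JSON object per line, as in the speaker logs above; lines
    that are not valid JSON objects are skipped.
    """
    announced = {}
    for line in log_lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(entry, dict) and entry.get("event") == "serviceAnnounced":
            announced[entry["service"]] = entry["ip"]
    return announced


logs = [
    '{"caller":"main.go:229","event":"serviceAnnounced","ip":"192.168.143.230","msg":"service has IP, announcing","pool":"my-ip-space","protocol":"bgp","service":"default/nginx","ts":"2019-04-25T22:14:52.082805682Z"}',
    '{"caller":"main.go:231","event":"endUpdate","msg":"end of service update","service":"default/nginx","ts":"2019-04-25T22:14:52.082823764Z"}',
]
print(announced_services(logs))  # {'default/nginx': '192.168.143.230'}
```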

On the router, a new route for the external IP address appears, with three equal-cost paths, one via each node:

R1#show ip route bgp
Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP
       D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area
       N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2
       E1 - OSPF external type 1, E2 - OSPF external type 2
       i - IS-IS, su - IS-IS summary, L1 - IS-IS level-1, L2 - IS-IS level-2
       ia - IS-IS inter area, * - candidate default, U - per-user static route
       o - ODR, P - periodic downloaded static route, H - NHRP, l - LISP
       + - replicated route, % - next hop override

Gateway of last resort is not set

      192.168.143.0/32 is subnetted, 1 subnets
B        192.168.143.230 [200/0] via 192.168.121.224, 00:00:15
                         [200/0] via 192.168.121.170, 00:00:15
                         [200/0] via 192.168.121.72, 00:00:15

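Using BGP as a load-balancing mechanism lets you rely on standard router hardware, but it has downsides too. Routers typically spread flows across the equal-cost paths with a stateless per-flow hash. When a node is added or removed, active connections may therefore be rehashed to another node and broken. The MetalLB documentation on BGP mode describes these limitations and how to mitigate them.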
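The Python sketch below illustrates why path changes hurt. It models ECMP as a hash of each flow's 5-tuple modulo the number of next hops, using the three next hops from the routing table above. It then counts the flows that move to another node when one node is withdrawn, even though their original node is still up. For comparison, it also applies rendezvous hashing, which only moves the flows of the withdrawn node. Both selection functions are simplified models chosen for illustration, not what any given router implements. Flows that were on the withdrawn node are broken in either case.

```python
import hashlib


def flow_hash(flow: tuple, salt: str = "") -> int:
    """Deterministic 64-bit hash of a flow 5-tuple (stand-in for a router's hash)."""
    key = (salt + "|" + "|".join(map(str, flow))).encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")


def modulo_next_hop(flow: tuple, next_hops: list[str]) -> str:
    """Simple ECMP model: hash modulo the number of equal-cost paths."""
    return next_hops[flow_hash(flow) % len(next_hops)]


def rendezvous_next_hop(flow: tuple, next_hops: list[str]) -> str:
    """Rendezvous hashing: the next hop with the highest (flow, hop) score wins."""
    return max(next_hops, key=lambda hop: flow_hash(flow, salt=hop))


def needlessly_moved(select, flows, before: list[str], after: list[str]) -> int:
    """Count flows that change next hop although their old next hop still exists."""
    moved = 0
    for flow in flows:
        old = select(flow, before)
        if old in after and select(flow, after) != old:
            moved += 1
    return moved


# Next hops from the routing table above; the client flows are hypothetical.
hops = ["192.168.121.224", "192.168.121.170", "192.168.121.72"]
flows = [("198.51.100.10", port, "192.168.143.230", 80, "tcp")
         for port in range(40000, 40600)]
survivors = hops[:2]  # node 192.168.121.72 is withdrawn

print("modulo:", needlessly_moved(modulo_next_hop, flows, hops, survivors))
print("rendezvous:", needlessly_moved(rendezvous_next_hop, flows, hops, survivors))  # always 0
```

With plain modulo hashing in this model, roughly half of the flows on the remaining nodes are rehashed. Some routers offer resilient ECMP hashing to reduce this effect.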

Conclusion

MetalLB makes it possible to create Kubernetes LoadBalancer services without deploying your cluster on a cloud platform. MetalLB has two modes of operation. The layer 2 mode is simple but limited, and doesn’t need any external hardware or configuration. The BGP mode is more robust and production ready, but requires more setup on the network side.