@bauthard - It seems like we need fdmax ?

ZeroDot1 closed 4 years ago

@Mzack9999 Is work being done on this? May I take a stab at it?

Before using fdmax, we have to check that all the resources used in each source are released correctly; some of them may not be. I think it is a good exercise. There are some Go gotchas with defer, e.g. it does not work as expected inside loops.

Can we test this against the new 2.4 release?

@ZeroDot1 Can you try to reproduce this with the latest release?

@bauthard Yeah, I'll take another look at that.

@bauthard Yes, I succeeded reproducing the problem...

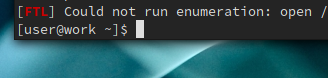

[FTL] Could not run enumeration: open /11/doipool.com.txt: too many open files

[INF] Current Version: 2.4.1

I use a list of currently 1694 URLs to check for subdomains.

Kernel: 5.8.2-arch1-1 x86_64 CPU: Intel Core i5-8600K Memory: 31983MiB Distro: Arch Linux go: 2:1.15-1

Thank you very much, @ZeroDot1

I don't know whether the right thing is to handle this at the development level, because this is an OS ulimit issue. I don't think using some technique to bypass the ulimit is correct: it is up to the user to choose whether to raise the limit or not.

A quick solution would be to split the lists with the URLs into different files. However, this means a significant amount of additional work when maintaining the lists. With other tools, e.g. Findomain, I don't have this problem because I successfully tested a URL list with 50.000 URLs without any interruption.

While researching for the CoinBlockerLists I tried a lot of tools and solutions. Subfinder is the only solution that can deliver the most subdomains, that's why I use Subfinder a lot. Subfinder is definitely very useful for my work on the CoinBlockerLists, and I really appreciate the work you guys do. Thank you very much.

@ZeroDot1 Could you please give me a URL test file?

@vzamanillo Yes, here is a list of 1261 mixed URLs. File: testurls.txt

It's time to use fdmax, but IMO it should be used as an option. Thoughts?

Yes, it is a good idea to use this as an additional option.

It should be easy to enable it with an option e.g. --fdmax.

Example:

cat '/URLsToScan.txt' | 'go/bin/subfinder' -config '/Config/subfinder/config.yaml' -t 100 -timeout 1 -fdmax -silent -oD /11/ | tee /URLsToScanDONEsubfinder.txt

Alternatively, it should also be possible to activate the option in the configuration file.

Hi @vzamanillo @bauthard,

Thanks for the update. Unfortunately I can't test it at the moment because I have problems with my internet connection (see the following tweet: https://twitter.com/zero_dot1/status/1306782690696466435). As soon as my internet works properly again I will test it immediately. Thanks a lot for your work, I appreciate it very much.

Hi @vzamanillo @bauthard,

Bad news. Because the internet is still not working properly at my home, I visited a friend today to test subfinder.

Unfortunately the problem still occurs.

Subfinder was compiled from the sources.

Looks like a different error; it is not related to the file descriptor limit.

Could you please paste the complete command?

I used exactly the same commands as in the first posting.

cat '/URLsToScan.txt' | 'go/bin/subfinder' -config '/Config/subfinder/config.yaml' -t 100 -timeout 1 -silent -oD /11/ | tee /URLsToScanDONEsubfinder.txt

[FTL] Could not run enumeration: open /home/user/Dokumente/GitLab/coinblockerlistspro/11/moneropool.com.txt: too many open files

Oh, I see, sorry.

Hey no problem, we are all humans.

What's the problem (or question)?

While performing an automatic scan, the following problem occurred:

[FTL] Could not run enumeration: open /11/miningpools.cloud.txt: too many open files

After this message Subfinder stopped working.

How can we reproduce the issue?

cat '/URLsToScan.txt' | 'go/bin/subfinder' -config '/Config/subfinder/config.yaml' -t 100 -timeout 1 -silent -oD /11/ | tee /URLsToScanDONEsubfinder.txt

After 1034 URLs the error occurred. I don't know if it is a problem in Subfinder or again a problem with my internet connection.

What are the running context details?

pip, apt-get, git clone or zip/tar.gz): GO111MODULE=on go get -v github.com/projectdiscovery/subfinder/cmd/subfinder

[FTL] Could not run enumeration: open /11/miningpools.cloud.txt: too many open files