Alternatively, and I think this would be useful as a generic option, a new grouping behavior could be introduced where alerts are only grouped within the initial `group_wait` period. After notifications are sent for a group, it is effectively frozen, and new alerts matching the group key create a new group instead. Does that make sense? I think that would work better with PagerDuty, and perhaps with other alerting integrations.
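To make the proposal concrete, here is a minimal Python sketch of "freeze after notify" grouping. It is purely illustrative, not Alertmanager code: `FreezingGrouper`, `add`, and `flush` are hypothetical names, and `flush` is assumed to be called when `group_wait` expires and the notification is sent.

```python
from dataclasses import dataclass, field


@dataclass
class Group:
    key: str
    generation: int  # how many groups have been created for this key so far
    alerts: list = field(default_factory=list)
    notified: bool = False


class FreezingGrouper:
    """Hypothetical grouper: alerts join a group only until it has been notified."""

    def __init__(self):
        self._open = {}        # group key -> open (not yet notified) group
        self._generation = {}  # group key -> number of groups created for it
        self.frozen = []       # groups that were notified and can no longer grow

    def add(self, group_key, alert):
        group = self._open.get(group_key)
        if group is None:
            # No open group for this key: start a fresh generation.
            gen = self._generation.get(group_key, 0) + 1
            self._generation[group_key] = gen
            group = Group(group_key, gen)
            self._open[group_key] = group
        group.alerts.append(alert)
        return group

    def flush(self, group_key):
        """Assumed to run when group_wait expires: notify, then freeze the group."""
        group = self._open.pop(group_key, None)
        if group is not None:
            group.notified = True
            self.frozen.append(group)
        return group
```

With this behavior, an alert arriving after the first `flush` lands in generation 2 instead of silently joining the already-notified group, so it could be sent to PagerDuty under its own dedup key.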

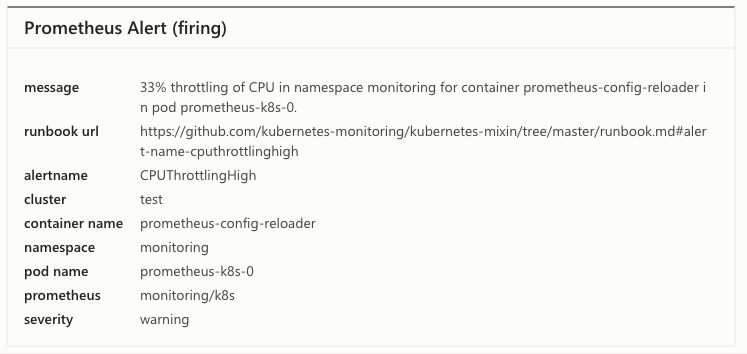

When triggering multiple alerts that get grouped together, the result in PagerDuty winds up being like this:

Only the alert summary gets updated, and subsequent messages get logged. The alert details are not updated (they still reflect the original message and summary). You have to click through the detailed log to see each individual API message sent from Alertmanager.
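For context on why this happens: PagerDuty's Events API v2 appends any `trigger` event whose `dedup_key` matches an already open alert to that alert instead of opening a new one. The Python sketch below builds such a request body (POSTed to `https://events.pagerduty.com/v2/enqueue`). It assumes, for illustration only, that the dedup key is a SHA-256 hex digest of the Alertmanager group key, so every notification for the same group reuses it; `pagerduty_event` is a hypothetical helper, not part of Alertmanager.

```python
import hashlib


def pagerduty_event(routing_key, group_key, summary, source,
                    severity="critical", action="trigger"):
    """Build a PagerDuty Events API v2 body for one Alertmanager notification."""
    # Assumption: the dedup key is derived only from the group key, so adding
    # alerts to the group changes the summary but never the dedup key.
    dedup_key = hashlib.sha256(group_key.encode("utf-8")).hexdigest()
    return {
        "routing_key": routing_key,
        "event_action": action,  # "trigger", "acknowledge" or "resolve"
        "dedup_key": dedup_key,
        "payload": {"summary": summary, "source": source, "severity": severity},
    }
```

Two notifications for the same group (say, "1 alert firing" and then "2 alerts firing") produce identical `dedup_key` values, which is exactly why PagerDuty folds the second one into the existing alert.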

Ideally I'd want grouped alerts to show up individually in PagerDuty, and then be associated with the same incident, but AIUI there isn't an API for this.

The main issue here is that as further alerts are grouped together, new notifications are not triggered. This is dangerous, because it means alerts can go unnoticed quite silently (until the operator manually polls Prometheus and/or Alertmanager). Basically, Alertmanager is "editing" an existing alert instead of creating new ones. The only workaround is to disable grouping altogether, but that defeats the grouping of simultaneous alerts, which means alert storms when several things break due to one root cause.

I'm honestly not sure what the right solution is here, but maybe something like generating a new alert per group, with a new `dedup_key`, whenever additional alerts are grouped together, and doing something smart about resolution events: track when each sent event has had all of its root alerts resolved, and send the resolution event then? With the current behavior, the only clean setup I can see is to disable alert grouping altogether.

alertmanager, version 0.15.2 (branch: HEAD, revision: d19fae3bae451940b8470abb680cfdd59bfa7cfa)
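The `dedup_key`-per-growth idea can be sketched in Python as a small per-group tracker. This is a hypothetical design, not existing Alertmanager behavior: `IncidentTracker`, `on_firing`, and `on_resolved` are illustrative names, alerts are identified by assumed fingerprint strings, and the `group_key#N` key format is arbitrary.

```python
class IncidentTracker:
    """Hypothetical per-group tracker: one PagerDuty event per batch of new alerts."""

    def __init__(self, group_key):
        self.group_key = group_key
        self.events = {}    # dedup_key -> fingerprints of its root alerts still firing
        self._seen = set()  # fingerprints already covered by some open event
        self._counter = 0

    def on_firing(self, fingerprints):
        """Return a new dedup_key to 'trigger' if unseen alerts joined, else None."""
        new = set(fingerprints) - self._seen
        if not new:
            return None  # nothing new: no extra PagerDuty event needed
        self._counter += 1
        dedup_key = f"{self.group_key}#{self._counter}"
        self.events[dedup_key] = set(new)
        self._seen |= new
        return dedup_key

    def on_resolved(self, fingerprint):
        """Return dedup_keys whose root alerts are now all resolved ('resolve' these)."""
        self._seen.discard(fingerprint)  # a later re-fire gets a fresh event
        done = []
        for key, firing in list(self.events.items()):
            firing.discard(fingerprint)
            if not firing:
                done.append(key)
                del self.events[key]
        return done
```

Each event is resolved only once every alert that caused it has resolved, so a late-joining alert pages on its own without prematurely closing the original incident.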