
Status: Open. divyekapoor opened this issue 4 years ago.

To be fair, while it can obviously be done, having `forward` produce side effects (here, setting attributes) is not the most common use case.

@Evpok Even without the side effects, the performance gap persists. Just check out https://github.com/divyekapoor/ml-op-benchmarks and change the code if you'd prefer:

Outcomes:

Time (PyTorch) (ms): 4097.000675

Time (PyTorch optimized=True) (ms): 3982.672392

Time (PyTorch optimized=False) (ms): 4017.969171

Time (PyTorch from Loaded) (ms): 2879.079591

Time taken (Python3) (ms): 18.797112

Code:

```python
import torch


class TorchFizzBuzz(torch.nn.Module):
    def __init__(self):
        super(TorchFizzBuzz, self).__init__()

    def forward(self, n: torch.Tensor):
        # The loop counter is itself a tensor, so every comparison,
        # modulo and increment below is a tensor op.
        i = torch.tensor(0, dtype=torch.int32, requires_grad=False)
        fizz = torch.zeros(1)
        buzz = torch.zeros(1)
        fizzbuzz = torch.zeros(1)
        while i < n:
            if i % 6 == 0:
                fizzbuzz += 1
            elif i % 3 == 0:
                buzz += 1
            elif i % 2 == 0:
                fizz += 1
            i += 1
        return torch.stack([fizz, buzz, fizzbuzz])
```

@Evpok Following the discussion with the TensorFlow folks (tensorflow/tensorflow#34500), we generated a NumPy baseline, if that would be preferable.

FizzBuzz iteration count: 100,000

| Method | Latency (ms) | Iteration latency (µs) | Python multiplier (vs. raw Python) | C++ multiplier (vs. raw C++) |
|---|---|---|---|---|
| TensorFlow Python | 4087 | 40.87 | 227.06 | 24327 |
| TensorFlow Saved Model Python | 4046 | 40.46 | 224.78 | 24083 |
| TensorFlow Python, no AutoGraph | 3981 | 39.81 | 221.16 | 23696 |
| PyTorch Python | 4007 | 40.07 | 222.61 | 23851 |
| PyTorch TorchScript Python (from loaded TorchScript) | 2830 | 28.3 | 157.22 | 16845 |
| NumPy Python | 420 | 4.2 | 23.3 | 2500 |
| PyTorch TorchScript C++ (native) | 255 | 2.55 | 14.17 | 1518 |
| PyTorch TorchScript C++ (native + ATen tensors) | 252 | 2.52 | 14.00 | 1500 |
| Raw Python | 18 | 0.18 | 1.00 | 107 |
| Raw C++ | 0.168 | 0.00168 | 0.01 | 1 |
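The derived columns follow directly from the latency column: per-iteration latency is the total divided by the 100,000 iterations (converted to µs), and each multiplier divides by the Raw Python (18 ms) or Raw C++ (0.168 ms) row. A small sketch that recomputes them, handy for sanity-checking a table like this; the function and constant names are illustrative only:

```python
from typing import Tuple

ITERATIONS = 100_000
RAW_PYTHON_MS = 18.0  # "Raw Python" row
RAW_CPP_MS = 0.168    # "Raw C++" row


def derived_columns(latency_ms: float) -> Tuple[float, float, float]:
    """Return (iteration latency in µs, Python multiplier, C++ multiplier)."""
    per_iteration_us = latency_ms * 1000.0 / ITERATIONS  # ms -> µs, then per iteration
    return per_iteration_us, latency_ms / RAW_PYTHON_MS, latency_ms / RAW_CPP_MS


# Recompute the TensorFlow Python and NumPy Python rows.
for name, ms in [("TensorFlow Python", 4087.0), ("NumPy Python", 420.0)]:
    us, py_x, cpp_x = derived_columns(ms)
    print(f"{name}: {us:.2f} us/iter, {py_x:.2f}x Python, {cpp_x:.0f}x C++")
```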

Why is the NumPy version faster than TorchScript C++ per iteration but slower over 100,000 iterations? It seems one of those figures is off by a factor of 10. Is the per-iteration latency meant to be 4.2 µs?

Yes. Fixed. Thanks for pointing it out.

A colleague also got a vectorized TorchScript implementation running with a ~8 ms baseline (beating the 18 ms of raw Python), so that could serve as a reference implementation. The discussion on the equivalent TF bug is also quite useful; they have some experimental workarounds.
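The colleague's vectorized code isn't included in this thread. As a related illustration of why removing the per-element loop matters, here is a swapped-in technique (closed-form counting, not their implementation): the three counts can be computed in constant time by counting multiples of 2, 3 and 6. The sketch assumes the same buckets as `TorchFizzBuzz` (divisible by 6, else by 3, else by 2) and checks itself against the plain per-element loop:

```python
from typing import Tuple


def fizzbuzz_loop(n: int) -> Tuple[int, int, int]:
    """Plain-Python per-element loop, same buckets as TorchFizzBuzz."""
    fizz = buzz = fizzbuzz = 0
    for i in range(n):
        if i % 6 == 0:
            fizzbuzz += 1
        elif i % 3 == 0:
            buzz += 1
        elif i % 2 == 0:
            fizz += 1
    return fizz, buzz, fizzbuzz


def multiples_below(n: int, k: int) -> int:
    """Count of i in [0, n) with i % k == 0, for n >= 0."""
    return (n + k - 1) // k


def fizzbuzz_closed_form(n: int) -> Tuple[int, int, int]:
    """O(1) counts via inclusion-exclusion on multiples of 2, 3 and 6."""
    by_6 = multiples_below(n, 6)
    fizz = multiples_below(n, 2) - by_6  # even, not divisible by 3
    buzz = multiples_below(n, 3) - by_6  # divisible by 3, odd
    return fizz, buzz, by_6


print(fizzbuzz_closed_form(100_000))  # (33333, 16667, 16667)
```

The design trade-off is the usual one: the closed form only works because the rule is simple arithmetic, whereas real feature logic may still need some form of loop or masked tensor ops.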

@xsacha @suo Could you indicate the next steps?

Hey @divyekapoor, I'd be interested to know the ultimate use case you're benchmarking for.

The reason I ask is that PyTorch is poorly optimized for doing lots of computations on scalar values. As mentioned on the TF issue, these libraries typically target operations on large tensors, where the per-op overhead is dwarfed by the operator computation itself.

As such, if something fizzbuzz-like is similar to your use case, you're unlikely to get performance comparable to just writing it in C++. In other words, you are paying the cost of using PyTorch (overhead) without benefiting from its core features (autograd, rich tensor library, etc.)
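A back-of-the-envelope model of this argument: if each op pays a fixed dispatch overhead plus a per-element cost, the overhead's share of wall time shrinks as tensors grow, and dominates completely for scalars. The 10 µs overhead and 1 ns per-element figures below are hypothetical placeholders, not measured PyTorch numbers:

```python
def overhead_fraction(num_elements: int, per_op_overhead_us: float, per_element_us: float) -> float:
    """Share of one op's wall time spent on fixed per-op overhead."""
    compute_us = num_elements * per_element_us
    return per_op_overhead_us / (per_op_overhead_us + compute_us)


# Hypothetical costs: 10 µs fixed overhead per op, 1 ns (0.001 µs) of work per element.
for n in (1, 1_000, 1_000_000, 100_000_000):
    print(f"{n:>11,} elements: {overhead_fraction(n, 10.0, 0.001):.4%} overhead")
```

A FizzBuzz loop sits at the one-element end of this curve on every iteration, which is why the per-iteration latencies in the table above are tens of microseconds.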

That said, a few thoughts on this particular case:

Thanks for the detailed reply, @suo! Our use case is cross features for some of our online serving models, where the features cannot be pre-materialized (think UserContext x ImageFeatures, where both users and images are large sets, O(millions/billions)). Think of these as powering something like the Instagram or Pinterest feed.

For the purposes of our discussion, assume an LR model where Users have some topic affinities and Images have some topic affinities. Both are sparse vectors of the form { topic: weight }. The cross feature is the dot product after some sanitization, thresholding, normalization, and boosts. The dot product itself is easy to vectorize, but everything around it is regular control flow (e.g., boost the feature value by 2x if both sides have more than 3 matches from a given list [a, b, c]; if there are more than 7 matches from this other list, reduce it by 0.5; one feature might be a count of matches against a hardcoded feature subset; etc.).

To be clear, this is a hypothetical, illustrative example. The actual cross features are currently implemented in straight custom C++ in our serving binary, written by model engineers, and are quite varied. However, given the User and Image inputs, we'd like the serving binary to never need to know how to generate these crosses (it should all be part of the model code). Model engineers can then be more productive (no C++), and the infra simplifies (everyone deals with non-cross features as inputs, even though those inputs may be somewhat complex, e.g., maps). Similarly, in training, the cross features can be backfilled or tuned (again, with no materialized cross features). The end goal is non-trivial cross features produced in-model during execution, using light control-flow ops without lots of overhead.
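A minimal sketch of the kind of hypothetical cross feature described above, assuming L2 normalization, a fixed weight threshold for sanitization, and reading "reduce by 0.5" as halving the score. The topic lists (`BOOST_TOPICS`, `DAMPEN_TOPICS`), thresholds, and function names are all made up for illustration. The point it shows is that the dot product is the only easily vectorizable part; the rest is small, data-dependent branching on a handful of values:

```python
import math
from typing import Dict, FrozenSet

SparseVec = Dict[str, float]

# Hypothetical rule parameters, for illustration only.
BOOST_TOPICS: FrozenSet[str] = frozenset({"art", "travel", "food", "design", "music"})
DAMPEN_TOPICS: FrozenSet[str] = frozenset(f"promo_{i}" for i in range(10))
MIN_WEIGHT = 0.05  # sanitize/threshold: drop near-zero affinities


def l2_normalize(vec: SparseVec) -> SparseVec:
    norm = math.sqrt(sum(w * w for w in vec.values()))
    return {t: w / norm for t, w in vec.items()} if norm > 0 else {}


def sparse_dot(a: SparseVec, b: SparseVec) -> float:
    # Iterate over the smaller map and probe the larger one.
    if len(a) > len(b):
        a, b = b, a
    return sum((w * b[t] for t, w in a.items() if t in b), 0.0)


def cross_feature(user: SparseVec, image: SparseVec) -> float:
    u = l2_normalize({t: w for t, w in user.items() if w >= MIN_WEIGHT})
    v = l2_normalize({t: w for t, w in image.items() if w >= MIN_WEIGHT})
    score = sparse_dot(u, v)
    # Control-flow rules around the dot product (hypothetical thresholds).
    if len(u.keys() & BOOST_TOPICS) > 3 and len(v.keys() & BOOST_TOPICS) > 3:
        score *= 2.0
    if len(u.keys() & v.keys() & DAMPEN_TOPICS) > 7:
        score *= 0.5
    return score
```

Written this way in plain code, each rule costs a few dictionary operations; expressed as individual scalar tensor ops, each rule would instead pay the per-op overhead discussed above.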

(Written on mobile, happy to add more context in a bit)

Is using a tensor as the loop counter and for control-flow operations necessary, or does it make the benchmark more representative? TorchScript is fine with Python numbers, and rewriting the benchmarked function as

```python
import torch


class TorchFizzBuzz(torch.nn.Module):
    def __init__(self):
        super(TorchFizzBuzz, self).__init__()

    def forward(self, n: int):
        # The counter, comparisons and modulo use a plain int;
        # only the accumulator updates below touch tensors.
        i = 0
        fizz = torch.zeros(1)
        buzz = torch.zeros(1)
        fizzbuzz = torch.zeros(1)
        one = torch.ones(1)
        while i < n:
            if i % 6 == 0:
                fizzbuzz += one
            elif i % 3 == 0:
                buzz += one
            elif i % 2 == 0:
                fizz += one
            i += 1
        return torch.stack([fizz, buzz, fizzbuzz])
```

gives

Time (PyTorch from Loaded) (ms): 135.470804

I think the point of this issue is to illustrate how much slower a tensor is, so that such bottlenecks can be avoided.

Yes, you could replace it with an int, but the point is that counting with a tensor should be similar in speed.

No one here is using FizzBuzz. We use much more complicated models that exhibit the same issues, but they are too complex to post here, and the other work in them (such as matrix multiplication) makes it hard to identify control flow as the source of the slowdown.

I don't think counting with a tensor should be expected to match an int in speed. It would be a nice bonus if it did, but no one is making you use tensors everywhere. Tensors should be used where they make sense, and not where they don't. Also, the native Torch C++ benchmarks listed here don't use tensors for loops and control flow (https://github.com/divyekapoor/ml-op-benchmarks/blob/master/torch_fizz.cc#L17-L37), so in that sense it's not an apples-to-apples comparison.

@xsacha @ngimel I've updated the benchmarks to address @ngimel's comments about an apples-to-apples comparison.

The C++ API benchmark now has two setups: one with a Tensor-based loop counter and one with a native loop counter.

Point to note: the benchmark is meant to illustrate that Tensor-based ops are hundreds of times slower than basic Python code. Even at 135 ms, the PyTorch version of the program is still about 7x slower than raw Python (18 ms).

The root cause is a slow Tensor class, as illustrated by the fact that the torch::Tensor-based loop takes 2700 ms to complete, while a native-counter loop takes just 200 ms (and raw C++ takes only microseconds).

I'm not sure what's driving the slowness with the torch::Tensor class - what would be the best way to investigate?

Apples-to-apples link: https://github.com/divyekapoor/ml-op-benchmarks/blob/master/torch_fizz.cc#L17-L37
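One generic starting point for the "how to investigate" question (a sketch under stated assumptions, not something proposed in the thread) is to micro-benchmark a single op in isolation and divide by the call count, so that, for example, a tensor increment and a no-op can be compared directly. The helper name `per_call_us` and its defaults are hypothetical, and the measured time includes the Python loop and lambda-call overhead, so results should be compared against a no-op baseline. PyTorch also ships a built-in profiler (`torch.profiler`) that attributes time to individual operators.

```python
import time
from typing import Callable


def per_call_us(fn: Callable[[], object], iterations: int = 100_000, repeats: int = 5) -> float:
    """Best-of-`repeats` average latency of one call to fn, in microseconds.

    Includes the cost of the Python for-loop and of calling fn itself,
    so subtract a no-op baseline to estimate the op's own cost.
    """
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        for _ in range(iterations):
            fn()
        best = min(best, time.perf_counter() - start)
    return best / iterations * 1e6
```

For example, with `t = torch.zeros(1)`, comparing `per_call_us(lambda: t.add_(1))` against `per_call_us(lambda: None)` gives a rough per-op dispatch cost from Python; taking the best of several repeats reduces noise from other processes.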

I'm also interested in this issue. I'm relatively new to PyTorch, so take everything I say with a grain of salt, but I thought I'd post my findings here in case someone knows what's going on, because I don't.

I'm currently looking at this because I'm doing RL and writing simple RL environments in TorchScript, since that is easier for rapid development and prototyping than writing my environments in C++.

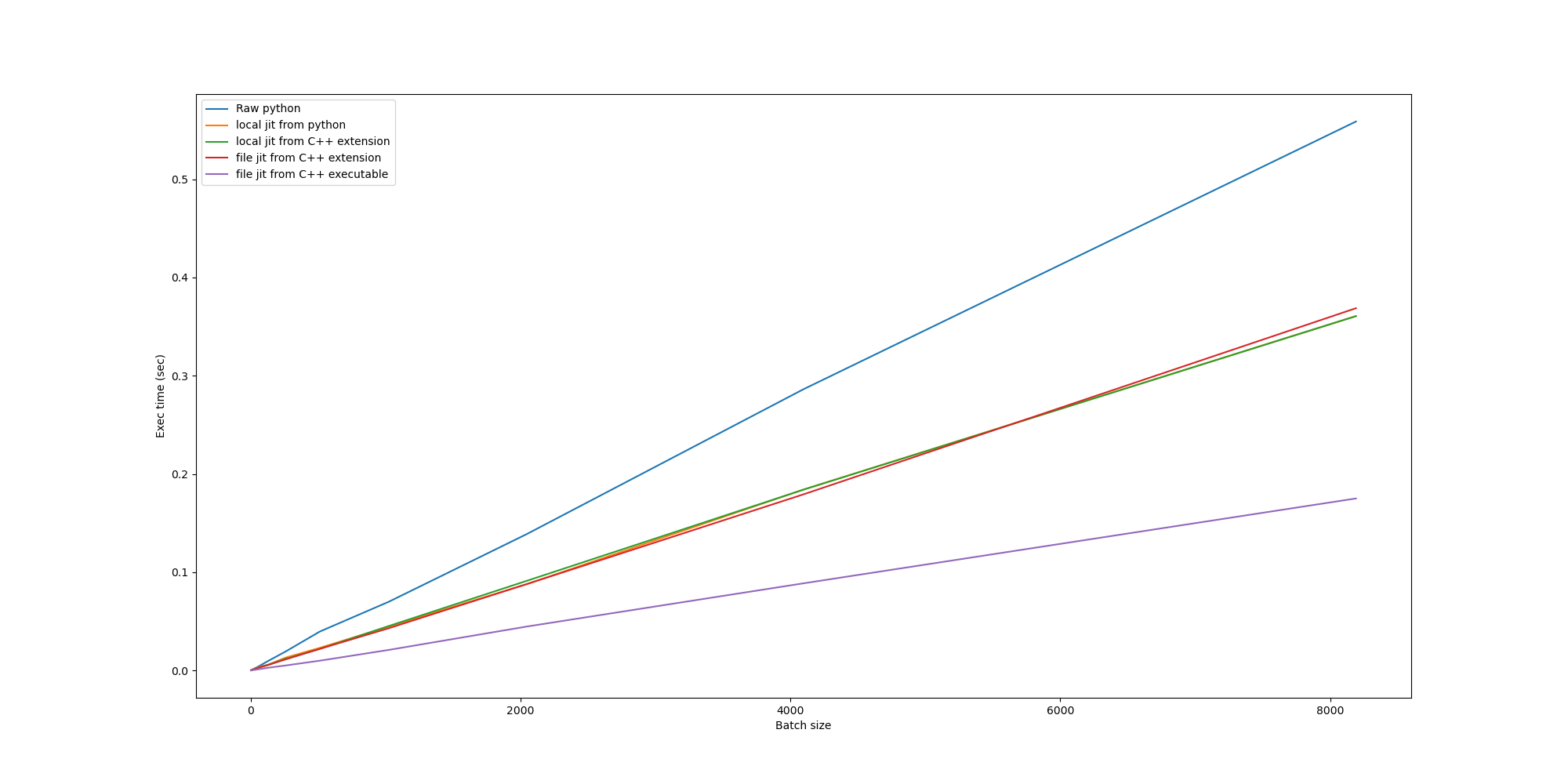

I've written this little benchmark for looking at different approaches: https://github.com/eliasffyksen/RL-env-bench/blob/main/main.py

So far this is what I've found:

The code generates a grid world environment; the model just picks randomly from the available actions (still, up, down, left, right). All the code is in main.py in the repo. Batch size is the number of time steps to simulate in the environment.

torch::jit::load to load the model from file, then run the model that was compiled by C++ from another C++ extension function.

A few notes:

What surprises me is that there is such a big difference, which seems to scale with batch size, between running it in a C++ extension and in a standalone C++ executable, even though both use torch::jit::load to load the same TorchScript file.

Are they actually using different torch::jit::load functionality, or could something about Python be interrupting the process at some interval? If the latter, could this be the case when running a C++ extension in general?

I will try rewriting the environment in C++ and execute it in a C++ extension as well as in a standalone C++ executable while calling the same model to see if there is any discrepancy between those. I'll post here if you guys are interested in the results.
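For readers who don't want to open main.py, the environment described above boils down to something like the following pure-Python sketch: a grid world stepped for `batch_size` time steps with uniformly random actions (still, up, down, left, right). This is not the repo's code; the grid size, the centered start position, clamping at the walls, and the `rollout` name are all assumptions.

```python
import random
from typing import List, Tuple

# Action deltas as (row, col): still, up, down, left, right.
ACTIONS: List[Tuple[int, int]] = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]


def rollout(batch_size: int, height: int = 8, width: int = 8, seed: int = 0) -> List[Tuple[int, int]]:
    """Simulate `batch_size` time steps with a random policy; return visited positions."""
    rng = random.Random(seed)
    row, col = height // 2, width // 2  # hypothetical start: grid center
    trajectory = []
    for _ in range(batch_size):
        dr, dc = rng.choice(ACTIONS)
        # Clamp to the grid so the agent cannot walk off the edge.
        row = min(max(row + dr, 0), height - 1)
        col = min(max(col + dc, 0), width - 1)
        trajectory.append((row, col))
    return trajectory
```

Keeping a plain-Python version like this around gives a cheap baseline to compare the TorchScript environment against at each batch size.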

🐛 Bug

There's a 150x gap in performance for TorchScript ops versus straight Python / C++. Looping over 100K numbers takes 2+ seconds instead of 18ms or better. Please see the benchmarks here: https://github.com/divyekapoor/ml-op-benchmarks

To Reproduce

https://github.com/divyekapoor/ml-op-benchmarks

Steps to reproduce the behavior:

See related TensorFlow issue for context: https://github.com/tensorflow/tensorflow/issues/34500

Expected behavior

Performance similar to raw Python is the expected behavior.

Environment


Installation method (conda, pip, source): pip

Additional context

Code:

cc @suo