TLDR - I just used a plain vanilla transformer layer as a decoder in my model (to avoid extra fiddling with BERT code) and tried your brand-new quantization tutorial.

Hope this helps and you can replicate my error!


cc @zhangguanheng66

Hi, how do I load a quantized model from disk for a BertClassification problem? Any assistance would be greatly appreciated. For now, loading the quantized model with the function below does not work as expected. Is there any way I could load it from disk?

```python
import os

import torch
from transformers import RobertaForSequenceClassification


# Both functions are methods of the poster's own wrapper class (hence `self`).
def load_transformer_model(self, model_path, trainargs=train_args):
    model = RobertaForSequenceClassification.from_pretrained(model_path)
    # model.model.eval()
    return model


def save_transformer_model(self, model, output_dir):
    if not os.path.exists(output_dir):
        os.makedirs(output_dir)
    print("Saving model to %s" % output_dir)
    # Take care of distributed/parallel training
    model_to_save = model.module if hasattr(model, 'module') else model
    model_to_save.save_pretrained(output_dir)
    self.tokenizer.save_pretrained(output_dir)
    torch.save(self.train_args, os.path.join(output_dir, 'training_args.bin'))
```

nn.MHA within nn.Transformer is not supported for quantization now.
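On the question about loading a quantized model from disk: one approach, sketched here under assumptions, is to save only the quantized `state_dict`, and at load time rebuild the *float* architecture, apply the same `quantize_dynamic` call, and only then load the saved weights. Presumably `from_pretrained` alone fails because it builds float `nn.Linear` layers whose parameters do not match the packed int8 entries in a quantized state dict. The tiny `nn.Sequential` and the helpers `build_float_model` and `quantize` are hypothetical stand-ins for `RobertaForSequenceClassification.from_pretrained(...)` and the tutorial's quantization step; an in-memory buffer replaces a real file path.

```python
import io

import torch
import torch.nn as nn


def build_float_model(seed: int = 0) -> nn.Module:
    # Hypothetical stand-in for RobertaForSequenceClassification.from_pretrained(...)
    torch.manual_seed(seed)
    return nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 2))


def quantize(model: nn.Module) -> nn.Module:
    # Same call as the dynamic quantization tutorial: swap nn.Linear for int8 versions
    return torch.quantization.quantize_dynamic(model, {nn.Linear}, dtype=torch.qint8)


# Save: quantize once, then store only the state_dict (packed int8 weights, scales, ...)
qmodel = quantize(build_float_model(seed=0).eval())
buffer = io.BytesIO()  # stands in for a path such as "quantized.pt"
torch.save(qmodel.state_dict(), buffer)

# Load: rebuild the float model (different random init on purpose), quantize it
# the same way, then overwrite its weights with the saved quantized ones
buffer.seek(0)
restored = quantize(build_float_model(seed=1).eval())
# weights_only=False: recent PyTorch defaults to True; only do this for trusted files
restored.load_state_dict(torch.load(buffer, weights_only=False))

x = torch.randn(4, 16)
print(torch.allclose(qmodel(x), restored(x)))  # expected: True
```

The key design point is that the module structure must already be quantized before `load_state_dict`, so the saved keys line up with the dynamic quantized layers.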

If anyone is looking for a simple solution for an encoder module, here it is! I dug a bit deeper into activations => multi-head attention; it is such a mess right now that it's no wonder this issue is not fixed yet. I also wrote a naive wrapper to support loading weights from PyTorch models.

```python
import torch.nn as nn
import torch.nn.functional as F


class MultiHeadAttention(nn.Module):
    def __init__(self, dim=512, n_heads=2, has_out_proj=True, single_matrix=True):
        super().__init__()
        assert dim % n_heads == 0
        if single_matrix:
            # keep order in accordance with PyTorch implementation
            # https://github.com/zhangguanheng66/pytorch/blob/6c743c7721251ca9b5046fc56a071bc1f36916be/torch/nn/functional.py#L3182
            self.QKV = nn.Linear(dim, 3 * dim)
        else:
            self.K = nn.Linear(dim, dim)
            self.Q = nn.Linear(dim, dim)
            self.V = nn.Linear(dim, dim)
        self.single_matrix = single_matrix
        self.scale = (dim / n_heads) ** 0.5  # sqrt(head_dim)
        self.n_heads = n_heads
        self.has_out_proj = has_out_proj
        if self.has_out_proj:
            self.out_proj = nn.Linear(dim, dim)

    def forward(self, x):
        bsz, seq, dim = x.shape
        head_dim = dim // self.n_heads
        if self.single_matrix:
            q, k, v = self.QKV(x).chunk(3, dim=-1)
        else:
            k, q, v = self.K(x), self.Q(x), self.V(x)  # (bs, seq, hid)

        # split heads - process them independently, just like different elements in the batch
        # (bs, seq, hid) -> (seq, bs * head, hid / head) -> (bs * head, seq, hid / head)
        k = k.transpose(0, 1).contiguous().view(seq, bsz * self.n_heads, head_dim).transpose(0, 1)
        q = q.transpose(0, 1).contiguous().view(seq, bsz * self.n_heads, head_dim).transpose(0, 1)
        v = v.transpose(0, 1).contiguous().view(seq, bsz * self.n_heads, head_dim).transpose(0, 1)

        # scores = queries @ keys^T, softmax over the key axis
        # (bs * head, seq, hid / head) @ (bs * head, hid / head, seq) -> (bs * head, seq, seq)
        alpha = F.softmax(q @ k.transpose(1, 2) / self.scale, dim=-1)
        attn = alpha @ v  # (bs * head, seq, seq) @ (bs * head, seq, hid / head)

        # (bs * head, seq, hid / head) -> (seq, bs * head, hid / head) -> (seq, bs, hid) -> (bs, seq, hid)
        attn = attn.transpose(0, 1).contiguous().view(seq, bsz, dim).transpose(0, 1)
        if self.has_out_proj:
            attn = self.out_proj(attn)
        return attn


class TransformerLayer(nn.Module):
    def __init__(self, dim=512, heads=2, girth=1, dropout=0.1,
                 single_matrix=True,
                 has_out_proj=True):
        super().__init__()
        self.attention = MultiHeadAttention(dim, n_heads=heads,
                                            single_matrix=single_matrix,
                                            has_out_proj=has_out_proj)
        self.activation = nn.ReLU()
        self.linear1 = nn.Linear(dim, dim * girth)
        self.linear2 = nn.Linear(dim * girth, dim)
        self.norm1 = nn.LayerNorm(dim)
        self.norm2 = nn.LayerNorm(dim)
        self.dropout = nn.Dropout(dropout)
        self.dropout1 = nn.Dropout(dropout)
        self.dropout2 = nn.Dropout(dropout)

    def forward(self, x):
        # (batch * dims * sequence) => (batch * sequence * dims)
        x = x.permute(0, 2, 1).contiguous()
        attn = self.attention(x)
        x = x + self.dropout1(attn)
        x = self.norm1(x)
        x2 = self.linear2(self.dropout(self.activation(self.linear1(x))))
        x = x + self.dropout2(x2)
        x = self.norm2(x)
        # (batch * sequence * dims) => (batch * dims * sequence)
        x = x.permute(0, 2, 1).contiguous()
        return x


def load_pre_trained_transformer(original_decoder, new_decoder):
    """Load original PyTorch TransformerEncoder weights
    into a simplified transformer layer for transfer learning
    """
    assert len(original_decoder) == len(new_decoder)
    # assume only equal dims everywhere
    dim = new_decoder[0].linear1.in_features
    for i in range(len(original_decoder)):
        # load attention
        if hasattr(new_decoder[i].attention, 'out_proj'):
            print(f'Loading out proj layer {i}')
            new_decoder[i].attention.out_proj.load_state_dict(original_decoder[i].self_attn.out_proj.state_dict())
        in_w = original_decoder[i].self_attn.state_dict()['in_proj_weight']
        in_b = original_decoder[i].self_attn.state_dict()['in_proj_bias']
        if hasattr(new_decoder[i].attention, 'QKV'):  # fused matrix
            print(f'Loading fused matrix {i}')
            new_decoder[i].attention.QKV.weight = nn.Parameter(in_w.clone().detach())
            new_decoder[i].attention.QKV.bias = nn.Parameter(in_b.clone().detach())
        else:  # separate matrices
            # keep Q K V as separate matrices; PyTorch stores in_proj rows in Q, K, V order
            # https://github.com/zhangguanheng66/pytorch/blob/6c743c7721251ca9b5046fc56a071bc1f36916be/torch/nn/functional.py#L3182
            attn = new_decoder[i].attention
            attn.Q.weight = nn.Parameter(in_w[:dim, :].clone().detach())
            attn.Q.bias = nn.Parameter(in_b[:dim].clone().detach())
            attn.K.weight = nn.Parameter(in_w[dim:dim * 2, :].clone().detach())
            attn.K.bias = nn.Parameter(in_b[dim:dim * 2].clone().detach())
            attn.V.weight = nn.Parameter(in_w[dim * 2:, :].clone().detach())
            attn.V.bias = nn.Parameter(in_b[dim * 2:].clone().detach())
        # load projection layers and layer norms
        print(f'Loading linear1 linear2 norm1 norm2 {i}')
        new_decoder[i].linear1.load_state_dict(original_decoder[i].linear1.state_dict())
        new_decoder[i].linear2.load_state_dict(original_decoder[i].linear2.state_dict())
        new_decoder[i].norm1.load_state_dict(original_decoder[i].norm1.state_dict())
        new_decoder[i].norm2.load_state_dict(original_decoder[i].norm2.state_dict())
    return new_decoder
```
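As a sanity check for the hand-written attention (a sketch, not part of the original snippet), the same computation can be compared against `nn.MultiheadAttention` using that module's own fused `in_proj_weight`/`in_proj_bias` and `out_proj`. `manual_mha` is a hypothetical helper that follows the head-splitting logic of the snippet with `single_matrix=True` and computes scores as `q @ k^T` with a softmax over keys; if the Q/K/V order or the score orientation were wrong, the outputs would not match.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def manual_mha(x: torch.Tensor, mha: nn.MultiheadAttention) -> torch.Tensor:
    """Self-attention with plain tensor ops; x is (batch, seq, dim)."""
    bsz, seq, dim = x.shape
    n_heads = mha.num_heads
    head_dim = dim // n_heads
    # Fused projection: rows of in_proj_weight are stored in Q, K, V order
    q, k, v = F.linear(x, mha.in_proj_weight, mha.in_proj_bias).chunk(3, dim=-1)

    def split_heads(t):
        # (bs, seq, hid) -> (seq, bs * head, hid / head) -> (bs * head, seq, hid / head)
        return t.transpose(0, 1).contiguous().view(seq, bsz * n_heads, head_dim).transpose(0, 1)

    q, k, v = split_heads(q), split_heads(k), split_heads(v)
    # Queries times keys, softmax over the key axis
    alpha = F.softmax(q @ k.transpose(1, 2) / head_dim ** 0.5, dim=-1)
    attn = alpha @ v  # (bs * head, seq, hid / head)
    attn = attn.transpose(0, 1).contiguous().view(seq, bsz, dim).transpose(0, 1)
    return mha.out_proj(attn)


torch.manual_seed(0)
mha = nn.MultiheadAttention(embed_dim=8, num_heads=2).eval()  # dropout defaults to 0.0
x = torch.randn(3, 5, 8)  # (batch, seq, dim)
with torch.no_grad():
    xt = x.transpose(0, 1)  # nn.MultiheadAttention expects (seq, batch, dim) by default
    reference, _ = mha(xt, xt, xt)
    reference = reference.transpose(0, 1)  # back to (batch, seq, dim)
    ours = manual_mha(x, mha)
print(torch.allclose(ours, reference, atol=1e-5))  # expected: True
```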

@raghuramank100

> `nn.MHA` within `nn.Transformer` is not supported for quantization now.

Is there a plan to support this feature? I am getting the same error when trying to use a quantized model with nn.Transformer / MultiheadAttention (PyTorch 1.5.0 or Nightly build).

hi folks, we are working on improving quantization support for transformers and multihead_attention. We don't have a timeline to share, but hopefully it should be months (not weeks / years).

Hi,

If this is any help, I tested my snippet above in two scenarios:

Hi @snakers4, I'm new to PyTorch and trying to quantize ktrapeznikov/albert-xlarge-v2-squad-v2 from Hugging Face, and I'm getting the same error. Could you help me?

Hi, if anyone is facing a similar issue, I just stumbled upon a comment in #2542 that solved my error. Thanks!

> I'm new to PyTorch and trying to quantize ktrapeznikov/albert-xlarge-v2-squad-v2 from Hugging Face, and I'm getting the same error. Could you help me?

When I last checked, the standard transformer layers were not quantizable; maybe that has changed. As for pre-trained BERT models, I did not really try playing with them; we just implemented our own layer for our purposes.

> Hi, if anyone is facing a similar issue, I just stumbled upon a comment in #2542 that solved my error. Thanks!

Did you check under the hood? Maybe this particular model uses `torch.nn.Bilinear` instead of `Linear` layers?

This relates to the MHA, resolved by this PR: https://github.com/pytorch/pytorch/pull/49866


@snakers4 No, I haven't checked it yet, will do. But one thing I noticed was that inference with the quantized model with `torch.nn.Bilinear` took the same time as with the original one. Also, the size of the quantized model was the same as the original one, so I guess it didn't actually serve the purpose of quantizing.

@z-a-f I shall check that too, thanks!

> @snakers4 No, I haven't checked it yet, will do. But one thing I noticed was that inference with the quantized model with `torch.nn.Bilinear` took the same time as with the original one. Also, the size of the quantized model was the same as the original one, so I guess it didn't actually serve the purpose of quantizing.

Looks like it did not quantize anything. Make sure to print out the model and check what happened inside.

@snakers4, I just checked by printing the model out. The quantized model with `torch.nn.Bilinear` is the same as the original one, while the other quantized models get `DynamicQuantizedLinear` layers after quantization.
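Printing the model is the quickest way to see what `quantize_dynamic` actually swapped; the sketch below does the same check programmatically on a hypothetical toy model, `PairScorer`, with one `nn.Linear` and one `nn.Bilinear`. With the tutorial's `{nn.Linear}` spec, only the `Linear` is replaced; `nn.Bilinear` is not a `Linear` subclass and, as far as I know, has no dynamic quantized counterpart, so it stays in float, which would match the unchanged size and speed reported above. The `"quantized" in type(m).__module__` test is a heuristic based on where PyTorch keeps its quantized module classes.

```python
import torch
import torch.nn as nn


class PairScorer(nn.Module):
    """Hypothetical toy model mixing an nn.Linear and an nn.Bilinear layer."""

    def __init__(self, dim: int = 8):
        super().__init__()
        self.proj = nn.Linear(dim, dim)
        self.bilinear = nn.Bilinear(dim, dim, 2)

    def forward(self, a, b):
        return self.bilinear(self.proj(a), self.proj(b))


def quantized_children(model: nn.Module) -> dict:
    # Quantized module classes live under a "...quantized..." Python module path
    return {name: "quantized" in type(m).__module__ for name, m in model.named_children()}


model = PairScorer().eval()
qmodel = torch.quantization.quantize_dynamic(model, {nn.Linear}, dtype=torch.qint8)
print(qmodel)  # proj is shown as DynamicQuantizedLinear, bilinear is still a float Bilinear
print(quantized_children(qmodel))  # {'proj': True, 'bilinear': False}
out = qmodel(torch.randn(4, 8), torch.randn(4, 8))  # still runs; output shape (4, 2)
```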

This should be resolved in https://github.com/pytorch/pytorch/pull/49866

🐛 Bug

https://github.com/pytorch/pytorch/issues/32590#issuecomment-579261982

TLDR

To Reproduce

Steps to reproduce the behavior:

I just use the same invocation as in the tutorial. It should work, because your transformer and Hugging Face models should consist mostly of `Linear` layers!

Expected behavior

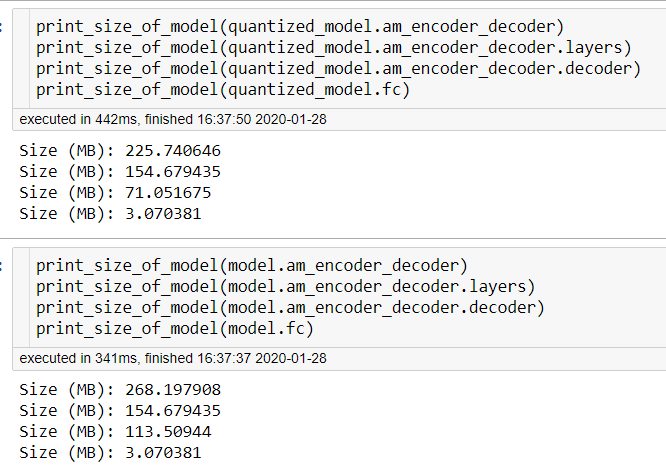

Actual: the model loses `weight` (this is what happens).

Expected: you are able to run it.
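A small sketch of what "loses `weight`" most likely refers to (an assumption, since the traceback is not included here): after `quantize_dynamic`, each swapped `nn.Linear` exposes `weight` as a method returning the packed int8 tensor, not as a `Parameter`. Any code that reads `module.weight` as a tensor then receives a bound method instead; presumably this is what trips `nn.MultiheadAttention` when its `out_proj` gets swapped, because its forward passes `self.out_proj.weight` to the functional attention call.

```python
import torch
import torch.nn as nn

float_model = nn.Sequential(nn.Linear(4, 4))
# quantize_dynamic returns a quantized copy; float_model itself is left untouched
qmodel = torch.quantization.quantize_dynamic(float_model, {nn.Linear}, dtype=torch.qint8)
layer = qmodel[0]

print(isinstance(float_model[0].weight, torch.Tensor))  # True: a float Parameter
print(isinstance(layer.weight, torch.Tensor))  # False: weight is now a bound method
w = layer.weight()  # calling it returns the int8 quantized weight tensor
print(w.is_quantized, w.dtype)  # True torch.qint8
```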

Environment

- How you installed PyTorch (`conda`, `pip`, source): I use this image: `docker pull pytorch/pytorch:1.4-cuda10.1-cudnn7-devel`
- `cuda10.1-cudnn7-devel`
- `nn.transformers`

Additional context

When I run the model, I get this bug (I run on CPU)

Also, this may be relevant: my `forward` looks like this. The problem seems to be with this function.

Do I understand correctly that it should go away if this function is replaced by an `nn.Module` version? Or is there any other way to apply a quick monkey patch until 1.5 is released?

cc @jerryzh168 @jianyuh @dzhulgakov @raghuramank100 @jamesr66a