Same issue with maskrcnn_resnet50_fpn.

Any ideas?

dc986 opened this issue 3 years ago.

Are you using the debug versions of libtorch and torchvision?

I've tried both the debug and the release builds, but neither works.

Try passing in a list of image tensors (C x H x W) instead of a single tensor that contains a batch of images:

```cpp
// One C x H x W tensor per image.
auto imageList = c10::List<torch::Tensor>({imageTensors...});

std::vector<torch::jit::IValue> inputs;
inputs.emplace_back(imageList);

torch::jit::IValue output = module.forward(inputs);
```

For reference, this is what I use to convert a `cv::Mat` to a torch tensor:

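```cpp
#include <opencv2/imgproc.hpp>
#include <torch/torch.h>

torch::Tensor createImageTensor(const cv::Mat &image)
{
    // OpenCV stores images as BGR; convert to RGB.
    cv::Mat rgbImage;
    cv::cvtColor(image, rgbImage, cv::COLOR_BGR2RGB);

    // Wrap the 8-bit H x W x 3 buffer without copying it.
    torch::Tensor tensorImage = torch::from_blob(
        rgbImage.data, {rgbImage.rows, rgbImage.cols, 3},
        torch::TensorOptions().dtype(torch::kByte).requires_grad(false));

    // Converting to float copies the data (so rgbImage may go out of scope
    // afterwards), then scale the values to [0, 1].
    tensorImage = tensorImage.to(torch::kFloat);
    tensorImage /= 255.0;

    // H x W x C -> C x H x W.
    tensorImage = tensorImage.transpose(0, 1).transpose(0, 2).contiguous();

    return tensorImage;
}
```

Thanks for your answer, this is now my code: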

```cpp
torch::Tensor t1 = createImageTensor(image);
torch::Tensor t2 = createImageTensor(image);

auto imageList = c10::List<torch::Tensor>({ t1, t2 });

std::vector<torch::jit::IValue> input_to_net;
input_to_net.emplace_back(imageList);

auto output = module.forward(input_to_net);
```

Unfortunately, it triggers the assertion again.

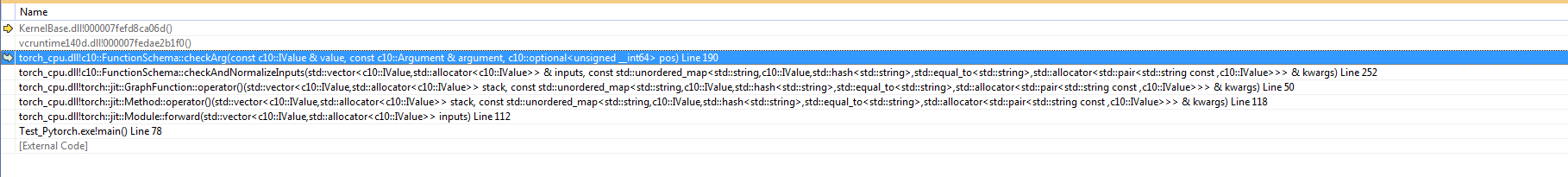

Looking at the call stack where the exception is thrown:

`torch_cpu.dll!torch::jit::Module::forward(std::vector<c10::IValue,std::allocator<c10::IValue>> inputs)` in file `libtorch-win-shared-with-deps-debug-1.7.1+cpu\libtorch\include\torch\csrc\jit\api\module.h`, line 112:

```cpp
IValue forward(std::vector<IValue> inputs) {
  return get_method("forward")(std::move(inputs));
}
```

At this point, `input.size() = 0`.

Can you verify that you can run the tracing test correctly?

I've run the tracing test. The output is the same as what I got with my image.

I also downloaded the libtorch nightly and torchvision master and ran the same tracing test again.

This time the error occurs earlier: when I try to load the model, I get the following errors:

Did you modify the source code of the test? It seems to be trying to load a file called `fasterrcnn_resnet50_fpn_1602_nightly.pth`. Files with the `.pth` extension are usually not scripted/traced models.

Yes, sorry, I've tried both.

The `.pt` extension gives the same output.

You shouldn't have to modify the source code. The `.pt` file is generated by the Python script in the tracing directory, so make sure you run that script first.

I've compiled the test using CMake. It runs, the model loads correctly, and the forward pass works without problems.

When I use the model traced by the test in my Visual Studio project, I'm back to the original issue: inference does not work. I've set Include Directories, Library Directories, and Linker > Input. In the post-build event, the torch and torchvision DLLs are copied to the folder containing the executable.

Can you share the Python code you use to generate the TorchScript file?

This is my code:

```python
import cv2
import os, sys, time, datetime, random
from PIL import Image
from matplotlib import pyplot as plt

import torch
import torchvision

model = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=False)
model.eval()

# torch.jit.script compiles the model to TorchScript (scripting, not tracing).
traced_model = torch.jit.script(model)
traced_model.save("my_fasterrcnn_resnet50_fpn.pt")
```

That looks fine. If you can't run your `my_fasterrcnn_resnet50_fpn.pt` correctly in the tracing test, I'm out of ideas :/

I may have found a solution by accident. I ran into a problem similar to yours.

Original code:

```cpp
auto InputTensor = torch::from_blob(mGlobalCam_P.data, {1, mGlobalCam_P.rows, mGlobalCam_P.cols, 3 }, torch::kFloat);
InputTensor = InputTensor.permute({ 0,3,1,2 });
```

Working code:

```cpp
auto InputTensor = torch::from_blob(mGlobalCam_P.data, {1, mGlobalCam_P.rows, mGlobalCam_P.cols, 3 }, torch::kByte);
InputTensor = InputTensor.permute({ 0,3,1,2 }).to(torch::kFloat);
```

I don't know why, but it seems that we should use `kByte` in `from_blob`.

Let me know whether this works for you.

🐛 Bug

`module.forward()` triggers a debug assertion:

File: minkernel\crts\ucrt\src\appcrt\heap\debug_heap.cpp Line: 966

Expression: __acrt_first_block == header

To Reproduce

Loaded the scripted model with

Loaded the image into a tensor with

Both the model and the tensor seem to be loaded correctly, but

does not work.

The call stack is:

Environment

- OS: Microsoft Windows 7 Professional
- Language: C++
- CMake version: 3.17.1
- Python version: 3.7 (64-bit runtime)
- Is CUDA available: N/A
- numpy==1.18.5
- torch==1.7.1+cpu
- torchaudio==0.7.2
- torchvision==nightly

Additional context

cc @vfdev-5