Thanks for the note! The 2048 was an issue where I forgot to push the updated figures to the server, and the 8k token one was an absolute oversight. You are right, the model supports more tokens. 131k actually. I updated it via #389.

d-kleine closed this 1 month ago

Thanks!

@rasbt Could you please also update these figures?

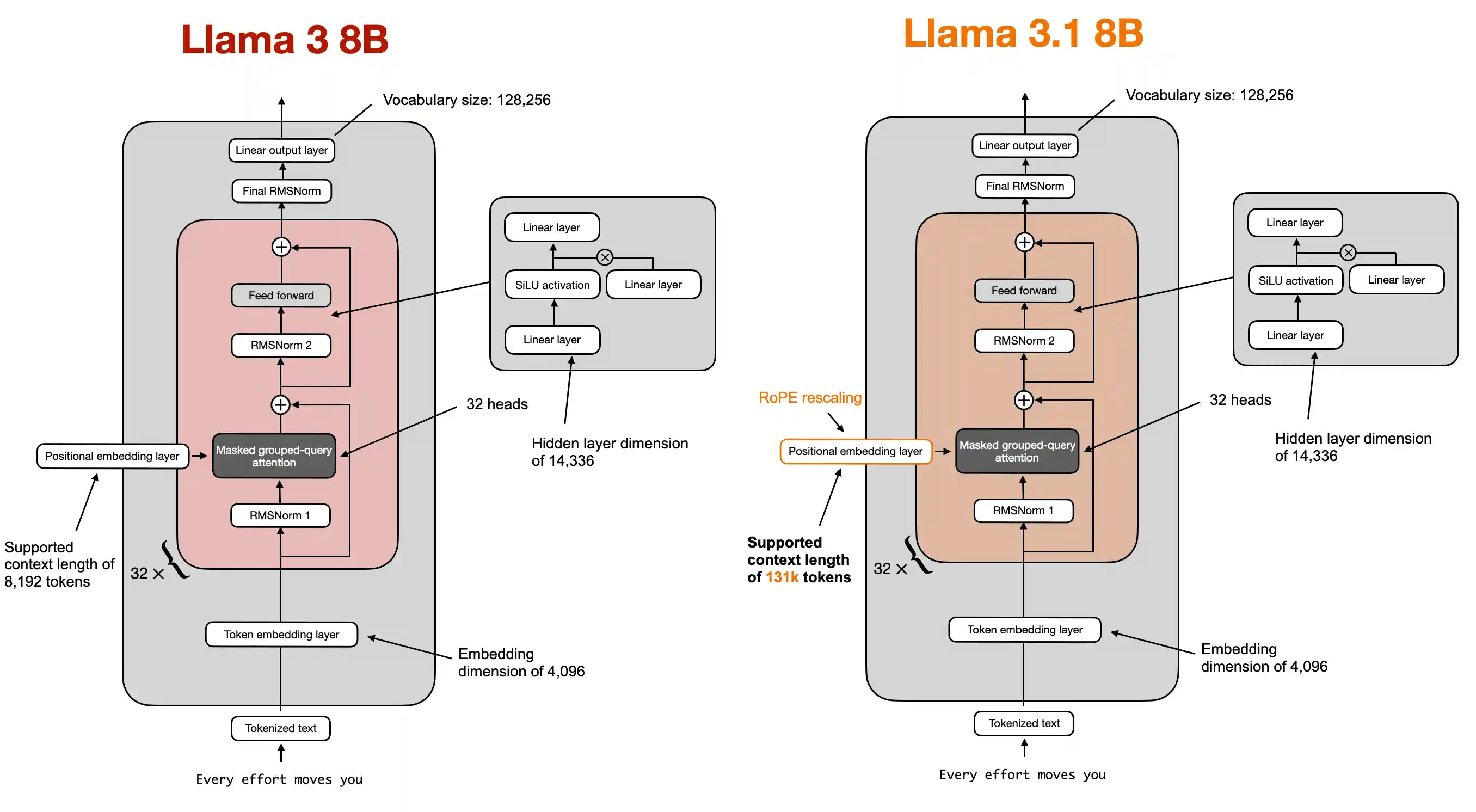

On the right, the context length for Llama 3.1 (128k) doesn't match the one on the left side:

Bug description

Hi Sebastian,

Regarding converting-llama2-to-llama3.ipynb, I found an inconsistency in the figure for the Llama 3.2 1B model:

I believe the figure for the Llama 3.2 1B model should say "Embedding dimension of 2048".

Regarding the figure and the code, isn't the context length for both Llama 3.1 8B and Llama 3.2 1B also much larger?

https://huggingface.co/meta-llama/Llama-3.2-1B -> A large context length of 128K tokens (vs original 8K)
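
For reference, the "128K" on the model card and the "131k" mentioned in this thread are the same number: 128 × 1024 = 131,072 tokens. Below is a minimal sketch of that arithmetic. The dictionary and its key names (`context_length`, `emb_dim`) are illustrative assumptions, not copied from the notebook.

```python
# Illustrative values only; the dict and key names are assumptions,
# not taken verbatim from converting-llama2-to-llama3.ipynb.
K = 1024  # "128K" on the model card means 128 * 1024 tokens

llama32_1b = {
    "context_length": 128 * K,  # 131_072 tokens, often rounded to "131k"
    "emb_dim": 2048,            # embedding dimension discussed for the figure
}

original_context = 8 * K  # the "8K" value: 8_192 tokens

print(llama32_1b["context_length"])                      # 131072
print(llama32_1b["context_length"] // original_context)  # 16
```

So a figure labeled "128k" and a config value of 131,072 describe the same context length, which is 16 times the original 8K.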